(AI generated content/Shutterstock)

The meteoric rise of DeepSeek-R1 has put the spotlight on an emerging type of AI model called a reasoning model. As generative AI applications move beyond conversational interfaces, reasoning models are likely to grow in capability and use, which is why they should be on your AI radar.

A reasoning model is a type of large language model (LLM) that can perform complex reasoning tasks. Instead of quickly generating output based solely on a statistical guess of what the next word in an answer should be, as an LLM typically does, a reasoning model takes time to break a question down into individual steps and work through a “chain of thought” process to come up with a more accurate answer. In that respect, a reasoning model is much more human-like in its approach.

OpenAI debuted its first reasoning models, dubbed o1, in September 2024. In a blog post, the company explained that it used reinforcement learning (RL) techniques to train the reasoning model to handle complex tasks in mathematics, science, and coding. The model performed at the level of PhD students in physics, chemistry, and biology, while exceeding the ability of PhD students in math and coding.

According to OpenAI, reasoning models work through problems more like a human would than earlier language models did.

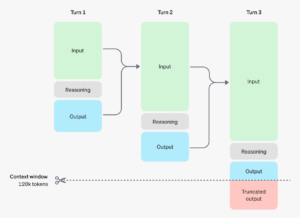

Reasoning models use a chain-of-thought process that involves additional tokens (Image source: OpenAI)

“Similar to how a human may think for a long time before responding to a difficult question, o1 uses a chain of thought when attempting to solve a problem,” OpenAI said in a technical blog post. “Through reinforcement learning, o1 learns to hone its chain of thought and refine the strategies it uses. It learns to recognize and correct its mistakes. It learns to break down tricky steps into simpler ones. It learns to try a different approach when the current one isn’t working. This process dramatically improves the model’s ability to reason.”

Kush Varshney, an IBM Fellow, says reasoning models can check themselves for correctness, which he says represents a type of “meta cognition” that didn’t previously exist in AI. “We are now starting to put wisdom into these models, and that’s a huge step,” Varshney told an IBM tech reporter in a January 27 blog post.

That level of cognitive power comes at a cost, particularly at runtime. OpenAI, for instance, charges 20x more for o1-mini than for GPT-4o mini. And while its o3-mini is 63% cheaper than o1-mini per token, it’s still significantly more expensive than GPT-4o mini, reflecting the greater number of tokens, dubbed reasoning tokens, that are used during the “chain of thought” reasoning process.

That’s one of the reasons why the introduction of DeepSeek-R1 was such a breakthrough: It has dramatically reduced computational requirements. The company behind DeepSeek claims that it trained its V3 model on a small cluster of older GPUs at a cost of only $5.5 million, much less than the hundreds of millions it reportedly cost to train OpenAI’s latest GPT-4 model. And at $0.55 per million input tokens, DeepSeek-R1 is about half the cost of OpenAI o3-mini.
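The pricing ratios quoted above can be worked through in a few lines. The sketch below normalizes GPT-4o mini to one price unit and applies the 20x and 63% figures from the text. The `request_cost` helper and its example prices are hypothetical, and it assumes reasoning tokens are billed at the same rate as ordinary output tokens.

```python
# Relative per-token prices, using only the ratios quoted in the article.
GPT_4O_MINI = 1.0  # normalized price unit (not a real dollar figure)


def relative_price(base, multiplier, discount=0.0):
    """Price relative to `base` after a multiplier and a fractional discount."""
    return base * multiplier * (1.0 - discount)


o1_mini = relative_price(GPT_4O_MINI, 20)                 # "20x more"
o3_mini = relative_price(GPT_4O_MINI, 20, discount=0.63)  # "63% cheaper than o1-mini"

# "About half the cost of o3-mini" implies an o3-mini input price near 2 x $0.55.
DEEPSEEK_R1_INPUT_PER_M = 0.55
implied_o3_mini_input_per_m = 2 * DEEPSEEK_R1_INPUT_PER_M


def request_cost(input_tokens, answer_tokens, reasoning_tokens,
                 in_price_per_m, out_price_per_m):
    """Dollar cost of one request (hypothetical helper).

    Assumption: reasoning tokens are billed like visible output tokens.
    """
    billed_output = answer_tokens + reasoning_tokens
    return (input_tokens * in_price_per_m
            + billed_output * out_price_per_m) / 1_000_000


if __name__ == "__main__":
    print(f"o3-mini vs GPT-4o mini: {o3_mini:.1f}x")
    # Hypothetical prices: $1 per million input tokens, $4 per million output tokens.
    print(request_cost(1_000, 200, 800, in_price_per_m=1.0, out_price_per_m=4.0))
```

Under these ratios, o3-mini still works out to roughly 7.4 times the per-token price of GPT-4o mini, and the hidden reasoning tokens add to the bill on top of the visible answer.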

The surprising rise of DeepSeek-R1, which scored comparably to OpenAI’s o1 reasoning model on math, coding, and science tasks, is forcing AI researchers to rethink their approach to developing and scaling AI. Instead of racing to build ever-bigger LLMs that sport trillions of parameters and are trained on huge amounts of data culled from a variety of sources, the success we’re witnessing with reasoning models like DeepSeek-R1 suggests that having a larger number of smaller models trained using a mixture of experts (MoE) architecture may be a better approach.
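For readers unfamiliar with the mixture-of-experts idea, the toy sketch below shows generic top-k routing: a gate scores every expert, only the best few run, and their outputs are blended. It is an illustration of the general technique under simplified assumptions, not DeepSeek’s implementation. The function names, the scalar “experts,” and the gate scores are all hypothetical.

```python
import math


def softmax(scores):
    """Turn raw gate scores into probabilities."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def route_top_k(gate_scores, k=2):
    """Return (expert_index, weight) pairs for the k highest-scoring experts.

    Weights are renormalized so the selected experts sum to 1.
    """
    probs = softmax(gate_scores)
    top = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:k]
    norm = sum(probs[i] for i in top)
    return [(i, probs[i] / norm) for i in top]


def moe_forward(x, experts, gate_scores, k=2):
    """Run only the selected experts and blend their outputs."""
    return sum(w * experts[i](x) for i, w in route_top_k(gate_scores, k))


# Hypothetical "experts": in a real model these would be neural sub-networks.
experts = [lambda x: x + 1, lambda x: x * 2, lambda x: x - 3, lambda x: x ** 2]
gate_scores = [0.1, 2.0, -1.0, 2.0]  # made-up scores for one input

if __name__ == "__main__":
    print(route_top_k(gate_scores, k=2))
    print(moe_forward(3.0, experts, gate_scores, k=2))
```

The point of the design is that only k of the experts do any work for a given input, so compute per token stays well below what the total parameter count would suggest.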

One of the AI leaders who’s responding to the rapid changes is Ali Ghodsi. In a recent interview posted to YouTube, the Databricks CEO discussed the significance of the rise of reasoning models and DeepSeek.

“The game has clearly changed. Even in the big labs, they’re focusing all their efforts on these reasoning models,” Ghodsi says in the interview. “So not [focusing on] scaling laws, not training gigantic models. They’re actually putting their money on a lot of reasoning.”

The rise of DeepSeek and reasoning models will also have an impact on processor demand. As Ghodsi notes, if the market shifts away from training ever-bigger LLMs that are generalist jacks of all trades and moves toward training smaller reasoning models that are distilled from the massive LLMs and enhanced using RL techniques to be experts in specialized fields, that will inevitably affect the type of hardware that’s needed.

“Reasoning just requires different kinds of chips,” Ghodsi says in the YouTube video. “It doesn’t require these networks where you have these GPUs interconnected. You can have a data center here, a data center there. You can have some GPUs over there. The game has shifted.”

GPU maker Nvidia recognizes the impact this shift could have on its business. In a blog post, the company touts the inference performance of its 50-series RTX line of PC-based GPUs (based on the Blackwell GPUs) for running some of the smaller student models distilled from the larger 671-billion-parameter DeepSeek-R1 model.

“High-performance RTX GPUs make AI capabilities always available–even without an internet connection–and offer low latency and increased privacy because users don’t have to upload sensitive materials or expose their queries to an online service,” Nvidia’s Annamalai Chockalingam writes in a blog post last week.

Reasoning models aren’t the only game in town, of course. There’s still considerable investment going into building retrieval-augmented generation (RAG) pipelines to present LLMs with data that reflects the right context. Many organizations are working to incorporate graph databases as a source of knowledge that can be injected into the LLMs, what’s known as a GraphRAG approach. Many organizations are also moving forward with plans to fine-tune and train open source models using their own data.
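The basic RAG pattern mentioned above, retrieving relevant text and placing it in the prompt, can be sketched in a few lines. This is a deliberately minimal illustration: production pipelines typically rank documents with embeddings stored in a vector or graph database, whereas this sketch uses simple word overlap as a stand-in. The function names and sample documents are hypothetical.

```python
import re


def tokenize(text):
    """Lowercase a string and split it into a set of words."""
    return set(re.findall(r"\w+", text.lower()))


def retrieve(query, documents, top_n=1):
    """Rank documents by word overlap with the query (stand-in for embeddings)."""
    query_words = tokenize(query)
    ranked = sorted(documents,
                    key=lambda doc: len(query_words & tokenize(doc)),
                    reverse=True)
    return ranked[:top_n]


def build_prompt(query, documents, top_n=1):
    """Inject the retrieved context ahead of the user's question."""
    context = "\n".join(retrieve(query, documents, top_n))
    return f"Context:\n{context}\n\nQuestion: {query}"


# Hypothetical company documents.
docs = [
    "Invoices are archived after 90 days.",
    "Support tickets are answered within one business day.",
]

if __name__ == "__main__":
    # The resulting prompt would then be sent to an LLM of your choice.
    print(build_prompt("When are invoices archived?", docs))
```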

But the sudden appearance of reasoning models on the AI scene definitely shakes things up. As the pace of AI evolution continues to accelerate, it seems likely that these sorts of surprises and shocks will become more frequent. That may make for a bumpy ride, but it ultimately will create AI that’s more capable and useful, and that’s a good thing for us all.
