GPUs have an insatiable appetite for data, and keeping these processors fed can be a challenge. That’s one of the big reasons WEKA launched a new line of data storage appliances last week that can deliver up to 18 million IOPS and serve 720 GB of data per second.

The latest GPUs from Nvidia can ingest data from memory at incredible speeds, up to 2 TB per second for the A100 and 3.35 TB per second for the H100. This sort of memory bandwidth, using the latest HBM3 standard, is needed to train the largest large language models (LLMs) and run other scientific workloads.

Keeping the PCI buses saturated with data is critical for utilizing the full capacity of the GPUs, and that requires a data storage infrastructure that’s capable of keeping up. The folks at WEKA say they’ve done that with the new WEKApod line of data storage appliances the company launched last week.

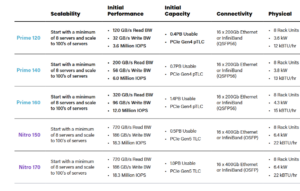

The company is offering two versions of the WEKApod, the Prime and the Nitro. Both families start with clusters of eight rack-based servers and around half a petabyte of data, and can scale to support hundreds of servers and multiple petabytes of data.

The Prime line of WEKApods is based on PCIe Gen4 technology and 200Gb Ethernet or InfiniBand connectors. It starts out at 3.6 million IOPS and 120 GB per second of read throughput, and goes up to 12 million IOPS and 320 GB per second of read throughput.

The Nitro line is based on PCIe Gen5 technology and 400Gb Ethernet or InfiniBand connectors. Both the Nitro 150 and the Nitro 180 are rated at 18 million IOPS and can hit data read speeds of 720 GB per second and data write speeds of 186 GB per second.
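To put the quoted throughput figures in perspective, here is a minimal Python sketch that estimates how long one full pass over a half-petabyte dataset would take at each rated read speed, along with the Nitro read-to-write ratio. The function names are illustrative, and the calculation assumes decimal units (1 PB = 1,000,000 GB) and sustained peak throughput with no protocol or network overhead, so real-world numbers would be slower.

```python
# Rated read throughput in GB/s, as quoted in the article.
READ_GBPS = {"Prime (entry)": 120, "Prime (max)": 320, "Nitro": 720}
NITRO_WRITE_GBPS = 186  # rated Nitro write throughput in GB/s

GB_PER_PB = 1_000_000  # assumption: decimal units


def seconds_to_read(dataset_pb: float, throughput_gbps: float) -> float:
    """Idealized time to stream a dataset once at a sustained read rate."""
    return dataset_pb * GB_PER_PB / throughput_gbps


def read_write_ratio(read_gbps: float, write_gbps: float) -> float:
    """How many times faster the rated read speed is than the write speed."""
    return read_gbps / write_gbps


if __name__ == "__main__":
    for name, gbps in READ_GBPS.items():
        minutes = seconds_to_read(0.5, gbps) / 60
        print(f"{name}: about {minutes:.1f} minutes to read 0.5 PB")
    ratio = read_write_ratio(READ_GBPS["Nitro"], NITRO_WRITE_GBPS)
    print(f"Nitro read/write ratio: {ratio:.2f}")
```

Under these assumptions, the rated Nitro read speed is a little under four times its rated write speed.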

Enterprise AI workloads require high performance for both reading and writing of data, says Colin Gallagher, vice president of product marketing for WEKA.

“Several of our competitors have lately been claiming to be the best data infrastructure for AI,” Gallagher says in a video on the WEKA website. “But to do so they selectively quote a single number, often one for reading data, and leave others out. For modern AI workloads, one performance data number is misleading.”

That’s because, in AI data pipelines, there’s a necessary interplay between reading and writing of data as the AI workloads change, he says.

“Initially, data is ingested from various sources for training, loaded to memory, preprocessed and written back out,” Gallagher says. “During training, it is continually read to update model parameters, checkpoints of various sizes are saved, and results are written for evaluation. After training, the model generates outputs which are written for further analysis or use.”

The WEKApods utilize WekaFS, the company’s high-speed parallel file system, which supports a variety of protocols. The appliances support GPUDirect Storage (GDS), an RDMA-based protocol developed by Nvidia, to improve bandwidth and reduce latency between the server NIC and GPU memory.

WekaFS has full support for GDS and has been validated by Nvidia, including a reference architecture, WEKA says. WEKApod Nitro is also certified for Nvidia DGX SuperPOD.

WEKA says its new appliances include an array of enterprise features, such as support for multiple protocols (FS, SMB, S3, POSIX, GDS, and CSI); encryption; backup/recovery; snapshotting; and data protection mechanisms.

For data protection specifically, it says it uses a patented distributed data protection coding scheme to guard against data loss caused by server failures. The company says it delivers the scalability and durability of erasure coding, “but without the performance penalty.”
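For readers unfamiliar with erasure coding, the general idea can be sketched in Python with the simplest possible case: a single XOR parity shard that lets a cluster rebuild one lost data shard. This is not WEKA's patented scheme, whose details the article does not describe. The function names are hypothetical, and the sketch assumes all shards are the same length.

```python
from functools import reduce
from typing import List, Optional


def xor_bytes(a: bytes, b: bytes) -> bytes:
    """Byte-wise XOR of two equal-length byte strings."""
    return bytes(x ^ y for x, y in zip(a, b))


def make_parity(shards: List[bytes]) -> bytes:
    """Compute one parity shard as the XOR of all data shards."""
    return reduce(xor_bytes, shards)


def rebuild(shards: List[Optional[bytes]], parity: bytes) -> List[bytes]:
    """Restore at most one missing shard (marked None) using the parity."""
    missing = [i for i, s in enumerate(shards) if s is None]
    if len(missing) > 1:
        raise ValueError("single parity can rebuild at most one lost shard")
    restored = list(shards)
    if missing:
        survivors = [s for s in shards if s is not None]
        # XOR of the parity with every surviving shard yields the lost shard.
        restored[missing[0]] = reduce(xor_bytes, survivors, parity)
    return restored


if __name__ == "__main__":
    data = [b"AAAA", b"BBBB", b"CCCC"]  # one shard per server
    parity = make_parity(data)
    after_failure = [data[0], None, data[2]]  # server 2 is lost
    print(rebuild(after_failure, parity) == data)
```

Production systems typically use codes that tolerate multiple simultaneous failures, such as Reed-Solomon, and the extra computation and rebuild traffic those codes require is the kind of performance penalty the company says its scheme avoids.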

“Accelerated adoption of generative AI applications and multi-modal retrieval-augmented generation has permeated the enterprise faster than anyone could have predicted, driving the need for affordable, highly-performant and flexible data infrastructure solutions that deliver extremely low latency, drastically reduce the cost per tokens generated and can scale to meet the current and future needs of organizations as their AI initiatives evolve,” WEKA Chief Product Officer Nilesh Patel said in a press release. “WEKApod Nitro and WEKApod Prime offer unparalleled flexibility and choice while delivering exceptional performance, energy efficiency, and value to accelerate their AI projects anywhere and everywhere they need them to run.”

Related Items:

Legacy Data Architectures Holding GenAI Back, WEKA Report Finds

Hyperion To Provide a Peek at Storage, File System Usage with Global Site Survey

Object and File Storage Have Merged, But Product Differences Remain, Gartner Says