Posted by Thomas Ezan, Sr. Developer Relations Engineer

Hundreds of developers across the globe are harnessing the power of the Gemini 1.5 Pro and Gemini 1.5 Flash models to infuse advanced generative AI features into their applications. Android developers are no exception, and with the upcoming launch of the stable version of Vertex AI in Firebase in a few weeks (available in Beta since Google I/O), it's the perfect time to explore how your app can benefit from it. We just published a codelab to help you get started.

Let's deep dive into some advanced capabilities of the Gemini API that go beyond simple text prompting, and discover the exciting use cases they can unlock in your Android app.

Shaping AI behavior with system instructions

System instructions serve as a "preamble" that you incorporate before the user prompt. This lets you shape the model's behavior to align with your specific requirements and scenarios. You set the instructions when you initialize the model, and those instructions then persist through all interactions with the model, across multiple user and model turns.

For example, you can use system instructions to:

- Define a persona or role for a chatbot (e.g., "explain like I'm 5")

- Specify the output format of the response (e.g., Markdown, YAML, etc.)

- Set the output style and tone (e.g., verbosity, formality, etc.)

- Define the goals or rules for the task (e.g., "return a code snippet without further explanation")

- Provide additional context for the prompt (e.g., a knowledge cutoff date)
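To illustrate how these categories combine, the sketch below assembles them into a single instruction string. It is a minimal, SDK-independent sketch: `SystemInstructionSpec` and `render()` are hypothetical helpers (not part of Vertex AI in Firebase), and the wording of each directive is only an example. The resulting string is what you would pass as the text of the model's system instruction.

```kotlin
// Hypothetical container for the kinds of guidance a system instruction can carry.
data class SystemInstructionSpec(
    val persona: String? = null,
    val outputFormat: String? = null,
    val styleAndTone: String? = null,
    val rules: List<String> = emptyList(),
    val context: String? = null,
)

// Joins the parts that are set into one instruction string, one directive per line.
fun SystemInstructionSpec.render(): String = buildList {
    persona?.let { add(it) }
    outputFormat?.let { add("Format every response as $it.") }
    styleAndTone?.let { add("Style and tone: $it.") }
    rules.forEach { add("Rule: $it") }
    context?.let { add("Context: $it") }
}.joinToString("\n")
```

Keeping each directive on its own line makes the instruction easy to review and to tweak one category at a time while you test prompts.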

To use system instructions in your Android app, pass them as a parameter when you initialize the model:

```kotlin
val generativeModel = Firebase.vertexAI.generativeModel(
    modelName = "gemini-1.5-flash",
    ...
    systemInstruction = content {
        text("You are a knowledgeable tutor. Answer the questions using the Socratic tutoring method.")
    }
)
```

You can learn more about system instructions in the Vertex AI in Firebase documentation.

You can also easily test your prompt with different system instructions in Vertex AI Studio, a Google Cloud console tool for rapidly prototyping and testing prompts with Gemini models.

When you're ready to go to production, it is recommended to target a specific version of the model (e.g., gemini-1.5-flash-002). However, as new model versions are released and previous ones are deprecated, you should use Firebase Remote Config so that you can update the version of the Gemini model without releasing a new version of your app.
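A minimal sketch of that pattern, kept free of Android dependencies. `ConfigSource` and the parameter key `gemini_model_name` are hypothetical; in an app, the wrapper could delegate to Firebase Remote Config's `getString()`, which returns an empty string when a key has no value. The pinned fallback keeps the app working before the first successful fetch.

```kotlin
// Hypothetical abstraction over a remote key/value source such as Firebase Remote Config.
fun interface ConfigSource {
    fun getString(key: String): String
}

const val MODEL_NAME_KEY = "gemini_model_name"       // hypothetical parameter key
const val DEFAULT_MODEL_NAME = "gemini-1.5-flash-002" // pinned version shipped with the app

// Uses the remotely configured model name if one is set, else the pinned default.
fun resolveModelName(config: ConfigSource): String =
    config.getString(MODEL_NAME_KEY).trim().ifEmpty { DEFAULT_MODEL_NAME }
```

The resolved name would then be passed as `modelName` when initializing the model.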

Beyond chatbots: leveraging generative AI for advanced use cases

While chatbots are a popular application of generative AI, the capabilities of the Gemini API go beyond conversational interfaces, and you can integrate multimodal GenAI-enabled features into various aspects of your Android app.

Many tasks that previously required human intervention (such as analyzing text, image, or video content, synthesizing data into a human-readable format, or engaging in a creative process to generate new content) can potentially be automated using GenAI.

Gemini JSON support

Android apps don't interface well with natural language outputs. Conversely, JSON is ubiquitous in Android development and provides a more structured way for Android apps to consume input. However, ensuring proper key/value formatting when working with generative models can be challenging.

With the general availability of Vertex AI in Firebase, we implemented solutions to streamline JSON generation with proper key/value formatting:

Response MIME type identifier

If you have tried generating JSON with a generative AI model, you have likely found yourself with unwanted extra text that makes parsing the JSON more challenging.

For example:

Sure, here is your JSON:
```
{
  "someKey": "someValue",
  ...
}
```
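Without a response MIME type, a common workaround is to cut the JSON payload out of the surrounding text before parsing it. The sketch below is a deliberately naive, hypothetical helper (`extractJsonObject` is not an SDK function): it assumes the reply contains a single JSON object and returns the text from the first `{` to the last `}` without validating it.

```kotlin
// Extracts the JSON object from a model reply that may wrap it in prose or
// Markdown fences. Naive by design: it takes everything between the first '{'
// and the last '}' and does not check that the result is valid JSON.
fun extractJsonObject(reply: String): String? {
    val start = reply.indexOf('{')
    val end = reply.lastIndexOf('}')
    return if (start >= 0 && end > start) reply.substring(start, end + 1) else null
}
```

This breaks easily (for example, when the surrounding prose itself contains braces), which is why asking the model for JSON output directly is the more robust approach.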

When using Gemini 1.5 Pro or Gemini 1.5 Flash, you can explicitly set the model's response MIME type to application/json in the generation configuration, which instructs the model to generate well-structured JSON output.

```kotlin
val generativeModel = Firebase.vertexAI.generativeModel(
    modelName = "gemini-1.5-flash",
    …
    generationConfig = generationConfig {
        responseMimeType = "application/json"
    }
)
```

Review the API reference for more details.

Soon, the Android SDK for Vertex AI in Firebase will enable you to define the JSON schema expected in the response.

Multimodal capabilities

Both Gemini 1.5 Flash and Gemini 1.5 Pro are multimodal models. This means they can process input in multiple formats, including text, images, audio, and video. In addition, both have long context windows, capable of handling up to 1 million tokens for Gemini 1.5 Flash and 2 million tokens for Gemini 1.5 Pro.
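Long as these windows are, a large video or a long conversation history can still exceed them, so it can help to check a prompt's size before sending it. A minimal sketch, assuming the prompt's token count was already obtained elsewhere (for example, from the SDK's `countTokens()` call). The limits are the round figures quoted above, and the 8,192-token reservation for the reply is an illustrative assumption.

```kotlin
// Approximate context-window sizes quoted in this post, keyed by model-name prefix.
// These are round figures; check the model documentation for exact limits.
val approxContextWindow: Map<String, Int> = mapOf(
    "gemini-1.5-flash" to 1_000_000,
    "gemini-1.5-pro" to 2_000_000,
)

// Returns true if `promptTokens`, plus tokens reserved for the reply, fit in the
// model's approximate window. Unknown model names return false.
fun fitsInContext(modelName: String, promptTokens: Int, reservedForOutput: Int = 8_192): Boolean {
    val limit = approxContextWindow.entries
        .firstOrNull { modelName.startsWith(it.key) }
        ?.value
        ?: return false
    return promptTokens + reservedForOutput <= limit
}
```

Matching on the name prefix lets versioned names such as `gemini-1.5-flash-002` resolve to the same limit.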

These features open doors to innovative functionality that was previously inaccessible, such as automatically generating descriptive captions for images, identifying topics in a conversation and generating chapters from an audio file, or describing the scenes and actions in a video file.

You can pass an image to the model as shown in this example:

```kotlin
import android.graphics.BitmapFactory
import com.google.firebase.vertexai.type.content

val contentResolver = applicationContext.contentResolver
contentResolver.openInputStream(imageUri).use { stream ->
    stream?.let {
        val bitmap = BitmapFactory.decodeStream(stream)

        // Provide a prompt that includes the image specified above and text
        val prompt = content {
            image(bitmap)
            text("How many people are in this picture?")
        }

        // generateContent() is a suspend function: call it from a coroutine
        val response = generativeModel.generateContent(prompt)
    }
}
```

You can also pass a video to the model:

```kotlin
import com.google.firebase.vertexai.type.content

val contentResolver = applicationContext.contentResolver
contentResolver.openInputStream(videoUri).use { stream ->
    stream?.let {
        val bytes = stream.readBytes()

        // Provide a prompt that includes the video specified above and text
        val prompt = content {
            blob("video/mp4", bytes)
            text("What's in the video?")
        }

        // generateContent() is a suspend function: call it from a coroutine
        val fullResponse = generativeModel.generateContent(prompt)
    }
}
```

You can learn more about multimodal prompting in the Vertex AI in Firebase documentation.

Note: This method enables you to pass files up to 20 MB. For larger files, use Cloud Storage for Firebase and include the file's URL in your multimodal request. Read the documentation for more information.
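An app that accepts user-picked media can decide up front which path to take. A minimal sketch: `AttachmentPlan` and `planAttachment` are hypothetical names, and treating 20 MB as 20 × 1024 × 1024 bytes for the file alone (ignoring the rest of the request) is an assumption; leave some headroom in practice.

```kotlin
// Assumed inline threshold derived from the 20 MB note above.
const val MAX_INLINE_BYTES: Long = 20L * 1024 * 1024

// Hypothetical description of how an attachment should be sent to the model.
sealed interface AttachmentPlan {
    // Small enough to read into memory and send in the request itself.
    data class Inline(val mimeType: String) : AttachmentPlan
    // Too large: upload to Cloud Storage for Firebase and reference its URL instead.
    data class UploadToStorage(val mimeType: String) : AttachmentPlan
}

fun planAttachment(sizeBytes: Long, mimeType: String): AttachmentPlan =
    if (sizeBytes <= MAX_INLINE_BYTES) AttachmentPlan.Inline(mimeType)
    else AttachmentPlan.UploadToStorage(mimeType)
```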

Function calling: Extending the model's capabilities

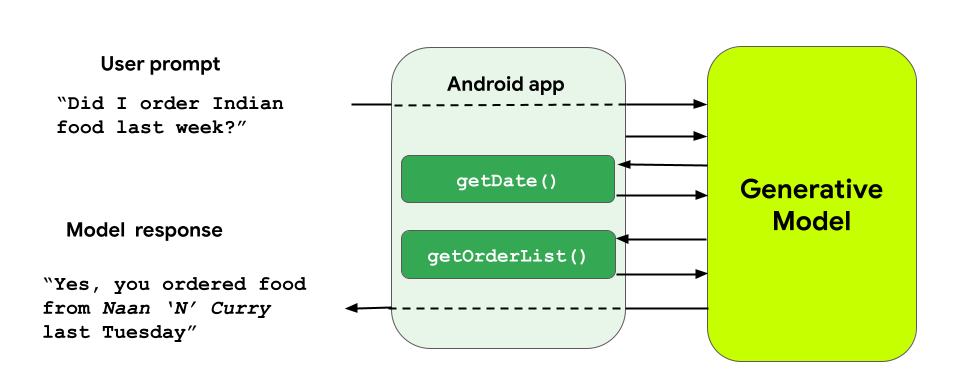

Function calling enables you to extend the capabilities of generative models. For example, you can enable the model to retrieve information from your SQL database and feed it back into the context of the prompt. You can also let the model trigger actions by calling functions in your app's source code. In essence, function calls bridge the gap between the Gemini models and your Kotlin code.

Take the example of a food delivery application that wants to implement a conversational interface with Gemini 1.5 Flash. Assume that this application has a getFoodOrder(cuisine: String) function that returns the list of the user's orders for a specific type of cuisine:

```kotlin
fun getFoodOrder(cuisine: String): JSONObject {
    // implementation…
}
```

Note that, to be usable by the model, the function needs to return its response in the form of a JSONObject.
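As an illustration of what such an implementation might do, the sketch below filters a hypothetical in-memory list of orders (standing in for the app's database) and builds the result as plain maps and lists. `FoodOrder`, `sampleOrders`, and `foodOrderPayload` are all hypothetical. On Android, the returned map can be wrapped with the `org.json.JSONObject(Map)` constructor to produce the `JSONObject` the function must return.

```kotlin
// Hypothetical order record; a real app would read these from its database.
data class FoodOrder(val id: String, val cuisine: String, val dish: String)

val sampleOrders = listOf(
    FoodOrder("1", "italian", "Margherita pizza"),
    FoodOrder("2", "japanese", "Ramen"),
    FoodOrder("3", "Italian", "Lasagna"),
)

// Builds the payload for one cuisine (case-insensitive match) as maps and lists,
// ready to be converted to a JSONObject.
fun foodOrderPayload(cuisine: String, orders: List<FoodOrder> = sampleOrders): Map<String, Any> {
    val matches = orders.filter { it.cuisine.equals(cuisine, ignoreCase = true) }
    return mapOf(
        "cuisine" to cuisine,
        "orders" to matches.map { mapOf("id" to it.id, "dish" to it.dish) },
    )
}
```

Returning named fields such as `dish` gives the model enough structure to phrase a natural-language answer about the orders.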

To make the response available to Gemini 1.5 Flash, use defineFunction to create a definition of your function that the model will be able to understand:

```kotlin
val getOrderListFunction = defineFunction(
    name = "getOrderList",
    description = "Get the list of food orders from the user for a given type of cuisine.",
    Schema.str(name = "cuisineType", description = "the type of cuisine for the order")
) { cuisineType ->
    getFoodOrder(cuisineType)
}
```

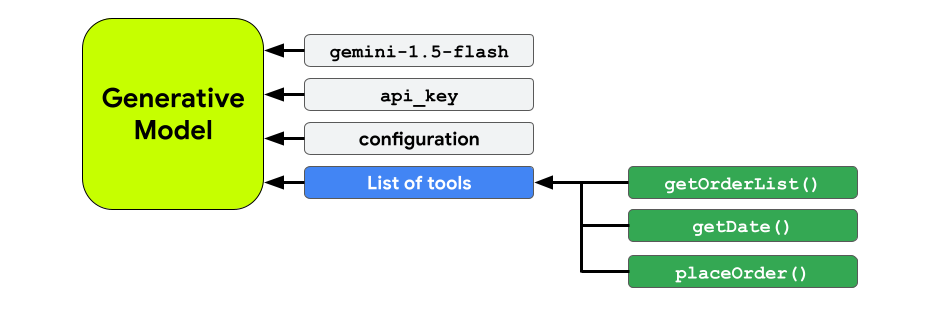

Then, when you instantiate the model, share this function definition with the model using the tools parameter:

```kotlin
val generativeModel = Firebase.vertexAI.generativeModel(
    modelName = "gemini-1.5-flash",
    ...
    tools = listOf(Tool(listOf(getOrderListFunction)))
)
```

Finally, when you get a response from the model, check whether the model is actually requesting to execute the function:

```kotlin
// Start a multi-turn chat session with the model configured above
val chat = generativeModel.startChat()

// Send the message to the generative model
var response = chat.sendMessage(prompt)

// Check if the model responded with a function call
response.functionCall?.let { functionCall ->
    // Try to retrieve the stored lambda from the model's tools and
    // throw an exception if the returned function was not declared
    val matchedFunction = generativeModel.tools?.flatMap { it.functionDeclarations }
        ?.firstOrNull { it.name == functionCall.name }
        ?: throw InvalidStateException("Function not found: ${functionCall.name}")

    // Call the lambda retrieved above
    val apiResponse: JSONObject = matchedFunction.execute(functionCall)

    // Send the API response back to the generative model
    // so that it generates a text response that can be displayed to the user
    response = chat.sendMessage(
        content(role = "function") {
            part(FunctionResponsePart(functionCall.name, apiResponse))
        }
    )
}

// If the model responds with text, show it in the UI
response.text?.let { modelResponse ->
    println(modelResponse)
}
```
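The lookup step in the snippet above amounts to a name-to-handler table. The SDK-independent sketch below isolates that idea; `FunctionCallRequest` and `FunctionRegistry` are hypothetical types, and arguments and results are simplified to strings rather than JSON.

```kotlin
// Hypothetical, simplified function call: a name plus string arguments.
data class FunctionCallRequest(val name: String, val args: Map<String, String>)

class FunctionRegistry {
    private val handlers = mutableMapOf<String, (Map<String, String>) -> String>()

    // Declares a function the model is allowed to call.
    fun register(name: String, handler: (Map<String, String>) -> String) {
        handlers[name] = handler
    }

    // Runs the handler the model asked for, failing loudly on undeclared names.
    fun dispatch(call: FunctionCallRequest): String {
        val handler = handlers[call.name]
            ?: throw IllegalStateException("Function not found: ${call.name}")
        return handler(call.args)
    }
}
```

Failing on unknown names, rather than silently ignoring them, surfaces mismatches between the declared tools and the handlers your app actually provides.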

To summarize, you provide the functions (or tools) to the model at initialization. Then, when appropriate, the model requests the execution of the relevant function, and your app runs it and returns the results to the model.
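The code earlier in this section handles a single function call, but a model may request several in a row before answering in text. The sketch below shows one way to loop until a text reply arrives. It is SDK-independent and hypothetical: `ModelReply` stands in for the SDK response, `sendFunctionResult` stands in for sending the function result through `chat.sendMessage()`, and the cap of five calls is an arbitrary safeguard.

```kotlin
// Hypothetical, simplified model reply: text for the user or a function call request.
sealed interface ModelReply {
    data class Text(val text: String) : ModelReply
    data class Call(val name: String, val args: Map<String, String>) : ModelReply
}

// Executes requested functions until the model answers with text, capped at
// `maxCalls` so a misbehaving model cannot loop forever.
fun runUntilText(
    firstReply: ModelReply,
    execute: (ModelReply.Call) -> String,
    sendFunctionResult: (name: String, result: String) -> ModelReply,
    maxCalls: Int = 5,
): String {
    var reply = firstReply
    repeat(maxCalls) {
        when (val current = reply) {
            is ModelReply.Text -> return current.text
            is ModelReply.Call -> reply = sendFunctionResult(current.name, execute(current))
        }
    }
    return (reply as? ModelReply.Text)?.text
        ?: throw IllegalStateException("Model still requesting functions after $maxCalls calls")
}
```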

You can read more about function calling in the Vertex AI in Firebase documentation.

Unlocking the potential of the Gemini API in your app

The Gemini API offers a treasure trove of advanced features that empower Android developers to craft truly innovative and engaging applications. By going beyond basic text prompts and exploring the capabilities highlighted in this blog post, you can create AI-powered experiences that delight your users and set your app apart in the competitive Android landscape.

Read more about how some Android apps are already starting to leverage the Gemini API.

To learn more about AI on Android, check out other resources we have available during AI on Android Spotlight Week.

Use the #AndroidAI hashtag to share your creations or feedback on social media, and join us at the forefront of the AI revolution!

The code snippets in this blog post have the following license:

```
// Copyright 2024 Google LLC.
// SPDX-License-Identifier: Apache-2.0
```