At Databricks, we know that data is one of your most valuable assets. Our product and security teams work together to deliver an enterprise-grade Data Intelligence Platform that enables you to defend against security risks and meet your compliance obligations. Over the past year, we are proud to have delivered new capabilities and resources such as securing data access with Azure Private Link for Databricks SQL Serverless, keeping data private with Azure firewall support for Workspace storage, protecting data in-use with Azure confidential computing, achieving FedRAMP High Agency ATO on AWS GovCloud, publishing the Databricks AI Security Framework, and sharing details on our approach to Responsible AI.

According to the 2024 Verizon Data Breach Investigations Report, the number of data breaches has increased by 30% since last year. We believe it is crucial for you to understand and appropriately use our security features and adopt recommended security best practices to mitigate data breach risks effectively.

In this blog, we’ll explain how you can leverage some of our platform’s top controls and recently released security features to establish a robust defense-in-depth posture that protects your data and AI assets. We will also provide an overview of our security best practices resources so that you can get up and running quickly.

Protect your data and AI workloads across the Databricks Data Intelligence Platform

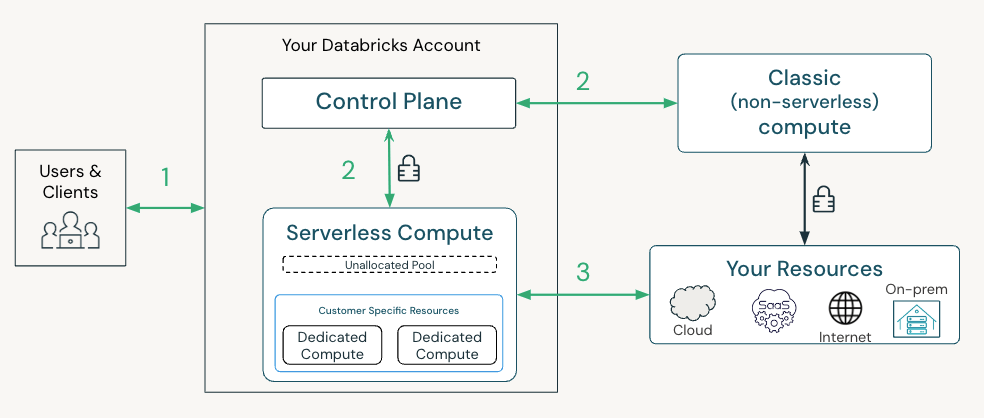

The Databricks Platform provides security guardrails to defend against account takeover and data exfiltration risks at each access point. In the image above, we outline a typical lakehouse architecture on Databricks with 3 surfaces to secure:

- Your clients, users and applications, connecting to Databricks

- Your workloads connecting to Databricks services (APIs)

- Your data being accessed from your Databricks workloads

Let’s now walk through, at a high level, some of the top controls (either enabled by default or available for you to turn on) and new security capabilities for each connection point. Our full list of recommendations based on different threat models can be found in our security best practice guides.

Connecting users and applications into Databricks (1)

To protect against access-related risks, you should use multiple factors for both authentication and authorization of users and applications into Databricks. Using only passwords is inadequate due to their susceptibility to theft, phishing, and weak user management. In fact, as of July 10, 2024, Databricks-managed passwords reached end-of-life and are no longer supported in the UI or via API authentication. Beyond this additional default security, we advise you to implement the controls below:

- Authenticate via single sign-on at the account level for all user access (AWS; SSO is automatically enabled on Azure/GCP)

- Leverage multi-factor authentication offered by your IdP to verify all users and applications that are accessing Databricks (AWS, Azure, GCP)

- Enable unified login for all workspaces using a single account-level SSO and configure SSO Emergency access with MFA for streamlined and secure access management (AWS; Databricks integrates with built-in identity providers on Azure/GCP)

- Use front-end private link on workspaces to restrict access to trusted private networks (AWS, Azure, GCP)

- Configure IP access lists on workspaces and for your account to only allow access from trusted network locations, such as your corporate network (AWS, Azure, GCP)
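To make the last control concrete, here is a minimal Python sketch of how an allow list of trusted network ranges behaves. It validates CIDR ranges with the standard library and builds a request body. The field names (`label`, `list_type`, `ip_addresses`) follow our understanding of the workspace IP access lists REST API and should be treated as assumptions to check against the API reference; the label and address ranges are hypothetical documentation values, and nothing is sent over the network.

```python
import ipaddress
import json


def build_ip_access_list(label, cidrs, list_type="ALLOW"):
    """Validate CIDR ranges and return a request body for an IP access list."""
    if list_type not in ("ALLOW", "BLOCK"):
        raise ValueError(f"unsupported list_type: {list_type}")
    normalized = []
    for cidr in cidrs:
        # strict=False accepts a bare host address such as 198.51.100.7
        # and normalizes it to a single-host network (198.51.100.7/32).
        network = ipaddress.ip_network(cidr, strict=False)
        normalized.append(str(network))
    # Field names are assumptions; confirm them against the API reference.
    return {"label": label, "list_type": list_type, "ip_addresses": normalized}


def is_allowed(client_ip, payload):
    """Return True if client_ip falls inside any range in the payload."""
    address = ipaddress.ip_address(client_ip)
    return any(
        address in ipaddress.ip_network(cidr) for cidr in payload["ip_addresses"]
    )


# Hypothetical corporate ranges taken from the reserved documentation blocks.
corporate = build_ip_access_list("corp-network", ["203.0.113.0/24", "198.51.100.7"])
print(json.dumps(corporate, indent=2))
```

Validating the ranges before submitting them helps catch a mistyped CIDR that would otherwise lock out legitimate users or leave the list wider than intended.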

Connecting your workloads to Databricks services (2)

To prevent workload impersonation, Databricks authenticates workloads with multiple credentials during the lifecycle of the cluster. Our recommendations and available controls depend on your deployment architecture. At a high level:

- For Classic clusters that run on your network, we recommend configuring a back-end private link between the compute plane and the control plane. Configuring the back-end private link ensures that your cluster can only be authenticated over that dedicated and private channel.

- For Serverless, Databricks automatically provides a defense-in-depth security posture on our platform using a combination of application-level credentials, mTLS client certificates and private links to mitigate Workspace impersonation risks.

Connecting from Databricks to your storage and data sources (3)

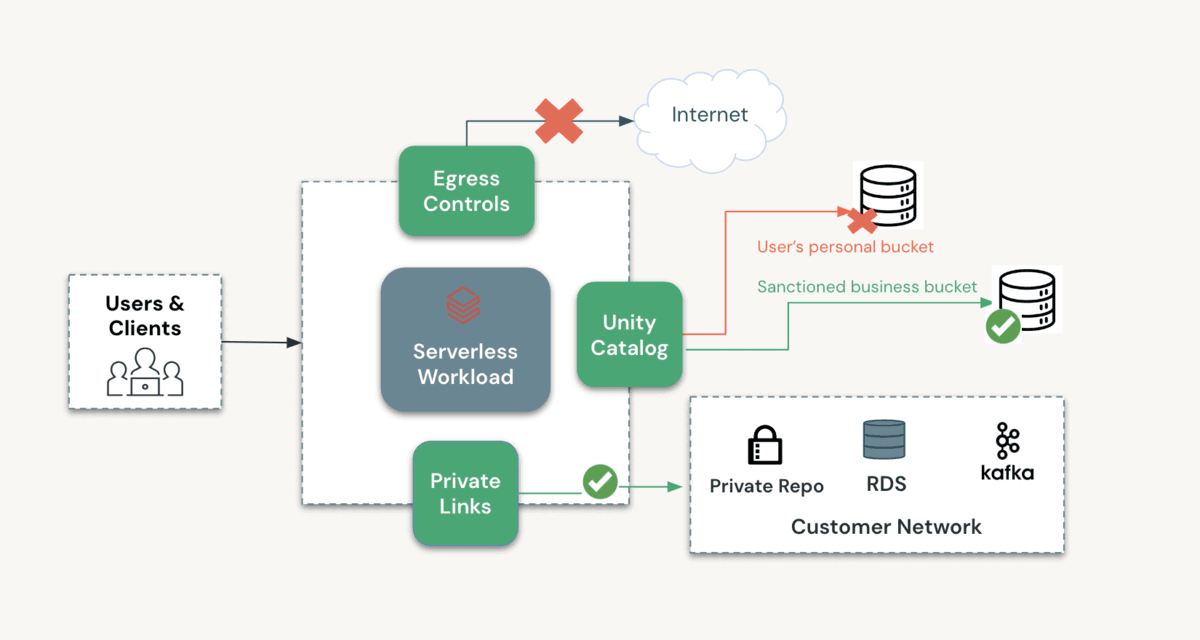

To ensure that data can only be accessed by the right user and workload on the right Workspace, and that workloads can only write to authorized storage locations, we recommend leveraging the following features:

- Using Unity Catalog to govern access to data: Unity Catalog provides several layers of protection, including fine-grained access controls and time-bound down-scoped credentials that are only accessible to trusted code by default.

- Leveraging Mosaic AI Gateway: Now in Public Preview, Mosaic AI Gateway allows you to monitor and control the usage of both external models and models hosted on Databricks across your enterprise.

- Configuring access from authorized networks: You can configure access policies using S3 bucket policies on AWS, Azure storage firewall and VPC Service Controls on GCP.

  - With Classic clusters, you can lock down access to your network via the above-listed controls.

  - With Serverless, you can lock down access to the Serverless network (AWS, Azure) or to a dedicated private endpoint on Azure. On Azure, you can now enable the storage firewall for your Workspace storage (DBFS root) account.

  - Resources external to Databricks, such as external models or storage accounts, can be configured with dedicated and private connectivity. Here is a deployment guide for accessing Azure OpenAI, one of our most requested scenarios.

- Configuring egress controls to prevent access to unauthorized storage locations: With Classic clusters, you can configure egress controls on your network. With SQL Serverless, Databricks does not allow internet access from untrusted code such as Python UDFs. To learn how we’re enhancing egress controls as you adopt more Serverless products, please fill out this form to join our previews.
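As an illustration of restricting storage access to authorized networks on AWS, the sketch below generates an S3 bucket policy that denies any request not arriving through a trusted VPC endpoint. It uses the general AWS pattern of a `Deny` statement with the `aws:SourceVpce` condition key and is not a Databricks-specific template. The bucket name and endpoint ID are hypothetical placeholders, and a real policy usually needs further exceptions (for example, for administrative roles) so that you do not lock yourself out.

```python
import json


def build_vpce_only_policy(bucket, vpc_endpoint_ids):
    """Return an S3 bucket policy denying requests from outside the given VPC endpoints."""
    if not vpc_endpoint_ids:
        raise ValueError("at least one VPC endpoint ID is required")
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "DenyAccessOutsideTrustedEndpoints",
                "Effect": "Deny",
                "Principal": "*",
                "Action": "s3:*",
                # Cover both the bucket itself and every object in it.
                "Resource": [
                    f"arn:aws:s3:::{bucket}",
                    f"arn:aws:s3:::{bucket}/*",
                ],
                # The Deny applies when the request did not come through
                # one of the listed VPC endpoints.
                "Condition": {
                    "StringNotEquals": {"aws:SourceVpce": list(vpc_endpoint_ids)}
                },
            }
        ],
    }


# Hypothetical bucket name and endpoint ID, for illustration only.
policy = build_vpce_only_policy("example-lakehouse-bucket", ["vpce-0123456789abcdef0"])
print(json.dumps(policy, indent=2))
```

Generating the policy from code keeps the list of trusted endpoints in one reviewable place. Azure storage firewall rules and VPC Service Controls on GCP play the equivalent role on the other clouds.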

The diagram in this section outlines how you can configure a private and secure environment for processing your data as you adopt Databricks Serverless products. As described above, multiple layers of protection secure all access to and from this environment.

Define, deploy and monitor your data and AI workloads with industry-leading security best practices

Now that we’ve outlined a set of key controls available to you, you are probably wondering how you can quickly operationalize them for your business. Our Databricks Security team recommends taking a “define, deploy, and monitor” approach using the resources they’ve developed from their experience working with hundreds of customers.

- Define: You should configure your Databricks environment by reviewing our best practices along with the risks specific to your organization. We have crafted comprehensive best practice guides for Databricks deployments on all three major clouds. These documents offer a checklist of security practices, threat models, and patterns distilled from our enterprise engagements.

- Deploy: Terraform templates make deploying secure Databricks workspaces easy. You can programmatically deploy workspaces and the required cloud infrastructure using the official Databricks Terraform provider. These unified Terraform templates are preconfigured with hardened security settings similar to those used by our most security-conscious customers. View our GitHub to get started on AWS, Azure, and GCP.

- Monitor: The Security Analysis Tool (SAT) can be used to monitor adherence to security best practices in Databricks workspaces on an ongoing basis. We recently upgraded the SAT to streamline setup and enhance checks, aligning them with the Databricks AI Security Framework (DASF) for improved coverage of AI security risks.
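The idea behind the monitor step, comparing what is enabled in a workspace against a defined checklist, can be sketched in a few lines of Python. This is only an illustration of the concept and not how the SAT is implemented; the control names and the shape of the `workspace` dictionary are hypothetical, chosen to mirror the recommendations in this post.

```python
# Hypothetical control names drawn from the recommendations in this post.
RECOMMENDED_CONTROLS = (
    "account_sso",
    "mfa",
    "front_end_private_link",
    "ip_access_lists",
    "back_end_private_link",
    "unity_catalog",
)


def missing_controls(workspace):
    """Return the recommended controls that a workspace has not enabled."""
    # A control that is absent from the dictionary counts as not enabled.
    return [name for name in RECOMMENDED_CONTROLS if not workspace.get(name, False)]


# Hypothetical workspace state, for illustration only.
example = {"account_sso": True, "mfa": True, "unity_catalog": True}
print(missing_controls(example))
```

Running a check like this on a schedule turns the defined checklist into an ongoing signal instead of a one-time review.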

Stay ahead in data and AI security

The Databricks Data Intelligence Platform provides an enterprise-grade defense-in-depth approach for protecting data and AI assets. For recommendations on mitigating security risks, please refer to our security best practices guides for your chosen cloud(s). For a summarized checklist of controls related to unauthorized access, please refer to this document.

We continuously enhance our platform based on your feedback, evolving industry standards, and emerging security threats to better meet your needs and stay ahead of potential risks. To stay informed, bookmark our Security and Trust blog, head over to our YouTube channel, and visit the Databricks Security and Trust Center.