(Lewis-Tse/Shutterstock)

With $274 billion in revenue last year and $3.3 trillion in assets under management, JPMorgan Chase has more resources than most to devote to building a winning data and AI strategy. But as James Massa, JPMorgan Chase’s senior executive director of software engineering and architecture, explained during his Solix Empower keynote last week, even the biggest companies in the world must pay close attention to the data and AI details in order to succeed.

In his Solix Empower 2024 keynote address, titled “Data Quality and Data Strategy for AI, Measuring AI Value, Testing LLMs, and AI Use Cases,” Massa provided a behind-the-scenes glimpse into how the storied financial services firm handles data and AI challenges like model testing, data freshness, explainability, calculating value, and regulatory compliance.

One of the big issues in adopting large language models (LLMs) is trust, Massa said. When a company hires a new employee, it looks for a degree that indicates a university has vetted his or her abilities. While LLMs, ostensibly, are designed to replace (or at least augment) human workers, we don’t have the same type of certification that says “you can trust this LLM.”

“What is the experience of the LLM employee that’s there? What kind of data they…trained upon? And are they very good?” Massa said in the Qualcomm Auditorium on the University of California, San Diego campus, where the data management software vendor Solix Technologies held the event. “That’s the thing that doesn’t exist yet for AI.”

We’re still in the early days of AI, he said, and the AI vendors, like OpenAI, are constantly tweaking their algorithms. It’s up to the AI adopters to continuously test their GenAI applications to ensure things are working as advertised, since they don’t get any guarantees from the vendors.

“It’s challenging to quantify the LLM quality and set benchmarks,” he said. “Because there’s no standard and it’s hard to quantify, we’re losing something that has been there for years.”

James Massa, JPMorgan Chase’s senior executive director of software engineering and architecture, speaking at Solix Empower 2024 at UCSD on November 14, 2024

In the old days, the quality assurance (QA) team would stand in the way of pushing an app to production, Massa said. But thanks to the development of DevOps tools and techniques, such as Git and CI/CD, QA practices have become more standardized. Software quality has improved.

“We had things like expected results, full coverage of the code. We reached an understanding that if this and that happens, then you can go to production. That’s not there as much today,” Massa said. “Now we’re back to the push and the pull of whether something should go or shouldn’t go. It becomes a personality contest about who’s got more of the gravitas and weight to stand up in the meeting and say, this needs to go forward or this needs to be pulled back.”

In the old days, developers worked in a deterministic paradigm, and software (mostly) worked in a predictable manner. But AI models are probabilistic, and there are no guarantees you’ll get the same answer twice. Companies must keep humans in the loop to ensure that AI doesn’t get too far out of touch with reality.

Instead of getting a single, correct answer during QA testing, the best that AI testers can hope for is an “expected type of answer,” Massa said. “There’s no such thing as testing complete,” he said. “Now the LLMs, they’re almost alive. The data drifts and you get different results as a result.”

Things get even more complex when AI models interact with other AI models. “It’s like infinity squared. We don’t know what’s going to happen,” he said. “So we must decide on how much human in the loop we’re going to need to review the answers.”

JPMC uses several tools to test various aspects of LLMs, including Recall-Oriented Understudy for Gisting Evaluation (ROUGE) for testing recall, BiLingual Evaluation Understudy (BLEU) for testing precision, Ragas for measuring a combination of recall, faithfulness, context relevancy, and answer relevancy, and the Elo rating system for testing how models change over time, Massa said.
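To make the recall and precision distinction concrete, here is a minimal sketch of the ideas behind these metrics. It is not JPMC’s tooling and not the full metrics: `rouge1_recall` is a unigram-only version of ROUGE, `unigram_precision` is only the first ingredient of BLEU (which also uses longer n-grams and a brevity penalty), and `elo_update` is the standard Elo formula with an assumed K-factor of 32. All function names are hypothetical.

```python
from collections import Counter


def _token_overlap(candidate: str, reference: str) -> tuple[int, int, int]:
    """Return (clipped overlap, candidate length, reference length) in tokens."""
    cand = Counter(candidate.lower().split())
    ref = Counter(reference.lower().split())
    # Counter intersection keeps the minimum count per token (clipping).
    overlap = sum((cand & ref).values())
    return overlap, sum(cand.values()), sum(ref.values())


def rouge1_recall(candidate: str, reference: str) -> float:
    """Share of reference tokens that the candidate answer recovered."""
    overlap, _, ref_len = _token_overlap(candidate, reference)
    return overlap / ref_len if ref_len else 0.0


def unigram_precision(candidate: str, reference: str) -> float:
    """Share of candidate tokens that also appear in the reference."""
    overlap, cand_len, _ = _token_overlap(candidate, reference)
    return overlap / cand_len if cand_len else 0.0


def elo_update(rating_a: float, rating_b: float, score_a: float,
               k: float = 32.0) -> tuple[float, float]:
    """Standard Elo update; score_a is 1.0 (A wins), 0.5 (draw), or 0.0."""
    expected_a = 1.0 / (1.0 + 10 ** ((rating_b - rating_a) / 400.0))
    delta = k * (score_a - expected_a)
    return rating_a + delta, rating_b - delta
```

A short answer can score perfect precision while missing half of the reference, which is why recall and precision are tracked separately. Comparing two model versions head to head and feeding the wins into `elo_update` is one way to follow how a model shifts over time.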

Another side effect of the lack of trust in AI systems is the increased need for explainability. Massa recalled a simple rule that all software engineering managers followed.

“You explain it to me in 60 seconds. If you can’t do that, you don’t sufficiently understand it, and I don’t trust that you haven’t made a lot of bugs. I don’t trust that this thing should go to production,” Massa said. “That was the way we operated. Explainability is a lot like that with the LLM. If you can’t explain to me why you’re getting these results and how you know you won’t get false negatives, then you can’t go to production.”

The amount of testing that AI will require is immense, particularly if regulators are involved. But there are limits to how much testing can be realistically done. For instance, say an AI developer has built a model and tested it thoroughly with six months’ worth of data, followed by further analysis of the test automated by a machine, Massa said. “I should be going to production on roller skates,” he told his audience. “This is great.”

But then the powers that be dropped a big word on him: Sustainability.

“I’d never heard [it] before, sustainability,” he said. “This is what sustainability says on a go-forward basis. Is this sustainable? How do you know on a go-forward basis this thing won’t fall apart on you? How do you keep up with that?”

The answer, Massa was told, was to have a second LLM check the results of the first. That led Massa to wonder: Who’s checking the second LLM? “So it’s a hall of mirrors,” he said. “Just like in compliance…there’s first, second, third lines of compliance defense.”

If the lack of certification, QA challenges, and testing sustainability doesn’t trip you up, there is always the potential to have data problems, including stale data. Data that has been sitting in one place for a long time may no longer meet the needs of the company. That requires more testing. Anything impacting the AI product, whether it’s vector embeddings or documents used for RAG, must be checked, he said. Oftentimes, there will be dozens of versions of a single document, so companies also need an expiry system for deprecating old versions of documents that are more likely to contain stale data.
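As a rough illustration of what such an expiry system might look like, the sketch below keeps only the newest version of each document and then drops anything older than a freshness window before it reaches a RAG index. The record fields (`doc_id`, `version`, `updated`) and the function name `select_fresh` are assumptions made for this example; Massa did not describe an implementation.

```python
from datetime import datetime, timedelta


def select_fresh(docs: list[dict], now: datetime, max_age: timedelta) -> list[dict]:
    """Keep the latest version of each document, then drop expired ones."""
    latest: dict[str, dict] = {}
    for doc in docs:
        current = latest.get(doc["doc_id"])
        # Older versions of the same document are deprecated in favor of the newest.
        if current is None or doc["version"] > current["version"]:
            latest[doc["doc_id"]] = doc
    # Even the newest version expires once it is older than the freshness window.
    return [d for d in latest.values() if now - d["updated"] <= max_age]
```

The window length is a policy decision: a rate sheet might expire in days, while a product manual could stay valid for a year.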

“It’s very simple,” Massa said. “It’s not rocket science, what needs to be done. But it takes enormous effort and money to make an app. And hopefully there’ll be more [vendor] tools that help us do it. But so far, there’s a lot of rolling your own to get it done.”

Checking for data quality issues one time won’t get you far with Massa, who advocates for a “zero trust” policy when it comes to data quality. And once a data quality issue is detected, the company must have a kind of ticketing workflow system to make sure that the issues are fixed.

“It’s great, for example, that you tested all the data once on the way in. But how do you know that the data hasn’t gone bad by some odd process along the way while it was sitting there?” he said. “Only if you test it before you use it. So think zero-trust data quality.”
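One way to picture the “test it before you use it” idea, combined with the ticketing workflow mentioned above, is a gate that reruns quality checks every time a record is read and files a ticket when a check fails. This is a hedged sketch only: the class `QualityGate`, its fields, and the in-memory ticket list are hypothetical stand-ins for whatever checking and ticketing systems a company actually runs.

```python
from dataclasses import dataclass, field
from typing import Callable, Optional

Check = Callable[[dict], bool]


@dataclass
class QualityGate:
    """Re-validates a record at read time instead of trusting ingest-time checks."""
    checks: dict[str, Check]
    tickets: list[dict] = field(default_factory=list)

    def use(self, record: dict) -> Optional[dict]:
        """Return the record if every check passes; otherwise file a ticket."""
        failed = [name for name, check in self.checks.items() if not check(record)]
        if failed:
            # Stand-in for a real ticketing workflow: log what failed and for which record.
            self.tickets.append({"record_id": record.get("id"), "failed_checks": failed})
            return None
        return record
```

Callers receive `None` for a record that has gone bad since ingestion, so downstream AI code never sees it, and the ticket list gives the data owners something to work through.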

Guardrails are also needed to keep the AI from behaving badly. These guardrails function like firewalls, in that they prevent bad things from coming into the company as well as prevent bad things from going out, Massa said. Unfortunately, it can be quite challenging to build guardrails to handle every potentiality.

“It’s very hard to come up with these guardrails when there’s infinity squared different things that could happen,” he said. “They said, so prove to me, without a shadow of a doubt, that you’ve covered infinity squared things and you have the guardrails for it.” That’s not likely to happen.

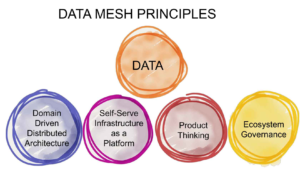

JPMC has centralized functions, but it also wants its data scientists to be free to pursue “passion projects,” Massa said. To enable this kind of data use, the company has adopted a data mesh architecture. “Data mesh is good to make the data both available and discoverable,” he said.

The company’s data strategy is a mix of bottom-up and top-down approaches, Massa said. “We’re kind of playing both ends,” he said. “They said it’s okay to have passion projects, for example, because that fosters innovation and learning, and you never know what’s going to come out when you have the centralized control.”

Some centralized control is necessary, however, such as when it comes to AI regulations, compliance, and sensitive data. “I think we’re doing experiments at either end of the continuum to some extent, and trying to find where we belong, where we want to be going forward,” he said. “Somewhere in the middle, as usual. Truth is always in the middle.”

At one point, Massa’s team had 300 AI models, but that number has been whittled down to about 100, he said. Part of that reduction stemmed from the company’s requirement that every model have a dollar value and generate a positive ROI.

Finding AI value is not always easy, Massa said. For some AI models, such as fraud prevention, assigning an ROI is relatively straightforward, but in other cases, it’s quite difficult. The ambiguity of regulatory compliance rules makes it difficult to assess impacts, too.

Some projects are better candidates for AI than others. Projects that can scale are better for the “AI chainsaw” than projects that can’t scale. “I’m not going to take a chainsaw to cut down that little sapling, only if there’s a giant redwood,” he said. “The AI is a chainsaw.”

Another lesson Massa learned is that people don’t scale. Projects that require constant attention from data scientists are not the best candidates for ongoing investment. That’s a lesson he learned from the days of traditional machine learning.

“It only took one or two or three models before I found out that my entire team is devoted to maintaining the models,” he said. “We can’t make any new models. It doesn’t scale, because people don’t scale. So that should be taken into account as early as possible, so that you don’t end up like me.”

You can view Massa’s presentation here.
