OpenAI’s newest model, o3-mini, is revolutionizing coding tasks with its advanced reasoning, problem-solving, and code generation capabilities. It handles complex queries efficiently and integrates structured data, setting a new standard for AI applications. This article explores using o3-mini and CrewAI to build a Retrieval-Augmented Generation (RAG) research assistant agent that retrieves information from multiple PDFs and processes user queries intelligently. We’ll use CrewAI’s CrewDoclingSource, SerperDevTool, and OpenAI’s o3-mini to enhance automation in research workflows.

Building the RAG Agent with o3-mini and CrewAI

With the overwhelming volume of research being published, an automated RAG-based assistant can help researchers quickly find relevant insights without manually skimming through hundreds of papers. The agent we’re going to build will process PDFs to extract key information and answer queries based on the content of the documents. If the required information isn’t found in the PDFs, it will automatically perform a web search to provide relevant insights. This setup can be extended to more advanced tasks, such as summarizing multiple papers, detecting contradictory findings, or generating structured reports.

In this hands-on guide, we will build a research agent that goes through articles on DeepSeek-R1 and o3-mini to answer the queries we ask about these models. To build this research assistant agent, we will first go through the prerequisites and set up the environment. We’ll then import the necessary modules, set the API keys, and load the research documents. Next, we will define the AI model and integrate the web search tool. Finally, we will create the AI agents, define their tasks, and assemble the crew. Once everything is ready, we’ll run the research assistant agent to find out whether o3-mini is better and safer than DeepSeek-R1.

Prerequisites

Before diving into the implementation, let’s briefly go over what we need to get started. Having the right setup ensures a smooth development process and avoids unnecessary interruptions.

So, make sure you have:

- A working Python environment (3.10 or above; CrewAI does not support older Python versions)

- API keys for OpenAI and Serper (a Google Search API that also provides a Google Scholar endpoint)

With these in place, we’re ready to start building!

Step 1: Install Required Libraries

First, we need to install the necessary libraries. They provide the foundation for document processing, AI agent orchestration, and web search. The ! prefix runs each command from a Jupyter or Colab notebook cell; omit it in a regular terminal.

```
!pip install crewai
!pip install 'crewai[tools]'
!pip install docling
```

These libraries play a crucial role in building an efficient AI-powered research assistant.

- CrewAI provides a robust framework for designing and managing AI agents, allowing you to define specialized roles and automate research efficiently. It also supports task delegation, so agents can collaborate.

- crewai[tools] installs additional tools that extend the agents’ capabilities, enabling them to interact with APIs, perform web searches, and process data.

- Docling specializes in extracting structured knowledge from research documents, making it well suited to processing PDFs, academic papers, and text-based files. In this project, it’s used to extract key findings from arXiv research papers.

Step 2: Import Necessary Modules

```python
import os

from crewai import LLM, Agent, Crew, Task
from crewai_tools import SerperDevTool
from crewai.knowledge.source.crew_docling_source import CrewDoclingSource
```

In this code:

- The os module sets and reads environment variables such as API keys.

- LLM configures the language model that powers reasoning and response generation.

- Agent defines specialized roles to handle tasks.

- Crew manages multiple agents and coordinates their collaboration.

- Task assigns and tracks specific responsibilities.

- SerperDevTool runs Google searches through the Serper API; here it is pointed at Google Scholar to retrieve external references.

- CrewDoclingSource loads research documents with Docling, enabling structured knowledge extraction and analysis.

Step 3: Set API Keys

```python
os.environ['OPENAI_API_KEY'] = 'your_openai_api_key'
os.environ['SERPER_API_KEY'] = 'your_serper_api_key'
```

How to get the API keys:

- OpenAI API key: Sign up at OpenAI and create an API key.

- Serper API key: Register at Serper.dev to obtain an API key.

These API keys give the crew access to OpenAI’s models and to web search.
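Hardcoding keys in a notebook is convenient but easy to leak when the notebook is shared. A common alternative, sketched below under the assumption that the keys were exported in your shell beforehand, is to read them from the environment and fail early if one is missing. The helper name `require_env` is hypothetical and not part of CrewAI.

```python
import os


def require_env(*names: str) -> dict[str, str]:
    """Return the named environment variables, raising if any are unset or empty.

    Hypothetical helper for illustration; it is not part of CrewAI.
    """
    missing = [name for name in names if not os.environ.get(name)]
    if missing:
        raise RuntimeError("Missing environment variables: " + ", ".join(missing))
    return {name: os.environ[name] for name in names}
```

In the notebook you would call `require_env("OPENAI_API_KEY", "SERPER_API_KEY")` before building the crew instead of assigning placeholder strings; the OpenAI client and SerperDevTool then read the keys from those same variables.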

Step 4: Load Research Documents

In this step, we will load the research papers from arXiv so that our AI model can extract insights from them. The selected papers cover these topics:

- https://arxiv.org/pdf/2501.12948: Explores incentivizing reasoning capability in LLMs via reinforcement learning (DeepSeek-R1).

- https://arxiv.org/pdf/2501.18438: Compares the safety of o3-mini and DeepSeek-R1.

- https://arxiv.org/pdf/2401.02954: Discusses scaling open-source language models with a long-term perspective.

```python
content_source = CrewDoclingSource(
    file_paths=[
        "https://arxiv.org/pdf/2501.12948",
        "https://arxiv.org/pdf/2501.18438",
        "https://arxiv.org/pdf/2401.02954",
    ],
)
```
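Before retrieval, RAG pipelines usually split each parsed document into overlapping chunks so that each embedding covers a manageable span of text. CrewAI’s knowledge sources chunk documents internally; the sketch below is a generic illustration of fixed-size character chunking with overlap, not CrewAI’s implementation, and the `chunk_size` and `overlap` values are arbitrary assumptions.

```python
def chunk_text(text: str, chunk_size: int = 200, overlap: int = 50) -> list[str]:
    """Split text into fixed-size character chunks that overlap by `overlap` characters."""
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must be in [0, chunk_size)")
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + chunk_size])
        if start + chunk_size >= len(text):
            break  # this chunk already reaches the end of the text
    return chunks


sample = "DeepSeek-R1 uses reinforcement learning to incentivize reasoning. " * 10
pieces = chunk_text(sample, chunk_size=120, overlap=30)
print(len(pieces), "chunks; first chunk starts:", pieces[0][:40])
```

The overlap means a sentence cut at one chunk boundary still appears whole in the neighboring chunk, which helps retrieval when the answer straddles a boundary.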

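Step 5: Define the AI Model

Now we will define the AI model.

```python
llm = LLM(model="o3-mini", temperature=0)
```

- o3-mini: OpenAI’s compact reasoning model.

- temperature=0: requests the least random output. Note that OpenAI’s reasoning models, o3-mini included, do not support a custom temperature, so this value may be ignored; even with standard models, temperature 0 does not guarantee identical answers to the same query.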
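To make the temperature setting concrete, the sketch below shows how temperature is commonly applied when sampling from a model’s output distribution: logits are divided by the temperature before a softmax, and temperature 0 is treated as greedy (argmax) selection. This is a generic illustration with made-up logits, not how OpenAI’s servers implement sampling.

```python
import math
import random


def softmax_with_temperature(logits: list[float], temperature: float) -> list[float]:
    """Convert logits to probabilities, sharpening (T < 1) or flattening (T > 1) them."""
    if temperature <= 0:
        raise ValueError("use greedy selection for temperature 0")
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def pick_token(logits: list[float], temperature: float, rng: random.Random) -> int:
    """Return the index of the chosen token; temperature 0 always takes the argmax."""
    if temperature == 0:
        return max(range(len(logits)), key=lambda i: logits[i])
    probs = softmax_with_temperature(logits, temperature)
    return rng.choices(range(len(logits)), weights=probs, k=1)[0]


logits = [2.0, 1.0, 0.5]  # made-up scores for three candidate tokens
rng = random.Random(0)
print([round(p, 3) for p in softmax_with_temperature(logits, 1.0)])
print([round(p, 3) for p in softmax_with_temperature(logits, 0.2)])
print({pick_token(logits, 0, rng) for _ in range(5)})  # greedy: always token 0
```

Lower temperatures concentrate probability on the top-scoring token, which is why temperature 0 is associated with more repeatable answers.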

Step 6: Configure the Web Search Tool

To extend the assistant’s research capabilities, we integrate a web search tool that retrieves relevant academic papers when the required information is not found in the provided documents.

```python
serper_tool = SerperDevTool(
    search_url="https://google.serper.dev/scholar",
    n_results=2,  # Fetch the top 2 results
)
```

- search_url="https://google.serper.dev/scholar"

This specifies the Google Scholar search API endpoint. It directs searches to scholarly articles, research papers, and academic sources rather than general web pages.

- n_results=2

This parameter limits the number of search results returned by the tool. Here it is set to return the top two results as ranked by Google Scholar. Keeping the number of results small keeps responses concise and avoids flooding the agent with information, at the cost of possibly missing relevant papers that rank lower.
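For intuition about what the tool does with these two settings, the sketch below builds the kind of POST request the Serper Scholar endpoint expects (a JSON body with a `q` field and an `X-API-KEY` header) and keeps only the first `n_results` entries of a response. The response shape, an `organic` list of result dicts, is an assumption based on Serper’s JSON output, and the helper names `build_scholar_request` and `top_results` are hypothetical; the sketch only constructs the request and never sends it.

```python
import json
import urllib.request

SCHOLAR_URL = "https://google.serper.dev/scholar"


def build_scholar_request(query: str, api_key: str) -> urllib.request.Request:
    """Build (but do not send) a POST request for Serper's Scholar endpoint."""
    body = json.dumps({"q": query}).encode("utf-8")
    return urllib.request.Request(
        SCHOLAR_URL,
        data=body,
        headers={"X-API-KEY": api_key, "Content-Type": "application/json"},
        method="POST",
    )


def top_results(response: dict, n_results: int = 2) -> list[dict]:
    """Keep only the first n_results ranked entries (assumed to live under 'organic')."""
    return response.get("organic", [])[:n_results]


request = build_scholar_request("o3-mini vs DeepSeek-R1 safety", api_key="YOUR_SERPER_KEY")
print(request.get_method(), request.full_url)

# Hypothetical response payload, shaped like Serper's JSON output.
fake_response = {
    "organic": [
        {"title": "Paper A", "link": "https://example.org/a"},
        {"title": "Paper B", "link": "https://example.org/b"},
        {"title": "Paper C", "link": "https://example.org/c"},
    ]
}
print([r["title"] for r in top_results(fake_response, n_results=2)])
```

Passing the request to `urllib.request.urlopen` would actually send it; in the crew, SerperDevTool handles that step for you.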

Step 7: Define the Embedding Model for Document Search

To retrieve relevant information from the documents efficiently, we use an embedding model that converts text into numerical vectors for similarity-based search.

```python
embedder = {
    "provider": "openai",
    "config": {
        "model": "text-embedding-ada-002",
        "api_key": os.environ['OPENAI_API_KEY'],
    },
}
```

The embedder in CrewAI converts text into numerical representations (embeddings), enabling efficient document retrieval and semantic search. Here, the embedding model is provided by OpenAI, specifically “text-embedding-ada-002”, a widely used model for generating text embeddings. The API key is read from the environment variables to authenticate requests.

CrewAI supports multiple embedding providers, including OpenAI and Gemini (Google’s AI models), so you can choose a model based on accuracy, performance, and cost.
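The idea behind similarity-based search can be shown without any API calls. The sketch below ranks document chunks by cosine similarity to a query vector; the three-dimensional vectors and chunk texts are made-up stand-ins for real embeddings (text-embedding-ada-002 actually returns 1,536-dimensional vectors), and `rank_chunks` is a hypothetical helper, not a CrewAI function.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors: 1.0 means the same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def rank_chunks(
    query_vec: list[float], chunks: dict[str, list[float]], k: int = 2
) -> list[tuple[str, float]]:
    """Return the k chunk texts most similar to the query, best first."""
    scored = [(text, cosine_similarity(query_vec, vec)) for text, vec in chunks.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:k]


# Toy 3-d "embeddings"; real ones come from the embedding model.
chunks = {
    "o3-mini safety evaluation results": [0.9, 0.1, 0.0],
    "DeepSeek-R1 reinforcement learning recipe": [0.1, 0.9, 0.1],
    "Scaling laws for open-source LLMs": [0.0, 0.2, 0.9],
}
query = [0.8, 0.2, 0.0]  # pretend embedding of "Which model is safer?"
for text, score in rank_chunks(query, chunks):
    print(f"{score:.3f}  {text}")
```

Cosine similarity compares direction rather than magnitude, so chunks of different lengths can still be compared fairly.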

Step 8: Create the AI Agents

Now we will create the two AI agents required for our research task: the Document Search Agent and the Web Search Agent.

The Document Search Agent is responsible for retrieving answers from the provided research papers and documents. It acts as an expert in analyzing technical content and extracting relevant insights. If the required information is not found, it can delegate the query to the Web Search Agent for further exploration. The allow_delegation=True setting enables this delegation.

```python
doc_agent = Agent(
    role="Document Searcher",
    goal="Find answers using the provided documents. If unavailable, delegate to the Search Agent.",
    backstory="You are an expert in analyzing research papers and technical blogs to extract insights.",
    verbose=True,
    allow_delegation=True,  # Allows delegation to the search agent
    llm=llm,
)
```

The Web Search Agent, on the other hand, is designed to search for missing information online using Google Scholar. It is meant to step in only when the Document Search Agent cannot find an answer in the available documents. Unlike the Document Search Agent, it cannot delegate tasks further (allow_delegation=False). It uses Serper’s Google Scholar endpoint as a tool to fetch relevant academic papers and back its answers with sources.

```python
search_agent = Agent(
    role="Web Searcher",
    goal="Search for the missing information online using Google Scholar.",
    backstory="When the research assistant cannot find an answer, you step in to fetch relevant data from the web.",
    verbose=True,
    allow_delegation=False,
    tools=[serper_tool],
    llm=llm,
)
```
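The intended division of labor between the two agents boils down to a simple rule: answer from your own sources when possible, otherwise hand off, and hand off only if allow_delegation is enabled. The sketch below models that rule in plain Python; `SimpleAgent`, its `knowledge` dictionary, and the exact-match lookup are hypothetical simplifications, not how CrewAI agents reason or delegate internally.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class SimpleAgent:
    """Toy stand-in for an agent: a role, a delegation flag, and answers it already knows."""

    role: str
    allow_delegation: bool
    knowledge: dict[str, str] = field(default_factory=dict)

    def answer(self, question: str, coworker: Optional["SimpleAgent"] = None) -> str:
        # 1. Answer directly if the question is covered by this agent's sources.
        if question in self.knowledge:
            return f"[{self.role}] {self.knowledge[question]}"
        # 2. Otherwise hand off, but only if delegation is allowed.
        if self.allow_delegation and coworker is not None:
            return coworker.answer(question)
        # 3. No sources and no permission to delegate: report the gap.
        return f"[{self.role}] No answer found."


toy_search_agent = SimpleAgent(
    role="Web Searcher",
    allow_delegation=False,
    knowledge={"Which one is better?": "(answer found via Google Scholar)"},
)
toy_doc_agent = SimpleAgent(
    role="Document Searcher",
    allow_delegation=True,
    knowledge={"Which one is safer?": "(answer extracted from the PDFs)"},
)

print(toy_doc_agent.answer("Which one is safer?", coworker=toy_search_agent))
print(toy_doc_agent.answer("Which one is better?", coworker=toy_search_agent))
print(toy_search_agent.answer("Unknown question", coworker=toy_doc_agent))
```

Because the Web Searcher cannot delegate, a question neither agent can answer ends with it rather than bouncing back and forth.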

Step 9: Define the Tasks for the Agents

Now we will create the two tasks for the agents.

The first task involves answering a given question using the available research papers and documents.

```python
task1 = Task(
    description=(
        "Answer the following question using the available documents: {query}. "
        "If the answer is not found, delegate the task to the Web Search Agent."
    ),
    expected_output="A well-researched answer from the provided documents.",
    agent=doc_agent,
)
```

The next task comes into play when the document-based search does not yield an answer.

Task 2: Perform Web Search if Needed

```python
task2 = Task(
    description="If the document-based agent fails to find the answer, perform a web search using Google Scholar.",
    expected_output="A web-searched answer with relevant citations.",
    agent=search_agent,
)
```
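The `{query}` placeholder in task1’s description is filled in from the `inputs` dictionary passed to `crew.kickoff()` when the crew runs. The sketch below imitates that substitution with Python’s built-in `str.format_map`; it is an approximation for illustration, not CrewAI’s own interpolation code, and `fill_description` is a hypothetical helper.

```python
TASK1_TEMPLATE = (
    "Answer the following question using the available documents: {query}. "
    "If the answer is not found, delegate the task to the Web Search Agent."
)


def fill_description(template: str, inputs: dict[str, str]) -> str:
    """Substitute {placeholders} in a task template; raises KeyError if an input is missing."""
    return template.format_map(inputs)


print(fill_description(TASK1_TEMPLATE, {"query": "O3-MINI VS DEEPSEEK-R1: WHICH ONE IS SAFER?"}))
```

The practical takeaway is that the key in `inputs` must match the placeholder name exactly; passing `{"question": ...}` to a template that expects `{query}` fails.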

Step 10: Assemble the Crew

The Crew in CrewAI manages the agents, coordinating the Document Search Agent and the Web Search Agent to complete the tasks. It first searches within the uploaded documents and delegates to web search if needed. Because a Crew runs its tasks sequentially by default, task2 always executes after task1; its description tells the Web Search Agent to search only if the document-based agent failed to find the answer.

- knowledge_sources=[content_source] provides the relevant documents,

- embedder=embedder enables semantic search, and

- verbose=True logs the agents’ actions for easier monitoring.

```python
crew = Crew(
    agents=[doc_agent, search_agent],
    tasks=[task1, task2],
    verbose=True,
    knowledge_sources=[content_source],
    embedder=embedder,
)
```
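CrewAI’s default sequential process also hands earlier tasks’ outputs to later tasks as context, which is how task2 can see what task1 found. The sketch below models that ordering with plain functions; `ToyTask` and `run_sequential` are hypothetical names, and the lambdas stand in for agent calls.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class ToyTask:
    """Hypothetical task: a name plus a function mapping (inputs, context) to an output."""

    name: str
    run: Callable[[dict[str, str], str], str]


def run_sequential(tasks: list[ToyTask], inputs: dict[str, str]) -> str:
    """Run tasks in list order, giving each task the outputs of all earlier tasks as context."""
    outputs: list[str] = []
    for task in tasks:
        context = "\n".join(outputs)
        outputs.append(task.run(inputs, context))
    # The overall result is the output of the final task.
    return outputs[-1] if outputs else ""


toy_tasks = [
    ToyTask("task1", lambda inp, ctx: f"Document answer to: {inp['query']}"),
    ToyTask(
        "task2",
        lambda inp, ctx: f"Web search skipped; reusing -> {ctx}" if ctx else "Searching the web",
    ),
]
print(run_sequential(toy_tasks, {"query": "Which one is safer?"}))
```

In the real crew, whether task2 searches or reuses task1’s answer is decided by the agent reading its description and context, not by a hard-coded condition like the one above.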

Step 11: Run the Research Assistant

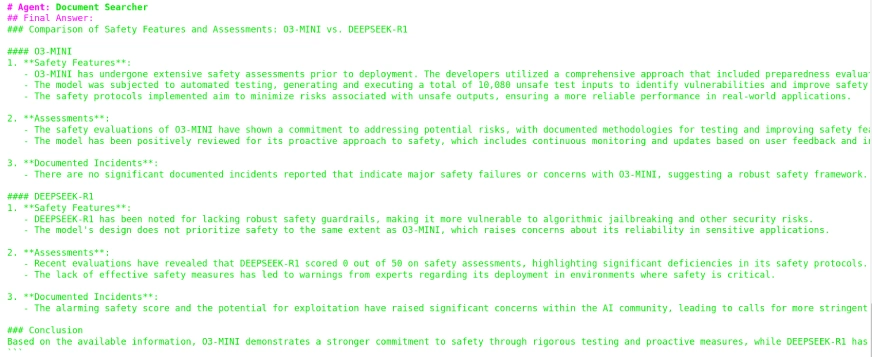

The first query is directed at the documents to check whether the research agent can answer it from them. The question being asked is “O3-MINI vs DEEPSEEK-R1: Which one is safer?”

Example Query 1:

```python
query = "O3-MINI VS DEEPSEEK-R1: WHICH ONE IS SAFER?"
result = crew.kickoff(inputs={"query": query})
print("Final Answer:\n", result)
```

Response:

Here, we can see that the final answer is generated by the Document Searcher, since it located the required information within the provided documents.

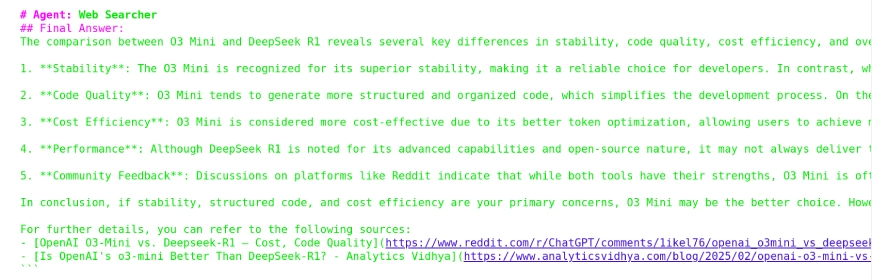

Example Query 2:

Here, the answer to the question “Which one is better, O3 Mini or DeepSeek R1?” is not available in the documents. The system will check whether the Document Search Agent can find an answer; if not, it will delegate the task to the Web Search Agent.

```python
query = "Which one is better, O3 Mini or DeepSeek R1?"
result = crew.kickoff(inputs={"query": query})
print("Final Answer:\n", result)
```

Response:

From the output, we observe that the response was generated by the Web Searcher agent, since the Document Searcher agent did not find the required information. The answer also includes the sources from which it was retrieved.

Conclusion

In this project, we built an AI-powered research assistant that retrieves and analyzes information from research papers and the web. By using CrewAI for agent coordination, Docling for document processing, and Serper for scholarly search, we created a system capable of answering complex queries with structured insights.

The assistant first searches within the documents and delegates to web search when needed, so answers are grounded either in the papers or in cited scholarly sources. This approach improves research efficiency by automating information retrieval and analysis. Combining o3-mini with CrewAI’s CrewDoclingSource and SerperDevTool gives the system both document analysis and scholarly search capabilities. With further customization, this framework can be extended to support more data sources, more advanced reasoning, and richer research workflows.


Frequently Asked Questions

A. CrewAI is a framework that lets you create and manage AI agents with specific roles and tasks. It enables multiple AI agents to collaborate on automating complex workflows.

A. CrewAI uses a structured approach in which each agent has a defined role and can delegate tasks if needed. A Crew object orchestrates these agents to complete tasks efficiently.

A. CrewDoclingSource is a knowledge source in CrewAI that uses Docling to extract structured knowledge from research papers, PDFs, and text-based documents.

A. Serper API is a service that lets AI applications run Google Search queries, including Google Scholar searches for academic papers.

A. Serper API offers both free and paid plans, with a limited number of search requests in the free tier.

A. Unlike standard Google Search, Serper API returns search results in a structured format, which lets AI agents extract relevant research papers efficiently.

A. Yes, it supports common research document formats, including PDFs and text-based files.