Introduction

Perform calling in giant language fashions (LLMs) has remodeled how AI brokers work together with exterior methods, APIs, or instruments, enabling structured decision-making primarily based on pure language prompts. Through the use of JSON schema-defined capabilities, these fashions can autonomously choose and execute exterior operations, providing new ranges of automation. This text will display how operate calling might be applied utilizing Mistral 7B, a state-of-the-art mannequin designed for instruction-following duties.

Studying Outcomes

- Perceive the position and sorts of AI brokers in generative AI.

- Learn the way operate calling enhances LLM capabilities utilizing JSON schemas.

- Arrange and cargo Mistral 7B mannequin for textual content technology.

- Implement operate calling in LLMs to execute exterior operations.

- Extract operate arguments and generate responses utilizing Mistral 7B.

- Execute real-time capabilities like climate queries with structured output.

- Increase AI agent performance throughout varied domains utilizing a number of instruments.

This text was printed as part of the Information Science Blogathon.

What are AI Brokers?

Within the scope of Generative AI (GenAI), AI brokers symbolize a major evolution in synthetic intelligence capabilities. These brokers use fashions, resembling giant language fashions (LLMs), to create content material, simulate interactions, and carry out advanced duties autonomously. The AI brokers improve their performance and applicability throughout varied domains, together with buyer assist, training, and medical area.

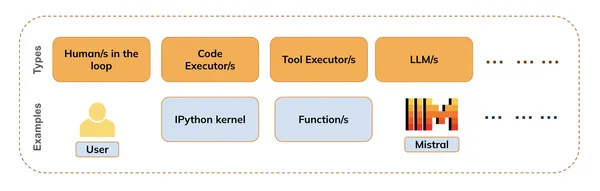

They are often of a number of sorts (as proven within the determine under) together with :

- People within the loop (e.g. for offering suggestions)

- Code executors (e.g. IPython kernel)

- Instrument Executors (e.g. Perform or API executions )

- Fashions (LLMs, VLMs, and so on)

Perform Calling is the mix of Code execution, Instrument execution, and Mannequin Inference i.e. whereas the LLMs deal with pure language understanding and technology, the Code Executor can execute any code snippets wanted to satisfy person requests.

We will additionally use the People within the loop, to get suggestions in the course of the course of, or when to terminate the method.

What’s Perform Calling in Giant Language Fashions?

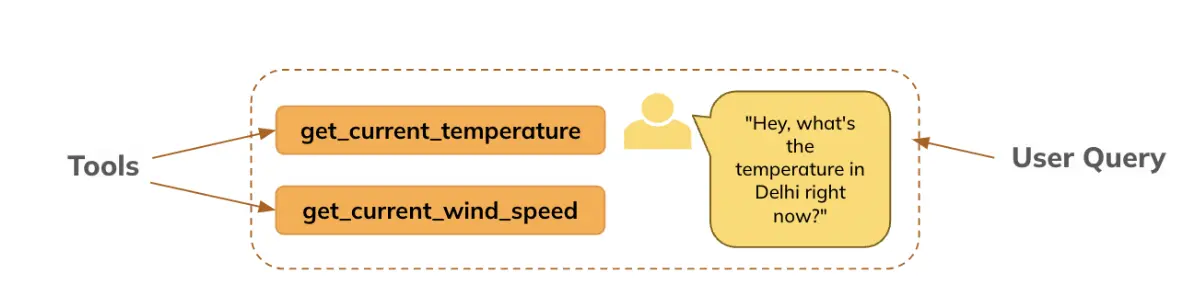

Builders outline capabilities utilizing JSON schemas (that are handed to the mannequin), and the mannequin generates the required arguments for these capabilities primarily based on person prompts. For instance: It may name climate APIs to offer real-time climate updates primarily based on person queries (We’ll see the same instance on this pocket book). With operate calling, LLMs can intelligently choose which capabilities or instruments to make use of in response to a person’s request. This functionality permits brokers to make autonomous selections about tips on how to greatest fulfill a job, enhancing their effectivity and responsiveness.

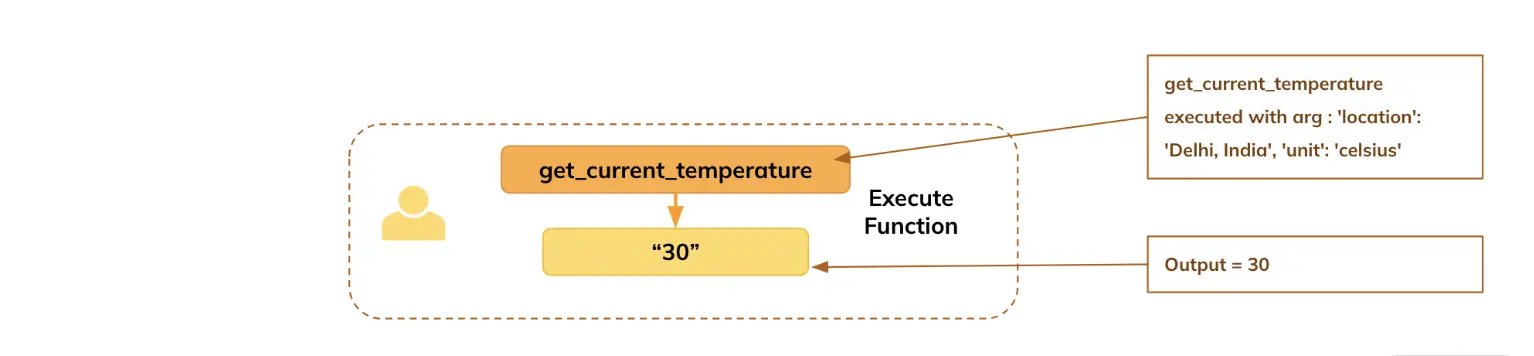

This text will display how we used the LLM (right here, Mistral) to generate arguments for the outlined operate, primarily based on the query requested by the person, particularly: The person asks in regards to the temperature in Delhi, the mannequin extracts the arguments, which the operate makes use of to get the real-time data (right here, we’ve set to return a default worth for demonstration functions), after which the LLM generates the reply in easy language for the person.

Constructing a Pipeline for Mistral 7B: Mannequin and Textual content Technology

Let’s import the required libraries and import the mannequin and tokenizer from huggingface for inference setup. The Mannequin is accessible right here.

Importing Crucial Libraries

from transformers import pipeline ## For sequential textual content technology

from transformers import AutoModelForCausalLM, AutoTokenizer # For main the mannequin and tokenizer from huggingface repository

import warnings

warnings.filterwarnings("ignore") ## To take away warning messages from outputOffering the huggingface mannequin repository identify for mistral 7B

model_name = "mistralai/Mistral-7B-Instruct-v0.3"Downloading the Mannequin and Tokenizer

- Since this LLM is a gated mannequin, it’ll require you to enroll on huggingface and settle for their phrases and situations first. After signing up, you possibly can observe the directions on this web page to generate your person entry token to obtain this mannequin in your machine.

- After producing the token by following the above-mentioned steps, go the huggingface token (in hf_token) for loading the mannequin.

mannequin = AutoModelForCausalLM.from_pretrained(model_name, token = hf_token, device_map='auto')

tokenizer = AutoTokenizer.from_pretrained(model_name, token = hf_token)Implementing Perform Calling with Mistral 7B

Within the quickly evolving world of AI, implementing operate calling with Mistral 7B empowers builders to create subtle brokers able to seamlessly interacting with exterior methods and delivering exact, context-aware responses.

Step 1 : Specifying instruments (operate) and question (preliminary immediate)

Right here, we’re defining the instruments (operate/s) whose data the mannequin can have entry to, for producing the operate arguments primarily based on the person question.

Instrument is outlined under:

def get_current_temperature(location: str, unit: str) -> float:

"""

Get the present temperature at a location.

Args:

location: The placement to get the temperature for, within the format "Metropolis, Nation".

unit: The unit to return the temperature in. (selections: ["celsius", "fahrenheit"])

Returns:

The present temperature on the specified location within the specified items, as a float.

"""

return 30.0 if unit == "celsius" else 86.0 ## We're setting a default output only for demonstration goal. In actual life it will be a working operateThe immediate template for Mistral must be within the particular format under for Mistral.

Question (the immediate) to be handed to the mannequin

messages = [

{"role": "system", "content": "You are a bot that responds to weather queries. You should reply with the unit used in the queried location."},

{"role": "user", "content": "Hey, what's the temperature in Delhi right now?"}

]Step 2: Mannequin Generates Perform Arguments if Relevant

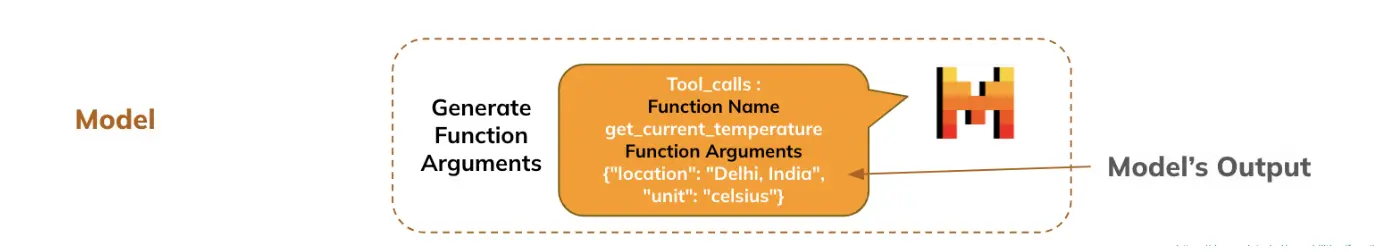

Total, the person’s question together with the details about the accessible capabilities is handed to the LLM, primarily based on which the LLM extracts the arguments from the person’s question for the operate to be executed.

- Making use of the precise chat template for mistral operate calling

- The mannequin generates the response which comprises which operate and which arguments must be specified.

- The LLM chooses which operate to execute and extracts the arguments from the pure language offered by the person.

inputs = tokenizer.apply_chat_template(

messages, # Passing the preliminary immediate or dialog context as an inventory of messages.

instruments=[get_current_temperature], # Specifying the instruments (capabilities) accessible to be used in the course of the dialog. These might be APIs or helper capabilities for duties like fetching temperature or wind pace.

add_generation_prompt=True, # Whether or not so as to add a system technology immediate to information the mannequin in producing applicable responses primarily based on the instruments or enter.

return_dict=True, # Return the leads to dictionary format, which permits simpler entry to tokenized knowledge, inputs, and different outputs.

return_tensors="pt" # Specifies that the output must be returned as PyTorch tensors. That is helpful in the event you're working with fashions in a PyTorch-based atmosphere.

)

inputs = {ok: v.to(mannequin.gadget) for ok, v in inputs.gadgets()} # Strikes all of the enter tensors to the identical gadget (CPU/GPU) because the mannequin.

outputs = mannequin.generate(**inputs, max_new_tokens=128)

response = tokenizer.decode(outputs[0][len(inputs["input_ids"][0]):], skip_special_tokens=True)# Decodes the mannequin's output tokens again into human-readable textual content.

print(response)Output : [{“name”: “get_current_temperature”, “arguments”: {“location”: “Delhi, India”, “unit”: “celsius”}}]

Step 3:Producing a Distinctive Instrument Name ID (Mistral-Particular)

It’s used to uniquely determine and match software calls with their corresponding responses, guaranteeing consistency and error dealing with in advanced interactions with exterior instruments

import json

import random

import string

import reGenerate a random tool_call_id

It’s used to uniquely determine and match software calls with their corresponding responses, guaranteeing consistency and error dealing with in advanced interactions with exterior instruments.

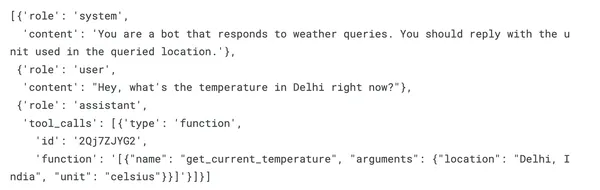

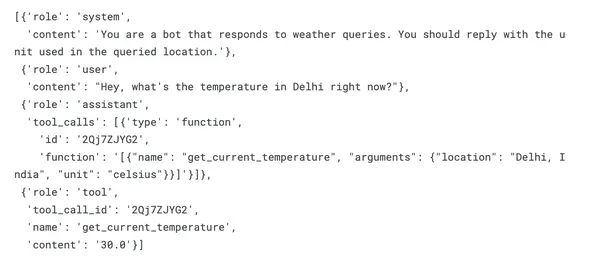

tool_call_id = ''.be a part of(random.selections(string.ascii_letters + string.digits, ok=9))Append the software name to the dialog

messages.append({"position": "assistant", "tool_calls": [{"type": "function", "id": tool_call_id, "function": response}]})print(messages)Output :

Step 4: Parsing Response in JSON Format

attempt :

tool_call = json.masses(response)[0]

besides :

# Step 1: Extract the JSON-like half utilizing regex

json_part = re.search(r'[.*]', response, re.DOTALL).group(0)

# Step 2: Convert it to an inventory of dictionaries

tool_call = json.masses(json_part)[0]

tool_callOutput : {‘identify’: ‘get_current_temperature’, ‘arguments’: {‘location’: ‘Delhi, India’, ‘unit’: ‘celsius’}}

[Note] : In some instances, the mannequin could produce some texts as nicely alongwith the operate data and arguments. The ‘besides’ block takes care of extracting the precise syntax from the output

Step 5: Executing Capabilities and Acquiring Outcomes

Based mostly on the arguments generated by the mannequin, you go them to the respective operate to execute and procure the outcomes.

function_name = tool_call["name"] # Extracting the identify of the software (operate) from the tool_call dictionary.

arguments = tool_call["arguments"] # Extracting the arguments for the operate from the tool_call dictionary.

temperature = get_current_temperature(**arguments) # Calling the "get_current_temperature" operate with the extracted arguments.

messages.append({"position": "software", "tool_call_id": tool_call_id, "identify": "get_current_temperature", "content material": str(temperature)})Step 6: Producing the Remaining Reply Based mostly on Perform Output

## Now this listing comprises all the data : question and performance particulars, operate execution particulars and the output of the operate

print(messages)Output

Making ready the immediate for passing complete data to the mannequin

inputs = tokenizer.apply_chat_template(

messages,

add_generation_prompt=True,

return_dict=True,

return_tensors="pt"

)

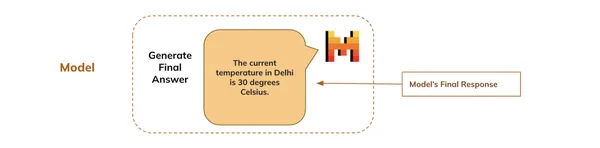

inputs = {ok: v.to(mannequin.gadget) for ok, v in inputs.gadgets()}Mannequin Generates Remaining Reply

Lastly, the mannequin generates the ultimate response primarily based on your entire dialog that begins with the person’s question and reveals it to the person.

- inputs : Unpacks the enter dictionary, which comprises tokenized knowledge the mannequin must generate textual content.

- max_new_tokens=128: Limits the generated response to a most of 128 new tokens, stopping the mannequin from producing excessively lengthy responses

outputs = mannequin.generate(**inputs, max_new_tokens=128)

final_response = tokenizer.decode(outputs[0][len(inputs["input_ids"][0]):],skip_special_tokens=True)

## Remaining response

print(final_response)Output: The present temperature in Delhi is 30 levels Celsius.

Conclusion

We constructed our first agent that may inform us real-time temperature statistics throughout the globe! In fact, we used a random temperature as a default worth, however you possibly can join it to climate APIs that fetch real-time knowledge.

Technically talking, primarily based on the pure language question by the person, we had been in a position to get the required arguments from the LLM to execute the operate, get the outcomes out, after which generate a pure language response by the LLM.

What if we needed to know the opposite elements like wind pace, humidity, and UV index? : We simply must outline the capabilities for these elements and go them within the instruments argument of the chat template. This manner, we will construct a complete Climate Agent that has entry to real-time climate data.

Key Takeaways

- AI brokers leverage LLMs to carry out duties autonomously throughout numerous fields.

- Integrating operate calling with LLMs allows structured decision-making and automation.

- Mistral 7B is an efficient mannequin for implementing operate calling in real-world functions.

- Builders can outline capabilities utilizing JSON schemas, permitting LLMs to generate obligatory arguments effectively.

- AI brokers can fetch real-time data, resembling climate updates, enhancing person interactions.

- You possibly can simply add new capabilities to develop the capabilities of AI brokers throughout varied domains.

Continuously Requested Questions

A. Perform calling in LLMs permits the mannequin to execute predefined capabilities primarily based on person prompts, enabling structured interactions with exterior methods or APIs.

A. Mistral 7B excels at instruction-following duties and may autonomously generate operate arguments, making it appropriate for functions that require real-time knowledge retrieval.

A. JSON schemas outline the construction of capabilities utilized by LLMs, permitting the fashions to grasp and generate obligatory arguments for these capabilities primarily based on person enter.

A. You possibly can design AI brokers to deal with varied functionalities by defining a number of capabilities and integrating them into the agent’s toolset.

The media proven on this article just isn’t owned by Analytics Vidhya and is used on the Creator’s discretion.