In today’s AI market, you’ll find a wide range of large language models (LLMs), which come in several varieties (open-source and closed-source) and offer a range of different capabilities.

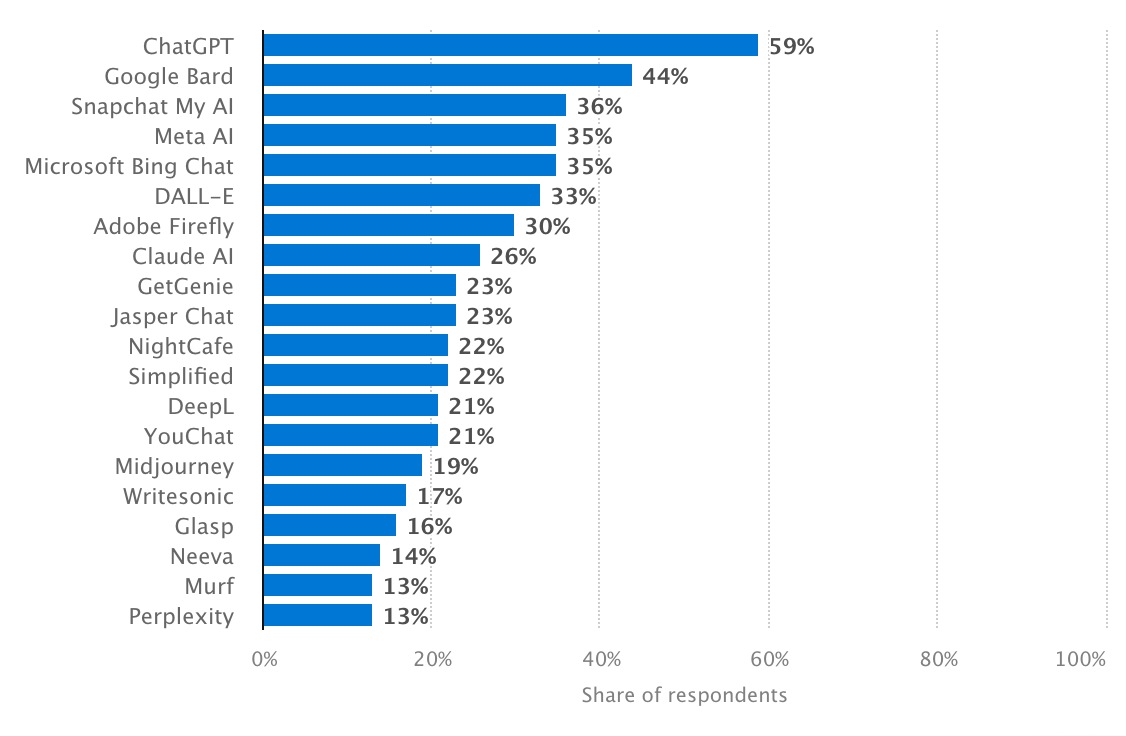

Some of these models (e.g., ChatGPT, Gemini, Claude, Llama, and Mistral) already stand well ahead of the rest because they solve many tasks more accurately and faster.

Most Popular AI Tools, Statista

But even these top-tier models, as powerful as they are, aren’t always a perfect fit out of the box. Most organizations soon find that broad, generic LLMs don’t pick up their industry terminology, in-house working methods, or brand voice. That’s where fine-tuning enters the picture.

What Is Fine-Tuning and Why It Matters in 2025

Fine-tuning refers to the practice of continuing training on a pre-trained LLM using a small, specialized dataset related to a task, domain, or organization.

Fine-tuning should be distinguished from training a model from scratch: it only involves teaching the model a specific area or making it act according to specific standards and intentions.

Why Pre-Trained Models Are Not Always Enough

Pre-trained language models are generally built to handle a wide variety of tasks (content creation, translation, summarization, question answering, and so on), but they sometimes gloss over the details.

Since these models learn from public internet data, they may misunderstand professional language, such as legal terms, financial statements, or medical records.

Of course, their answers may sound fine, but to professionals in the field they can appear awkward, confusing, or inappropriate.

Fine-tuning helps fix this. For example, a hospital can fine-tune a model to understand medical terms and the way practitioners communicate.

Or, a logistics company can train it to know the ins and outs of shipping and inventory. With fine-tuning, the model becomes more factual, uses the correct vocabulary, and fits a niche area.

Advantages of Fine-Tuning LLMs for Businesses

Fine-tuning large language models helps businesses get more value out of AI by making it do what they want it to do.

First of all, fine-tuning makes a model speak your company’s language. Every business has its own tone, style, and approach: some are formal and technical, others are friendly and warm. Supervised fine-tuning helps the model pick up your style and use your preferred expressions.

Moreover, fine-tuning strongly improves accuracy in specialized areas. For instance, the OpenAI o1 model had achieved the highest benchmark score of 94.8% for answering mathematics problems as of March 2024.

However, as a generic model, it might not fully understand legal terms, medical wording, or financial statements.

But if a model is tuned on information drawn specifically from an industry, it learns to process and respond to advanced or technical questions much better.

Privacy is another reason businesses choose to fine-tune. Instead of making sensitive information available to a third-party service, businesses can tune and run the model on their own networks, keeping information safe and in line with data protection guidelines.

Finally, fine-tuning large language models can save money over time. Although it takes some time and effort at first, a fine-tuned model gets the job done more competently and faster.

It reduces errors, takes fewer tries, and can even be cheaper than making multiple calls to a paid API for a general model.
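To make the cost argument concrete, here is a minimal sketch of a break-even calculation. The function name and every figure in it are hypothetical and chosen only for illustration; real prices depend on your provider, model size, and traffic.

```python
import math
from typing import Optional


def break_even_requests(upfront_cost: float,
                        api_cost_per_request: float,
                        tuned_cost_per_request: float) -> Optional[int]:
    """Return how many requests it takes for a fine-tuned model to pay off.

    Returns None when the fine-tuned model is not cheaper per request,
    because the upfront investment is then never recovered.
    """
    saving_per_request = api_cost_per_request - tuned_cost_per_request
    if saving_per_request <= 0:
        return None
    return math.ceil(upfront_cost / saving_per_request)


# Hypothetical figures, for illustration only.
requests_needed = break_even_requests(
    upfront_cost=5_000.0,          # one-off fine-tuning and setup cost
    api_cost_per_request=0.02,     # paid API for a general model
    tuned_cost_per_request=0.005,  # serving your own fine-tuned model
)
print(f"Break-even after about {requests_needed:,} requests")
```

If the per-request saving is small or your traffic is low, the break-even point may be far away, which is one reason to check the numbers before committing.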

Top Fine-Tuning Methods in 2025

Fine-tuning in 2025 has become more accessible and easier than before. Organizations no longer need huge budgets or extensive machine learning experience to refine a model for their use.

Now there are several well-tested approaches, from full retraining to light-touch tuning, which let organizations pick the best fit for their goals, data, and infrastructure.

Full Fine-Tuning – The Most Effective Method

Full fine-tuning is defined by IBM as an approach that uses the pre-existing knowledge of the base model as a starting point to adjust the model according to a smaller, task-specific dataset.

The full fine-tuning process updates all of the parameter weights of a model whose weights have already been determined through prior training, in order to adapt the model to a task.

LoRA and PEFT

If you want something faster and cheaper, PEFT (Parameter-Efficient Fine-Tuning) methods such as LoRA (Low-Rank Adaptation) are good choices.

These methods train only a small portion of the parameters instead of the whole model. They work well even with less task-specific data and fewer compute resources, which makes them a common choice for startups and medium-sized companies.
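To see why this is cheaper, consider how LoRA works on a single weight matrix: the original matrix is frozen, and the update is learned as the product of two much smaller matrices of rank r. The sketch below only counts parameters; the layer size and rank are assumptions picked for illustration, not recommendations.

```python
def full_trainable_params(d_out: int, d_in: int) -> int:
    """Parameters updated when the whole d_out x d_in weight matrix is trained."""
    return d_out * d_in


def lora_trainable_params(d_out: int, d_in: int, rank: int) -> int:
    """Parameters in the two low-rank factors: B (d_out x rank) and A (rank x d_in)."""
    return rank * (d_out + d_in)


# Assumed sizes for a single layer, for illustration only.
d_out, d_in, rank = 4096, 4096, 8

full = full_trainable_params(d_out, d_in)
lora = lora_trainable_params(d_out, d_in, rank)

print(f"Full fine-tuning: {full:,} trainable parameters")
print(f"LoRA (rank {rank}): {lora:,} trainable parameters")
print(f"Share of the full matrix: {lora / full:.2%}")
```

The same ratio applies to every layer that gets a LoRA update, which is where the savings in memory and compute come from.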

Instruction Fine-Tuning

Another useful approach is instruction fine-tuning. It makes the model better at following instructions and giving concise, practical responses. It’s quite helpful for AI assistants that are used to provide support, training, or advice.

RLHF (Reinforcement Learning from Human Feedback)

RLHF (Reinforcement Learning from Human Feedback) is intended for demanding use cases. It trains the model by exposing it to examples of good and poor answers and rewarding the best responses.

RLHF is more advanced and complex, but well suited to producing high-quality, reliable AI assistants such as law clerks or expert advisors.
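The idea of rewarding the better answer can be sketched with a pairwise preference loss, one common formulation used when training a reward model from human comparisons. This article does not prescribe a specific loss, so treat the function below and its example scores as assumptions for illustration.

```python
import math


def preference_loss(reward_chosen: float, reward_rejected: float) -> float:
    """Pairwise loss: -log(sigmoid(reward_chosen - reward_rejected)).

    The loss is small when the answer humans preferred gets the higher
    score, and large when the rejected answer is scored higher.
    """
    margin = reward_chosen - reward_rejected
    # -log(sigmoid(m)) equals log(1 + exp(-m)); branch to avoid overflow.
    if margin >= 0:
        return math.log1p(math.exp(-margin))
    return -margin + math.log1p(math.exp(margin))


# Hypothetical reward scores for a good and a poor answer.
print(preference_loss(2.0, 0.0))  # preferred answer scored higher: low loss
print(preference_loss(0.0, 2.0))  # preferred answer scored lower: high loss
```

In a full RLHF pipeline, a reward model trained this way is then used to steer the language model itself, which is the part that makes the method more complex to run.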

Prompt-Tuning and Adapters

If you simply need an easy and fast way to adapt your model, you can use prompt tuning or adapters. These methods don’t touch the whole model. Instead, they use small add-on modules or learned prompts to guide the model’s behavior. They’re fast, cheap, and easy to try out.

| Method | What It Does | Cost/Speed | Best For |
| --- | --- | --- | --- |
| Full Fine-Tuning | Trains the entire model on new data | High / Slow | Large-scale, high-performance needs |
| LoRA / PEFT | Tunes only select parameters | Low / Fast | Startups, resource-limited teams |
| Instruction Tuning | Improves response to user commands | Medium / Moderate | AI assistants, support bots |
| RLHF | Trains with human feedback and reward signals | High / Moderate | Expert-level, safe, reliable outputs |
| Prompt/Adapters | Adds small modules or prompts, no retraining | Very Low / Very Fast | Quick testing, cheap customization |

Top Fine-Tuning Methods in 2025 – At a Glance

What Do You Need to Fine-Tune a Large Language Model in 2025: Best Practices

Fine-tuning an LLM in 2025 is affordable even for companies without an ML engineering team. However, to achieve accurate and reliable results, it is important to approach the process correctly.

The first step is to choose the type of model: open-source or closed-source. Open models (e.g., LLaMA, Mistral) allow more: you can host them on your own servers, customize the model architecture, and manage the data.

Closed ones (like GPT or Claude) offer high power and quality, but work through APIs, i.e., full control isn’t available.

If data security and flexibility are critical to your company, open models are preferable. If speed of launch and minimal technical barriers matter most, it’s better to choose closed models.

Next, you need adequate training data, which means clean, well-organized examples from your domain, such as emails, support chats, documents, or other texts your company works with.

The better your data, the smarter and more useful the model will be after fine-tuning. Without it, the model might sound good but get things wrong or miss the point.
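As a rough illustration of what well-organized examples can look like, the sketch below turns question-and-answer pairs from support chats into JSON Lines records. The `prompt` and `completion` field names and the sample chats are assumptions; different fine-tuning toolkits expect different schemas, so check the one you use.

```python
import json


def to_training_record(question: str, answer: str) -> dict:
    """Build one training example, trimming stray whitespace."""
    return {"prompt": question.strip(), "completion": answer.strip()}


def to_jsonl(pairs) -> str:
    """Serialize (question, answer) pairs as one JSON object per line."""
    return "\n".join(
        json.dumps(to_training_record(q, a), ensure_ascii=False)
        for q, a in pairs
    )


# Hypothetical support chats, for illustration only.
support_chats = [
    ("  How do I reset my password? ", "Open Settings, then choose Reset password."),
    ("Can I change my delivery address?", "Yes, until the order has shipped."),
]

print(to_jsonl(support_chats))
```

Keeping one example per line makes the file easy to inspect, filter, and split into training and test sets later.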

Besides, you’ll also need the right tools and infrastructure. Some companies use cloud platforms such as AWS or Google Cloud, while others host everything locally for extra privacy. For managing and monitoring the training process, you may use tools such as Hugging Face or Weights & Biases.

Of course, none of this works without the right people. Fine-tuning usually involves a machine learning engineer (to train the model), a DevOps expert (to set up and run the systems), and a domain expert or business analyst (to explain what the model should learn). If you don’t already have this kind of team, building one from scratch can be expensive and slow.

That’s why many companies now work with outsourcing partners that specialize in custom AI software development. Outsourcing partners can take over the entire technical side, from selecting the model and preparing your data to training, testing, and deploying it.

Business Use Cases for Fine-Tuned LLMs

Fine-tuned models are not just smarter, they’re more suitable for real-world business use cases. When you train a model on your company’s data, it absorbs the essence of your business, which lets it generate valuable, accurate outputs instead of bland answers.

AI Customer Support Agents

Instead of having a generic chatbot, you can build a support agent familiar with your services, products, and policies. It can respond like a trained human agent, with the correct tone and up-to-date information.

Custom Virtual Assistants

A well-trained model can help with specific tasks such as processing orders, answering HR questions, scheduling interviews, or tracking shipments. These assistants learn from your internal documents and systems, so they know how things get done in your company.

Enterprise Knowledge Management

In large companies and enterprises, there are simply too many documents, manuals, and corporate policies to remember.

A fine-tuned LLM can read through all of them and give employees simple answers within seconds. It saves time and lets people find the information they need without digging through files or PDFs.

Domain-Specific Copilots (Legal, Medical, E-commerce)

Specialized copilots, among other applications, can assist professionals with their daily work:

- Lawyers get help reviewing contracts or summarizing legal cases.

- Doctors can use the model to draft notes or understand patient history faster.

- E-commerce teams can quickly create product descriptions, update catalogs, or analyze customer reviews.

Case Study: Smart Travel Guide

One of the best examples of a fine-tuned model is the Smart Travel Guide AI. It was fine-tuned to help travelers with personalized suggestions based on their preferences, location, and local events. Instead of offering generic suggestions, it creates customized routes and recommendations.

Challenges in Fine-Tuning LLMs

In general, fine-tuning an LLM is very useful, but it comes with some obstacles.

The first serious challenge is having enough data. You can only fine-tune if you have plenty of clean, structured, and valuable examples to train on.

If your dataset is unorganized, insufficient, or full of errors, the model won’t learn what you actually need. To put it differently: if you feed it garbage, you’ll get garbage, no matter how advanced the model.
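A first pass at catching the most obvious garbage can be automated. The sketch below drops empty, very short, and duplicate examples; the record layout, the `min_chars` threshold, and the sample rows are assumptions for illustration, and a real pipeline would usually add domain-specific checks on top.

```python
def clean_examples(examples, min_chars: int = 10):
    """Drop empty, too-short, and duplicate prompt/completion pairs."""
    seen = set()
    cleaned = []
    for example in examples:
        # Collapse runs of whitespace so near-identical rows compare equal.
        prompt = " ".join(example.get("prompt", "").split())
        completion = " ".join(example.get("completion", "").split())
        if len(prompt) < min_chars or len(completion) < min_chars:
            continue
        key = (prompt.lower(), completion.lower())
        if key in seen:
            continue
        seen.add(key)
        cleaned.append({"prompt": prompt, "completion": completion})
    return cleaned


# Hypothetical raw data, for illustration only.
raw = [
    {"prompt": "Where is my  order?", "completion": "You can track it under Orders."},
    {"prompt": "where is my order?", "completion": "You can track it under Orders."},
    {"prompt": "Refund policy?", "completion": ""},
]

cleaned = clean_examples(raw)
print(f"Kept {len(cleaned)} of {len(raw)} examples")
```

Checks like these do not make data valuable on their own, but they stop the most common problems from reaching training.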

Then, of course, there’s the cost of training and maintaining the model. Training uses a tremendous amount of computing power, especially with a large model.

But the expense doesn’t stop after training. You will also need to test it, revise it, and verify that it keeps working satisfactorily over the long term.

Another issue is overfitting. This is when the model learns your training data too thoroughly, and nothing else. It can give great answers on examples it saw during training, but fall apart when someone asks it a new or even slightly different question.
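One widely used guard against overfitting is to hold back part of your data as a validation set and stop training once the loss on it stops improving. The sketch below shows that early-stopping logic on its own; the `patience` value and the loss numbers are made up for illustration.

```python
def best_epoch(val_losses, patience: int = 2) -> int:
    """Return the index of the epoch with the lowest validation loss,
    stopping once the loss has failed to improve `patience` times in a row."""
    best_index = 0
    best_loss = float("inf")
    epochs_without_improvement = 0
    for index, loss in enumerate(val_losses):
        if loss < best_loss:
            best_loss = loss
            best_index = index
            epochs_without_improvement = 0
        else:
            epochs_without_improvement += 1
            if epochs_without_improvement >= patience:
                break
    return best_index


# Hypothetical validation losses: they improve, then start to rise
# while the model keeps memorizing the training data.
val_losses = [0.90, 0.70, 0.62, 0.65, 0.71, 0.80]
print(f"Keep the checkpoint from epoch {best_epoch(val_losses)}")
```

The key design choice is that the decision is based on data the model never trained on, which is what exposes memorization.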

Equally important are legal and ethical factors. If your model gives advice, holds sensitive data, or makes decisions, you need to be extra cautious.

You must make sure that it’s not biased, doesn’t produce harmful outputs, and adheres to privacy laws like GDPR or HIPAA.

How to Get Started with LLM Fine-Tuning

If you’re thinking about fine-tuning, the good news is you don’t have to jump in blindly. With the right approach, it can be a painless and highly rewarding process.

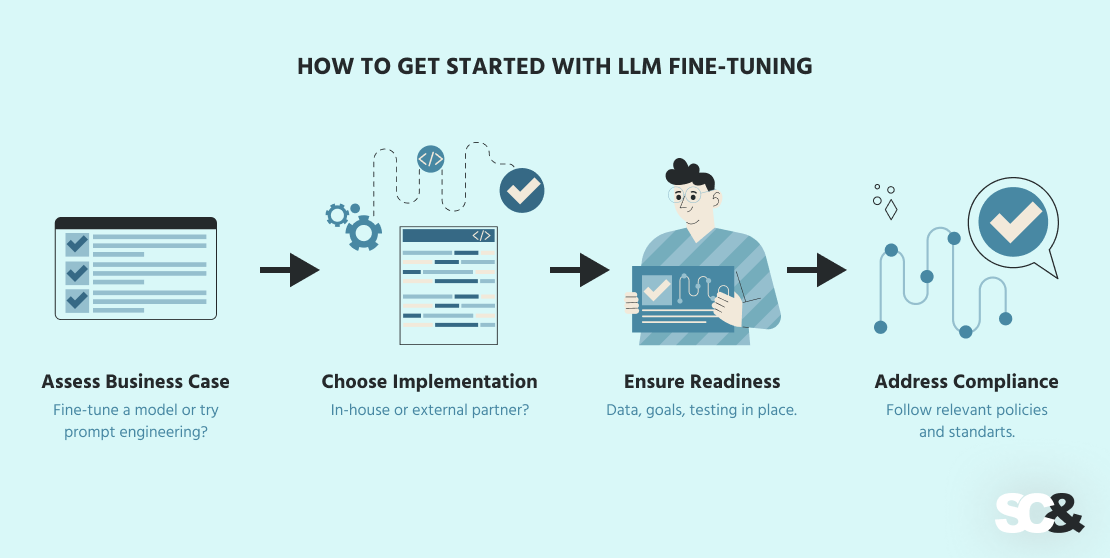

The first thing to do is to assess your business case. Ask yourself: Do you really need to fine-tune a model, or can prompt engineering (writing smarter, more detailed prompts) give you the results you want? For many simple tasks or domains, prompt engineering is cheaper and faster.

But if you’re dealing with industry-specific language, strict tone requirements, or private data, fine-tuning can offer a much better long-term solution.

Next, decide whether to run the project in-house or work with an external partner. Building your own AI team gives you full control, but it takes time, budget, and specialized skills.

Alternatively, an outsourcing partner, such as SCAND, can fully take over the technical side. They can help you pick the right model, prepare your data, fine-tune it, deploy it, and even help with prompt engineering.

Before getting started, make sure your company is ready. You’ll need enough clean data, clear goals for the model, and a way to test how well it works.
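A way to test the model can start very simply: a held-out set of questions with known answers and a score. The sketch below uses exact-match accuracy, which is a crude metric that suits short factual answers only. `stub_model` is a hypothetical stand-in for your real model call, and the test questions are invented for illustration.

```python
def exact_match_accuracy(model, test_set) -> float:
    """Share of test questions where the model's answer matches the
    expected answer, ignoring case and surrounding whitespace."""
    if not test_set:
        raise ValueError("test_set must not be empty")
    correct = sum(
        1
        for question, expected in test_set
        if model(question).strip().lower() == expected.strip().lower()
    )
    return correct / len(test_set)


def stub_model(question: str) -> str:
    """Hypothetical stand-in for a call to your fine-tuned model."""
    canned = {
        "What is the return window?": "30 Days",
        "Do you ship abroad?": "yes ",
        "Which carrier do you use?": "DHL",
        "Is gift wrapping free?": "Yes",
    }
    return canned.get(question, "I don't know")


# Hypothetical held-out questions that were not used for training.
test_set = [
    ("What is the return window?", "30 days"),
    ("Do you ship abroad?", "Yes"),
    ("Which carrier do you use?", "DHL"),
    ("Is gift wrapping free?", "No"),
]

print(f"Exact-match accuracy: {exact_match_accuracy(stub_model, test_set):.0%}")
```

Running the same test set against the base model and the fine-tuned one gives you a before-and-after comparison tied to your stated goals.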

Finally, don’t forget about security and compliance. If your model will work with confidential, legal, or medical data, it must adhere to all necessary policies.

How SCAND Can Help

If you don’t have the time or technical team to do it in-house, SCAND can manage the entire process.

We’ll help you choose the right AI model for your business (open-source like LLaMA or Mistral, or closed-source like GPT or Claude). We’ll then clean and prep your data so it’s ready for training.

Then we do the rest: fine-tuning the model, deploying it in the cloud or on your servers, and monitoring model performance to make sure it communicates well and works well.

If you require extra security, we also provide local hosting to secure your data and comply with regulations, or you can request LLM development services to get an AI model made exclusively for you.

FAQ

Q: What exactly is fine-tuning an LLM?

Fine-tuning involves training a pre-trained language model on your own data so that it better captures your specific industry, language, or brand voice.

Q: Can’t I just leave a pre-trained model as it is?

You can, but pre-trained models are generic and might not handle your niche topics or tone very well. Fine-tuning calibrates the model for precision and relevance to your specific needs.

Q: How much data is needed to fine-tune a model?

That varies with your needs and model size. More high-quality, well-labeled data generally means better results.

Q: Is fine-tuning expensive?

It can be, especially for large models, and it requires upkeep over time. But often it pays for itself through reduced reliance on costly API calls and an improved user experience.
