When building a software-intensive system, a key part of creating a secure and robust solution is developing a cyber threat model. This is a model that expresses who might be interested in attacking your system, what effects they might want to achieve, when and where attacks could manifest, and how attackers might go about accessing the system. Threat models are important because they guide requirements, system design, and operational choices. Effects can include, for example, compromise of confidential information, modification of information contained in the system, and disruption of operations. Attackers pursue these kinds of effects for many purposes, ranging from espionage to ransomware.

This blog post focuses on a method threat modelers can use to make credible claims about attacks the system could face and to ground those claims in observations of adversary tactics, techniques, and procedures (TTPs).

Brainstorming, subject matter expertise, and operational experience can go a long way in developing a list of relevant threat scenarios. During initial threat scenario generation for a hypothetical software system, it might be possible to imagine, What if attackers steal account credentials and mask their movement by injecting false or bad data into the user monitoring system? The harder task, where the perspective of threat modelers is essential, is substantiating that scenario with known patterns of attack or even specific TTPs. These could be informed by potential threat intentions based on the operational role of the system.

Developing realistic and relevant mitigation strategies for the identified TTPs is an important contributor to system requirements formulation, which is one of the goals of threat modeling.

This SEI blog post outlines a method, powered by model-based systems engineering (MBSE), for substantiating threat scenarios and mitigations by linking them to industry-recognized attack patterns.

In his memo Directing Modern Software Acquisition to Maximize Lethality, Secretary of Defense Pete Hegseth wrote, “Software is at the core of every weapon and supporting system we field to remain the strongest, most lethal fighting force in the world.” While understanding cyber threats to these complex software-intensive systems is important, identifying threats and their mitigations early in the design of a system helps reduce the cost to fix them. In response to Executive Order (EO) 14028, Improving the Nation’s Cybersecurity, the National Institute of Standards and Technology (NIST) recommended 11 practices for software verification. Threat modeling is at the top of the list.

Threat Modeling Goals: Four Key Questions

Threat modeling guides requirements specification and early design choices to make a system robust against attacks and weaknesses. Threat modeling can help software developers and cybersecurity professionals know what types of defenses, mitigation strategies, and controls to put in place.

Threat modelers can frame the process of threat modeling around answers to four key questions (adapted from Adam Shostack):

- What are we building?

- What can go wrong?

- What should we do about those wrongs?

- Was the analysis sufficient?

What Are We Building?

The foundation of threat modeling is a model of the system focused on its potential interactions with threats. A model is a graphical, mathematical, logical, or physical representation that abstracts reality to address a particular set of concerns while omitting details not relevant to the concerns of the model builder. Many methodologies provide guidance on how to construct threat models for different types of systems and use cases. For already built systems where the design and implementation are known and where the principal concerns relate to faults and errors (rather than acts by intentional adversaries), techniques such as fault tree analysis may be more appropriate. These techniques generally assume that desired and undesired states are known and can be characterized. Similarly, kill chain analysis can be helpful for understanding the full end-to-end execution of a cyber attack.

However, existing high-level systems engineering models may not be suited to identifying the specific vulnerabilities used to conduct an attack. These systems engineering models can create useful context, but additional modeling is necessary to address threats.

In this post I use the Unified Architecture Framework (UAF) to guide our modeling of the system. For larger systems employing MBSE, the threat model can build on DoDAF, UAF, or other architectural framework models. The common thread among all of these models is that threat modeling is enabled by models of information interactions and flows among components. A common model also provides benefits in coordination across large teams. When multiple groups are working on and deriving value from a unified model, the up-front costs can be more manageable.

There are many notations for modeling data flows or interactions. In this post we explore the use of an MBSE tool paired with a standard architectural framework to create models with benefits beyond those of simpler diagramming tools or drawings. For existing systems without a model, it is still possible to use MBSE, and this can be done incrementally. For instance, if new features are being added to an existing system, it may be necessary to model just enough of the system to capture its interactions with the new information flows or data stores and then create threat models for this subset of new elements.
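The incremental scoping step can be sketched in a few lines. This is a minimal illustration, not part of the MBSE tooling: it assumes the existing system's flows are available as simple `(source, target, data)` records, and the `DataFlow` type, the `threat_model_scope` helper, and the element names are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DataFlow:
    """A directed information flow between two model elements (hypothetical type)."""
    source: str
    target: str
    data: str


def threat_model_scope(flows: list[DataFlow], new_elements: set[str]) -> list[DataFlow]:
    """Return the flows that touch at least one new element.

    Only these flows need to be added to the model before threat
    modeling the new feature.
    """
    return [f for f in flows if f.source in new_elements or f.target in new_elements]


def elements_in_scope(flows: list[DataFlow]) -> set[str]:
    """Collect every element that appears at either end of the scoped flows."""
    return {name for f in flows for name in (f.source, f.target)}


# Hypothetical existing system gaining a new log collector.
flows = [
    DataFlow("Web App", "Database", "orders"),
    DataFlow("Web App", "Log Collector", "audit events"),
    DataFlow("Log Collector", "Monitoring Dashboard", "alerts"),
]
scope = threat_model_scope(flows, {"Log Collector"})
print(sorted(elements_in_scope(scope)))
# ['Log Collector', 'Monitoring Dashboard', 'Web App']
```

The database and the flow into it stay out of scope because the new feature never touches them, which is what keeps the incremental model small.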

What Can Go Wrong?

Threat modeling is similar to systems modeling in that there are many frameworks, tools, and methodologies to help guide development of the model and identify potential problem areas. STRIDE is a threat identification taxonomy that is a useful part of modern threat modeling methods; it was originally developed at Microsoft in 1999. Previous SEI work extended UAF with a profile that allows us to model the results of the threat identification step that uses STRIDE. We continue that approach in this blog post.

STRIDE itself is an acronym standing for spoofing, tampering, repudiation, information disclosure, denial of service, and elevation of privilege. This mnemonic helps modelers categorize the impacts of threats on different data stores and data flows. Scandariato et al., in their paper A descriptive study of Microsoft’s threat modeling technique, have also shown that STRIDE is adaptable to multiple levels of abstraction: multiple teams modeling the same system did so with data flow diagrams of varying size and composition. When working on new systems or a high-level architecture, a threat modeler may not have all the details needed to take advantage of some more in-depth threat modeling approaches. STRIDE’s tolerance for such incomplete information is one of its benefits.
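For readers who want to tag model elements with STRIDE categories programmatically, a small enumeration is enough. This is a sketch: pairing each category with the security property it violates follows the commonly cited Microsoft description of STRIDE and is an addition, not something the SEI profile defines; `category_for` is a hypothetical helper.

```python
from enum import Enum


class Stride(Enum):
    """STRIDE threat categories, each paired with the security property it violates."""
    SPOOFING = "authentication"
    TAMPERING = "integrity"
    REPUDIATION = "non-repudiation"
    INFORMATION_DISCLOSURE = "confidentiality"
    DENIAL_OF_SERVICE = "availability"
    ELEVATION_OF_PRIVILEGE = "authorization"


def category_for(violated_property: str) -> Stride:
    """Look up the STRIDE category from the property a threat would violate."""
    return Stride(violated_property)  # raises ValueError for unknown properties


# The first letters of the members spell the mnemonic.
print("".join(member.name[0] for member in Stride))  # STRIDE
print(category_for("integrity").name)  # TAMPERING
```

For example, injecting falsified data into a monitoring system violates integrity, so the lookup places it under Tampering.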

In addition to the taxonomic structure provided by STRIDE, a standard format for capturing threat scenarios makes analysis easier. This format brings together the elements from the systems model, where we have identified assets and data flows; the STRIDE method for identifying threat types; and the identification of potential classes of threat actors who might have the intent and means to create consequences. Threat actors can range from insider threats to nation-state actors and advanced persistent threats. The following template shows each of these elements in this standard format and contains all of the essential details of a threat scenario.

An [ACTOR] performs an [ACTION] to [ATTACK] an [ASSET] to achieve an [EFFECT] and/or [OBJECTIVE].

ACTOR | The person or group behind the threat scenario

ACTION | A potential occurrence of an event that might damage an asset or goal of a strategic vision

ATTACK | An action taken that uses one or more vulnerabilities to realize a threat to compromise or damage an asset or circumvent a strategic goal

ASSET | A resource, person, or process that has value

EFFECT | The desired or undesired consequence

OBJECTIVE | The threat actor’s motivation or objective for conducting the attack
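Capturing scenarios as structured records rather than free text makes the template enforceable. The sketch below is a hypothetical `ThreatScenario` type, not part of the SEI profile: it rejects scenarios missing a required element and renders the template sentence. It assumes each field already carries its own article (for example "An insider threat") and treats OBJECTIVE as optional because the template reads "[EFFECT] and/or [OBJECTIVE]".

```python
from dataclasses import dataclass, fields


@dataclass(frozen=True)
class ThreatScenario:
    """One threat scenario captured in the standard template (hypothetical type)."""
    actor: str
    action: str
    attack: str
    asset: str
    effect: str
    objective: str = ""  # optional: the template reads "[EFFECT] and/or [OBJECTIVE]"

    def __post_init__(self) -> None:
        # Every element except OBJECTIVE is required by the template.
        for f in fields(self):
            if f.name != "objective" and not getattr(self, f.name).strip():
                raise ValueError(f"missing template element: {f.name.upper()}")

    def render(self) -> str:
        """Fill in the template sentence."""
        outcome = self.effect
        if self.objective:
            outcome += f" and/or {self.objective}"
        return (f"{self.actor} performs {self.action} to {self.attack} "
                f"{self.asset} to achieve {outcome}.")


scenario = ThreatScenario(
    actor="An insider threat",
    action="credential spoofing",
    attack="inject falsified data into",
    asset="the monitoring system",
    effect="disrupted operations",
    objective="concealment of a parallel attack",
)
print(scenario.render())
```

Rejecting incomplete scenarios at creation time keeps every record traceable to an actor, an asset, and an effect, which the later mapping steps rely on.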

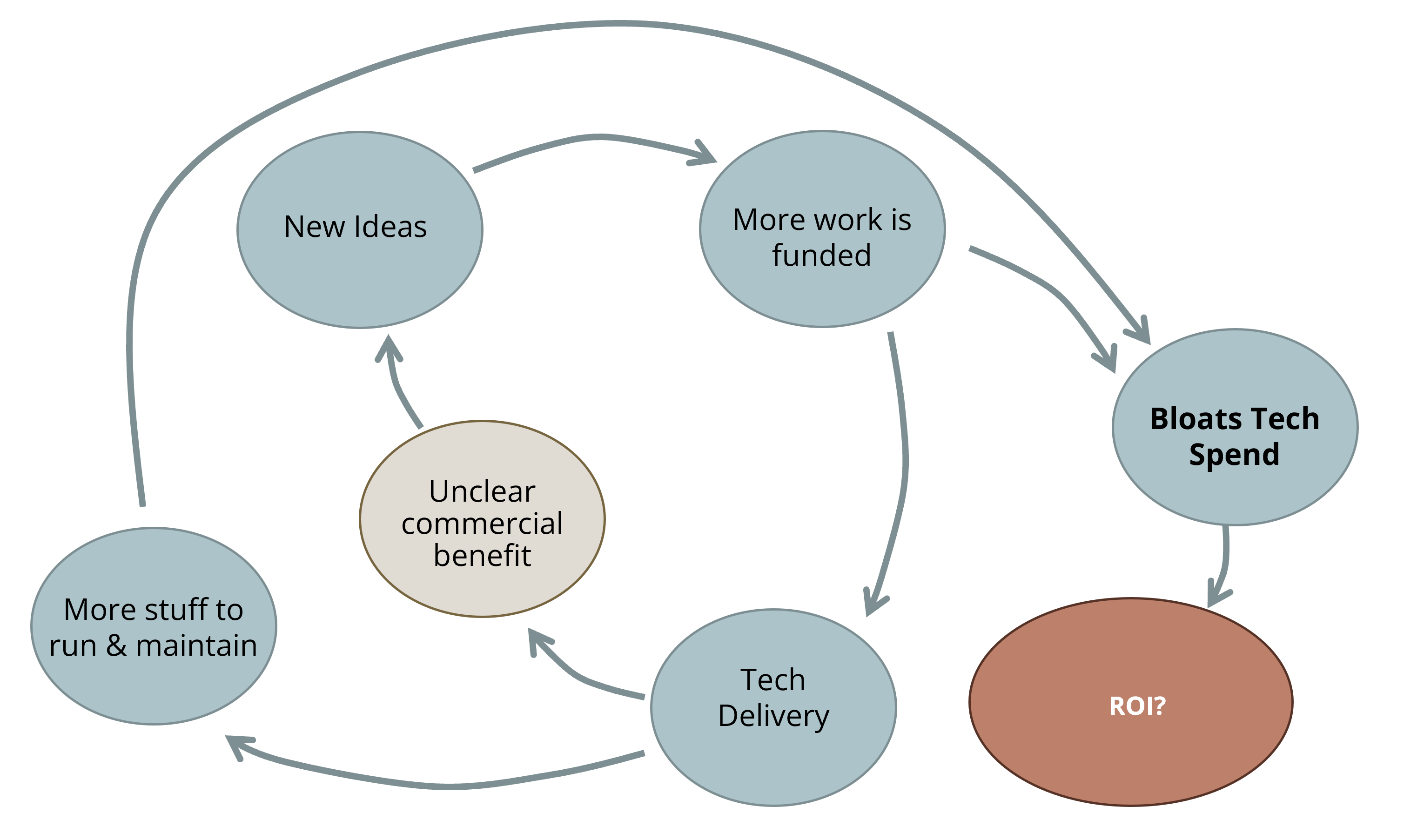

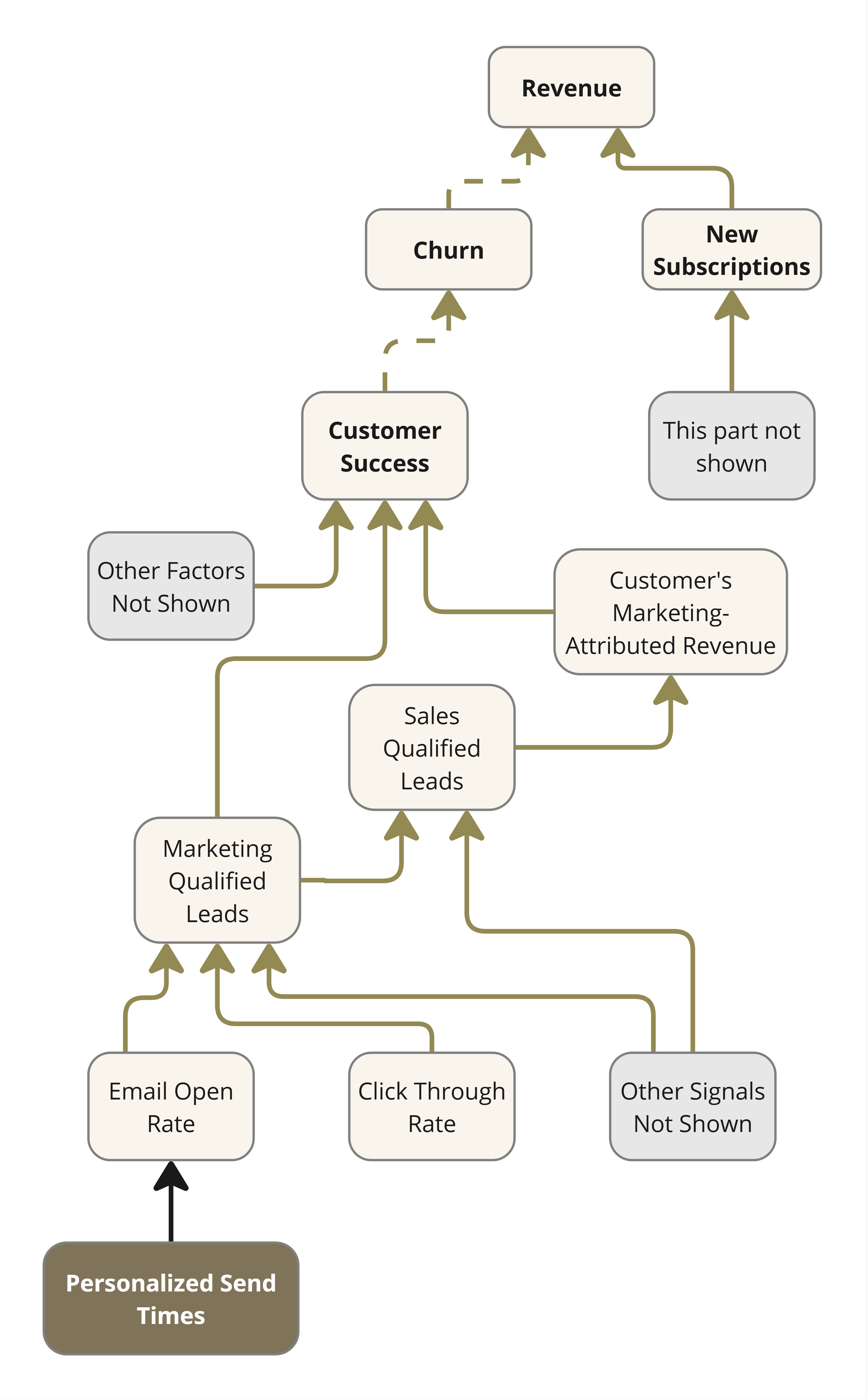

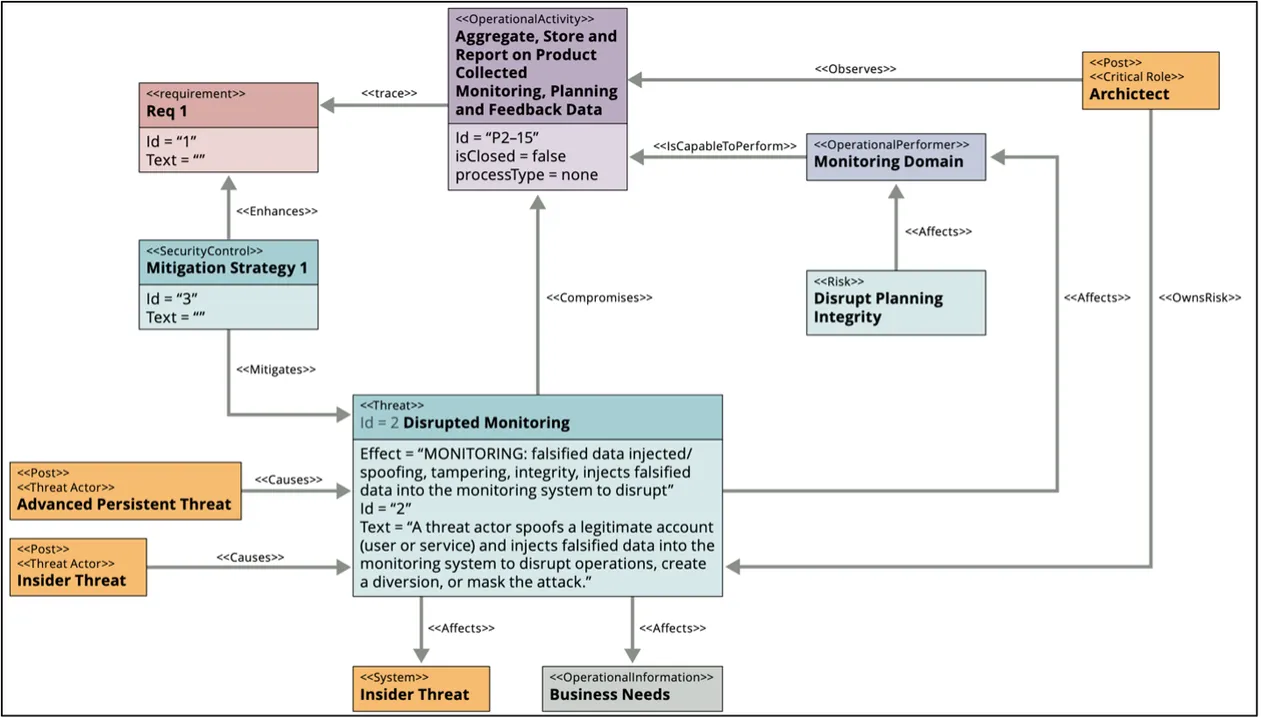

With formatted threat scenarios in hand, we can start to integrate the elements of the scenarios into our system model. In this model, the threat actor elements describe the actors involved in a threat scenario, and the threat element describes the threat scenario, objective, and effect. From these two elements, we can create relations within the model to the specific elements affected by or otherwise related to the threat scenario. Figure 1 shows how the different threat modeling pieces interact with portions of the UAF framework.

Figure 1: Threat Modeling Profile

For the diagram elements highlighted in purple, our team has extended the standard UAF with new elements (<>, <>, <> and <> blocks) as well as new relationships between them (<>, <> and <>). These additions capture the effects of a threat scenario in our model. Capturing these scenarios helps answer the question, What can go wrong?

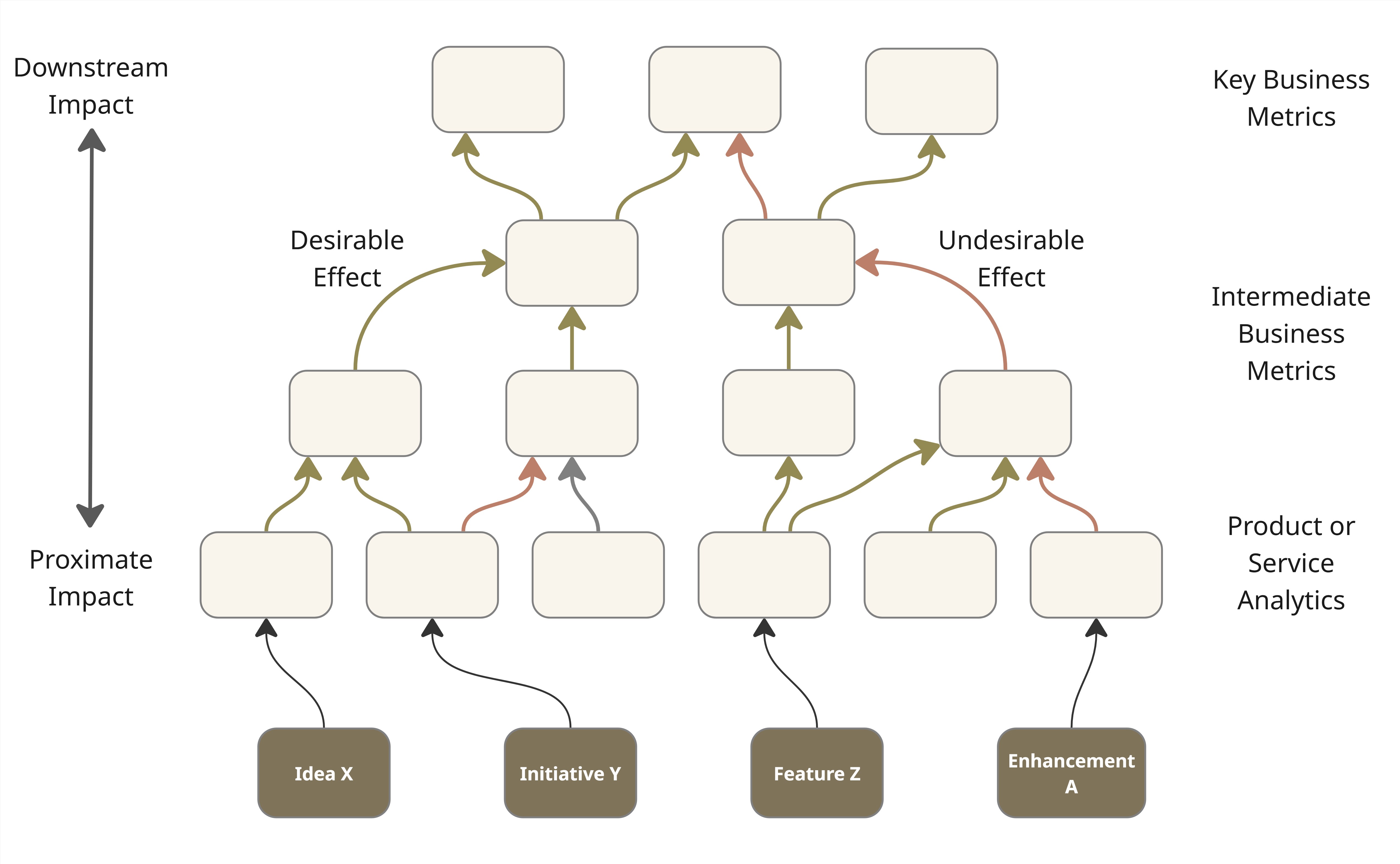

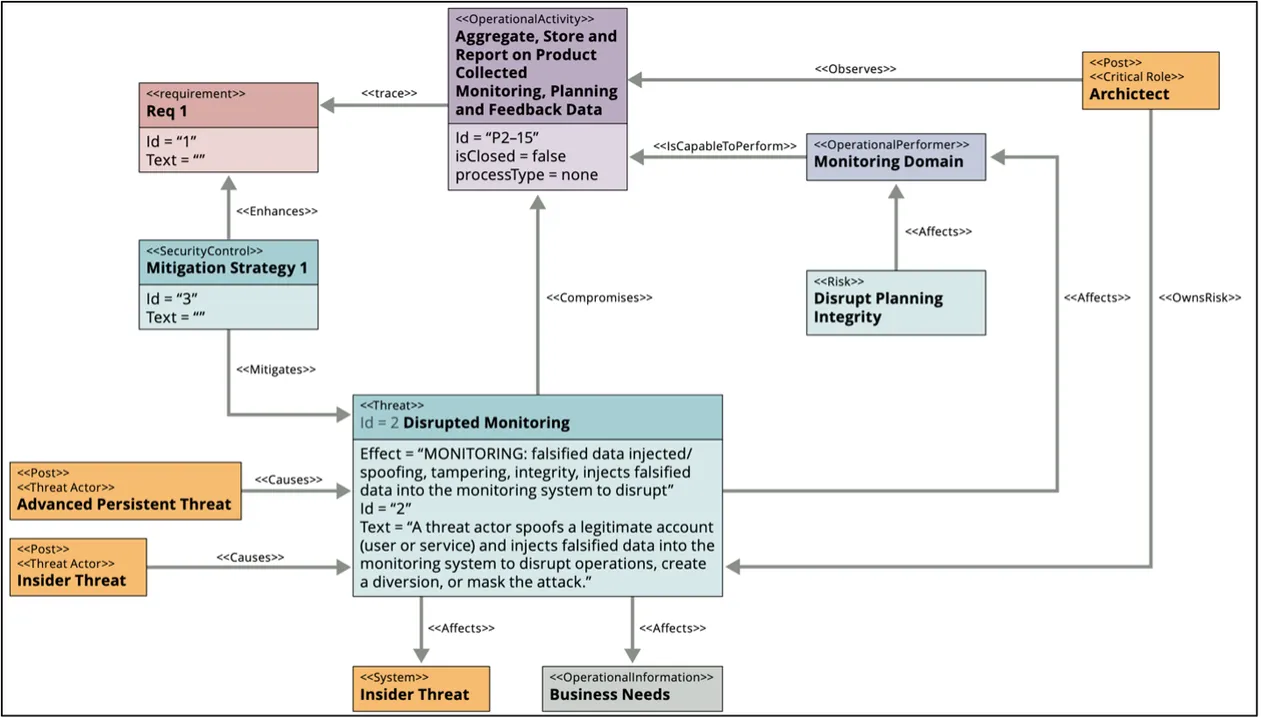

Here I show an example of how to apply this profile. First, we need to define part of a system we want to build, along with some of its components and their interactions. If we are building a software system that requires a monitoring and logging capability, there could be a threat of disruption to that monitoring and logging service. An example threat scenario written in the style of our template would be, A threat actor spoofs a legitimate account (user or service) and injects falsified data into the monitoring system to disrupt operations, create a diversion, or mask the attack. This is a good start. Next, we can incorporate the elements from this scenario into the model. Represented in a security taxonomy diagram, this threat scenario would resemble Figure 2 below.

Figure 2: Disrupted Monitoring Threat Scenario
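Outside an MBSE tool, the same structure can be approximated as a typed graph of elements and named relations. This is only a stand-in for the extended UAF model, not the profile itself: the stereotype names in the profile are not reproduced here, so the element kinds (`ThreatActor`, `Threat`, `Asset`), the relation names (`carriesOut`, `affects`), and the asset names are all hypothetical.

```python
from collections import defaultdict


class ThreatModel:
    """A tiny in-memory stand-in for the extended UAF model.

    Element kinds and relation names are hypothetical placeholders,
    not the profile's actual stereotypes.
    """

    def __init__(self) -> None:
        self.kinds: dict[str, str] = {}
        self.relations: dict[str, list[tuple[str, str]]] = defaultdict(list)

    def add(self, name: str, kind: str) -> None:
        self.kinds[name] = kind

    def relate(self, source: str, relation: str, target: str) -> None:
        # Refuse relations to elements that were never modeled.
        for name in (source, target):
            if name not in self.kinds:
                raise KeyError(f"unknown element: {name}")
        self.relations[source].append((relation, target))

    def targets(self, source: str, relation: str) -> list[str]:
        return [t for r, t in self.relations[source] if r == relation]


model = ThreatModel()
model.add("Threat Actor", "ThreatActor")
model.add("Disrupted Monitoring", "Threat")
for asset in ("Monitoring Service", "Log Store", "Service Account"):
    model.add(asset, "Asset")
    model.relate("Disrupted Monitoring", "affects", asset)
model.relate("Threat Actor", "carriesOut", "Disrupted Monitoring")

print(model.targets("Disrupted Monitoring", "affects"))
# ['Monitoring Service', 'Log Store', 'Service Account']
```

Requiring both ends of a relation to exist mirrors how an MBSE tool only lets a threat point at elements already in the system model.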

It is important to note here that the threat scenario a threat modeler creates drives mitigation strategies that place requirements on the system to implement those mitigations. This is, again, the goal of threat modeling. However, these mitigation strategies and requirements ultimately constrain the system design and may impose additional costs. A primary benefit of identifying threats early in system development is reduced cost; however, the true cost of mitigating a threat scenario will never be zero. There is always some trade-off. Given this cost of mitigating threats, it is vitally important that threat scenarios be grounded in fact. Ideally, observed TTPs should drive the threat scenarios and mitigation strategies.

Introduction to CAPEC

MITRE’s Common Attack Pattern Enumeration and Classification (CAPEC) project aims to create just such a list of attack patterns. These attack patterns, at varying levels of abstraction, allow an easy mapping from threat scenarios for a specific system to known attack patterns that exploit known weaknesses. For each of the entries in the CAPEC list, we can create <> elements from the extended UAF viewpoint shown in Figure 1. This provides many benefits, including refining the scenarios initially generated, helping decompose high-level scenarios, and, most crucially, creating the tie to known attacks.

In the Figure 2 example scenario, at least three different entries could apply to the scenario as written: CAPEC-6: Argument Injection, CAPEC-594: Traffic Injection, and CAPEC-194: Fake the Source of Data. This relationship is shown in Figure 3.

Figure 3: Threat Scenario to Attack Mapping
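The credibility argument depends on every threat being traced to at least one attack pattern, and that check is easy to automate. The sketch below assumes the threat-to-attack mapping has been exported as a plain dictionary; the second threat is a hypothetical entry included only to show what an untraced scenario looks like, and `unsubstantiated` is a hypothetical helper.

```python
# Threat scenarios mapped to the CAPEC attack patterns that could realize them.
realized_by: dict[str, list[str]] = {
    "Disrupted Monitoring": [
        "CAPEC-6: Argument Injection",
        "CAPEC-594: Traffic Injection",
        "CAPEC-194: Fake the Source of Data",
    ],
    # Hypothetical scenario with no supporting attack pattern yet.
    "Hypothetical Unsubstantiated Threat": [],
}


def unsubstantiated(mapping: dict[str, list[str]]) -> list[str]:
    """Return threat scenarios not yet traced to any known attack pattern."""
    return [threat for threat, attacks in mapping.items() if not attacks]


for threat, attacks in realized_by.items():
    print(f"{threat}: {len(attacks)} attack pattern(s)")
print("Needs substantiation:", unsubstantiated(realized_by))
```

An empty list from `unsubstantiated` is a quick signal that every scenario in the model is grounded in at least one known pattern of attack.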

<> blocks show how a scenario can be realized. By tracing the <> block to <> blocks, a threat modeler can provide some level of assurance that there are real patterns of attack that could be used to achieve the objective or effect specified by the scenario. Using STRIDE as a basis for forming the threat scenarios helps with mapping to these CAPEC entries in the following way. CAPEC can be organized by mechanisms of attack (such as “Engage in deceptive interactions”) or by domains of attack (such as “hardware” or “supply chain”). The former method of organization aids the threat modeler in the initial search for the correct entries to map the threats to, based on the STRIDE categorization. This is not a one-to-one mapping, as there are semantic differences; however, in general the following table shows each STRIDE threat type and the mechanism of attack that is likely to correspond.

| STRIDE Threat Type | CAPEC Mechanism of Attack |
|---|---|
| Spoofing | Engage in Deceptive Interactions |
| Tampering | Manipulate Data Structures, Manipulate System Resources |
| Repudiation | Inject Unexpected Items |
| Information Disclosure | Collect and Analyze Information |
| Denial of Service | Abuse Existing Functionality |
| Elevation of Privilege | Subvert Access Control |

As previously noted, this is not a one-to-one mapping. For instance, the “Employ probabilistic techniques” and “Manipulate timing and state” mechanisms of attack are not represented here. Additionally, some STRIDE threat types span multiple mechanisms of attack. This is not surprising given that CAPEC is not oriented around STRIDE.
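The STRIDE-to-mechanism correspondence can be encoded directly as a lookup that gives the starting points for a CAPEC search. The sketch also checks coverage against the full set of mechanisms. It assumes CAPEC’s Mechanisms of Attack view contains the nine categories listed, so verify that list against the current CAPEC release. The helper names are hypothetical.

```python
# Likely starting points in CAPEC's Mechanisms of Attack view for each STRIDE type.
STRIDE_TO_MECHANISMS: dict[str, list[str]] = {
    "Spoofing": ["Engage in Deceptive Interactions"],
    "Tampering": ["Manipulate Data Structures", "Manipulate System Resources"],
    "Repudiation": ["Inject Unexpected Items"],
    "Information Disclosure": ["Collect and Analyze Information"],
    "Denial of Service": ["Abuse Existing Functionality"],
    "Elevation of Privilege": ["Subvert Access Control"],
}

# Assumed full list of CAPEC mechanisms of attack (check the current release).
CAPEC_MECHANISMS = {
    "Engage in Deceptive Interactions",
    "Abuse Existing Functionality",
    "Manipulate Data Structures",
    "Manipulate System Resources",
    "Inject Unexpected Items",
    "Employ Probabilistic Techniques",
    "Manipulate Timing and State",
    "Collect and Analyze Information",
    "Subvert Access Control",
}


def starting_points(stride_type: str) -> list[str]:
    """Mechanisms to search first for a threat of the given STRIDE type."""
    return STRIDE_TO_MECHANISMS.get(stride_type, [])


def unmapped_mechanisms() -> set[str]:
    """Mechanisms that no STRIDE type points to in this correspondence."""
    mapped = {m for ms in STRIDE_TO_MECHANISMS.values() for m in ms}
    return CAPEC_MECHANISMS - mapped


print(starting_points("Tampering"))
print(sorted(unmapped_mechanisms()))
# ['Employ Probabilistic Techniques', 'Manipulate Timing and State']
```

The set difference makes the gaps noted above explicit, so a modeler knows which mechanisms need to be searched without STRIDE guidance.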

Identifying Threat Modeling Mitigation Strategies and the Importance of Abstraction Levels

As shown in Figure 2, once the affected assets, information flows, processes, and attacks have been identified, the next step in threat modeling is to identify mitigation strategies. This section also shows how the original threat scenario could be mapped to different attacks at different levels of abstraction and why standardizing on a single abstraction level is beneficial.

When dealing with specific issues, it is easy to be specific in applying mitigations. Consider, for example, a laptop running macOS 15. The Apple macOS 15 STIG Manual states that “The macOS system must limit SSHD to FIPS-compliant connections.” Additionally, the manual says, “Operating systems using encryption must use FIPS-validated mechanisms for authenticating to cryptographic modules.” The manual then details test procedures to verify this for a system and the exact commands to run to fix the issue if it is not true. This is a very specific example of a system that is already built and deployed. The level of abstraction is very low, and all data flows and data stores are defined down to the bit level for SSHD on macOS 15. Threat modelers do not have that level of detail at early stages of the system development lifecycle.

Specific issues are also not always known, even with a detailed design. Some software systems are small and easily replaceable or upgradable. In other contexts, such as major defense systems or satellite systems, the ability to update, upgrade, or change the implementation is limited or difficult. This is where working at a higher abstraction level and focusing on design elements and data flows can eliminate broader classes of threats than can be eliminated by working with more detailed patches or configurations.

To return to the example shown in Figure 2, at the current level of system definition it is known that there will be a monitoring solution to aggregate, store, and report on collected monitoring and feedback information. However, will this solution be a commercial offering, a home-grown solution, or a mix? What specific technologies will be used? At this point in the system design, these details are not known. That does not mean, however, that the threat cannot be modeled at a high level of abstraction to help inform requirements for the eventual monitoring solution.

CAPEC defines three levels of abstraction for attack patterns: Meta, Standard, and Detailed. Meta attack patterns are high level and do not include specific technology. This level is a good fit for our example. Standard attack patterns call out some specific technologies and techniques. Detailed attack patterns give the full view of how a specific technology is attacked with a specific technique. This level of attack pattern would be more common in a solution architecture.
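Because the three levels are ordered from general to specific, a modeler can filter a candidate list to the patterns the current design maturity can support. In this sketch the abstraction assigned to each entry is assumed for illustration only; check each CAPEC entry’s published abstraction before relying on it. `Abstraction`, `AttackPattern`, and `usable_patterns` are hypothetical names.

```python
from dataclasses import dataclass
from enum import IntEnum


class Abstraction(IntEnum):
    """CAPEC abstraction levels, ordered from least to most specific."""
    META = 1
    STANDARD = 2
    DETAILED = 3


@dataclass(frozen=True)
class AttackPattern:
    name: str
    level: Abstraction


def usable_patterns(patterns: list[AttackPattern], max_level: Abstraction) -> list[AttackPattern]:
    """Keep only patterns no more specific than the current design can support."""
    return [p for p in patterns if p.level <= max_level]


# Levels below are assumed for illustration; verify them against CAPEC.
catalog = [
    AttackPattern("CAPEC-594: Traffic Injection", Abstraction.META),
    AttackPattern("CAPEC-6: Argument Injection", Abstraction.STANDARD),
    AttackPattern("CAPEC-194: Fake the Source of Data", Abstraction.STANDARD),
]

# Early design: the monitoring technology has not been chosen yet.
print([p.name for p in usable_patterns(catalog, Abstraction.META)])
```

Using `IntEnum` lets the levels compare with `<=`, so raising `max_level` as the design matures admits progressively more specific patterns.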

To identify mitigation strategies, we must first ensure our scenarios are normalized to a consistent level of abstraction. The example scenario from above has issues in this regard. First, the scenario is compound in that the threat actor has three different objectives (i.e., disrupt operations, create a diversion, and mask the attack). When attempting to trace mitigation strategies or requirements to this scenario, it would be difficult to see a clear linkage. The type of account may also affect the mitigations. There may be a requirement that a standard user account not be able to access log data, while a service account may be permitted such access to perform maintenance tasks. The complexity of the compound scenario is also illustrated by its tracing to multiple CAPEC entries. These attacks represent distinct sets of weaknesses, and each requires different mitigation strategies.

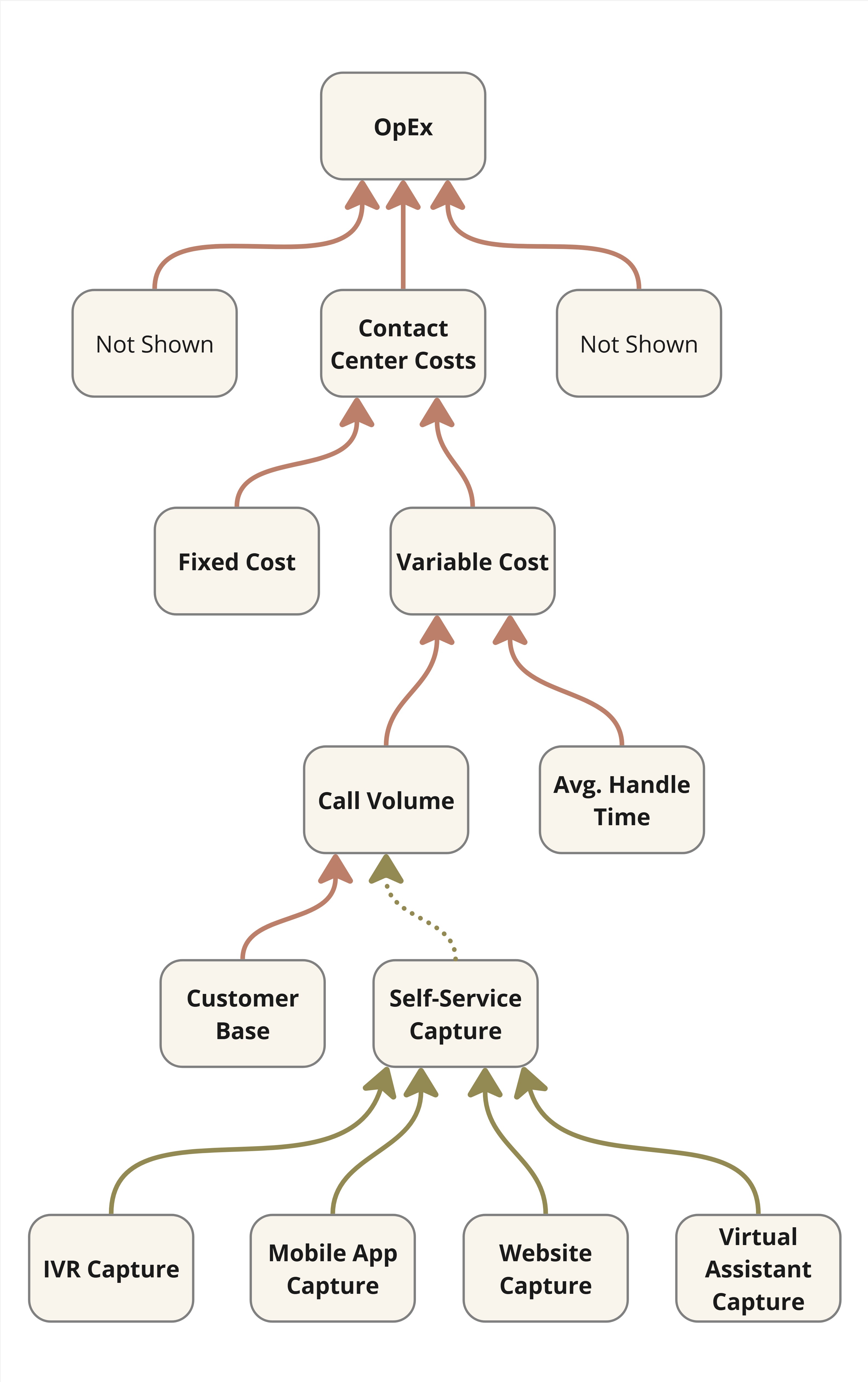

To decompose the scenario, we can first split out the different types of accounts and then split on the different objectives. A full decomposition of these elements is shown in Figure 4.

Figure 4: Threat Scenario Decomposition

This decomposition reflects the fact that different objectives are often achieved through different means. If a threat actor simply wants to create a diversion, the attack can be loud and ideally trigger alarms or issues that the system’s operators must deal with. If instead the objective is to mask an attack, then the attacker may need to use quieter tactics when injecting data.
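Splitting first on account type and then on objective amounts to taking the Cartesian product of the two lists, which yields one single-objective sub-scenario per combination. This minimal sketch generates the sub-scenario sentences from the compound example; `decompose` is a hypothetical helper, and the wording of each generated sentence is an assumption that follows the original scenario.

```python
from itertools import product

ACCOUNT_TYPES = ["user account", "service account"]
OBJECTIVES = ["disrupt operations", "create a diversion", "mask the attack"]


def decompose(account_types: list[str], objectives: list[str]) -> list[str]:
    """Split the compound scenario into one sub-scenario per (account, objective) pair."""
    return [
        f"A threat actor spoofs a legitimate {account} and injects falsified data "
        f"into the monitoring system to {objective}."
        for account, objective in product(account_types, objectives)
    ]


for sub_scenario in decompose(ACCOUNT_TYPES, OBJECTIVES):
    print(sub_scenario)
```

Two account types and three objectives give six sub-scenarios, each with a single objective that mitigations and requirements can trace to cleanly.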

Figure 4 is not the only way to decompose the scenario. The original scenario could instead be split in two based on the spoofing attack and the data injection attack (the latter falling into the tampering category under STRIDE). In the first scenario, a threat actor spoofs a legitimate account (CAPEC-194: Fake the Source of Data) to move laterally through the network. In the second scenario, a threat actor performs an argument injection (CAPEC-6: Argument Injection) into the monitoring system to disrupt operations.

Having broken the original scenario down into much more scope-limited sub-scenarios, each with a single objective, we can now simplify the mapping by tracing each one to at least one standard-level attack pattern. This more specific mapping of attacks to threat scenarios gives engineers more detail to work from when designing in mitigations for the threats.

As noted previously, mitigation strategies at a minimum constrain design and in most cases drive costs. Consequently, mitigations should be targeted to the specific components that will face a given threat. This is why decomposing threat scenarios is important. With an exact mapping between threat scenarios and proven attack patterns, one can either extract mitigation strategies directly from the attack pattern entries or focus on generating one’s own mitigation strategies for a minimally complete set of patterns.

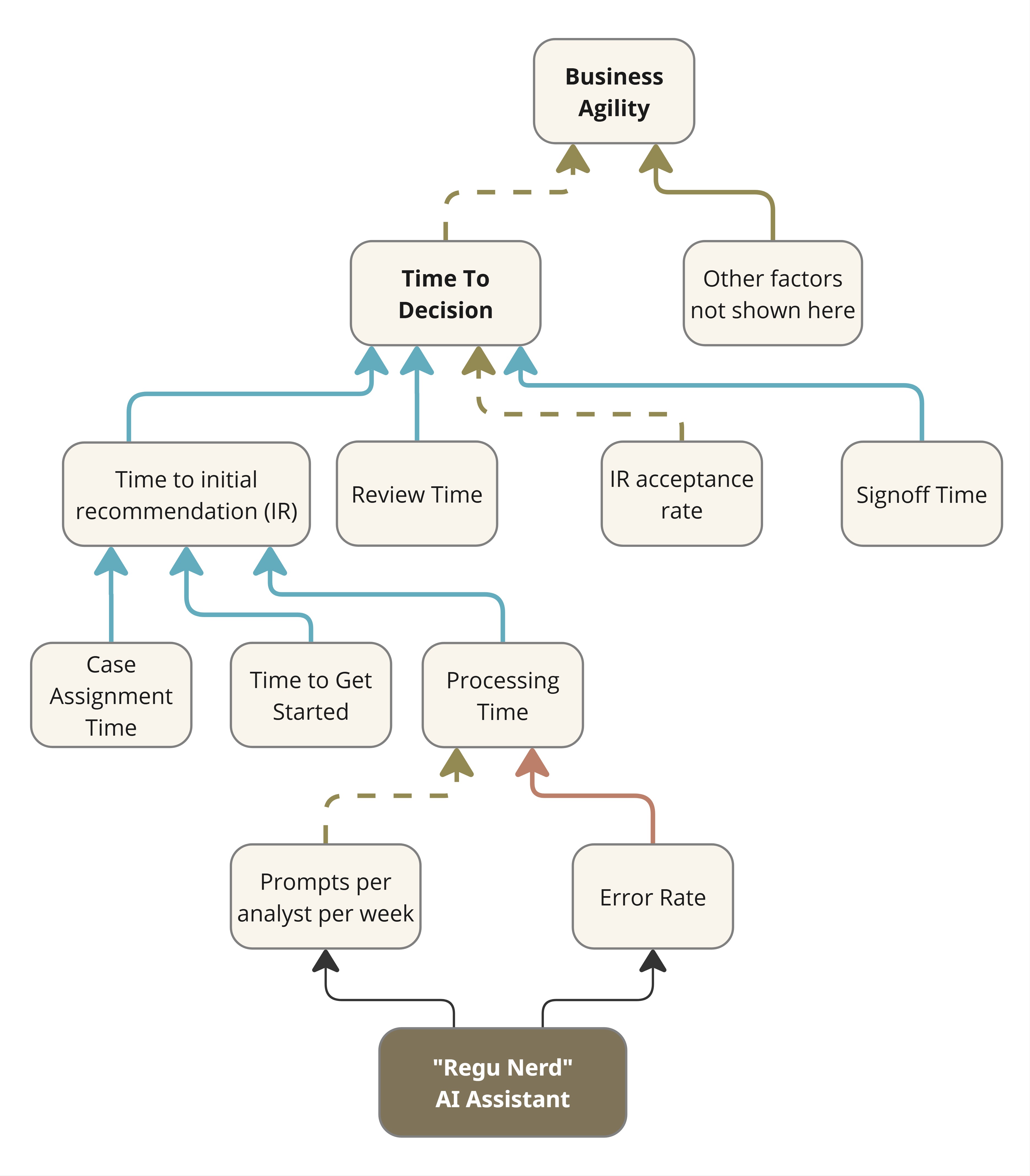

Argument injection is an excellent example of a CAPEC attack pattern that includes potential mitigations. This attack pattern includes two design mitigations and one implementation-specific mitigation. When threat modeling at a high level of abstraction, the design-focused mitigations will often be more relevant to architects and designers.

Figure 5: Mitigations Mapped to a Threat

Figure 5 shows how the two design mitigations trace to the threat that is realized by an attack. In this case, the attack pattern we are mapping to had its mitigations linked and clearly laid out. However, this does not mean mitigation strategies are limited to what is in the database. A good systems engineer will tailor the applied mitigations to a specific system, environment, and set of threat actors. In the same vein, attack elements need not come from CAPEC. We use CAPEC because it is a standard; however, if an attack is not captured, or not captured at the right level of detail, one can create one’s own attack elements in the model.
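Extracting mitigations for a given lifecycle phase and merging in tailored ones can be sketched as a simple filter-and-deduplicate step. The mitigation texts below are placeholders rather than CAPEC’s actual wording (consult the CAPEC-6 entry for that). The tailored mitigation is a hypothetical requirement based on the account-access example discussed earlier. All type and function names are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Sequence


@dataclass(frozen=True)
class Mitigation:
    phase: str  # "design" or "implementation"
    text: str


@dataclass
class Attack:
    name: str
    mitigations: list[Mitigation] = field(default_factory=list)


def mitigations_for(
    attacks: Sequence[Attack],
    phase: str,
    tailored: Sequence[Mitigation] = (),
) -> list[Mitigation]:
    """Gather catalog and tailored mitigations for one lifecycle phase, without duplicates."""
    selected: list[Mitigation] = []
    candidates = [m for a in attacks for m in a.mitigations] + list(tailored)
    for m in candidates:
        if m.phase == phase and m not in selected:
            selected.append(m)
    return selected


# Placeholder wording; the real text lives in the CAPEC-6 entry.
argument_injection = Attack("CAPEC-6: Argument Injection", [
    Mitigation("design", "Design mitigation 1 (see CAPEC-6)"),
    Mitigation("design", "Design mitigation 2 (see CAPEC-6)"),
    Mitigation("implementation", "Implementation mitigation (see CAPEC-6)"),
])
# Hypothetical system-specific mitigation added by the engineer.
tailored = [Mitigation("design", "Standard user accounts cannot access log data")]

for m in mitigations_for([argument_injection], "design", tailored):
    print(m.text)
```

Filtering on the phase keeps early-lifecycle requirements focused on design mitigations, while deduplication matters once several sub-scenarios trace to the same attack pattern.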

Bringing Credibility to Threat Modeling

The overarching goal of threat modeling is to help defend a system from attack. To that end, the real product that a threat model should produce is mitigation strategies for threats to the system elements, activities, and data flows. A combination of MBSE, UAF, the STRIDE method, and CAPEC can accomplish this goal. Whether working on a high-level abstract architecture or with a more detailed system design, this method is flexible enough to accommodate the amount of information available and to allow threat modeling and mitigation to occur as early in the system design lifecycle as possible. Additionally, by relying on an industry-standard set of attack patterns, this method brings credibility to the threat modeling process. This credibility comes from traceability from an asset to the threat scenario and on to the real-world patterns that adversaries have been observed using to carry out the attack.