Building a mobile app somewhere in the US or India sounds great until you realize the challenges that come with it.

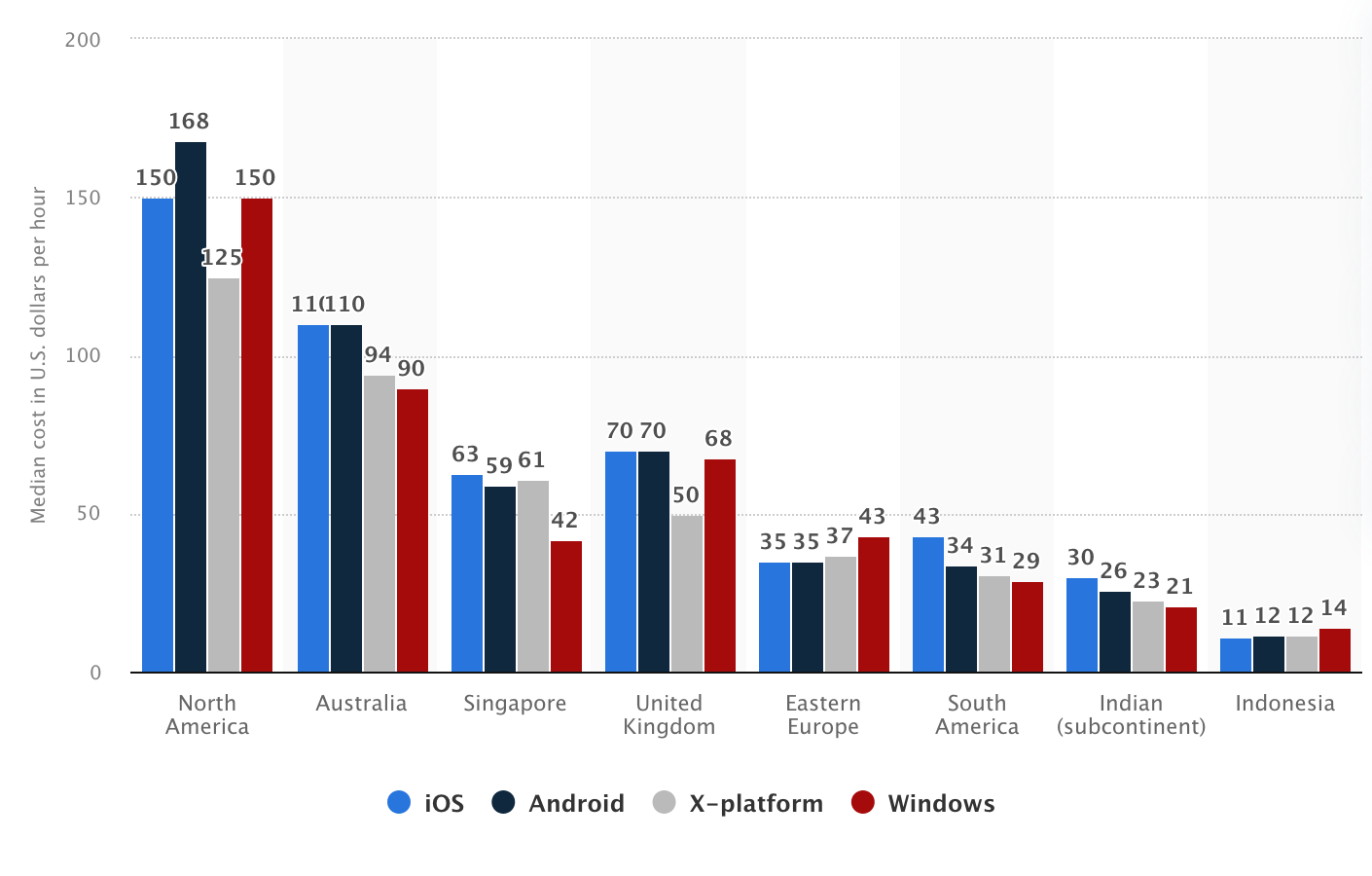

Hiring North American developers, for example, can cost as much as $125–$170+ per hour, which is often a major blow to the budget.

Developers from the Indian subcontinent, on the other hand, are usually cheaper (roughly $20–30 per hour), but significant time zone differences and occasional cultural misalignments can lead to project delays, misunderstandings, and unmet expectations. So what are the options?

If in-house or local development doesn't work out for some reason, nearshore mobile app development can be a sensible alternative.

This way, you get a balance of cost and control over the project, along with a mobile app development partner that operates in your time zone, speaks the same language (or at least one very close to it), and is familiar with your work style.

Approximate Cost of Mobile Application Development in Different Regions, Statista

What Is Nearshore Mobile App Development?

Nearshore mobile app development is a form of app development outsourcing in which you hire a team based in a nearby country, usually in your time zone or just a few hours apart.

For example, a US company can work with a team from Mexico or Colombia, while a company from Germany can nearshore to Poland or Bulgaria.

Why Companies Choose Nearshore Software Development

More and more companies are choosing nearshore mobile app development because it offers an excellent combination of reduced cost and easy communication.

Offshore application development (having a team on the other side of the world) may look cheaper, but it often causes problems, including large time differences, language barriers, and slow feedback. All of these slow down your development project and make it more complicated than it needs to be.

| Criteria | Onshore | Nearshore | Offshore |
|---|---|---|---|
| Cost | $$$ | $$ | $ |
| Time Zone | Same | Similar (1–4 hours difference) | Very different (5+ hours) |
| Communication | Easy | Easy to Moderate | Often Challenging |
| Cultural Fit | High | High to Moderate | Low to Moderate |

Comparison Table: Nearshore vs Offshore vs Onshore

Nearshore app development avoids most of these issues by working with a team in a nearby country:

- Live Teamwork: You're in roughly the same region, so it's easy to jump on a call or get instant responses.

- Fewer Time Zone Gaps: With only a few hours' difference, you don't have to wait a whole day for answers.

- Easy Communication: Teams usually speak good English or even share your language.

- Better Project Flow: Because the teams share the same working hours and a similar work style, projects run smoothly.

- Cultural Familiarity: Teams from neighboring countries usually understand how you do business, which prevents confusion.

- Cost Savings: Nearshore outsourcing rates are usually much more affordable than those of local developers in Western Europe, yet you still get the quality you're looking for.
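The time zone point can be put in numbers. The rough Python sketch below counts the office hours two teams share. It is written only for illustration, and `overlap_hours` is a hypothetical helper. It assumes both teams work 9:00–17:00 local time and that offsets stay within ±12 hours. It ignores daylight saving time and wrap-around past midnight.

```python
def overlap_hours(offset_hours: float, start: float = 9, end: float = 17) -> float:
    """Shared office hours when the partner's clock is offset_hours ahead of yours.

    Assumes both teams keep the same local schedule (start..end) and that
    offset_hours is within +/-12, so no wrap-around past midnight is handled.
    """
    # Express the partner's working day in your local time.
    partner_start = start - offset_hours
    partner_end = end - offset_hours
    # The overlap is the intersection of the two intervals (never negative).
    return max(0.0, min(end, partner_end) - max(start, partner_start))


if __name__ == "__main__":
    # 1-4 hours matches the nearshore range in the table; 10.5 is roughly
    # the gap between US Eastern time and India Standard Time.
    for offset in (0, 1, 4, 6, 10.5):
        print(f"{offset:>4} h offset -> {overlap_hours(offset)} shared hours")
```

Under these assumptions, a 1- to 4-hour offset leaves 7 to 4 shared hours a day. A 10.5-hour offset leaves none, which is why offshore feedback often arrives a day later.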

Popular Nearshore Destinations for Western European Companies

For Western European companies, nearshore mobile app development is an excellent way to get high-quality work done at lower cost without the time zone or communication issues that can come from going too far offshore.

1. Poland

Poland is one of Europe's leaders in mobile app development outsourcing. It has an abundance of skilled developers, strong technical universities, and good English skills.

Many Western European businesses outsource to Polish teams simply because they do great work and know how to communicate with international clients. And since Poland is in the EU, it shares the same data protection and legal environment.

2. Romania

Romania has a growing tech industry, affordable rates, and reliable infrastructure. Because it is part of the EU, it follows the same legal and data protection standards as Western Europe, which makes cooperation simple and safe. It's also easy to travel there, and the small time zone difference helps keep communication smooth.

3. The Baltic States (Estonia, Latvia, Lithuania)

These smaller countries are popular for nearshoring because of their strong emphasis on technology and innovation. Their teams are small but efficient, speak excellent English, and understand the demands of Western businesses. Estonia is especially known for its digital-first culture and vibrant startup scene.

Cost of Nearshore Mobile App Development

One of the main reasons businesses choose nearshore mobile app development is to build a high-quality app without overstretching their budget.

Nearshore development offers lower rates than local developers and much better collaboration than distant offshore teams.

By comparison, developer rates in Germany or the UK can reach $90–150 per hour or even more. Offshore rates (e.g., in India) can be lower, but nearshore developers tend to offer better time zone overlap, better communication, and fewer delays.
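A quick calculation shows how these hourly rates add up. The Python sketch below multiplies the rate ranges quoted in this article by a hypothetical 1,000-hour project. The hour count is an assumption made only for illustration, and real quotes depend on factors such as app complexity and team composition. The article gives no specific nearshore rate, so none is hard-coded.

```python
# Hypothetical project size; replace it with your own estimate.
PROJECT_HOURS = 1_000

# Hourly rate ranges (USD) quoted in this article.
RATES = {
    "US (onshore)": (125, 170),
    "Germany/UK (onshore)": (90, 150),
    "India (offshore)": (20, 30),
}


def cost_range(hours: int, low_rate: float, high_rate: float) -> tuple[float, float]:
    """Return the (minimum, maximum) labor cost for the given number of hours."""
    return hours * low_rate, hours * high_rate


if __name__ == "__main__":
    for region, (low, high) in RATES.items():
        low_cost, high_cost = cost_range(PROJECT_HOURS, low, high)
        print(f"{region}: ${low_cost:,.0f} - ${high_cost:,.0f}")
```

For the US range this prints `US (onshore): $125,000 - $170,000`. To compare a nearshore offer, add the rates you are quoted as another entry in `RATES`. This covers labor only and leaves out the maintenance and scope-change costs discussed in this section.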

So what determines the cost?

- How complex your app is: Simpler apps are cheaper, while advanced features like AI, real-time chat, or payments take more time and money.

- Who you have on your team: The more specialists you need, such as designers, testers, and project managers, the higher the overall cost.

- What tech you work with: Some tools or frameworks speed development up, while others take more time and expertise.

- Type of contract: Fixed-price projects keep your budget under control. Time-and-material contracts are more flexible but can cost more if the scope changes.

- Future maintenance: Don't forget to include the cost of future updates and maintenance, which is usually billed separately.

Choose the Right Nearshore Mobile App Development Partner

Finding the right nearshore development partner is a big step toward building an impressive application.

Start with experience. Check their portfolio, case studies, or customer reviews to find out whether they have completed projects similar to yours. This shows that they can handle what you need.

Then pay attention to communication. You'll be working together often, so your partner should speak your language well, respond quickly, and be comfortable using tools like Slack, Jira, or Trello.

Next, make sure your mobile app developers know the right technologies. Whether you're building your app with Flutter, React Native, Kotlin, or Swift, your developers should be experienced with the tools your project requires and keep up with development trends.

Don't overlook security. Whether or not you plan to implement AI, your ideas and data must remain confidential. Look for a nearshore development team that takes privacy seriously, is ready to sign NDAs, and follows legal standards, especially if it is based in the EU or another region with strict data laws.

Finally, look for a company that is transparent and flexible. It should be honest about costs, timelines, and possible problems, and willing to adapt if your timeline changes along the way.

Why Choose SCAND as a Nearshore Team?

With more than 20 years of history and over 250 experienced software developers, SCAND has helped companies in Europe, the US, and beyond turn their app ideas into real products on time and within budget.

Our headquarters is in Poland, in the center of Europe, which makes us an ideal nearshore partner for Western European businesses. We share the same time zone, speak fluent English, and are experienced in working with international clients.

Whether you need a native app for iOS or Android (built with Swift or Kotlin), a cross-platform app (with Flutter or React Native), or a hybrid app, our developers know how to balance technology, usability, and attractive visual design. We follow modern development processes, use the latest tools, and make sure every project is top-notch from start to finish.

We also care about security and always do our best to make sure your app meets the relevant regulations. We comply with GDPR and sign separate agreements that protect your ideas and your data.

If you're ready to nearshore your mobile app development, contact SCAND or explore our custom app development services to get started.