Digital Security

As health data continues to be a prized target for hackers, here's how to minimize the fallout from a breach impacting your own health records

20 Jun 2024

5 min. read

Digital transformation is helping healthcare providers across the globe to become more cost-efficient, while improving standards of patient care. But digitizing healthcare records also comes with some major cyber risks. Once your data is stored on IT systems that can be reached via the internet, it could be accidentally leaked, or accessed by malicious third parties or even insiders.

Medical data is among the most sensitive information we share with organizations. That’s why it’s given “special category” status by the GDPR, meaning extra protections are required. But no organization is 100% breach-proof. That means it’s more important than ever that you understand what to do in the event your data is compromised, so you can minimize the fallout.

The worst-case scenario

In the first 10 months of 2023 in the US, over 88 million people had their medical data exposed, according to government figures. The number could be even higher once organizations not regulated by the patient privacy law HIPAA are taken into account.

The most notable incidents of recent years include:

- Change Healthcare, which suffered a major ransomware breach in February 2024. The US healthcare provider not only experienced major operational disruption, but its attackers (BlackCat/ALPHV) also claimed to have stolen 6TB of data during the attack. Although the ransomware group shut down shortly after Change Healthcare paid an alleged $22m ransom, the ransomware affiliate responsible for the attack tried to extort the company again, threatening to sell the data to the highest bidder.

- Mental health startup Cerebral accidentally leaked highly sensitive medical information on 3.1 million people online. The firm admitted last year that it had for three years inadvertently been sharing client and user data with “third-party platforms” and “subcontractors” via misconfigured marketing tech.

What’s at stake?

Among the medical data potentially at risk is your:

- Medical insurance policy numbers, or similar

- Personally identifiable information (PII) including Social Security number, home and email address, and birth date

- Passwords to key medical, insurance and financial accounts

- Medical history including treatments and prescriptions

- Billing and payment information, including credit and debit card and bank account details

This information could be used by threat actors to run up bills on your credit card, open new lines of credit, access and drain your bank account, or impersonate you to obtain expensive medical services and prescription medication. In the US, healthcare records can even be used to file fraudulent tax returns in order to claim rebates. And if there’s sensitive information on treatments or diagnoses you’d rather keep secret, malicious actors may even try to blackmail you.

8 steps to take following a data breach

If you find yourself in a worst-case scenario, it’s important to keep a cool head. Work systematically through the following:

1. Check the notification

Read through the email carefully for any signs of a potential scam. Tell-tale signs include spelling and grammatical errors and urgent requests for your personal information, perhaps by asking you to ‘confirm’ your details. Also, look out for a sender email address that doesn’t match the legitimate company when you hover over the “from” address, as well as for embedded clickable links which you’re encouraged to follow or attachments you’re being asked to download.

2. Find out exactly what happened

The next important step is to understand your risk exposure. Exactly what information has been compromised? Was the incident an accidental data exposure, or did malicious third parties access and steal your data? What type of information may have been accessed? Was it encrypted? If your provider hasn’t answered these questions adequately, then call them to get the information you need to take the next steps. If it’s still unclear, then plan for the worst.

3. Monitor your accounts

If malicious actors have accessed your PII and medical information, they may sell it to fraudsters or try to use it themselves. Either way, it pays to monitor for suspicious activity such as medical bills for care you didn’t receive, or notifications saying you’ve reached your insurance benefit limit. If financial information has been compromised, keep an eye on bank account and card transactions. Many organizations offer free credit monitoring, which notifies you when there are any updates or changes to your credit reports that might indicate fraud.

4. Report suspicious activity

It goes without saying that you should report any suspicious activity or billing errors immediately to the relevant provider. It’s best to do so in writing as well as notifying your insurer/provider via email/phone.

5. Freeze your credit and cards

Depending on what personal information has been stolen, you might want to activate a credit freeze. This will mean creditors cannot access your credit report and therefore won’t be able to approve any new credit account in your name. That will prevent threat actors from running up debt in your name. Also consider freezing your bank cards and/or having new ones issued. This can often be done simply via your banking app.

6. Change your passwords

If your log-ins have been compromised in a breach, then the relevant provider should automatically reset them. But if not, it might pay to do so manually anyway, for peace of mind. This will help prevent account takeover attempts, especially if you improve your security by adding two-factor authentication.

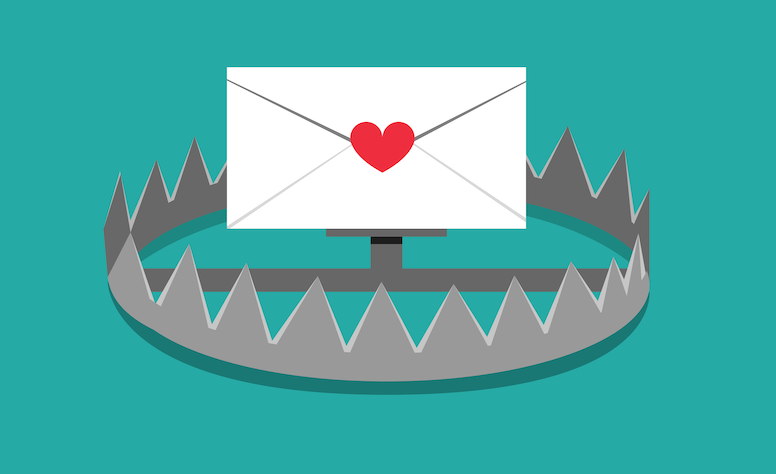

7. Stay alert

If fraudsters get hold of your personal and medical information, they may try to use it in follow-on phishing attacks. These could be launched via email, text, or even live phone calls. The goal is to use the stolen info to add legitimacy to requests for more personal information like financial details. Remain vigilant. And if a threat actor tries to extort you by threatening to expose sensitive medical details, contact the police immediately.

8. Consider legal action

If your data was compromised due to negligence on the part of your healthcare provider, you could be in line for some kind of compensation. This will depend on the jurisdiction and relevant local data protection/privacy laws, but a legal expert should be able to advise whether an individual or class action case is possible.

No end in sight

Given that medical records can fetch 20 times the value of credit card details on the cybercrime underground, cybercriminals are unlikely to stop targeting healthcare organizations anytime soon. Their ability to force multimillion-dollar pay-outs via ransomware only makes the sector an even more attractive target. That’s why you need to be prepared for the worst, and know exactly what to do to minimize the damage to your mental health, privacy and finances.