As a hobby, I’m working on a SwiftUI app on the side. It lets me keep track of the height and weight of my daughters and plot them on charts that show me how “normally” my offspring are developing.

I shied away from statistics at university, so it took me some time to research a few things to solve an issue I was having. Let me share how I worked towards a solution to this statistical problem. May you find it as instructive as I did.

Note: If you find any error of thought or fact in this article, please let me know on Twitter, so that I can understand what caused it.

Let me first give you some background on what I’ve done before today, so that you understand my statistical question.

Setup

The World Health Organization publishes tables that give the percentiles for length/height from birth to 2 years, to 5 years and to 19 years. Until two years of age the measurement is taken with the infant lying on its back, and it is called “length”. Beyond two years we measure standing up, and then it’s called “height”. That’s why there’s a slight break in the published values at two years.

I also compiled my girls’ heights in a Numbers sheet. Initially I fed it from paediatrician visits, and later from occasionally marking their height on a poster behind their bedroom door.

To get started I hard-coded the heights like this:

```swift
import Foundation

struct ChildData
{
    let days: Int        // days since birth
    let height: Double   // length/height in cm
}

let elise = [ChildData(days: 0, height: 50),
             ChildData(days: 6, height: 50),
             ChildData(days: 49, height: 60),
             ChildData(days: 97, height: 64),
             ChildData(days: 244, height: 73.5),
             ChildData(days: 370, height: 78.5),
             ChildData(days: 779, height: 87.7),
             ChildData(days: 851, height: 90),
             ChildData(days: 997, height: 95),
             ChildData(days: 1178, height: 97.5),
             ChildData(days: 1339, height: 100),
             ChildData(days: 1367, height: 101),
             ChildData(days: 1464, height: 103.0),
             ChildData(days: 1472, height: 103.4),
             ChildData(days: 1544, height: 105),
             ChildData(days: 1562, height: 105.2)
]

let erika = [ChildData(days: 0, height: 47),
             ChildData(days: 7, height: 48),
             ChildData(days: 44, height: 54),
             ChildData(days: 119, height: 60.5),
             ChildData(days: 256, height: 68.5),
             ChildData(days: 368, height: 72.5),
             ChildData(days: 529, height: 80),
             ChildData(days: 662, height: 82),
             ChildData(days: 704, height: 84),
             ChildData(days: 734, height: 85),
             ChildData(days: 752, height: 86),
]
```

The WHO defines one month as 30.4375 days, so I was able to plot these values on a SwiftUI chart. The vertical lines you see on the chart are months, with bolder lines representing full years. You can also see the small step at the end of the second year.
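Converting a day count to a fractional month takes a single division by the WHO’s 30.4375-day month. Here is a minimal sketch. The names `daysPerMonth`, `fractionalMonth(days:)` and `monthRowAndFraction(days:)` are my own hypothetical helpers.

```swift
import Foundation

/// The WHO defines one month as 30.4375 days (365.25 / 12).
let daysPerMonth = 30.4375

/// Converts a measurement's age in days into a fractional month.
func fractionalMonth(days: Int) -> Double {
    Double(days) / daysPerMonth
}

/// Splits the fractional month into the WHO table row just below it
/// and the fraction (0..<1) of the way towards the next row.
func monthRowAndFraction(days: Int) -> (row: Int, fraction: Double) {
    let month = fractionalMonth(days: days)
    let row = Int(month.rounded(.down))
    return (row, month - Double(row))
}

print(fractionalMonth(days: 49))       // about 1.61 (Elise)
print(monthRowAndFraction(days: 119))  // row 3, fraction about 0.91 (Erika)
```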

It’s still missing some sort of labelling, but you can already see that my older daughter Elise (blue) was on the taller side during her first two years, whereas the second-born Erika (purple) was pretty close to the “middle of the road”.

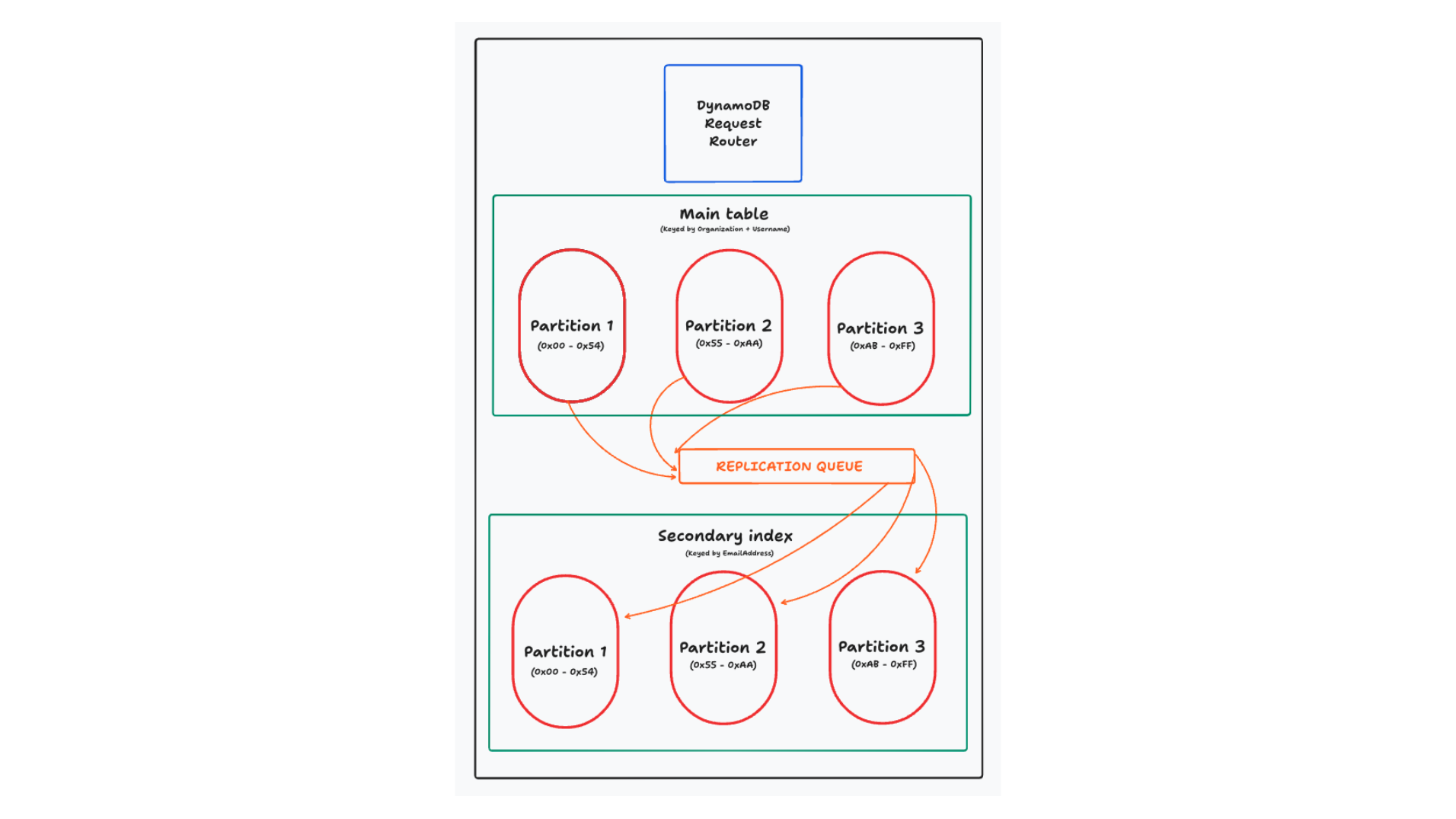

This chart gives you an at-a-glance overview of where on the road my daughters are, but I wanted to be able to put my finger down on any spot and have a popup tell me the exact percentile value.

The Data Dilemma

A percentile value tells you what percentage of children are shorter than your child. So if your kid is on the 75th percentile, then 75% of children are shorter. The shades of green on the chart represent the steps in the raw data provided by the WHO.
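The definition can be made concrete in a few lines. The function below returns the share of a reference group that is strictly shorter. The eight heights are a made-up sample, not WHO data, and `percentileRank` is a hypothetical name.

```swift
/// Percentage of `reference` values strictly below `value`,
/// i.e. "how many percent of children are shorter than your child".
func percentileRank(of value: Double, in reference: [Double]) -> Double {
    guard !reference.isEmpty else { return .nan }
    let shorter = reference.filter { $0 < value }.count
    return 100.0 * Double(shorter) / Double(reference.count)
}

// Hypothetical reference group of eight heights in cm.
let sample: [Double] = [55.0, 56.5, 57.0, 58.2, 59.0, 60.1, 61.4, 62.0]
print(percentileRank(of: 60.5, in: sample)) // 75.0: six of eight are shorter
```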

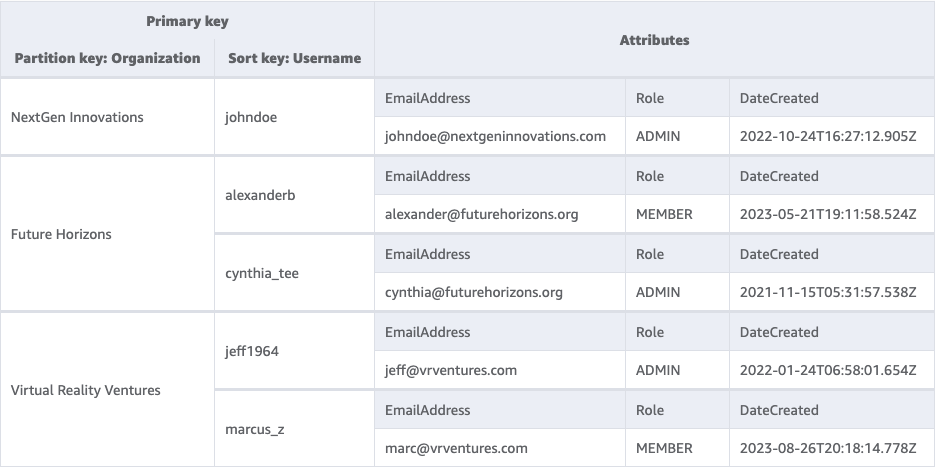

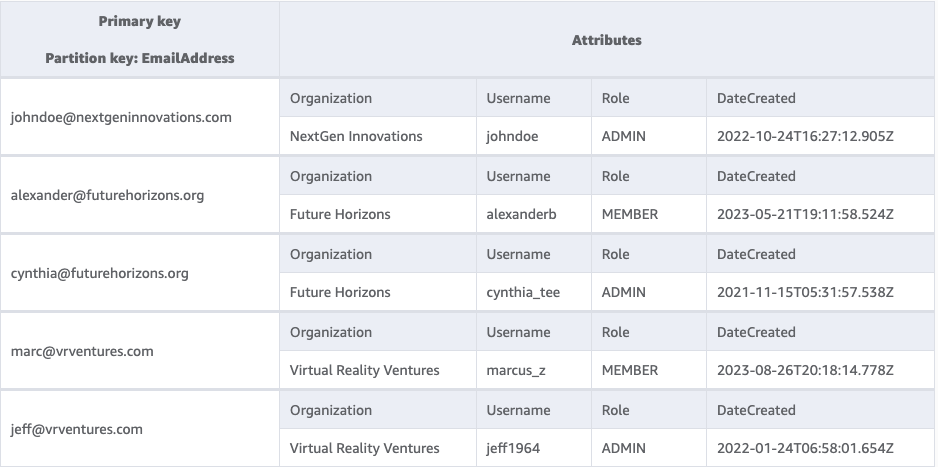

They give you P01, P1, P3, P5, P10, P15, P25, P50, P75, P85, P90, P95, P97, P99 and P999. P01 is the 0.1th percentile, P999 the 99.9th percentile. At the extremes the percentiles are very close together, but in the middle there’s a huge jump from 25 to 50 to 75.

I wanted to show percentile values at arbitrary times as at least full integers, i.e. the 47th percentile instead of “between 25 and 50”. I might also show this position with a colored line on the distribution curve these percentile values represent.

It turns out these height values are “normally distributed”, on a curve that looks a bit like a bell, hence the term “bell curve”. As a programmer, I understand that as a kind of data compression. You only need to know the mean value and the standard deviation, and from those you can draw the curve, instead of interpolating between the individual percentile values.
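The “compression” intuition can be checked directly. With only a mean μ and a standard deviation σ, the cumulative normal distribution gives the share of children below any height. Below is a minimal sketch using `erfc` from Foundation; `normalCDF` is my own name for the helper. It is applied to the month-0 values (M = 49.1477, SD = 1.8627) from the WHO table in the next section.

```swift
import Foundation

/// Cumulative normal distribution: the fraction of the population below `x`
/// for a normal curve with mean `mu` and standard deviation `sigma`.
func normalCDF(_ x: Double, mu: Double, sigma: Double) -> Double {
    0.5 * erfc(-(x - mu) / (sigma * 2.0.squareRoot()))
}

// Month 0 (birth), girls: mean 49.1477 cm, SD 1.8627 cm.
let mu = 49.1477, sigma = 1.8627
print(normalCDF(49.1, mu: mu, sigma: sigma)) // just under 0.5, matching P50 = 49.1
print(normalCDF(52.7, mu: mu, sigma: sigma)) // about 0.97, matching P97 = 52.7
```

The two numbers reproduce the tabulated P50 and P97 columns closely, which is what makes interpolating μ and σ attractive compared to interpolating fifteen percentile columns.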

The second, smaller issue is that the WHO provides data for full months only. To determine the normal distribution curve for arbitrary times between months, we need to interpolate between the month data before and after that time.

With these questions I turned to Stack Overflow and Math Stack Exchange, hoping that somebody could help out this statistics noob. Here’s what I posted…

The Problem

Given is the length percentile data the WHO has published for girls, i.e. length in cm at certain months. For example, at birth the 50th percentile is 49.1 cm.

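| Month | L | M | S | SD | P01 | P1 | P3 | P5 | P10 | P15 | P25 | P50 | P75 | P85 | P90 | P95 | P97 | P99 | P999 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 0 | 1 | 49.1477 | 0.0379 | 1.8627 | 43.4 | 44.8 | 45.6 | 46.1 | 46.8 | 47.2 | 47.9 | 49.1 | 50.4 | 51.1 | 51.5 | 52.2 | 52.7 | 53.5 | 54.9 |
| 1 | 1 | 53.6872 | 0.0364 | 1.9542 | 47.6 | 49.1 | 50 | 50.5 | 51.2 | 51.7 | 52.4 | 53.7 | 55 | 55.7 | 56.2 | 56.9 | 57.4 | 58.2 | 59.7 |
| 2 | 1 | 57.0673 | 0.03568 | 2.0362 | 50.8 | 52.3 | 53.2 | 53.7 | 54.5 | 55 | 55.7 | 57.1 | 58.4 | 59.2 | 59.7 | 60.4 | 60.9 | 61.8 | 63.4 |
| 3 | 1 | 59.8029 | 0.0352 | 2.1051 | 53.3 | 54.9 | 55.8 | 56.3 | 57.1 | 57.6 | 58.4 | 59.8 | 61.2 | 62 | 62.5 | 63.3 | 63.8 | 64.7 | 66.3 |

P01 is the 0.1% percentile, P1 the 1% percentile and P50 the 50% percentile.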

Say I have a certain (possibly fractional) month, for example 2.3 months. (A height measurement is taken a certain number of days after birth, and you can divide that by 30.4375 to get a fractional month.)

How would I go about approximating the percentile for a specific height at a fractional month? I.e. instead of just seeing it “next to P50”, I want to be able to say that it’s about “P62”.

One approach I thought of is linear interpolation. First, interpolate all fixed percentile values between month 2 and month 3. Then interpolate linearly between the two time-interpolated percentile values the measurement falls between, e.g. P50 and P75.
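The two-step linear approach from the question might look like this in Swift. The month 3 and month 4 rows are the WHO values used later in this article. The function names are hypothetical, and this is only the linear approximation under discussion, not the more accurate method.

```swift
/// Percentile levels of the WHO columns P01 ... P999.
let levels: [Double] = [0.1, 1, 3, 5, 10, 15, 25, 50, 75, 85, 90, 95, 97, 99, 99.9]

/// WHO girls' length in cm for months 3 and 4, one value per level.
let month3: [Double] = [53.3, 54.9, 55.8, 56.3, 57.1, 57.6, 58.4, 59.8, 61.2, 62.0, 62.5, 63.3, 63.8, 64.7, 66.3]
let month4: [Double] = [55.4, 57.1, 58.0, 58.5, 59.3, 59.8, 60.6, 62.1, 63.5, 64.3, 64.9, 65.7, 66.2, 67.1, 68.8]

/// Step 1: interpolate every percentile column between two month rows.
/// `t` is the fraction of the way from `before` to `after` (0...1).
func interpolateRow(_ before: [Double], _ after: [Double], t: Double) -> [Double] {
    zip(before, after).map { $0 * (1 - t) + $1 * t }
}

/// Step 2: find the two columns bracketing `height` and interpolate
/// linearly between their percentile levels. Returns nil outside P01...P999.
func linearPercentile(height: Double, row: [Double]) -> Double? {
    for i in 0..<(row.count - 1) where row[i] <= height && height <= row[i + 1] {
        let span = row[i + 1] - row[i]
        guard span > 0 else { return levels[i] }
        let fraction = (height - row[i]) / span
        return levels[i] * (1 - fraction) + levels[i + 1] * fraction
    }
    return nil
}

// Erika: 119 days is about 3.91 months, 60.5 cm.
let row = interpolateRow(month3, month4, t: 0.91)
print(linearPercentile(height: 60.5, row: row) ?? .nan) // about 26.64, i.e. P27
```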

What I fear is that, because this is a bell curve, the linear values near the middle might be too far off to be useful.

So I’m wondering: is there some formula, e.g. a quad curve, that you could use with the fixed percentile values to get an exact value on this curve for a given measurement?

This bell curve is a normal distribution, and I suppose there’s a formula by which you can get values on the curve. The temporal interpolation can probably still be done linearly without causing much distortion.

My Solution

I did get some responses. They ranged from useless to possibly correct, but to me as a math outsider they didn’t help me achieve my goal. So I set out to research how to achieve the result myself.

I worked through the question based on two examples, namely my two daughters.

ELISE: 49 days / 30.4375 = 1.61 months, 60 cm

So that’s between month 1 and month 2:

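| Month | P01 | P1 | P3 | P5 | P10 | P15 | P25 | P50 | P75 | P85 | P90 | P95 | P97 | P99 | P999 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 1 | 47.6 | 49.1 | 50 | 50.5 | 51.2 | 51.7 | 52.4 | 53.7 | 55 | 55.7 | 56.2 | 56.9 | 57.4 | 58.2 | 59.7 |
| 2 | 50.8 | 52.3 | 53.2 | 53.7 | 54.5 | 55 | 55.7 | 57.1 | 58.4 | 59.2 | 59.7 | 60.4 | 60.9 | 61.8 | 63.4 |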

Subtract the lower month: 1.61 - 1 = 0.61. So the value is 61% of the way to month 2. I can get a percentile row for it by linear interpolation. For each percentile, I interpolate between the values in the month rows before and after it.

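```swift
// e.g. for P01
let p1 = 47.6   // P01 at month 1
let p2 = 50.8   // P01 at month 2
let t = 0.61    // fraction of the way from month 1 to month 2
let p = p1 * (1.0 - t) + p2 * t   // 18.564 + 30.988 = 49.552
```

I did that in Numbers to get the values for all percentile columns.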

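| Month | P01 | P1 | P3 | P5 | P10 | P15 | P25 | P50 | P75 | P85 | P90 | P95 | P97 | P99 | P999 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 1.61 | 49.552 | 51.052 | 51.952 | 52.452 | 53.213 | 53.713 | 54.413 | 55.774 | 57.074 | 57.835 | 58.335 | 59.035 | 59.535 | 60.396 | 61.957 |

First, I tried linear interpolation: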

60 cm is between 59.535 (P97) and 60.396 (P99).

It is 0.465 away from the lower and 0.396 away from the higher value.

0.465 is 54% of the distance between them (0.861).

(1 - 0.54) * 97 + 0.54 * 99 = 44.62 + 53.46 = 98.08, rounded P98

It turns out that this is a bad example.

At the extremes the percentiles are very closely spaced, so linear interpolation gives results similar to the bell curve. In the middle, linear interpolation would be too inaccurate.

Let’s do a better example, this time with my second daughter.

ERIKA: 119 days / 30.4375 = 3.91 months, 60.5 cm

We interpolate between month 3 and month 4:

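| Month | P01 | P1 | P3 | P5 | P10 | P15 | P25 | P50 | P75 | P85 | P90 | P95 | P97 | P99 | P999 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 3 | 53.3 | 54.9 | 55.8 | 56.3 | 57.1 | 57.6 | 58.4 | 59.8 | 61.2 | 62.0 | 62.5 | 63.3 | 63.8 | 64.7 | 66.3 |
| 4 | 55.4 | 57.1 | 58.0 | 58.5 | 59.3 | 59.8 | 60.6 | 62.1 | 63.5 | 64.3 | 64.9 | 65.7 | 66.2 | 67.1 | 68.8 |
| 3.91 | 55.211 | 56.902 | 57.802 | 58.302 | 59.102 | 59.602 | 60.402 | 61.893 | 63.293 | 64.093 | 64.684 | 65.484 | 65.984 | 66.884 | 68.575 |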

Again, let’s try linear interpolation:

60.5 cm is between 60.402 (P25) and 61.893 (P50).

0.098 is 6.6% of the distance 1.491.

P = 25 * (1 - 0.066) + 50 * 0.066 = 23.35 + 3.3 = 26.65 // rounds to P27

To compare that to the bell curve, I used an online calculator/plotter. It needed a mean and a standard deviation, which I think I found in the left-most columns of the percentile table. But I also needed to interpolate these for month 3.91:

| Month | L | M | S | SD |
|---|---|---|---|---|
| 3 | 1.0 | 59.8029 | 0.0352 | 2.1051 |
| 4 | 1.0 | 62.0899 | 0.03486 | 2.1645 |
| 3.91 | 1.0 | 61.88407 | 0.0348906 | 2.159154 |

I don’t know what L and S mean, but M probably means MEAN and SD probably means Standard Deviation.
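For what it’s worth, the WHO growth standards use the LMS method. L is the Box-Cox power, M the median and S the coefficient of variation. A measurement x maps to the z-score z = ((x / M)^L - 1) / (L * S). For length, L is 1 in every row, so this reduces to z = (x - M) / (M * S). That is a plain normal distribution whose median M is also its mean, and whose SD equals M * S (e.g. 49.1477 * 0.0379 ≈ 1.8627 at birth). Here is a sketch of that formula; `lmsZScore` is a hypothetical helper name.

```swift
import Foundation

/// z-score from the WHO LMS parameters:
/// z = ((x / M)^L - 1) / (L * S), or ln(x / M) / S when L == 0.
func lmsZScore(_ x: Double, l: Double, m: Double, s: Double) -> Double {
    if l == 0 {
        return log(x / m) / s
    }
    return (pow(x / m, l) - 1) / (l * s)
}

// Interpolated values for month 3.91 from the table above.
let z = lmsZScore(60.5, l: 1.0, m: 61.88407, s: 0.0348906)
print(z) // about -0.641, the same as (x - M) / SD with SD = 2.159154
```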

Plugging these into the online plotter…

μ = 61.88407

σ = 2.159154

x = 60.5

The online plotter gives me a result of P(X < x) = 0.26075, rounded P26.

This is far enough from the P27 I arrived at by linear interpolation to warrant a more accurate approach.

Z-Score Tables

Searching around, I found that if you convert a length value into a z-score, you can then look up the percentile in a table. I also found this great explanation of z-scores.

The z-score is the number of standard deviations a certain data point is away from the mean.

So I’m trying to achieve the same result as above with the formula:

z = (x - M) / SD

(60.5 - 61.88407) / 2.159154 = -0.641

Then I was able to convert that into a percentile by consulting a z-score table.

Looking up -0.6 vertically on the left side and then 0.04 horizontally, I get 0.26109, which also rounds to P26. It is ever so slightly more than the calculator’s value, because the table only resolves z to two decimals and -0.641 lies just below -0.64.

How to do this in Swift?

Granted, it would be simple enough to implement such a percentile lookup table in Swift. But I had a feeling that I could get a more accurate result with less work, so I looked around for a Swift package.

Indeed, Sigma Swift Statistics seems to provide the needed statistics function “normal distribution”, described as:

Returns the normal distribution for the given values of x, μ and σ. The returned value is the area under the normal curve to the left of the value x.

I couldn’t find anything that mentioned a percentile as the result, but I added the Swift package and tried it out on the second example, to see what result I’d get for this value between P25 and P50:

```swift
let y = Sigma.normalDistribution(x: 60.5, μ: 61.88407, σ: 2.159154) // result 0.2607534748851712
```

That seems close enough to P26. It is slightly different from the z-table value `0.26109`, but it rounds to the same integer percentile value.

For the first example, between P97 and P99, we also get within rounding distance of P98, using μ and σ interpolated for month 1.61:

```swift
let y = Sigma.normalDistribution(x: 60, μ: 55.749061, σ: 2.00422) // result 0.9830388548349042
```

As a side note, I found it delightful to see Greek letters used for the parameters, a feature made possible by Swift’s Unicode support.

Conclusion

Math and statistics were the reason why I abandoned my university degree in computer science. I couldn’t see how they would benefit me “in real life” as a programmer.

Now, many years later, I occasionally find that a bit more knowledge in these matters would help me understand such unusual scenarios more quickly. Luckily, my internet searching skills can make up for what I lack in academic knowledge.

I seem to have the ingredients assembled to start working on this normal distribution chart, which will give interpolated percentile values for specific days between the month boundaries. I’ll give an update when I have built it, if you are interested.
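Here is a rough sketch of how those ingredients might fit together. It interpolates M and SD between the two month rows around the measurement day, evaluates the cumulative normal distribution, and rounds to an integer percentile. It uses Foundation’s `erfc` instead of `Sigma.normalDistribution`. The `MonthRow` type, the function names and the four hard-coded WHO rows (months 1 to 4, girls’ length) are assumptions for illustration.

```swift
import Foundation

/// One row of the WHO girls' length-for-age table (L is 1 for these rows).
struct MonthRow {
    let month: Int
    let median: Double   // M
    let sd: Double       // SD
}

let rows = [
    MonthRow(month: 1, median: 53.6872, sd: 1.9542),
    MonthRow(month: 2, median: 57.0673, sd: 2.0362),
    MonthRow(month: 3, median: 59.8029, sd: 2.1051),
    MonthRow(month: 4, median: 62.0899, sd: 2.1645),
]

/// Area under the normal curve to the left of x.
func normalCDF(_ x: Double, mu: Double, sigma: Double) -> Double {
    0.5 * erfc(-(x - mu) / (sigma * 2.0.squareRoot()))
}

/// Percentile (0...100) for a height measured `days` after birth,
/// or nil if the day count falls outside the hard-coded rows.
func percentile(days: Int, height: Double) -> Double? {
    let month = Double(days) / 30.4375
    guard let i = rows.firstIndex(where: { Double($0.month) > month }), i > 0 else {
        return nil
    }
    let before = rows[i - 1], after = rows[i]
    let t = month - Double(before.month)   // fraction of the way to the next month
    let mu = before.median * (1 - t) + after.median * t
    let sigma = before.sd * (1 - t) + after.sd * t
    return 100 * normalCDF(height, mu: mu, sigma: sigma)
}

if let elisePercentile = percentile(days: 49, height: 60) {
    print(elisePercentile.rounded())   // 98.0
}
if let erikaPercentile = percentile(days: 119, height: 60.5) {
    print(erikaPercentile.rounded())   // 26.0
}
```

Interpolating only M and SD in time, rather than all fifteen percentile columns, keeps the data per month down to two numbers. The bell curve then supplies every integer percentile in between.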

Additionally printed on Medium.