A new report from cybersecurity company Netskope reveals details about attack campaigns abusing Microsoft Sway and CloudFlare Turnstile and leveraging QR codes to trick users into providing their Microsoft Office credentials to the phishing platform.

These campaigns have targeted victims in Asia and North America across multiple segments led by technology, manufacturing, and finance.

What is quishing?

QR codes are a convenient way to browse websites or access information without the need to enter any URL on a smartphone. But there is a risk in using QR codes: cybercriminals might abuse them to lead victims to malicious content.

This process, called “quishing,” involves redirecting victims to malicious websites or prompting them to download harmful content by scanning a QR code. Once victims are on the site, cybercriminals work to steal their personal and financial information. The design of QR codes makes it impossible for the user to know where the code will direct them after scanning.

Thomas Damonneville, head of anti-phishing company StalkPhish, told TechRepublic that quishing “is a growing trend” that “is very easy to use and makes it harder to check if the content is legitimate.”

Quishing attacks via Microsoft Sway

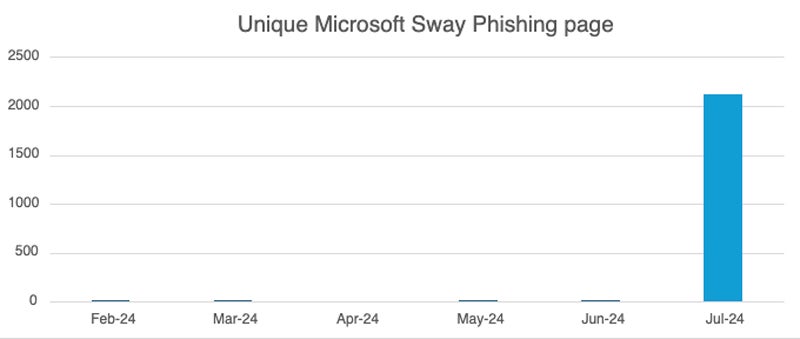

In July 2024, Netskope Threat Labs discovered a 2,000-fold increase in traffic to phishing pages via Microsoft Sway. The majority of the malicious pages used QR codes.

Microsoft Sway is an online app from Microsoft Office that comes free and enables users to easily create presentations or other web-based content. The app being free of charge makes it an attractive target for cybercriminals.

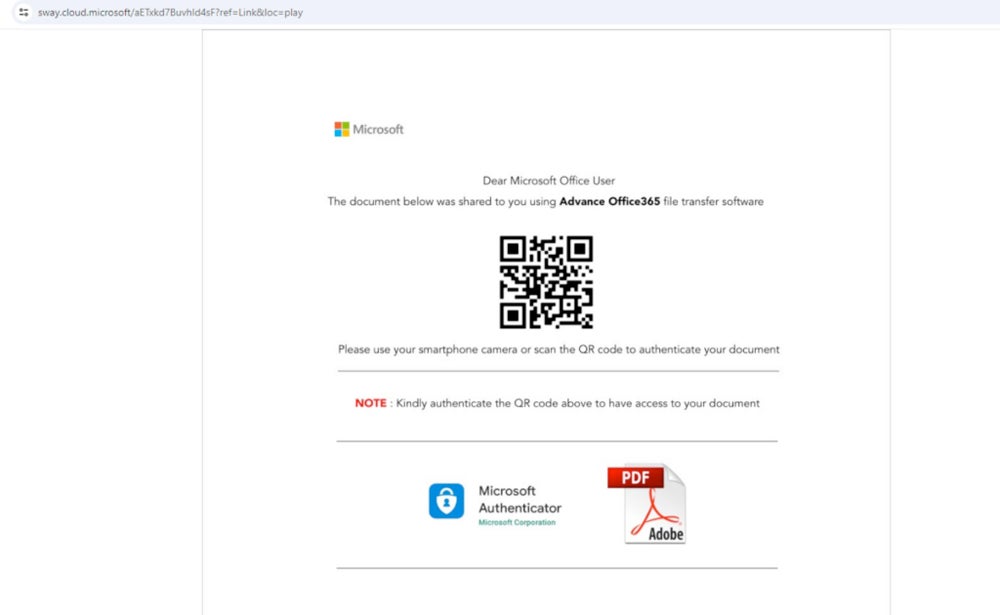

In the attack campaigns uncovered by Netskope’s researcher Jan Michael Alcantara, victims are being targeted with Microsoft Sway pages that lead to phishing attempts for Microsoft Office credentials.

Netskope’s research does not mention how the fraudulent links were sent to victims. However, it is possible to spread these links via email, social networks, SMS, or instant messaging software.

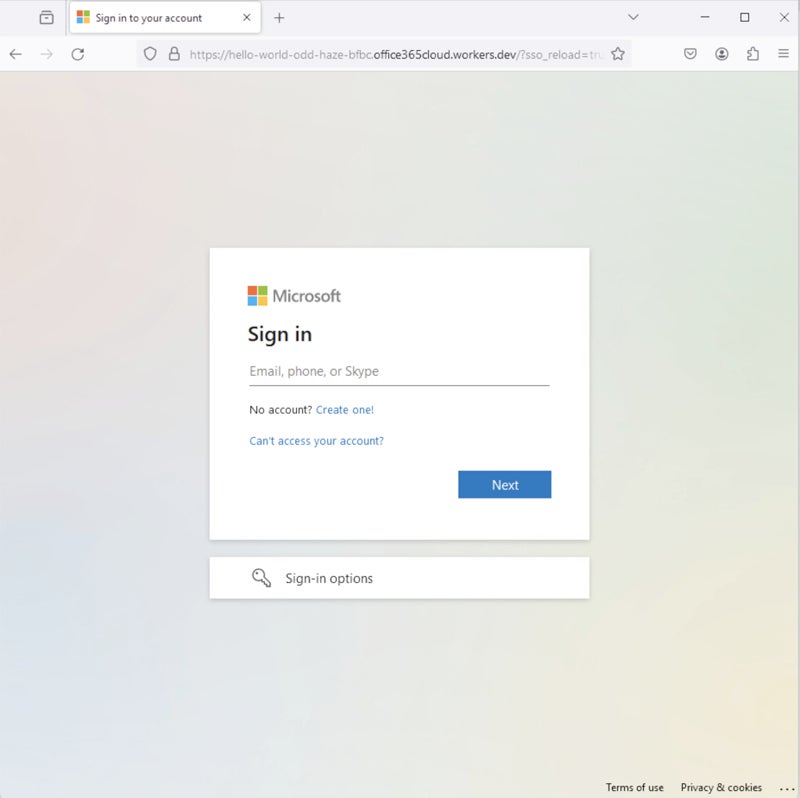

The final payload looks like the legitimate Microsoft Office login page, as exposed in a May 2024 publication from the same researcher.

Stealthier attack using CloudFlare Turnstile

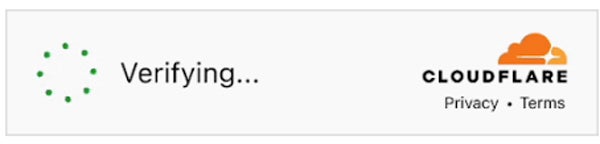

CloudFlare’s Turnstile is a free tool that replaces captchas, which have been exploited in reported attack campaigns. This legitimate service allows website owners to easily add the necessary Turnstile code to their content, enabling users to simply click on a verification code instead of solving a captcha.

From an attacker perspective, using this free tool is appealing because it requires users to click on a CloudFlare Turnstile before being redirected to the phishing page. This adds a layer of protection against detection for the attacker, as the final phishing payload is hidden from online URL scanners.

Attacker-in-the-middle phishing technique

Traditional phishing techniques typically collect credentials before showing an error page or redirecting the user to the legitimate login page. This approach makes users believe they have entered incorrect credentials, likely leaving them unaware of the fraud.

The attacker-in-the-middle phishing technique is more discreet. The user’s credentials are collected and immediately used to log into the legitimate service. This method, also called transparent phishing, allows the user to be successfully logged in after the fraudulent credential theft, making the attack less noticeable.

Malicious QR code detection difficulties

“Nobody can read a QR code with his own eyes,” Damonneville said. “You can only scan it with the appropriate device, a smartphone. Some links can be so long that you can’t check the whole link, if you check it … But who checks links?”

Text-only-based detections are also ineffective against QR codes as they are images. There is also no widespread standard for verifying the authenticity of a QR code. Security mechanisms such as digital signatures for QR codes are not commonly implemented, making it difficult to verify the source or integrity of the content.
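
The limitation of text-only detection can be illustrated with a minimal Python sketch. It assumes a hypothetical filter that only extracts URLs from the text of a message; the function name `extract_urls`, the simplified regular expression, and both sample message bodies are illustrative assumptions, not details from Netskope's report.

```python
import re

# Simplified URL pattern, for illustration only; real filters parse more strictly.
URL_PATTERN = re.compile(r"https?://[^\s\"'<>]+", re.IGNORECASE)


def extract_urls(body: str) -> list[str]:
    """Return every http(s) URL that appears as plain text in the message body."""
    return URL_PATTERN.findall(body)


# Hypothetical message with a visible link: a text-based filter can inspect it.
text_message = "Please review the document at https://example.com/login today."

# Hypothetical message where the link exists only inside an embedded QR image.
qr_message = '<p>Scan the code to view the document.</p><img src="cid:qr-code.png">'

print(extract_urls(text_message))  # one URL found in the text
print(extract_urls(qr_message))    # nothing for a text-only filter to analyze
```

The second message yields no URL at all, so a filter of this kind would need an image-decoding step before it could evaluate the link.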

How can you prevent QR code phishing?

Many QR code readers provide a preview of the URL, though, enabling users to see the URL before opening it. Any suspicion about the URL should lead the user not to use the QR code. Additionally:

- QR codes leading to actions such as logging in or providing information should raise suspicion and should be carefully analyzed.

- Security solutions also might help, as they can detect phishing URLs. URLs should always be scanned by such a tool.

- Payments should not be done via QR code unless you are confident that it is legitimate.
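
The URL preview check described above can be sketched in Python. This is a minimal sketch, assuming the QR code has already been decoded to a URL string; the function `review_qr_url`, the keyword list, and the set of expected login hosts are illustrative assumptions that would need to be adapted to the services an organization really uses.

```python
from urllib.parse import urlparse

# Assumption: hosts where the organization expects to see a Microsoft sign-in page.
EXPECTED_LOGIN_HOSTS = {"login.microsoftonline.com", "login.live.com"}

# Assumption: path keywords suggesting the page asks for credentials.
LOGIN_KEYWORDS = ("login", "signin", "sign-in", "auth")


def review_qr_url(url: str) -> list[str]:
    """Return a list of warnings for a URL decoded from a QR code."""
    warnings = []
    parsed = urlparse(url)
    host = (parsed.hostname or "").lower()

    if parsed.scheme != "https":
        warnings.append("not served over HTTPS")
    if not host:
        warnings.append("no host name found")

    # A credential prompt on a host outside the expected list deserves a closer look.
    looks_like_login = any(k in parsed.path.lower() for k in LOGIN_KEYWORDS)
    if looks_like_login and host not in EXPECTED_LOGIN_HOSTS:
        warnings.append("login-like page on an unexpected host")
    return warnings


print(review_qr_url("http://example.com/signin"))
```

An empty list only means that none of these simple checks fired; it does not prove that the URL is safe, which is why scanning with a dedicated security tool is still recommended.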

Microsoft Sway is not the only legitimate product that may be used by cybercriminals to host phishing pages.

“We regularly observe legitimate sites or applications being used to host quishing or phishing, including Github, Gitbooks or Google Docs, for example, on a daily basis,” Damonneville said. “Not to mention all the URL shorteners on the market, or free hosting sites, widely used to hide a URL easily.”

This once again reinforces the idea that users’ awareness needs to be raised and employees need to be trained to distinguish a suspicious URL from a legitimate one.
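
The observation about legitimate platforms can be turned into a simple check that supports such training: a link is flagged when it points to a shared hosting platform or a URL shortener, since the domain alone says little about who wrote the content. The Python sketch below is illustrative only; the function `hosting_category` is hypothetical, and the two host lists are small, incomplete examples chosen as assumptions, not a vetted blocklist.

```python
from urllib.parse import urlparse

# Assumption: a few platforms where anyone can publish content (incomplete list).
SHARED_HOSTING = ("sway.cloud.microsoft", "sway.office.com", "github.io",
                  "gitbook.io", "docs.google.com")

# Assumption: a few well-known URL shorteners (incomplete list).
SHORTENERS = ("bit.ly", "tinyurl.com", "t.co")


def matches(host: str, domains: tuple[str, ...]) -> bool:
    """True if host equals one of the domains or is a subdomain of one."""
    return any(host == d or host.endswith("." + d) for d in domains)


def hosting_category(url: str) -> str:
    """Classify a URL as 'shortener', 'shared-hosting', or 'other'."""
    host = (urlparse(url).hostname or "").lower()
    if matches(host, SHORTENERS):
        return "shortener"
    if matches(host, SHARED_HOSTING):
        return "shared-hosting"
    return "other"


print(hosting_category("https://sway.cloud.microsoft/abc"))
```

A match is a reason for extra scrutiny rather than proof of abuse, since these services mostly host legitimate content.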

Disclosure: I work for Trend Micro, but the views expressed in this article are mine.