Convolutional neural networks (CNNs) are great: they’re able to detect features in an image no matter where. Well, not exactly. They’re not indifferent to just any kind of movement. Shifting up or down, or left or right, is fine; rotating around an axis is not. That’s because of how convolution works: traverse by row, then traverse by column (or the other way round). If we want “more” (e.g., successful detection of an upside-down object), we need to extend convolution to an operation that is rotation-equivariant. An operation that is equivariant to some type of action will not only register the moved feature per se, but also keep track of which concrete action made it appear where it is.

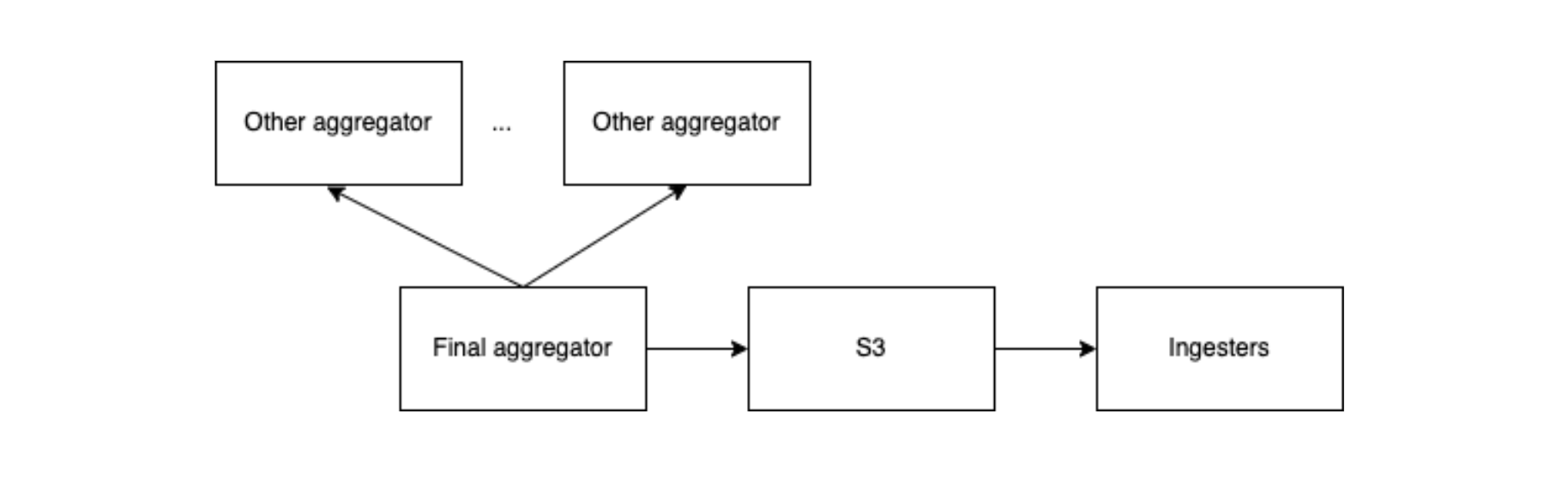

This is the second post in a series that introduces group-equivariant CNNs (GCNNs). The first was a high-level introduction to why we’d want them, and how they work. There, we introduced the key player, the symmetry group, which specifies what kinds of transformations are to be treated equivariantly. If you haven’t, please take a look at that post first, since here I’ll make use of terminology and concepts it introduced.

Today, we code a simple GCNN from scratch. Code and presentation tightly follow a notebook provided as part of University of Amsterdam’s 2022 Deep Learning Course. They can’t be thanked enough for making available such excellent learning materials.

In what follows, my intent is to explain the general thinking, and how the resulting architecture is built up from smaller modules, each of which is assigned a clear purpose. For that reason, I won’t reproduce all the code here; instead, I’ll make use of the package gcnn. Its methods are heavily annotated; so to see some details, don’t hesitate to look at the code.

As of today, gcnn implements one symmetry group: \(C_4\), the one that serves as a running example throughout post one. It is straightforwardly extensible, though, making use of class hierarchies throughout.

Step 1: The symmetry group \(C_4\)

In coding a GCNN, the first thing we need to provide is an implementation of the symmetry group we’d like to use. Here, it is \(C_4\), the four-element group that rotates by 90 degrees.

We can ask gcnn to create one for us, and inspect its elements.

# remotes::install_github("skeydan/gcnn")
library(gcnn)
library(torch)

C_4 <- CyclicGroup(order = 4)
elems <- C_4$elements()
elems

torch_tensor
 0.0000
 1.5708
 3.1416
 4.7124
[ CPUFloatType{4} ]

Elements are represented by their respective rotation angles: \(0\), \(\frac{\pi}{2}\), \(\pi\), and \(\frac{3 \pi}{2}\).

Groups are aware of the identity, and know how to construct an element’s inverse:

C_4$identity

g1 <- elems[2]
C_4$inverse(g1)

torch_tensor
 0
[ CPUFloatType{1} ]

torch_tensor
4.71239
[ CPUFloatType{} ]
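
To see what such a group object has to provide, here is a minimal base-R sketch. It is not gcnn’s implementation: the helper names c4_elements() and c4_inverse() are made up for illustration, and plain numeric vectors stand in for torch tensors.

```r
# Hypothetical base-R stand-in for C_4: elements are rotation angles in [0, 2*pi).
c4_elements <- function(order = 4) 2 * pi * (0:(order - 1)) / order

# The identity is the rotation by zero.
c4_identity <- 0

# The inverse undoes a rotation: g + inverse(g) equals 0 modulo 2*pi.
c4_inverse <- function(g) (2 * pi - g) %% (2 * pi)

elems_r <- c4_elements()
round(elems_r, 4)                 # 0.0000 1.5708 3.1416 4.7124
round(c4_inverse(elems_r[2]), 5)  # 4.71239
```

The rounded values agree with what gcnn printed: the inverse of a quarter turn is a three-quarter turn.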

Here, what we care about most is the group elements’ action. Implementation-wise, we need to distinguish between them acting on each other, and their action on the vector space \(\mathbb{R}^2\), where our input images live. The former part is the easy one: It may simply be implemented by adding angles. In fact, this is what gcnn does when we ask it to let g1 act on g2:

g2 <- elems[3]

# in C_4$left_action_on_H(), H stands for the symmetry group
C_4$left_action_on_H(torch_tensor(g1)$unsqueeze(1), torch_tensor(g2)$unsqueeze(1))

torch_tensor
 4.7124
[ CPUFloatType{1,1} ]

What’s with the unsqueeze()s? Since \(C_4\)’s ultimate raison d’être is to be part of a neural network, left_action_on_H() works with batches of elements, not scalar tensors.
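
The composition rule is easy to imitate in base R. The sketch below assumes, as stated above, that one element acting on another amounts to adding angles; wrapping the sum around at \(2\pi\) is my assumption for keeping results inside the group. Function names are hypothetical, and outer() stands in for the batching.

```r
# Assumed rule: composing two rotations adds their angles, modulo 2*pi.
left_action_on_group <- function(g, h) (g + h) %% (2 * pi)

# Batched version: combine every element of gs with every element of hs.
left_action_batch <- function(gs, hs) outer(gs, hs, left_action_on_group)

g1_r <- pi / 2
g2_r <- pi
round(left_action_on_group(g1_r, g2_r), 4)  # 4.7124

# All pairwise compositions of the four group elements, as a 4 x 4 table.
angles <- 2 * pi * (0:3) / 4
composition_table <- left_action_batch(angles, angles)
dim(composition_table)  # 4 4
```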

Things are a bit less straightforward where the group action on \(\mathbb{R}^2\) is concerned. Here, we need the concept of a group representation. This is an involved topic, which we won’t go into here. In our current context, it works about like this: We have an input signal, a tensor we’d like to operate on in some way. (That “some way” will be convolution, as we’ll see soon.) To render that operation group-equivariant, we first have the representation apply the inverse group action to the input. That accomplished, we go on with the operation as though nothing had happened.
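
One common way to write this down uses notation that is an assumption here, since the post itself introduces no symbols: \(f\) is the input signal on \(\mathbb{R}^2\), \(g\) a group element, \(\Phi\) the operation, and \(\mathcal{L}_g\), \(\mathcal{L}'_g\) the actions of \(g\) on input and output, respectively. The first line says that the representation acts on the signal through the inverse of \(g\); the second states equivariance, with invariance as the special case where the output does not change at all.

```latex
[\mathcal{L}_g f](x) = f(g^{-1} \cdot x), \qquad x \in \mathbb{R}^2,\ g \in C_4

\Phi(\mathcal{L}_g f) = \mathcal{L}'_g\, \Phi(f) \quad \text{(equivariance)}, \qquad
\Phi(\mathcal{L}_g f) = \Phi(f) \quad \text{(invariance)}
```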

To give a concrete example, let’s say the operation is a measurement. Imagine a runner, standing at the foot of some mountain trail, ready to run up the climb. We’d like to record their height. One option we have is to take the measurement, then let them run up. Our measurement will be as valid up the mountain as it was down here. Alternatively, we might be polite and not make them wait. Once they’re up there, we ask them to come down, and when they’re back, we measure their height. The result is the same: Body height is equivariant (more than that: invariant, even) to the action of running up or down. (Of course, height is a pretty dull measure. But something more interesting, such as heart rate, would not have worked so well in this example.)

Returning to the implementation, it turns out that group actions are encoded as matrices. There is one matrix for each group element. For \(C_4\), the so-called standard representation is a rotation matrix:

\[
\begin{bmatrix} \cos(\theta) & -\sin(\theta) \\ \sin(\theta) & \cos(\theta) \end{bmatrix}
\]
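
As a quick illustration, the matrix can be built and applied in base R. This is just a sketch; rotation_matrix() is a hypothetical helper, not part of gcnn.

```r
# 2 x 2 rotation matrix for angle theta (matrix() fills column by column).
rotation_matrix <- function(theta) {
  matrix(c(cos(theta), sin(theta), -sin(theta), cos(theta)), nrow = 2)
}

# A quarter turn maps the unit vector (1, 0) to (0, 1), up to rounding error.
v <- c(1, 0)
rotated <- as.vector(rotation_matrix(pi / 2) %*% v)
round(rotated, 10)

# A rotation followed by its inverse gives the identity matrix.
round_trip <- rotation_matrix(pi / 2) %*% rotation_matrix(3 * pi / 2)
round(round_trip, 10)
```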

In gcnn, the function applying that matrix is left_action_on_R2(). Like its sibling, it is designed to work with batches (of group elements as well as \(\mathbb{R}^2\) vectors). Technically, what it does is rotate the grid the image is defined on, and then, re-sample the image. To make this more concrete, that method’s code looks about as follows.

Here is a goat.

img_path <- system.file("imgs", "z.jpg", package = "gcnn")
img <- torchvision::base_loader(img_path) |> torchvision::transform_to_tensor()
img$permute(c(2, 3, 1)) |> as.array() |> as.raster() |> plot()

First, we call C_4$left_action_on_R2() to rotate the grid.

# Grid shape is [2, 1024, 1024], for a 2d, 1024 x 1024 image.
img_grid_R2 <- torch::torch_stack(torch::torch_meshgrid(
  list(
    torch::torch_linspace(-1, 1, dim(img)[2]),
    torch::torch_linspace(-1, 1, dim(img)[3])
  )
))

# Transform the image grid with the matrix representation of some group element.
transformed_grid <- C_4$left_action_on_R2(C_4$inverse(g1)$unsqueeze(1), img_grid_R2)

Second, we re-sample the image on the transformed grid. The goat now looks up to the sky.

transformed_img <- torch::nnf_grid_sample(
  img$unsqueeze(1), transformed_grid,
  align_corners = TRUE, mode = "bilinear", padding_mode = "zeros"
)
transformed_img[1,..]$permute(c(2, 3, 1)) |> as.array() |> as.raster() |> plot()
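
The two steps, rotating the grid and re-sampling, can be imitated in a few lines of base R. This is only a sketch under simplifying assumptions: a square, single-channel image stored as a matrix, nearest-neighbour lookup instead of the bilinear interpolation used by nnf_grid_sample(), and a hypothetical helper name, rotate_by_resampling(). Which way the result turns depends on how axes are mapped to rows and columns, so the sketch makes no claim about matching gcnn’s orientation.

```r
rotate_by_resampling <- function(img, theta) {
  n <- nrow(img)  # assumes a square matrix
  # Normalized coordinates in [-1, 1]; the row coordinate varies fastest,
  # which matches R's column-major storage of matrices.
  coords <- seq(-1, 1, length.out = n)
  grid <- as.matrix(expand.grid(row = coords, col = coords))
  rot <- matrix(c(cos(theta), sin(theta), -sin(theta), cos(theta)), nrow = 2)
  # Rotate every grid point; these are the locations to read the input from.
  src <- grid %*% t(rot)
  # Map normalized coordinates back to the nearest (row, column) index.
  to_index <- function(v) round((v + 1) / 2 * (n - 1)) + 1
  idx <- cbind(to_index(src[, 1]), to_index(src[, 2]))
  inside <- idx[, 1] >= 1 & idx[, 1] <= n & idx[, 2] >= 1 & idx[, 2] <= n
  # Locations whose source index falls outside the image stay zero.
  values <- numeric(n * n)
  values[inside] <- img[idx[inside, , drop = FALSE]]
  matrix(values, n, n)
}

img_small <- matrix(1:9, nrow = 3)
quarter <- rotate_by_resampling(img_small, pi / 2)

# Four quarter turns bring the image back to where it started.
full <- Reduce(function(m, i) rotate_by_resampling(m, pi / 2), 1:4, img_small)
all.equal(full, img_small)
```

For quarter turns, every grid point lands exactly on another grid point, so no interpolation is needed. That is one reason \(C_4\) is such a convenient first example.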

Step 2: The lifting convolution

We want to make use of existing, efficient torch functionality as much as possible. Concretely, we want to use nn_conv2d(). What we need, though, is a convolution kernel that is equivariant not just to translation, but also to the action of \(C_4\). This can be achieved by having one kernel for each possible rotation.

Implementing that idea is exactly what LiftingConvolution does. The principle is the same as before: First, the grid is rotated, and then, the kernel (weight matrix) is re-sampled to the transformed grid.
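
For \(C_4\), “one kernel for each possible rotation” can be pictured without any re-sampling machinery, since quarter turns of a square matrix are exact. The base-R sketch below stacks the four rotated copies along a new leading axis; rot90() and rotated_kernel_stack() are hypothetical helpers, and gcnn itself works with grids and torch tensors instead.

```r
# Rotate a matrix by a quarter turn (counter-clockwise as printed).
rot90 <- function(m) t(m)[ncol(m):1, , drop = FALSE]

# Stack the four rotated copies of a square kernel along a new first axis.
rotated_kernel_stack <- function(kernel) {
  stack <- array(0, dim = c(4, dim(kernel)))
  k <- kernel
  for (r in 1:4) {
    stack[r, , ] <- k
    k <- rot90(k)
  }
  stack
}

kernel <- matrix(seq_len(25), nrow = 5)
kernels <- rotated_kernel_stack(kernel)
dim(kernels)  # 4 5 5
```

A single set of weights thus gives rise to four kernels, which could then be handed to an ordinary convolution routine.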

Why, though, call this a lifting convolution? The usual convolution kernel operates on \(\mathbb{R}^2\); while our extended version operates on combinations of \(\mathbb{R}^2\) and \(C_4\). In math speak, it has been lifted to the semi-direct product \(\mathbb{R}^2 \rtimes C_4\).

lifting_conv <- LiftingConvolution(
  group = CyclicGroup(order = 4),
  kernel_size = 5,
  in_channels = 3,
  out_channels = 8
)

x <- torch::torch_randn(c(2, 3, 32, 32))
y <- lifting_conv(x)
y$shape

[1] 2 8 4 28 28

Since, internally, LiftingConvolution uses an additional dimension to realize the product of translations and rotations, the output is not four-, but five-dimensional.
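
What the additional dimension buys us can be checked on a toy example. The following base-R sketch is not gcnn’s code: it uses a single channel, exact quarter turns, and hypothetical helpers rot90(), xcorr2d() and lift(). It correlates an image with all four rotated copies of a kernel, and then verifies that rotating the input rotates every feature map and shifts the maps by one position along the group axis.

```r
rot90 <- function(m) t(m)[ncol(m):1, , drop = FALSE]

# Plain "valid" cross-correlation of a square image with a square kernel.
xcorr2d <- function(img, kernel) {
  k <- nrow(kernel)
  out_size <- nrow(img) - k + 1
  out <- matrix(0, out_size, out_size)
  for (i in seq_len(out_size)) {
    for (j in seq_len(out_size)) {
      out[i, j] <- sum(img[i:(i + k - 1), j:(j + k - 1)] * kernel)
    }
  }
  out
}

# Lifting: one feature map per rotated kernel; the result gains a group axis.
lift <- function(img, kernel) {
  out_size <- nrow(img) - nrow(kernel) + 1
  out <- array(0, dim = c(4, out_size, out_size))
  for (r in 1:4) {
    out[r, , ] <- xcorr2d(img, kernel)
    kernel <- rot90(kernel)
  }
  out
}

set.seed(1)
img <- matrix(sample(0:9, 64, replace = TRUE), 8, 8)
kernel <- matrix(sample(-2:2, 9, replace = TRUE), 3, 3)

y_plain <- lift(img, kernel)
y_rotated <- lift(rot90(img), kernel)
dim(y_plain)  # 4 6 6

# Equivariance: map r of the rotated input is the rotated map r - 1 of the original.
all.equal(y_rotated[2, , ], rot90(y_plain[1, , ]))  # TRUE
all.equal(y_rotated[1, , ], rot90(y_plain[4, , ]))  # TRUE
```

Nothing is lost when the input is rotated; the information just moves to a predictable place.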

Step 3: Group convolutions

Now that we’re in “group-extended space”, we can chain a number of layers where both input and output are group convolution layers. For example:

group_conv <- GroupConvolution(
  group = CyclicGroup(order = 4),
  kernel_size = 5,
  in_channels = 8,
  out_channels = 16
)

z <- group_conv(y)
z$shape

[1] 2 16 4 24 24
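
The spatial sizes printed so far are consistent with unpadded, stride-one convolutions. That reading is an inference from the shapes, not something checked against gcnn’s source; conv_out_size() is a hypothetical helper.

```r
# Output size of a "valid" convolution (no padding, stride 1).
conv_out_size <- function(input_size, kernel_size) input_size - kernel_size + 1

after_lifting <- conv_out_size(32, 5)           # 28
after_group <- conv_out_size(after_lifting, 5)  # 24

# Full shapes: batch, channels, group elements, height, width.
c(2, 8, 4, after_lifting, after_lifting)
c(2, 16, 4, after_group, after_group)
```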

All that remains to be done is package this up. That’s what gcnn::GroupEquivariantCNN() does.

Step 4: Group-equivariant CNN

We can call GroupEquivariantCNN() like so.

cnn <- GroupEquivariantCNN(
  group = CyclicGroup(order = 4),
  kernel_size = 5,
  in_channels = 1,
  out_channels = 1,
  num_hidden = 2, # number of group convolutions
  hidden_channels = 16 # number of channels per group conv layer
)

img <- torch::torch_randn(c(4, 1, 32, 32))
cnn(img)$shape

[1] 4 1

At casual glance, this GroupEquivariantCNN looks like any old CNN … weren’t it for the group argument.

Now, when we inspect its output, we see that the additional dimension is gone. That’s because after a sequence of group-to-group convolution layers, the module projects down to a representation that, for each batch item, retains channels only. It thus averages not just over locations, as we normally do, but over the group dimension as well. A final linear layer will then provide the requested classifier output (of dimension out_channels).
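
In terms of array shapes, the projection step can be sketched in base R as follows. The activations are random, the spatial size of 12 is arbitrary, and the weights of the linear layer are made up; only the bookkeeping of dimensions is the point here.

```r
set.seed(42)
# Hypothetical activations: batch 4, 16 channels, 4 rotations, 12 x 12 locations.
features <- array(rnorm(4 * 16 * 4 * 12 * 12), dim = c(4, 16, 4, 12, 12))

# Keep the batch and channel axes; average over group and spatial axes.
pooled <- apply(features, c(1, 2), mean)
dim(pooled)  # 4 16

# A final linear layer maps channels to the requested number of outputs.
out_channels <- 1
w <- matrix(rnorm(16 * out_channels), nrow = 16)
b <- rnorm(out_channels)
logits <- sweep(pooled %*% w, 2, b, "+")
dim(logits)  # 4 1
```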

And there we have the complete architecture. It is time for a real-world(ish) test.

Rotated digits!

The idea is to train two convnets, a “normal” CNN and a group-equivariant one, on the usual MNIST training set. Then, both are evaluated on an augmented test set where each image is randomly rotated by a continuous rotation between 0 and 360 degrees. We don’t expect GroupEquivariantCNN to be “perfect”, not if we equip it with \(C_4\) as a symmetry group. Strictly, with \(C_4\), equivariance extends over four positions only. But we do hope it will perform significantly better than the shift-equivariant-only standard architecture.
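
How far from those four positions does a randomly rotated digit typically sit? A small base-R simulation gives an idea. It assumes rotation angles drawn uniformly from 0 to 360 degrees, as described above; the variable names are just for illustration.

```r
set.seed(123)
# Hypothetical test-time rotations, drawn uniformly from [0, 360) degrees.
angles <- runif(10000, min = 0, max = 360)

# Angular distance to the nearest multiple of 90 degrees.
remainder <- angles %% 90
offset <- pmin(remainder, 90 - remainder)

range(offset)  # never more than 45 degrees
mean(offset)   # roughly half of that
```

So even in the worst case, a test image is at most 45 degrees away from an orientation the network handles exactly; the rest has to be learned.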

First, we prepare the data; in particular, the augmented test set.

dir <- "/tmp/mnist"

train_ds <- torchvision::mnist_dataset(
  dir,
  download = TRUE,
  transform = torchvision::transform_to_tensor
)

test_ds <- torchvision::mnist_dataset(
  dir,
  train = FALSE,
  transform = function(x) {
    x |>
      torchvision::transform_to_tensor() |>
      torchvision::transform_random_rotation(degrees = c(0, 360))
  }
)

train_dl <- dataloader(train_ds, batch_size = 128, shuffle = TRUE)
test_dl <- dataloader(test_ds, batch_size = 128)

How does it look?

test_images <- coro::collect(
  test_dl, 1
)[[1]]$x[1:32, 1, , ] |> as.array()

par(mfrow = c(4, 8), mar = rep(0, 4), mai = rep(0, 4))
test_images |>
  purrr::array_tree(1) |>
  purrr::map(as.raster) |>
  purrr::iwalk(~ {
    plot(.x)
  })

We first define and train a conventional CNN. It is as similar to GroupEquivariantCNN(), architecture-wise, as possible, and is given twice the number of hidden channels, so as to have comparable capacity overall.

default_cnn <- nn_module(
  "default_cnn",
  initialize = function(kernel_size, in_channels, out_channels, num_hidden, hidden_channels) {
    self$conv1 <- torch::nn_conv2d(in_channels, hidden_channels, kernel_size)
    self$convs <- torch::nn_module_list()
    for (i in 1:num_hidden) {
      self$convs$append(torch::nn_conv2d(hidden_channels, hidden_channels, kernel_size))
    }
    self$avg_pool <- torch::nn_adaptive_avg_pool2d(1)
    self$final_linear <- torch::nn_linear(hidden_channels, out_channels)
  },
  forward = function(x) {
    # convolution plus ReLU per layer, then global average pooling and the linear head
    x <- torch::nnf_relu(self$conv1(x))
    for (i in seq_len(length(self$convs))) {
      x <- torch::nnf_relu(self$convs[[i]](x))
    }
    x |>
      self$avg_pool() |>
      torch::torch_flatten(start_dim = 2) |>
      self$final_linear()
  }
)
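
Why would twice the number of channels make for comparable capacity? A rough weight count suggests an answer. It rests on an assumption about gcnn that I have not verified against its source: that a group convolution’s kernel extends over the group axis of its input as well, so that its weight tensor has one extra factor of four. Both helper functions are hypothetical, and biases are ignored.

```r
# Weight count of a standard 2d convolution layer.
conv_weights <- function(in_ch, out_ch, k) in_ch * out_ch * k^2

# Assumed weight count of a C_4 group convolution layer: as above,
# times the number of group elements.
group_conv_weights <- function(in_ch, out_ch, k, group_order = 4) {
  in_ch * out_ch * group_order * k^2
}

conv_weights(32, 32, 5)        # 25600
group_conv_weights(16, 16, 5)  # 25600
```

Under that assumption, a hidden layer with 32 channels in the standard network carries as many weights as a hidden group convolution with 16 channels.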

fitted <- default_cnn |>
  luz::setup(
    loss = torch::nn_cross_entropy_loss(),
    optimizer = torch::optim_adam,
    metrics = list(
      luz::luz_metric_accuracy()
    )
  ) |>
  luz::set_hparams(
    kernel_size = 5,
    in_channels = 1,
    out_channels = 10,
    num_hidden = 4,
    hidden_channels = 32
  ) |>
  luz::set_opt_hparams(lr = 1e-2, weight_decay = 1e-4) |>
  luz::fit(train_dl, epochs = 10, valid_data = test_dl)

Train metrics: Loss: 0.0498 - Acc: 0.9843
Valid metrics: Loss: 3.2445 - Acc: 0.4479

Unsurprisingly, accuracy on the test set is not that great.

Next, we train the group-equivariant version.

fitted <- GroupEquivariantCNN |>
  luz::setup(
    loss = torch::nn_cross_entropy_loss(),
    optimizer = torch::optim_adam,
    metrics = list(
      luz::luz_metric_accuracy()
    )
  ) |>
  luz::set_hparams(
    group = CyclicGroup(order = 4),
    kernel_size = 5,
    in_channels = 1,
    out_channels = 10,
    num_hidden = 4,
    hidden_channels = 16
  ) |>
  luz::set_opt_hparams(lr = 1e-2, weight_decay = 1e-4) |>
  luz::fit(train_dl, epochs = 10, valid_data = test_dl)

Train metrics: Loss: 0.1102 - Acc: 0.9667
Valid metrics: Loss: 0.4969 - Acc: 0.8549
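
Putting the numbers reported for the two runs side by side makes the comparison explicit. This is plain arithmetic on the metrics printed above; the data frame and its column names are just for illustration.

```r
results <- data.frame(
  model = c("default_cnn", "GroupEquivariantCNN"),
  train_acc = c(0.9843, 0.9667),
  test_acc = c(0.4479, 0.8549)
)

# Gap between accuracy on the training set and on the rotated test set.
results$gap <- round(results$train_acc - results$test_acc, 4)
results
```

The gap shrinks from about 0.54 for the standard CNN to about 0.11 for the group-equivariant one.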

For the group-equivariant CNN, accuracies on test and training sets are a lot closer. That is a nice result! Let’s wrap up today’s exploit by resuming a thought from the first, more high-level post.

A problem

Going back to the augmented test set, or rather, the samples of digits displayed, we notice a problem. In row two, column four, there is a digit that “under normal circumstances” should be a 9, but, most probably, is an upside-down 6. (To a human, what suggests this is the squiggle-like thing that seems to be found more often with sixes than with nines.) However, you could ask: does this have to be a problem? Maybe the network just needs to learn the subtleties, the kinds of things a human would spot?

The way I view it, it all depends on the context: What really should be accomplished, and how an application is going to be used. With digits on a letter, I’d see no reason why a single digit should appear upside-down; accordingly, full rotation equivariance would be counter-productive. In a nutshell, we arrive at the same canonical imperative advocates of fair, just machine learning keep reminding us of:

Always think of the way an application is going to be used!

In our case, though, there is another aspect to this, a technical one. gcnn::GroupEquivariantCNN() is a simple wrapper, in that its layers all make use of the same symmetry group. In principle, there is no need to do this. With more coding effort, different groups can be used depending on a layer’s position in the feature-detection hierarchy.

Here, let me finally tell you why I chose the goat picture. The goat is seen through a red-and-white fence, a pattern (slightly rotated, due to the viewing angle) made up of squares (or edges, if you like). Now, for such a fence, types of rotation equivariance such as that encoded by \(C_4\) make a lot of sense. The goat itself, though, we’d rather not have look up to the sky, the way I illustrated \(C_4\) action before. Thus, what we’d do in a real-world image-classification task is use rather flexible layers at the bottom, and increasingly restrained layers at the top of the hierarchy.

Thanks for reading!

Photograph by Marjan Blan | @marjanblan on Unsplash
