Samsung says Galaxy Z Fold 6 paint is peeling due to unofficial chargers

What you need to know

- Some Galaxy phones are experiencing paint peeling, primarily due to third-party chargers with poor grounding, according to Samsung.

- Users on Reddit are reporting paint issues on the Galaxy Z Fold 6, with the peeling revealing the metal beneath.

- Using an EMS massager with the device can also cause paint problems, hinting at issues with how power flow is managed.

Samsung says some third-party chargers are causing the paint on some Galaxy phones to peel, and it blames poor grounding in those chargers for the damage.

A wave of online reports, primarily on Reddit, claims that some users are noticing premature paint peeling on Galaxy Z Fold 6 units, revealing the metal beneath, a disappointing flaw for such an expensive device.

On a support page, Samsung says that using a high-speed third-party charger with bad grounding can cause leakage current. This can interfere with the anodized coating and lead to minor paint peeling.

Basically, a non-genuine charger interferes with the paint’s protective coating, weakening its adhesion and causing peeling. Samsung says this mainly happens with low-quality chargers and should not be a problem with Qi-standard ones.

However, Samsung’s mention of the Qi standard, which covers wireless charging, seems odd, since the problem is with wired charging.

Samsung’s fix is to suggest users charge their devices in a specific orientation to reduce the risk of damage.

More to the point, Samsung wants to nudge users to stick with genuine Samsung chargers, adding another cost on top of the already hefty price of the phone.

Intriguingly, Samsung adds that using an EMS massager with the device can cause similar issues, hinting that the problem might lie in how the device handles certain power flow setups.

Still, Samsung says reputable third-party chargers that follow industry standards are usually safe. But the South Korean tech giant warns against counterfeit or low-quality chargers, which can damage the device by causing electrical leakage that corrodes its metal parts.

This issue is likely to raise doubts about Samsung’s choice to leave the charger out of the box for the Galaxy Z Fold 6 and other devices, which often pushes users toward cheaper third-party options.

Anker Prime Charger (250W, 6 ports) review: Full charging control

At a glance

Expert’s Rating

Pros

- 6 ports

- 140W PD 3.1 charging

- Custom port control modes

- Visual charging display

- Clock Mode

Cons

- Clock Mode lacks alarm clock options

The 6-port 250W Anker Prime Charger offers a tidy way to charge multiple devices, plus customizable options to fine-tune which devices get charged fastest and an easy-to-read display showing how much power is going to each device.

All electronic devices require a charger, either built-in or via an external power supply, but most often they use a wall or desktop charger. Wall chargers plug directly into a power socket, and you connect them to your device using a charging cable (usually USB-C). Desktop chargers connect to the wall socket via a standard power plug and cable and are more convenient when charging multiple devices in the same place.

While there are many great wall chargers with multiple charging ports, if you want more than three or four ports you’ll need a desktop charger, such as the Anker Prime Charger. A desktop charger means less cable clutter on the desk, keeping everything charged using just one wall power socket.

Anker Prime Charger: Design

The Anker Prime Charger does resemble a bedside alarm clock, so it’s appropriate for it to have a Clock Mode. Sadly, the charger’s Clock Mode only shows the time and date and lacks any alarm functions.

Built using the latest GaN technology, the device is remarkably compact for something with so many ports and features. It measures 4.18 x 1.58 x 3.64 inches (10.6 x 4 x 9.3cm) and weighs 22.58oz (640g).

Anker Prime Charger: Power display

The Anker Prime Charger has a large 2.26-inch LCD on the front that gives you visual feedback on the charging status and speed of each USB-C port. When you aren’t charging or don’t need to know the precise details, you can set the charger’s display to show a clock in three different formats, with date and time on show. A Wi-Fi connection is necessary for Clock Mode to work.

Anker Prime Charger: Ports

The Anker Prime Charger boasts six charging ports: four USB-C conveniently placed on the front and two old-school USB-A on one side.

The first USB-C port (C1) can output 140W, which makes it perfect for the 16-inch MacBook Pro, which can be fast-charged (to 50 percent full battery in just 25 minutes) at this speed when using Apple’s MagSafe 3 cable. It is also fine for charging any other lower-powered device, even your AirPods charging case, but it is best suited to laptops that require a PD 3.1 (up to 240W) power source. The other three USB-C ports (C2, C3, and C4) are rated at 100W charging potential.

The two USB-A ports can each output 22.5W.

Maximum power output is 250W, so you could fast-charge a 140W laptop and still have over 100W for another laptop and a smaller device.

Anker Prime Charger: Customization

You can customize charging priorities and energy output using the unique twist smart control dial next to the USB-A ports. You rotate the knob to move the cursor and press it to confirm your selection.

In Port Priority Mode, you can manually set power priority for one or two ports as you require. When setting C1 as the Priority Port you can use all six ports in this configuration: 100W, 45W, 45W, 45W, and 15W shared between the two USB-A ports. Other power configurations could include 70W, 70W, 45W, 30W, and 15W shared, or 65W, 20W, 20W, 20W, and 15W shared.

There’s also a Dual-Laptop Mode to make sure that the laptops are at the front of the charging queue, and a Low Current Mode to preserve the battery life of phones and low-power devices.

Or you can leave it in the default AI Power Mode, where the charger itself works out the optimal prioritization of output by port, identifying device power requirements (high, medium, or low) and adjusting power distribution accordingly for optimal charging efficiency.

You can also adjust the screen brightness using the dial.

If you prefer, you can use the custom controls via the Anker app on your phone or tablet rather than twiddle the dial.

Anker Prime Charger: Price

The Anker Prime Charger (250W, 6 Ports, GaNPrime) is priced at $169.99 in the U.S., £169.99 in the U.K., and €159,99 in the E.U. There are cheaper desktop chargers but none with 140W PD 3.1 and such precise port controls.

Anker Prime Charger: Competition

We have tested the best MacBook chargers, including an excellent selection of desktop chargers. None matches the level of control over port prioritization offered by the Anker Prime 250W, and if that appeals to you we highly recommend this charger.

Other 140W-capable PD 3.1 desktop chargers include the similarly priced Ugreen Nexode 300W GaN Desktop Charger, which has one fewer port (four USB-C and one USB-A) but a higher total power output: 300W compared to Anker’s 250W.

If you need more than four USB-C ports and don’t give a fig about USB-A, consider the Satechi 200W USB-C 6-port PD GaN Charger, which boasts six USB-C ports but has a lesser 200W maximum power output.

If you don’t need the level of customization or visual feedback, Anker’s own Prime Charger (200W, 6 Ports, GaN) has the same range of ports but no prioritization, no display, and 50W less maximum power output. At $79.99 / £79.99, though, it is significantly cheaper.

Should you buy the Anker Prime Charger?

If you crave precise optimization of your charging ports, this stylish Anker Prime desktop charger gives you a lot of control and visual feedback, plus six top-end charging ports and a healthy 250W total power output potential.

Researcher sued for sharing data stolen by ransomware with media

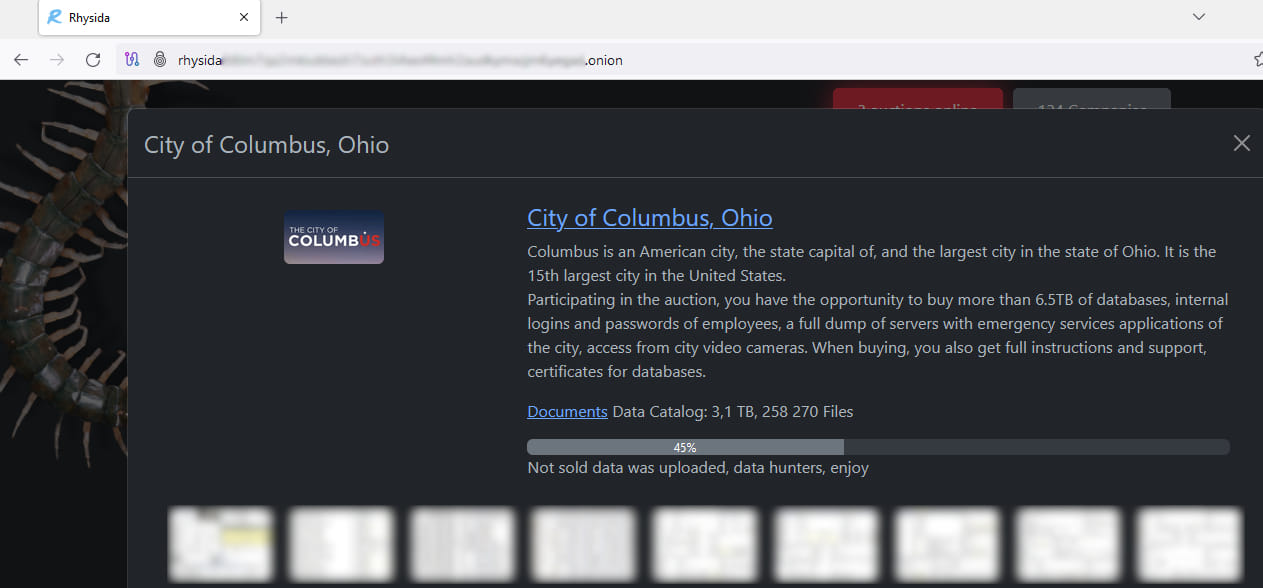

The City of Columbus, Ohio, has filed a lawsuit against security researcher David Leroy Ross, aka Connor Goodwolf, accusing him of illegally downloading and disseminating data stolen from the City’s IT network and leaked by the Rhysida ransomware gang.

Columbus, the capital and most populous (2,140,000) city in Ohio, suffered a ransomware attack on July 18, 2024, which caused various service outages and unavailability of email and IT connectivity between public agencies.

At the end of July, the City’s administration announced that no systems had been encrypted, but that it was looking into the possibility that sensitive data might have been stolen in the attack.

On the same day, the Rhysida ransomware gang claimed responsibility for the attack, alleging it stole 6.5 TB of databases, including employee credentials, server dumps, city video camera feeds, and other sensitive information.

On August 8, after failing to extort the City, the threat actors published 45% of the stolen data, comprising 260,000 files (3.1 TB), exposing much of what they had previously claimed to be holding.

According to the City’s complaint, the exposed dataset includes two backup databases containing large amounts of data gathered by local prosecutors and the police force, dating back to at least 2015, and containing, among other things, the personal information of undercover officers.

On the day of the data leak on Rhysida’s extortion portal on the dark web, Columbus Mayor Andrew Ginther stated on local media that the disclosed information was neither valuable nor usable and that the attack had been successfully thwarted.

A few hours later, Goodwolf disputed the Mayor’s claim that no sensitive or valuable data was exposed by sharing information with the media about what the leaked dataset contained.

In response, on August 12, Mayor Ginther claimed that the exposed data was “encrypted or corrupted,” so the leak was unusable and should be of no concern to the public.

However, Goodwolf disputed these claims, sharing samples of the data with the media to illustrate that it contained unencrypted personal data of people in Columbus.

“Among the details laid bare were names from domestic violence cases, and Social Security numbers for police officers and crime victims alike. The dump not only impacts city employees, but also revealed personal information for residents and visitors going back years,” reported NBC4.

Silencing the researcher

The lawsuit filed by Columbus alleges that Goodwolf’s conduct in spreading stolen data was both negligent and illegal, resulting in great concern in the community.

Moreover, the City alleges that the leaked data is not readily available to anybody, as Goodwolf stated, because it was published on a platform with restricted access that requires knowledge to locate.

“Defendant’s actions of downloading from the dark web and spreading this stolen, sensitive information at a local level has resulted in widespread concern throughout the Central Ohio region,” reads the complaint.

“Only individuals willing to navigate and interact with the criminal element on the dark web, who also have the computer expertise and tools necessary to download data from the dark web, would be able to do so.”

The complaint notes that Goodwolf’s sharing of law enforcement data and his alleged plans to create a website for people to see if their data was exposed interfere with police investigations.

The City seeks a temporary restraining order, preliminary injunction, and permanent injunction against Goodwolf to prevent further dissemination of stolen data. Additionally, the City is seeking damages exceeding $25,000.

In a press conference about the lawsuit, City Attorney Zach Klein said that the lawsuit is not about suppressing free speech, as Goodwolf can still talk about the leak, but is aimed at stopping him from downloading and disseminating the stolen information.

A Guide to DynamoDB Secondary Indexes: GSI, LSI, Elasticsearch and Rockset – how to choose the right indexing strategy

Many development teams turn to DynamoDB for building event-driven architectures and user-friendly, performant applications at scale. As an operational database, DynamoDB is optimized for real-time transactions even when deployed across multiple geographic regions. However, it does not provide strong performance for search and analytics access patterns.

Search and Analytics on DynamoDB

While NoSQL databases like DynamoDB generally have excellent scaling characteristics, they support only a limited set of operations that are focused on online transaction processing. This makes it difficult to search, filter, aggregate and join data without leaning heavily on efficient indexing strategies.

DynamoDB stores data under the hood by partitioning it over a large number of nodes based on a user-specified partition key field present in each item. This user-specified partition key can be optionally combined with a sort key to represent a primary key. The primary key acts as an index, making query operations inexpensive. A query operation can do equality comparisons (=) on the partition key and comparative operations (>, <, =, BETWEEN) on the sort key if specified.
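As a rough illustration of the query shape described above, the following Python sketch builds the request parameters for a key-based query: equality on the partition key plus an optional BETWEEN range on the sort key. The table name `Orders` and the attribute names `customer_id` and `order_date` are hypothetical, and the sketch assumes string-typed keys. It only constructs the parameter dictionary and does not contact AWS.

```python
def build_query_params(table, pk_name, pk_value,
                       sk_name=None, sk_low=None, sk_high=None):
    """Build keyword arguments for a DynamoDB Query (low-level client format)."""
    # A Query always needs an equality condition on the partition key.
    condition = "#pk = :pk"
    names = {"#pk": pk_name}
    values = {":pk": {"S": pk_value}}  # assumes a string-typed key

    # Optionally narrow the result with a range condition on the sort key.
    if sk_name is not None:
        condition += " AND #sk BETWEEN :low AND :high"
        names["#sk"] = sk_name
        values[":low"] = {"S": sk_low}
        values[":high"] = {"S": sk_high}

    return {
        "TableName": table,
        "KeyConditionExpression": condition,
        "ExpressionAttributeNames": names,
        "ExpressionAttributeValues": values,
    }


# Hypothetical table and attribute names, for illustration only.
params = build_query_params(
    "Orders", "customer_id", "c-42",
    sk_name="order_date", sk_low="2024-01-01", sk_high="2024-03-31",
)
print(params["KeyConditionExpression"])
```

With boto3 installed and credentials configured, a dictionary like this could be passed as `boto3.client("dynamodb").query(**params)`.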

Performing analytical queries not covered by the above scheme requires the use of a scan operation, which is typically executed by scanning over the entire DynamoDB table in parallel. These scans can be slow and expensive in terms of read throughput because they require a full read of the entire table. Scans also tend to slow down when the table size grows, as there is more data to scan to produce results. If we want to support analytical queries without encountering prohibitive scan costs, we can leverage secondary indexes, which we will discuss next.
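To get a feel for why scan cost tracks table size, here is a back-of-the-envelope Python estimate. It assumes the standard DynamoDB read model, in which one read unit covers up to 4 KB for a strongly consistent read and eventually consistent reads cost half as much. It ignores per-page rounding, so treat the result as an approximation rather than a billing figure. The table and result sizes are made up.

```python
import math

READ_UNIT_BYTES = 4096  # one read unit covers up to 4 KB (strongly consistent)


def scan_read_units(bytes_read, strongly_consistent=False):
    """Roughly estimate read units consumed when reading `bytes_read` bytes."""
    units = math.ceil(bytes_read / READ_UNIT_BYTES)
    # Eventually consistent reads are charged at half the rate.
    return units if strongly_consistent else units / 2


# Hypothetical sizes: a 10 GiB table versus a query touching 100 KB.
full_scan = scan_read_units(10 * 1024**3, strongly_consistent=True)
key_query = scan_read_units(100 * 1024, strongly_consistent=True)
print(full_scan, key_query)
```

Because the estimate is linear in the bytes read, doubling the table doubles the cost of every full scan, while a key-based query pays only for the items it returns.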

Indexing in DynamoDB

In DynamoDB, secondary indexes are often used to improve application performance by indexing fields that are queried frequently. Query operations on secondary indexes can also be used to power specific features through analytic queries that have clearly defined requirements.

Secondary indexes consist of creating partition keys and optional sort keys over fields that we want to query. There are two types of secondary indexes:

- Local secondary indexes (LSIs): LSIs extend the hash and range key attributes for a single partition.

- Global secondary indexes (GSIs): GSIs are indexes that are applied to an entire table instead of a single partition.

However, as Nike discovered, overusing GSIs in DynamoDB can be expensive. Analytics in DynamoDB, unless they are used only for very simple point lookups or small range scans, can result in overuse of secondary indexes and high costs.

The costs for provisioned capacity when using indexes can add up quickly because all updates to the base table have to be made in the corresponding GSIs as well. In fact, AWS advises that the provisioned write capacity for a global secondary index should be equal to or greater than the write capacity of the base table to avoid throttling writes to the base table and crippling the application. The cost of provisioned write capacity grows linearly with the number of GSIs configured, making it cost prohibitive to use many GSIs to support many access patterns.
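The linear growth can be made concrete with a small Python calculation. It assumes each GSI is provisioned at exactly the base table's write capacity, which is the minimum the guidance above implies. The 1,000 write units used here are an arbitrary example figure.

```python
def total_provisioned_wcu(base_wcu, gsi_count):
    """Write capacity for the base table plus one equal allocation per GSI."""
    return base_wcu * (1 + gsi_count)


# Example: a base table provisioned at 1,000 write units (hypothetical).
for gsi_count in range(6):
    print(gsi_count, total_provisioned_wcu(1000, gsi_count))
```

Under this assumption, five GSIs mean paying for six times the write capacity of the base table alone.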

DynamoDB is also not well-designed to index data in nested structures, including arrays and objects. Before indexing the data, users will need to denormalize the data, flattening the nested objects and arrays. This could greatly increase the number of writes and associated costs.
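A minimal Python sketch of the flattening step might look like the following. The dotted-path naming scheme and the sample `order` item are illustrative assumptions, not a DynamoDB convention. Each flattened path becomes a top-level attribute that a secondary index could then be defined on.

```python
def flatten(item, prefix=""):
    """Flatten nested dicts and lists into one level with dotted keys."""
    flat = {}
    # Lists are flattened by position, dicts by key.
    pairs = item.items() if isinstance(item, dict) else enumerate(item)
    for key, value in pairs:
        path = f"{prefix}.{key}" if prefix else str(key)
        if isinstance(value, (dict, list)):
            flat.update(flatten(value, path))
        else:
            flat[path] = value
    return flat


# Hypothetical nested item, for illustration only.
order = {"id": "o-1", "customer": {"name": "Ada", "tags": ["vip", "eu"]}}
flat_order = flatten(order)
print(flat_order)
```

Note that every array element turns into its own attribute, which is one reason the number of writes can grow quickly. Empty objects and arrays simply disappear in this sketch.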

For a more detailed examination of using DynamoDB secondary indexes for analytics, see our blog Secondary Indexes For Analytics On DynamoDB.

The bottom line is that for analytical use cases, you can gain significant performance and cost advantages by syncing the DynamoDB table with a different tool or service that acts as an external secondary index for running complex analytics efficiently.

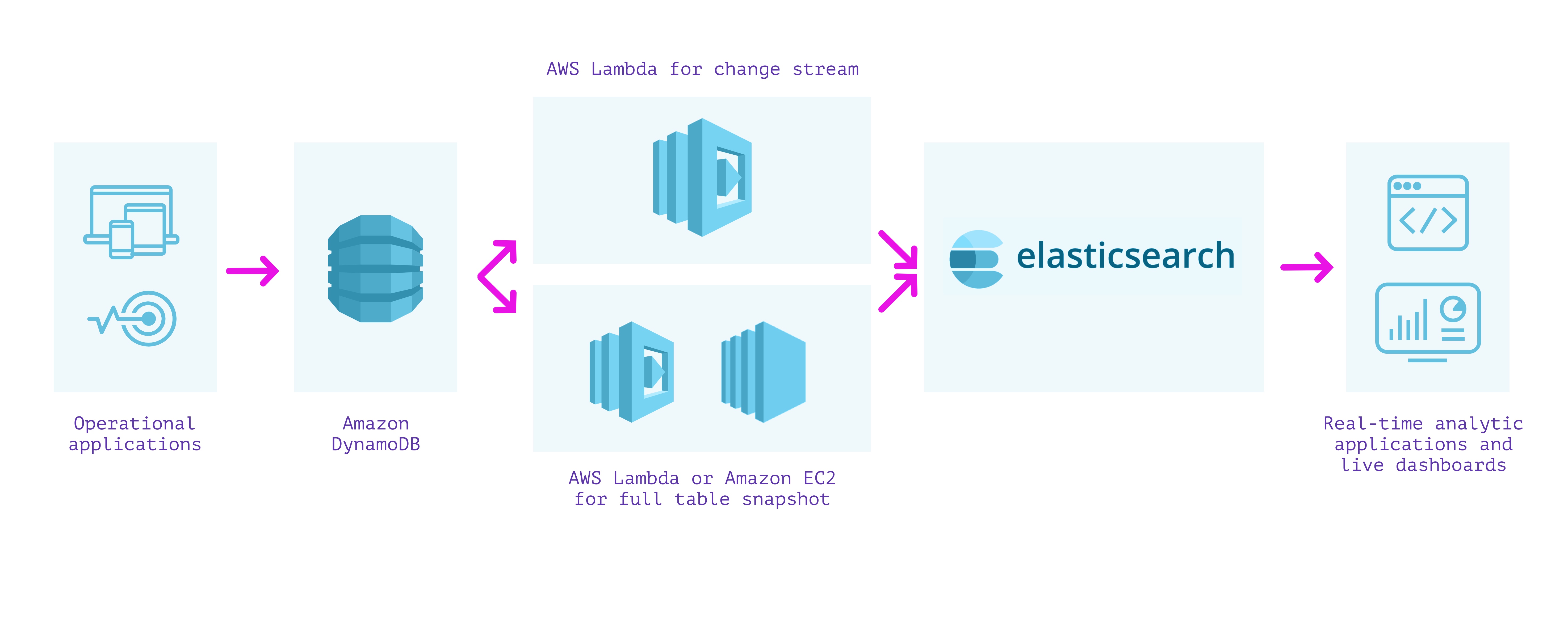

DynamoDB + Elasticsearch

One approach to building a secondary index over our data is to use DynamoDB with Elasticsearch. Cloud-based Elasticsearch, such as Elastic Cloud or Amazon OpenSearch Service, can be used to provision and configure nodes according to the size of the indexes, replication, and other requirements. A managed cluster requires some operations to upgrade, secure, and keep performant, but less so than running it entirely by yourself on EC2 instances.

Because the approach using the Logstash Plugin for Amazon DynamoDB is unsupported and rather difficult to set up, we can instead stream writes from DynamoDB into Elasticsearch using DynamoDB Streams and an AWS Lambda function. This approach requires us to perform two separate steps:

- We first create a Lambda function that is invoked on the DynamoDB stream to post each update as it occurs in DynamoDB into Elasticsearch.

- We then create a Lambda function (or an EC2 instance running a script if it will take longer than the Lambda execution timeout) to post all the existing contents of DynamoDB into Elasticsearch.

We must write and wire up both of these Lambda functions with the correct permissions in order to ensure that we do not miss any writes into our tables. When they are set up along with required monitoring, we can receive documents in Elasticsearch from DynamoDB and can use Elasticsearch to run analytical queries on the data.
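The first of the two steps can be sketched in Python as below. This is not a complete Lambda function: it only translates a DynamoDB Streams event into the newline-delimited body that the Elasticsearch bulk API accepts, and leaves out authentication, the HTTP call, retries, and error handling. It assumes the stream is configured to include new item images and handles only a subset of DynamoDB attribute types. The index name `orders` and the way the document ID is derived from the key are illustrative choices.

```python
import json


def from_dynamodb(value):
    """Convert one DynamoDB-typed attribute value into a plain Python value."""
    (type_tag, inner), = value.items()
    if type_tag == "S":
        return inner
    if type_tag == "N":
        try:
            return int(inner)
        except ValueError:
            return float(inner)
    if type_tag == "BOOL":
        return inner
    if type_tag == "NULL":
        return None
    if type_tag == "M":
        return {k: from_dynamodb(v) for k, v in inner.items()}
    if type_tag == "L":
        return [from_dynamodb(v) for v in inner]
    raise ValueError(f"unsupported DynamoDB type: {type_tag}")


def to_bulk_body(event, index_name):
    """Translate stream records into an Elasticsearch bulk request body."""
    lines = []
    for record in event["Records"]:
        change = record["dynamodb"]
        keys = from_dynamodb({"M": change["Keys"]})
        # Illustrative choice: join key values to form the document ID.
        doc_id = "|".join(str(keys[name]) for name in sorted(keys))
        if record["eventName"] == "REMOVE":
            lines.append(json.dumps(
                {"delete": {"_index": index_name, "_id": doc_id}}))
        else:  # INSERT or MODIFY: index the full new image
            lines.append(json.dumps(
                {"index": {"_index": index_name, "_id": doc_id}}))
            lines.append(json.dumps(from_dynamodb({"M": change["NewImage"]})))
    return "\n".join(lines) + "\n"


def handler(event, context):
    """Lambda entry point; sending the body to the cluster is omitted here."""
    body = to_bulk_body(event, "orders")  # hypothetical index name
    return {"bulk_lines": len(body.splitlines())}
```

Reindexing the whole new image on every MODIFY event mirrors how Elasticsearch treats updates. The one-off backfill of existing items would need a separate job that scans the table and reuses `to_bulk_body`-style conversion.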

The advantage of this approach is that Elasticsearch supports full-text indexing and several types of analytical queries. Elasticsearch supports clients in various languages and tools like Kibana for visualization that can help quickly build dashboards. When a cluster is configured correctly, query latencies can be tuned for fast analytical queries over data flowing into Elasticsearch.

Disadvantages include that the setup and maintenance cost of the solution can be high. Even managed Elasticsearch requires dealing with replication, resharding, index growth, and performance tuning of the underlying instances.

Elasticsearch has a tightly coupled architecture that does not separate compute and storage. This means resources are often overprovisioned because they cannot be independently scaled. In addition, multiple workloads, such as reads and writes, will contend for the same compute resources.

Elasticsearch also cannot handle updates efficiently. Updating any field will trigger a reindexing of the entire document. Elasticsearch documents are immutable, so any update requires a new document to be indexed and the old version marked deleted. This results in additional compute and I/O expended to reindex even the unchanged fields and to write entire documents upon update.

Because Lambdas fire when they see an update in the DynamoDB stream, they can have latency spikes due to cold starts. The setup requires metrics and monitoring to ensure that it is correctly processing events from the DynamoDB stream and able to write into Elasticsearch.

Functionally, in terms of analytical queries, Elasticsearch lacks support for joins, which are useful for complex analytical queries that involve more than one index. Elasticsearch users often have to denormalize data, perform application-side joins, or use nested objects or parent-child relationships to get around this limitation.
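To show what an application-side join involves, here is a small Python sketch of an in-memory hash join over the results of two separate index queries. The `orders` and `customers` rows and the `customer_id` field are made-up sample data standing in for hits already fetched from two indexes.

```python
from collections import defaultdict


def hash_join(left, right, key):
    """Inner-join two lists of dicts on `key`, entirely in application code."""
    # Build a lookup table over one side, then probe it with the other.
    by_key = defaultdict(list)
    for row in right:
        by_key[row[key]].append(row)
    return [{**l, **r} for l in left for r in by_key.get(l[key], [])]


# Hypothetical hits from two separate indexes.
orders = [
    {"customer_id": 1, "total": 30},
    {"customer_id": 2, "total": 5},
    {"customer_id": 3, "total": 9},
]
customers = [
    {"customer_id": 1, "name": "Ada"},
    {"customer_id": 2, "name": "Lin"},
]
joined = hash_join(orders, customers, "customer_id")
print(joined)
```

The application has to pull both result sets over the network and hold them in memory before joining, which is the overhead the paragraph above alludes to.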

Advantages

- Full-text search support

- Support for several types of analytical queries

- Can work over the latest data in DynamoDB

Disadvantages

- Requires management and monitoring of infrastructure for ingesting, indexing, replication, and sharding

- Tightly coupled architecture results in resource overprovisioning and compute contention

- Inefficient updates

- Requires a separate system to ensure data integrity and consistency between DynamoDB and Elasticsearch

- No support for joins between different indexes

This approach can work well when implementing full-text search over the data in DynamoDB and dashboards using Kibana. However, the operations required to tune and maintain an Elasticsearch cluster in production, its inefficient use of resources and lack of join capabilities can be challenging.

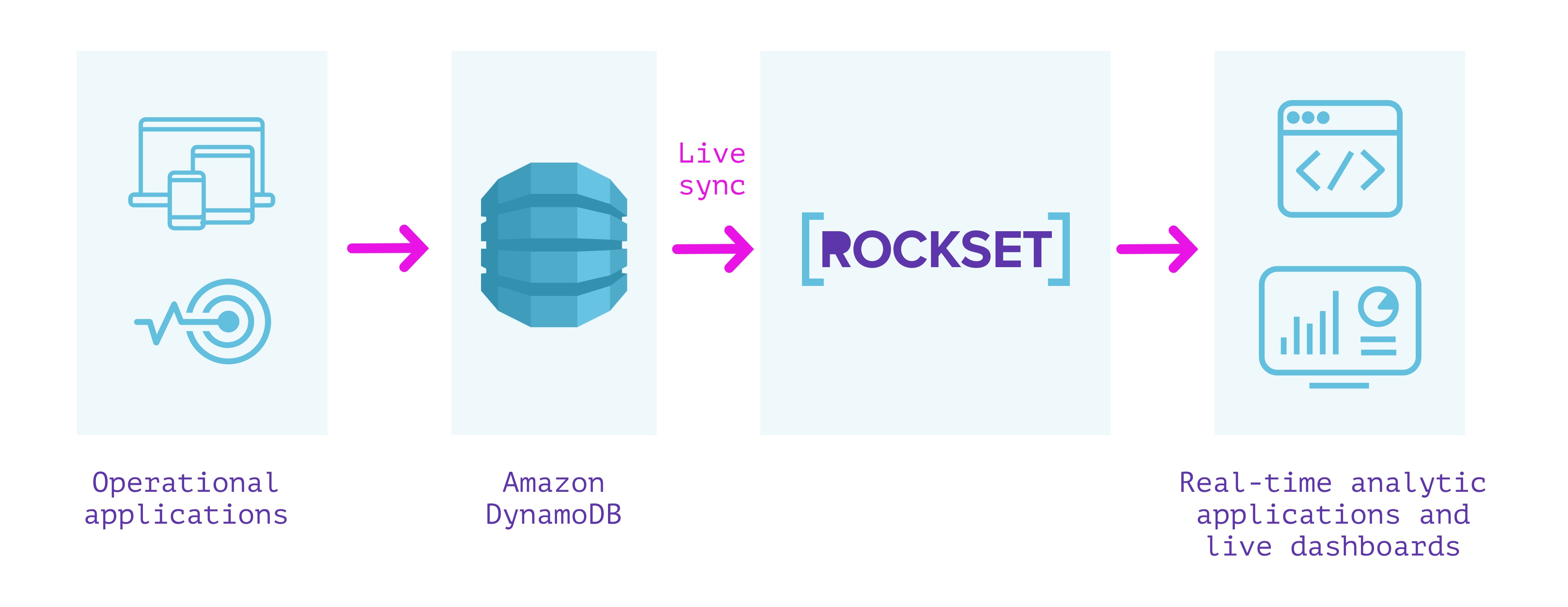

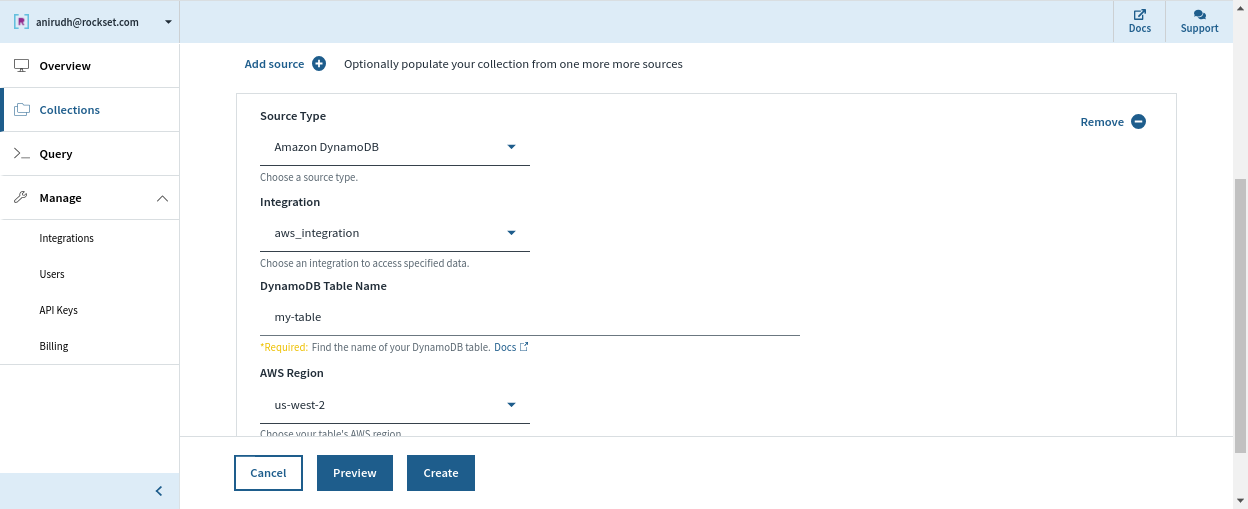

DynamoDB + Rockset

Rockset is a fully managed search and analytics database built primarily to support real-time applications with high QPS requirements. It is often used as an external secondary index for data from OLTP databases.

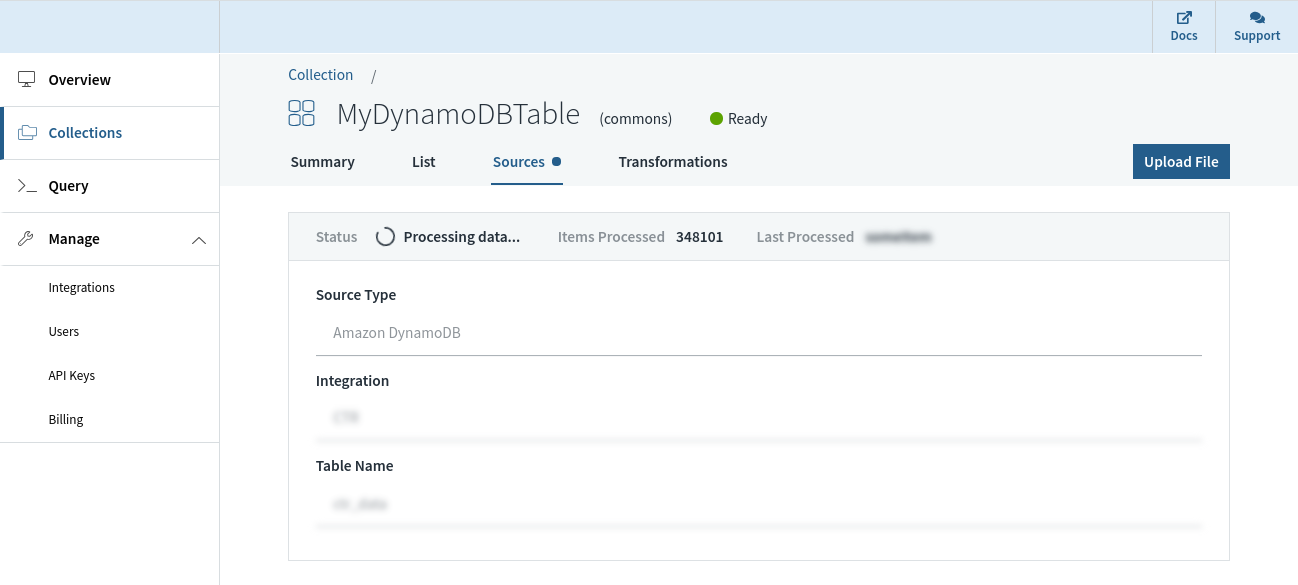

Rockset has a built-in connector with DynamoDB that can be used to keep data in sync between DynamoDB and Rockset. We can specify the DynamoDB table we want to sync contents from and a Rockset collection that indexes the table. Rockset indexes the contents of the DynamoDB table in a full snapshot and then syncs new changes as they occur. The contents of the Rockset collection are always in sync with the DynamoDB source, no more than a few seconds apart in steady state.

Rockset manages the data integrity and consistency between the DynamoDB table and the Rockset collection automatically by monitoring the state of the stream and providing visibility into the streaming changes from DynamoDB.

Without a schema definition, a Rockset collection can automatically adapt when fields are added/removed, or when the structure/type of the data itself changes in DynamoDB. This is made possible by strong dynamic typing and smart schemas that obviate the need for any additional ETL.

The Rockset collection we sourced from DynamoDB supports SQL for querying and can be easily used by developers without having to learn a domain-specific language. It can also be used to serve queries to applications over a REST API or using client libraries in several programming languages. The superset of ANSI SQL that Rockset supports can work natively on deeply nested JSON arrays and objects, and leverage indexes that are automatically built over all fields, to get millisecond latencies on even complex analytical queries.

Rockset has pioneered compute-compute separation, which allows isolation of workloads in separate compute units while sharing the same underlying real-time data. This gives users greater resource efficiency when supporting simultaneous ingestion and queries or multiple applications on the same data set.

In addition, Rockset takes care of security, encryption of data, and role-based access control for managing access to it. Rockset users can avoid the need for ETL by leveraging ingest transformations we can set up in Rockset to modify the data as it arrives into a collection. Users can also optionally manage the lifecycle of the data by setting up retention policies to automatically purge older data. Both data ingestion and query serving are automatically managed, which lets us focus on building and deploying live dashboards and applications while removing the need for infrastructure management and operations.

Especially relevant in relation to syncing with DynamoDB, Rockset supports in-place field-level updates, so as to avoid costly reindexing. Compare Rockset and Elasticsearch in terms of ingestion, querying and efficiency to choose the right tool for the job.

Abstract

- Built to deliver high QPS and serve real-time applications

- Completely serverless. No operations or provisioning of infrastructure or database required

- Compute-compute separation for predictable performance and efficient resource utilization

- Live sync between DynamoDB and the Rockset collection, so that they are never more than a few seconds apart

- Monitoring to ensure consistency between DynamoDB and Rockset

- Automatic indexes built over the data enabling low-latency queries

- In-place updates that avoid expensive reindexing and lower data latency

- Joins with data from other sources such as Amazon Kinesis, Apache Kafka, Amazon S3, etc.

We can use Rockset for implementing real-time analytics over the data in DynamoDB without any operational, scaling, or maintenance concerns. This can significantly speed up the development of real-time applications. If you would like to build your application on DynamoDB data using Rockset, you can get started for free here.

Researchers at Netskope last month observed a 2000-fold increase in traffic to