1. Introduction

The analysis and engineering neighborhood at giant have been constantly iterating upon Massive Language Fashions (LLMs) as a way to make them extra educated, general-purpose, and able to becoming into more and more advanced workflows. Over the previous few years, LLMs have progressed from text-only fashions to having multi-modal capabilities; now, we’re more and more seeing a development towards LLMs as a part of compound AI methods. This paradigm envisions an LLM as an integral half of a bigger engineering setting, versus an end-to-end pipeline in and of itself. At Databricks, now we have discovered that this compound AI system mannequin is extra aligned with real-world purposes.

To ensure that an LLM to function as half of a bigger system, it must have instrument use capabilities. Such capabilities allow an LLM to obtain inputs from and produce outputs to exterior sources. At the moment, probably the most generally used instrument is operate calling, or the power to work together with exterior code similar to APIs or customized features. Including this functionality transforms LLMs from remoted textual content processors into integral components of bigger, extra advanced AI methods. Nonetheless, operate calling wants an LLM that may do three issues: interpret person requests precisely, resolve if the request wants exterior code, and assemble a accurately formatted operate name with the proper arguments.

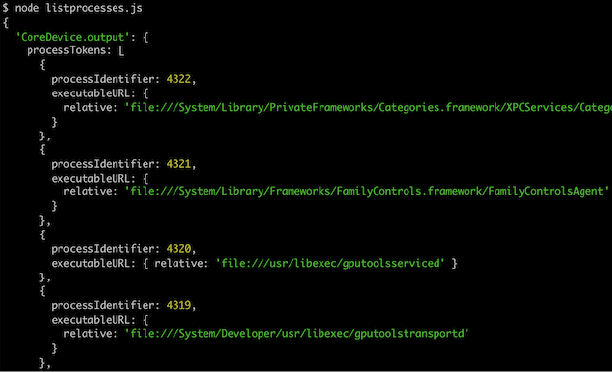

Take into account the next easy instance:

System: You are an AI Assistant who can use operate calls to assist reply the person's queries. You may have entry to a number of climate-related features: get_weather(metropolis, state_abbr), get_timezone(latitude, longitude), get_nearest_station_id...

Consumer: What's the climate in San Francisco?Provided that the LLM has been made conscious of a number of features utilizing the system immediate, it first wants to grasp what the person needs. On this case, the query is pretty simple. Now, it must examine if it wants exterior features and if any of the accessible features are related. On this case, the get_weather() operate must be used. Even when the LLM has gotten this far, it now must plug within the right arguments. On this case, it’s clear that metropolis=”San Francisco” and state_abbr=”CA”. Subsequently, it must generate the next output:

Assistant: get_weather("San Francisco", "CA")Now, the compound system constructed on high of the LLM can use this output to make the suitable operate name, get the output, and both return it to the person or feed it again into the LLM to format it properly.

From the above instance, we will see that even a easy question involving operate calling requires the LLM to get many issues proper. However which LLM to make use of? Do all LLMs possess this functionality? Earlier than we will resolve that, we have to first perceive tips on how to measure it.

On this weblog publish, we’ll discover operate calling in additional element, beginning with what it’s and tips on how to consider it. We’ll give attention to two distinguished evals: the Berkeley Operate Calling Leaderboard (BFCL) and the Nexus Operate Calling Leaderboard (NFCL). We’ll focus on the precise features of operate calling that these evals measure in addition to their strengths and limitations. As we’ll see, it’s sadly not a one-size-fits-all technique. To get a holistic image of a mannequin’s capability to carry out operate calling, we have to think about a number of elements and analysis strategies.

We’ll share what we have discovered from working these evaluations and focus on the way it may also help us select the proper mannequin for sure duties. We additionally define methods for bettering an LLM’s operate calling and gear use talents. Particularly, we show that the efficiency of smaller, open supply fashions like DBRX and LLama-3-70b will be elevated by a mix of cautious prompting and parsing methods, bringing them nearer to and even surpassing GPT-4 high quality in sure features.

What’s operate calling, and why is it helpful?

Operate calling is a instrument that enables an LLM to work together with exterior methods utilizing APIs and customized features. Observe that “instrument use” and “operate calling” are sometimes used interchangeably within the literature; operate calling was the primary kind of instrument launched and stays one of the popularly used instruments so far. On this weblog, we seek advice from operate calling as a selected kind of instrument use. To be able to use operate calling, the person first supplies the mannequin with a set of accessible features and their required arguments, usually described utilizing JSON schemas. This provides the mannequin the syntactical construction of the operate in addition to descriptions of every argument. When offered with a person question, the mannequin identifies which (if any) features are related. It then generates the proper operate name, full with the required arguments.

At Databricks, we have noticed two main enterprise use circumstances that leverage operate calling:

- Brokers and sophisticated multi-turn chatbots

- Batch inference function extraction

Brokers

There’s a rising curiosity in “agentic” capabilities for LLMs. Usually talking, an LLM Agent ought to have the ability to full a fancy process that will require a number of steps with minimal person intervention. In apply, operate calling is usually needed to finish a number of of those steps: as mentioned earlier, it’s the underlying functionality that allows an LLM to work together with present software program infrastructure similar to databases, e.g. by way of REST APIs.

Take into account the next state of affairs: You’re a giant enterprise with a considerable amount of monetary projection knowledge in a SQL database. With operate calling, now you can construct a chatbot that has learn entry to that knowledge. An inner person can ask the chatbot a fancy query like “What’s the projected income for our XYZ product on this fiscal 12 months primarily based on final 12 months’s tendencies?” and the chatbot can use operate calling to request monetary knowledge between two dates which it might probably then use to present an correct estimate. Right here’s an instance of what this may occasionally seem like:

Consumer: "What's the projected income for our XYZ product on this fiscal 12 months primarily based on final 12 months's tendencies?"

Chatbot: To reply this query, I'll have to retrieve some monetary knowledge. Let me examine our database.

[Function Call: get_financial_data]

Parameters:

- product: "XYZ"

- start_date: "2023-07-01" // Assuming final fiscal 12 months began on this date

- end_date: "2024-06-30" // Present fiscal 12 months finish

- columns: ["last_year_revenue", "growth_rate"]

# this might both be instantly executed by designing the chatbot as a compound AI

# system wrapping the mannequin, or manually executed by the person who returns the

# response

[Function Response]

{

"last_year_revenue": 10000000,

"growth_rate": 0.15,

}

Chatbot: Primarily based on the info I've retrieved, I can present you with a projection for XYZ's income this fiscal 12 months:

Final 12 months's income for XYZ was $10 million. The product has proven a progress price of 15% 12 months-over-12 months.

Projecting this development ahead, we will estimate that the overall income for XYZ this fiscal 12 months might be roughly $11.5 million. This projection takes into account the present progress price and the efficiency to date this 12 months.

Would you like me to break down this calculation additional or present any further details about the projection?Batched Function Extraction

Operate calling often refers back to the LLM’s capability to name a operate from user-provided APIs or features. However it additionally means the mannequin should output the operate name within the actual format outlined by the operate’s signature and outline. In apply, that is achieved by utilizing JSON as a illustration of the operate. This facet will be exploited to resolve a prevalent use case: extracting structured knowledge within the type of JSON objects from unstructured knowledge. We seek advice from this as “batched function extraction,” and discover that it’s pretty widespread for enterprises to leverage operate calling as a way to carry out this process. For instance, a authorized agency may use an LLM with function-calling capabilities to course of big collections of contracts to extract key clauses, determine potential dangers, and categorize every doc primarily based on its content material. Utilizing operate calling on this method permits this authorized agency to transform a considerable amount of knowledge into easy JSONs which might be straightforward to parse and achieve insights from.

2. Analysis Frameworks

The above use circumstances present that by bridging the hole between pure language understanding and sensible, real-world actions, operate calling considerably expands the potential purposes of LLMs in enterprise settings. Nonetheless, the query of which LLM to make use of nonetheless stays unanswered. Whereas one would count on most LLMs to be extraordinarily good at these duties, on nearer examination, we discover that they endure from widespread failure modes rendering them unreliable and troublesome to make use of, significantly in enterprise settings. Subsequently, like in all issues LLM, dependable evals are of paramount significance.

Regardless of the rising curiosity in operate calling (particularly from enterprise customers), present operate calling evals don’t at all times agree of their format or outcomes. Subsequently, evaluating operate calling correctly is non-trivial and requires combining a number of evals and extra importantly, understanding each’s strengths and weaknesses. For this weblog, we’ll give attention to easy, single-turn operate calling and leverage the two most common evals: Berkeley Operate Calling Leaderboard (BFCL) and Nexus Operate Calling Leaderboard (NFCL).

Berkeley Operate Calling Leaderboard

The Berkeley Operate Calling Leaderboard (BFCL) is a well-liked public function-calling eval that’s stored up-to-date with the newest mannequin releases. It’s created and maintained by the creators of Gorilla-openfunctions-v2, an OSS mannequin constructed for operate calling. Regardless of some limitations, BFCL is a superb analysis framework; a excessive rating on its leaderboard usually signifies sturdy function-calling capabilities. As described on this weblog, the eval consists of the next classes. (Observe that BFCL additionally incorporates check circumstances with REST APIs and in addition features in numerous languages. However the overwhelming majority of checks are in Python which is the subset that we think about.)

- Easy Operate incorporates the only format: the person supplies a single operate description, and the question solely requires that operate to be referred to as.

- A number of Operate is barely tougher, on condition that the person supplies 2-4 operate descriptions and the mannequin wants to pick out one of the best operate amongst them to invoke as a way to reply the question.

- Parallel Operate requires invoking a number of operate calls in parallel with one person question. Like Easy Operate, the LLM is given solely a single operate description.

- Parallel A number of Operate is the mix of Parallel and A number of. The mannequin is supplied with a number of operate descriptions, and every of them might should be invoked zero or a number of instances.

- Relevance Detection consists purely of eventualities the place not one of the supplied features are related, and the mannequin shouldn’t invoke any of them.

One can even view these classes from the lens of what expertise it calls for of the mannequin:

- Easy merely wants the mannequin to generate the proper arguments primarily based on the question.

- A number of requires that the mannequin have the ability to select the proper operate along with selecting its arguments.

- Parallel requires that the mannequin resolve what number of instances it must invoke the given operate and what arguments it wants for every invocation.

- Parallel A number of checks if the mannequin possesses all the above expertise.

- Relevance Detection checks if the mannequin is ready to discern when it wants to make use of operate calling and when to not. Nonetheless, Relevance Detection solely incorporates examples the place not one of the features are related. Subsequently, a mannequin that’s unable to ever carry out operate calling would seemingly rating 100% on it. Nonetheless, given {that a} mannequin performs nicely within the different classes, it turns into an especially useful eval. This as soon as once more underscores the significance of understanding these evals nicely and viewing them holistically.

Every of the above classes will be evaluated by checking the Summary Syntax Tree (AST) or really executing the operate name. The AST analysis first constructs the summary syntax tree of the operate name, then extracts the arguments and checks in the event that they match the bottom fact’s doable solutions. (Footnote: For extra particulars seek advice from: https://gorilla.cs.berkeley.edu/blogs/8_berkeley_function_calling_leaderboard.html#bfcl)

We discovered that the AST analysis accuracy correlates nicely with the Executable analysis and, subsequently, solely thought-about AST.

| Strengths | Weaknesses |

|---|---|

| BFCL is pretty numerous and has a number of classes in every class. | The reference implementation applies bespoke parsing for a number of fashions which makes it troublesome to check pretty throughout fashions (Observe: in our implementation, we normalize the parsing throughout fashions to solely embody minimal parsing of the mannequin’s output.) |

| Broadly accepted locally. | A number of classes in BFCL are far too straightforward and never consultant of real-world use circumstances. Classes like easy and a number of seem like saturated and we consider that a lot of the greatest fashions have already crossed the noise ceiling right here. |

| Relevance detection is a vital functionality, significantly in real-world purposes. |

Nexus Operate Calling Leaderboard

The Nexus Operate Calling Leaderboard (NFCL) can be a single flip operate calling eval; not like BFCL, it doesn’t embody relevance detection. Nonetheless, it has a number of different options that make it an efficient eval for enterprise operate calling. It’s from the creators of the NexusRaven-v2 which is an OSS mannequin aimed toward operate calling. Whereas the NFCL reviews that it outperforms even GPT-4, it solely will get 68.06% on BFCL. This discrepancy as soon as once more reveals the significance of understanding what the eval numbers on a selected benchmark imply for a selected software.

The NFCL classes are break up primarily based on the supply of their APIs relatively than the sort of analysis. Nonetheless, additionally they differ in issue, as we describe beneath.

- NVD Library: The queries on this class are primarily based on the 2 search APIs from the Nationwide Vulnerability Database: searchCVE and searchCPE. Since there are solely two APIs to select from, this can be a comparatively straightforward process that solely requires calling one in every of them. The complexity arises from the truth that every operate has round 30 arguments.

- VirusTotal: These are primarily based on the VirusTotal APIs that are used to research suspicious recordsdata and URLs. There are 12 APIs however they’re easier than NVD. Subsequently, fashions usually rating barely larger on VirusTotal than NVD. VirusTotal nonetheless requires solely a single operate name.

- OTX: These are primarily based on the Open Risk Alternate APIs. There are 9 very simple APIs and that is often the class the place most fashions rating the best.

- Locations: These are primarily based on a set of APIs which might be associated to querying particulars about places. Whereas there are solely 7 pretty easy features, the questions require nested operate calls (eg., fun1(fun2(fun3(args))) ) which makes it tough for many fashions. Whereas a number of of the questions require just one operate name, many require nesting of as much as 7 features.

- Local weather API: Because the title suggests, that is primarily based on APIs used to retrieve local weather knowledge. Once more, whereas there are solely 9 easy features, they usually require a number of parallel calls and nested calls, making this benchmark fairly troublesome for many fashions.

- VirusTotal Nested: That is primarily based on the identical APIs because the VirusTotal benchmark, however the questions all require nested operate calls to be answered. This is among the hardest benchmarks, primarily as a result of most fashions weren’t designed to output nested operate calls.

- NVD Nested: That is primarily based on the identical APIs because the NVD benchmark, however the questions require nested operate calls to be answered. Not one of the fashions now we have examined have been capable of rating larger than 10% on this benchmark.

Observe that whereas we seek advice from the above classes as involving APIs, they’re applied utilizing static dummy Python operate definitions whose signatures are primarily based on real-world APIs. Beneath the BFCL taxonomy, NVD, VirustTotal and OTX classes could be labeled as A number of Operate however with extra candidate features to select from. The parallel examples in Local weather could be categorized as Parallel Operate, whereas the nested examples within the remaining classes would not have an equal. In reality, nested operate calls are a considerably uncommon eval since they’re usually dealt with by multi-turn interactions within the function-calling world. This additionally explains why most fashions, together with GPT-4, battle with them. Along with seemingly being out of distribution from the mannequin’s coaching knowledge, the LLM should plan the order of operate invocations and plug them into the proper argument of the later operate calls. We discover that regardless of not being consultant of typical use circumstances, it’s a helpful eval because it checks each planning and structured output era whereas being much less vulnerable to eval overfitting.

Scoring for NFCL is predicated purely on string matching on the ultimate operate name generated by the mannequin. Whereas this isn’t ultimate, we discover that it not often, if in any respect, results in false positives.

| Strengths | Weaknesses |

|---|---|

| Aside from OTX, not one of the classes seem like exhibiting indicators of saturation and usually reveal a big hole between fashions whose function-calling capabilities are anticipated to be completely different. | Most function-calling implementations seek advice from the OpenAI spec; subsequently, they’re unlikely to resolve the nested classes with out breaking it down right into a multi-turn interplay. |

| The tougher classes requiring nested and parallel calls are nonetheless difficult, even for fashions like GPT-4. We consider that whereas clients might not use this functionality instantly, it’s consultant of the mannequin’s capability to plan and execute which is important for advanced real-world purposes. | The scoring is predicated on actual string matching of the operate calls and could also be resulting in false negatives. |

| A few of the operate descriptions are missing and will be improved. Moreover, a number of of them are atypical in that they’ve numerous arguments or don’t have any required arguments. | |

| Not one of the examples check relevance detection. |

3. Outcomes from working the evals

To be able to make a good comparability throughout completely different fashions, we determined to run the evals ourselves with some minor modifications. These adjustments have been primarily made to maintain the prompting and parsing uniform throughout fashions.

We discovered that evaluating even on publicly accessible benchmarks is usually nuanced because the conduct can range wildly with completely different era kwargs. For instance, we discover that accuracy can range as a lot as 10% in some classes of BFCL when producing with Temperature 0.0 vs Temperature 0.7. Since function-calling is a reasonably programmatic process, we discover that utilizing Temperature 0.0 often ends in one of the best efficiency throughout fashions. We made the choice to incorporate the operate definitions and descriptions within the system immediate as repeating them in every person immediate would incur a a lot larger token price in multi-turn conversations. We additionally used the identical minimal parsing throughout fashions in our implementations for each NFCL and BFCL. Observe that the DBRX-instruct numbers that we report are decrease than that from the publicly hosted leaderboard whereas the numbers for the opposite fashions are larger. It’s because the general public leaderboard makes use of Temperature 0.7 and bespoke parsing for DBRX.

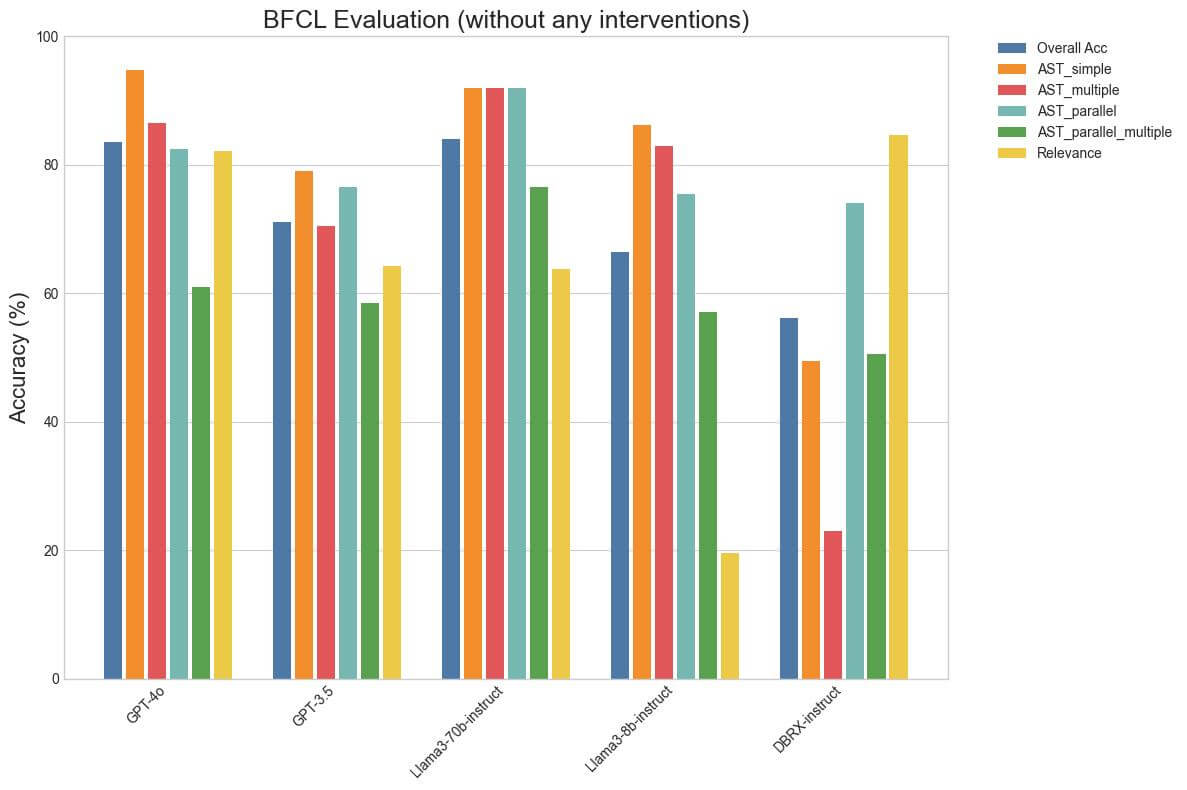

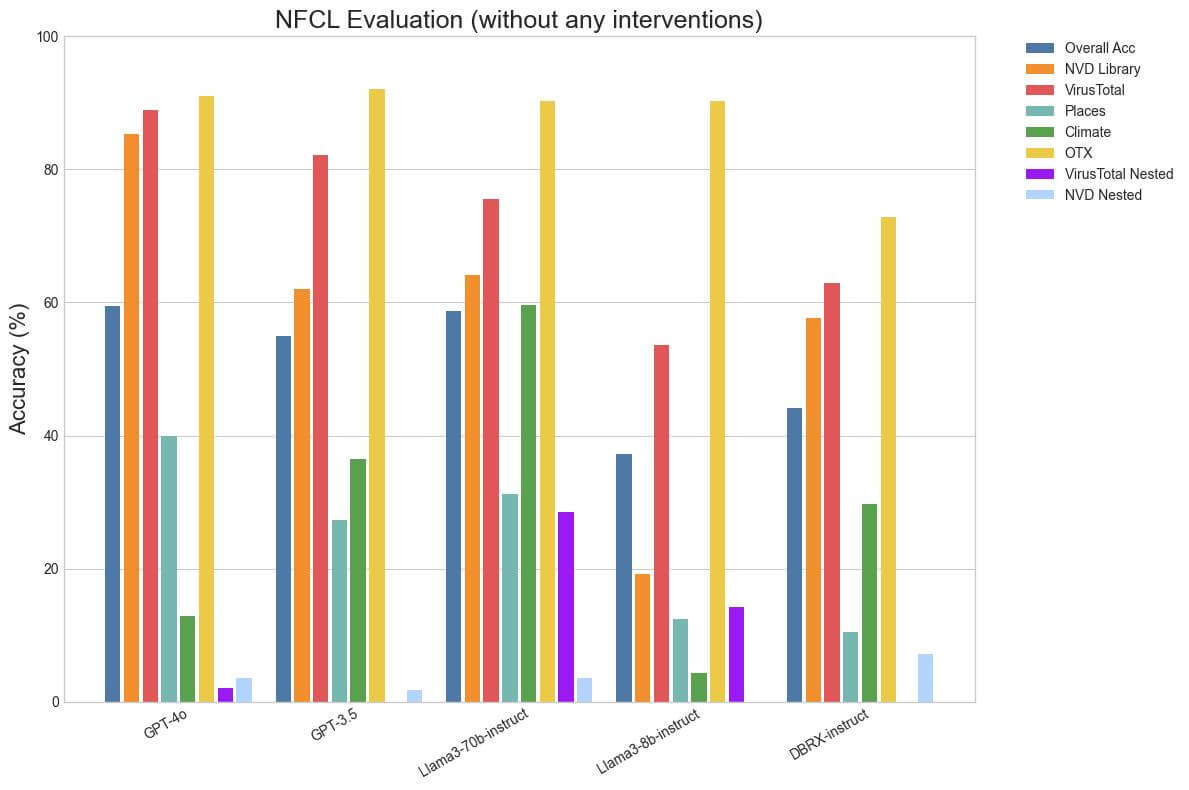

We discover that the outcomes on NFCL with none adjustments align with the anticipated ordering, in that GPT-4o is one of the best in most classes, adopted intently by Llama3-70b-instruct, then GPT-3.5 after which DBRX-instruct. Llama3-70b-instruct closes the hole to GPT-4o on Local weather and Locations, seemingly as a result of they require nested calls. Considerably surprisingly, DBRX-instruct performs one of the best on NVD Nested regardless of not being educated explicitly for function-calling. We suspect that it’s because it’s not biased towards nested operate calls and easily solves it as a programming train. BFCL reveals some indicators of saturation, in that Llama3-70b-instruct outperforms GPT-4o in nearly each class apart from Relevance Detection, though the latter has seemingly been educated explicitly for function-calling because it helps instrument use. In reality, LLaMa-3-8b-instruct is surprisingly near GPT-4 on a number of BFCL classes regardless of being a clearly inferior mannequin. We posit {that a} excessive rating on BFCL is a needed, relatively than ample, situation to be good at operate calling. Low scores point out {that a} mannequin clearly struggles with operate calling whereas a excessive rating doesn’t assure {that a} mannequin is best at operate calling.

4. Bettering Operate-calling Efficiency

As soon as now we have a dependable method to consider a functionality and know tips on how to interpret the outcomes, the plain subsequent step is to attempt to enhance these outcomes. We discovered that one of many keys to unlocking a mannequin’s function-calling talents is specifying an in depth system immediate that provides the mannequin the power to purpose earlier than making a call on which operate to name, if any. Additional, directing it to construction its outputs utilizing XML tags and a considerably strict format makes parsing the operate name straightforward and dependable. This eliminates the necessity for bespoke parsing strategies for various fashions and purposes.

One other key aspect is making certain that the mannequin is given entry to the main points of the operate, its arguments and their knowledge varieties in an efficient format. Guaranteeing that every argument has a knowledge kind and a transparent description helps elevate efficiency. Few-shot examples of anticipated mannequin conduct are significantly efficient at guiding the mannequin to guage the relevance of the handed features and discouraging the mannequin from hallucinating features. In our immediate, we used few-shot examples to information the mannequin to undergo every of the supplied features one-by-one and consider whether or not they’re related to the duty earlier than deciding which operate to name.

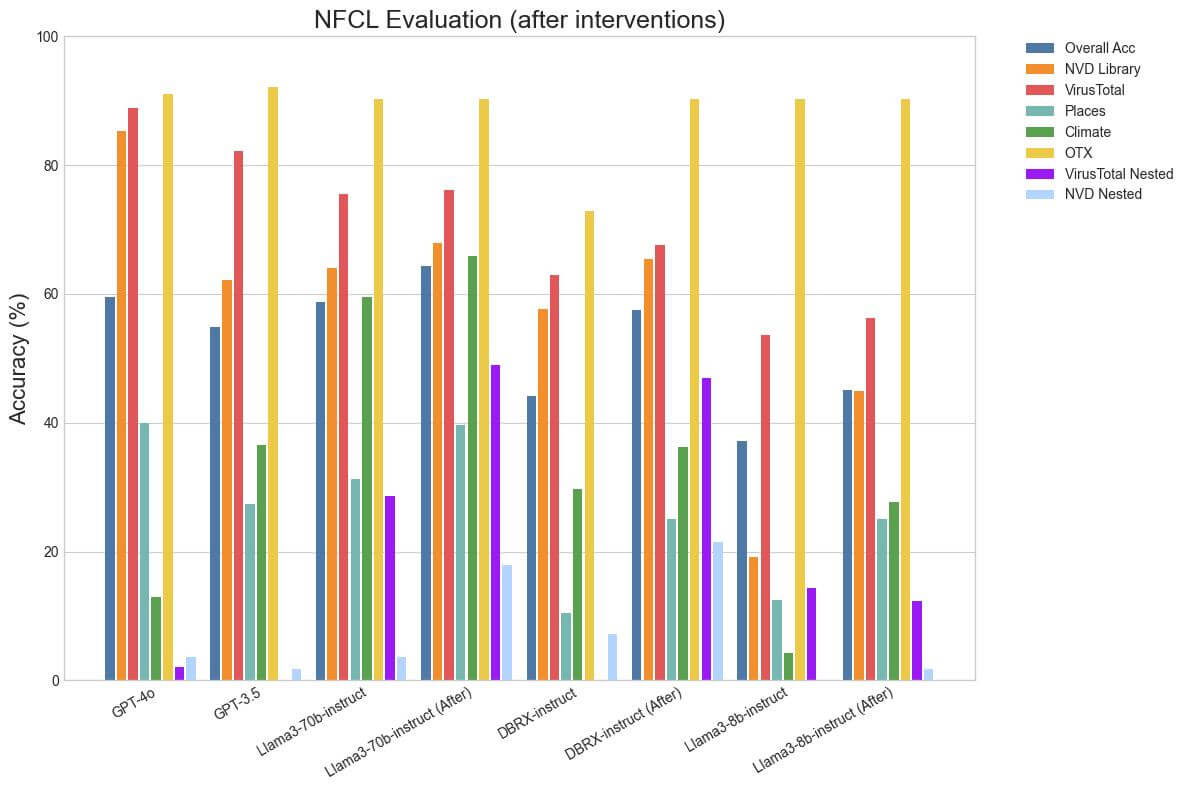

With this strategy, we have been capable of improve the Relevance Detection accuracy of Llama3-70b-instruct from 63.75% to 75.41% and Llama3-8b-instruct from 19.58% to 78.33%. There are a few counterintuitive outcomes right here: the relevance detection efficiency of Llama3-8b-instruct is larger than the 70b variant! Additionally, the efficiency of DBRX-instruct really dropped from 84.58% to 77.08%. The rationale for this is because of a limitation in the way in which relevance detection is applied. Since all of the check circumstances solely include irrelevant features, a mannequin that’s poor at function-calling and calls features incorrectly and even fails to ever name a operate will do exceptionally nicely on this class. Subsequently, it may be deceptive to view this quantity exterior of the context of its general efficiency. The excessive relevance detection accuracy of DBRX-instruct earlier than our adjustments is as a result of its outputs have been usually structurally flawed and subsequently its general function-calling efficiency was poor.

The overall instructions in our system immediate seem like this:

Please use your personal judgment as to whether or not or not you must name a operate. In specific, you could observe these guiding ideas:

1. You might assume the person has applied the operate themselves.

2. You might assume the person will name the operate on their very own. It's best to NOT ask the person to name the operate and let you understand the outcome; they may do that on their very own. You simply want to move the title and arguments.

3. By no means name a operate twice with the identical arguments. Do not repeat your operate calls!

4. If none of the features are related to the person's query, DO NOT MAKE any pointless operate calls.

5. Don't assume entry to any features that aren't listed on this immediate, irrespective of how easy. Don't assume entry to a code interpretor both. DO NOT MAKE UP FUNCTIONS.

You possibly can solely name features in accordance with the next formatting guidelines:

Rule 1: All of the features you've gotten entry to are contained inside {tool_list_start}{tool_list_end} XML tags. You can't use any features that aren't listed between these tags.

Rule 2: For every operate name, output JSON which conforms to the schema of the operate. You could wrap the operate name in {tool_call_start}[...list of tool calls...]{tool_call_end} XML tags. Every name might be a JSON object with the keys "title" and "arguments". The "title" key will include the title of the operate you're calling, and the "arguments" key will include the arguments you're passing to the operate as a JSON object. The highest degree construction is an inventory of those objects. YOU MUST OUTPUT VALID JSON BETWEEN THE {tool_call_start} AND {tool_call_end} TAGS!

Rule 3: If person decides to run the operate, they may output the results of the operate name within the following question. If it solutions the person's query, you must incorporate the output of the operate in your following message.We additionally specified that the mannequin makes use of the <considering> tag to generate the rationale for the operate name whereas specifying the ultimate operate name inside <tool_call> tags.

Supposed the features accessible to you are:

<instruments>

[{'type': 'function', 'function': {'name': 'determine_body_mass_index', 'description': 'Calculate body mass index given weight and height.', 'parameters': {'type': 'object', 'properties': {'weight': {'type': 'number', 'description': 'Weight of the individual in kilograms. This is a float type value.', 'format': 'float'}, 'height': {'type': 'number', 'description': 'Height of the individual in meters. This is a float type value.', 'format': 'float'}}, 'required': ['weight', 'height']}}}]

[{'type': 'function', 'function': {'name': 'math_prod', 'description': 'Compute the product of all numbers in a list.', 'parameters': {'type': 'object', 'properties': {'numbers': {'type': 'array', 'items': {'type': 'number'}, 'description': 'The list of numbers to be added up.'}, 'decimal_places': {'type': 'integer', 'description': 'The number of decimal places to round to. Default is 2.'}}, 'required': ['numbers']}}}]

[{'type': 'function', 'function': {'name': 'distance_calculator_calculate', 'description': 'Calculate the distance between two geographical coordinates.', 'parameters': {'type': 'object', 'properties': {'coordinate_1': {'type': 'array', 'items': {'type': 'number'}, 'description': 'The first coordinate, a pair of latitude and longitude.'}, 'coordinate_2': {'type': 'array', 'items': {'type': 'number'}, 'description': 'The second coordinate, a pair of latitude and longitude.'}}, 'required': ['coordinate_1', 'coordinate_2']}}}]

</instruments>

And the person asks:

Query: What is the present time in New York?

Then you must reply with:

<considering>

Let's begin with an inventory of features I've entry to:

- determine_body_mass_index: since this operate just isn't related to getting the present time, I cannot name it.

- math_prod: since this operate just isn't related to getting the present time, I cannot name it.

- distance_calculator_calculate: since this operate just isn't related to getting the present time, I cannot name it.

Not one of the accessible features, [determine_body_mass_index, math_prod, distance_calculator] are pertinent to the given question. Please examine in the event you not noted any related features.

As a Massive Language Mannequin, with out entry to the suitable instruments, I'm unable to offer the present time in New York.

Whereas the precise system immediate that we used is probably not appropriate for all purposes and all fashions, the guiding ideas can be utilized to tailor it for particular use circumstances. For instance, with Llama-3-70b-instruct we used an abridged model of our full system immediate which skipped the few-shot examples and omitted among the extra verbose directions. We’d additionally like to emphasise that LLMs will be fairly delicate to indentation and we encourage utilizing markdown, capitalization and indentation fastidiously.

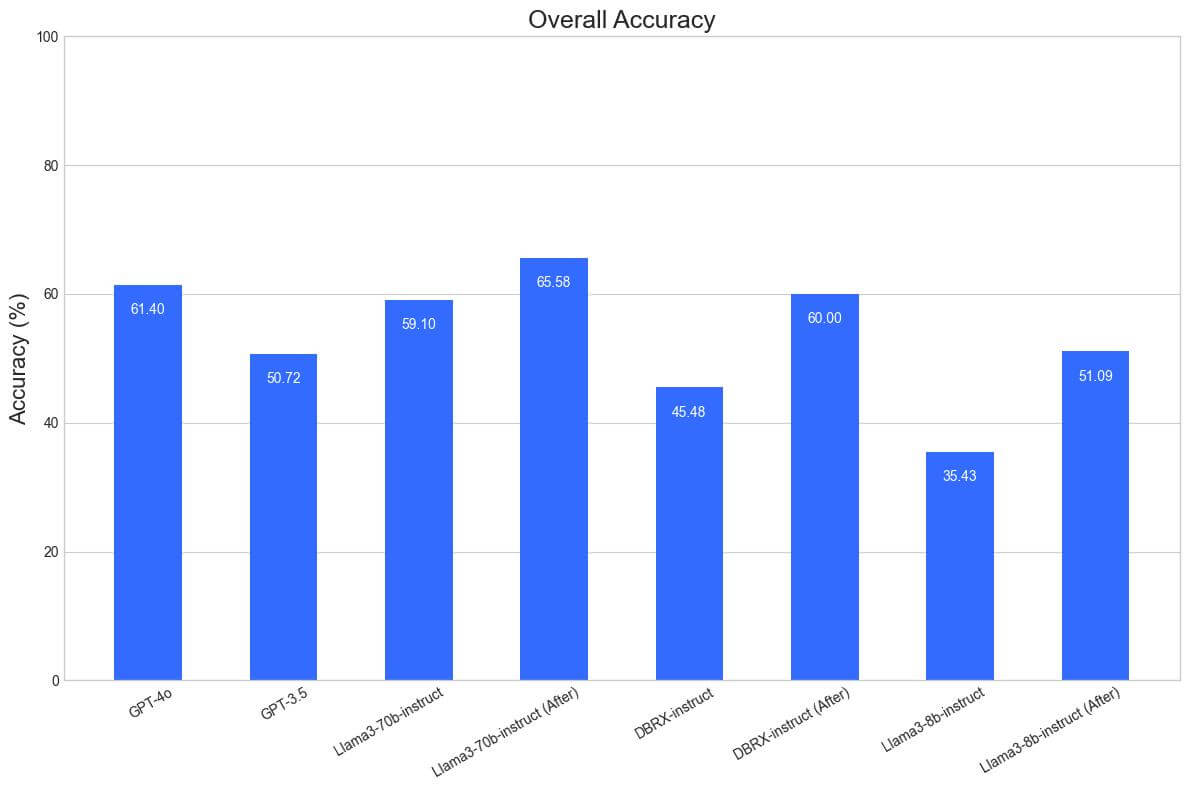

We computed an mixture metric by averaging throughout the subcategories in BFCL and NFCL whereas dropping the simplest classes (Easy, OTX). We additionally ignored the Local weather column, because it weights the nested operate calling capability too extremely. Lastly, we upweighted relevance detection since we discovered it significantly pertinent to the power of fashions to carry out operate calling within the wild.

The combination metric exhibits that Llama3-70b-instruct, which was already approaching GPT-4o in high quality, surpasses it with our modifications. Each DBRX-instruct and Llama3-8b-instruct which begin at beneath GPT-3.5 high quality surpass it and start to strategy GPT-4o high quality on these benchmarks.

A further word is that LLMs don’t present ensures on whether or not they can generate output that adheres to a given schema. As demonstrated by the outcomes above, one of the best open supply fashions exhibit spectacular capabilities on this space. Nonetheless, they’re nonetheless vulnerable to hallucinations and occasional errors. One method to mitigate these shortcomings is by utilizing structured era (in any other case referred to as constrained decoding), a decoding method that gives ensures of the format through which an LLM outputs tokens. That is executed by modifying the decoding step throughout LLM era to get rid of tokens that might violate given structural constraints. Standard open supply structured era libraries are Outlines, Steerage, and SGlang. From an engineering standpoint, structured era offers sturdy ensures which might be helpful for productionisation which is why we use it in our present implementation of operate calling on the Basis Fashions API. On this weblog, now we have solely offered outcomes with unstructured era for simplicity. Nonetheless, we need to emphasize {that a} well-implemented structured era pipeline ought to additional enhance the function-calling talents of an LLM.

5. Conclusion

Operate calling is a fancy functionality that considerably enhances the utility of LLMs in real-world purposes. Nonetheless, evaluating and bettering this functionality is way from simple. Listed below are some key takeaways:

- Complete analysis: No single benchmark tells the entire story. A holistic strategy, combining a number of analysis frameworks like BFCL and NFCL is essential to understanding a mannequin’s operate calling capabilities.

- Nuanced interpretation: Excessive scores on sure benchmarks, whereas needed, should not at all times ample to ensure superior function-calling efficiency in apply. It’s important to grasp the strengths and limitations of every analysis metric.

- The facility of prompting: We’ve demonstrated that cautious prompting and output structuring can dramatically enhance a mannequin’s function-calling talents. This strategy allowed us to raise the efficiency of fashions like DBRX and Llama-3, bringing them nearer to and even surpassing GPT-4o in sure features.

- Relevance detection: This often-overlooked facet of operate calling is essential for real-world purposes. Our enhancements on this space spotlight the significance of guiding fashions to purpose about operate relevance.

To study extra about operate calling, evaluate our official documentation and check out our Foundational Mannequin APIs.