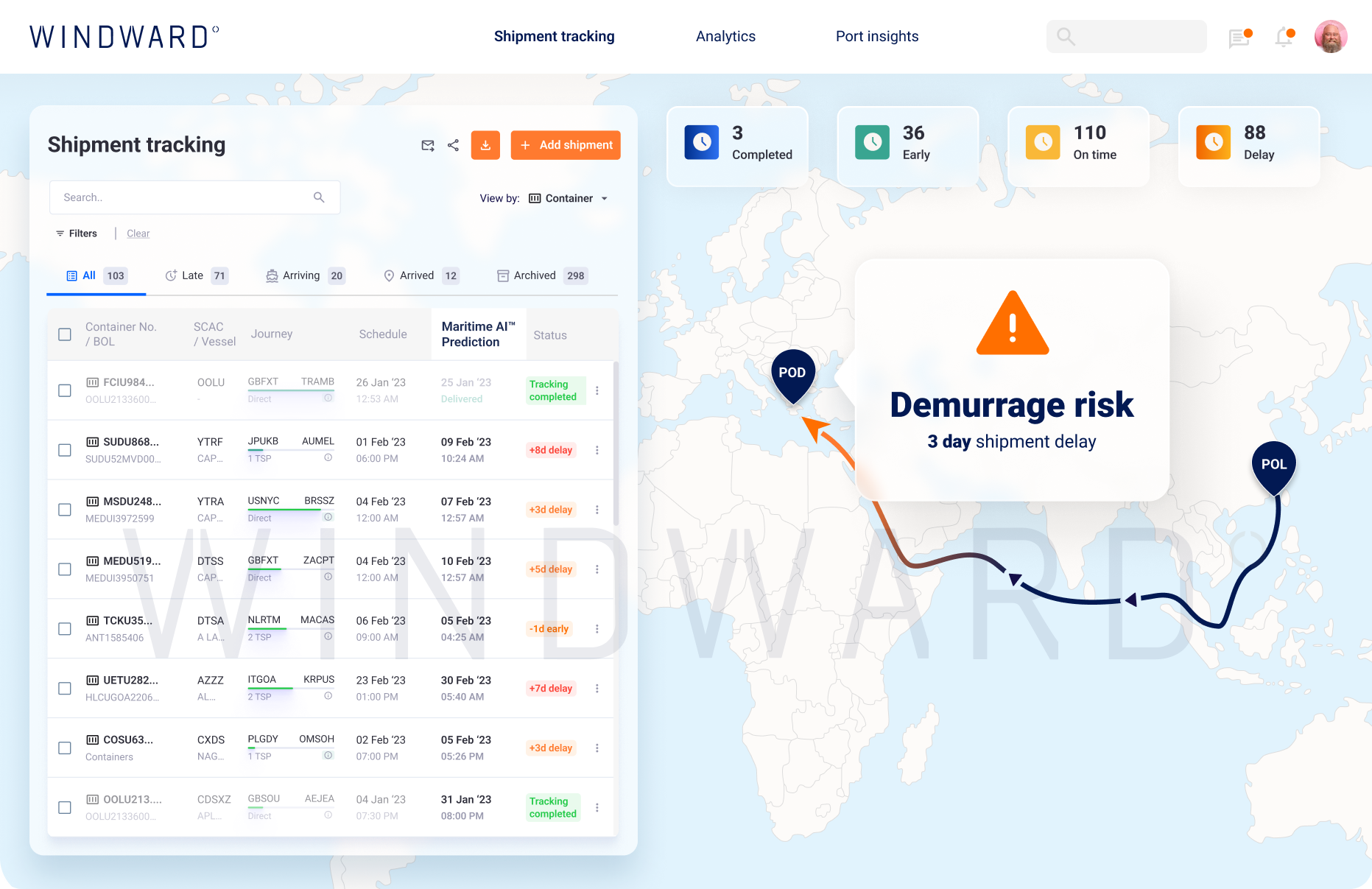

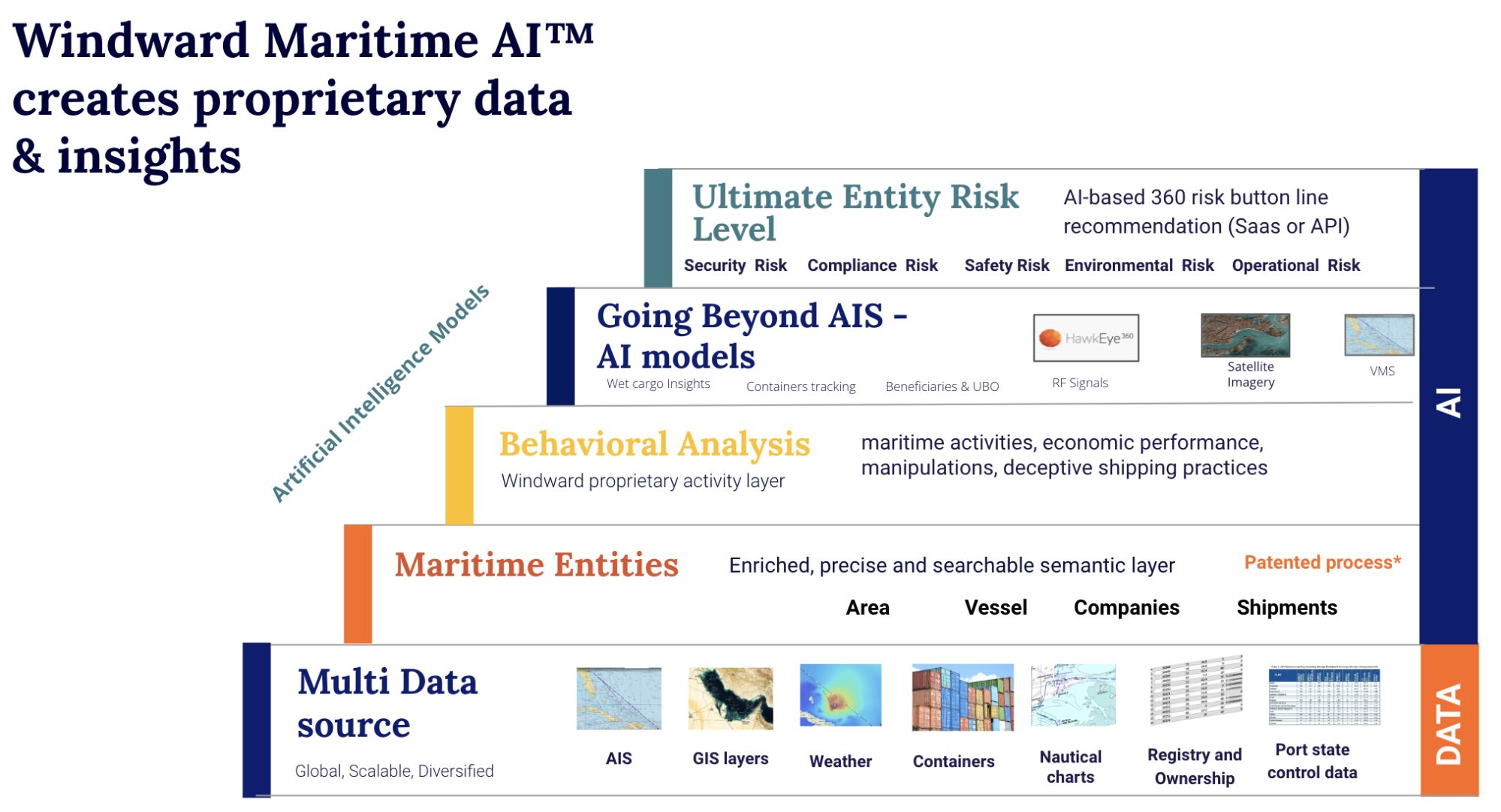

Windward (LSE:WNWD) is the leading Maritime AI™ company, providing an all-in-one platform for risk management and maritime domain awareness to accelerate global trade. Windward monitors and analyzes what 500k+ vessels around the world are doing every day, including where they go, what cargo they carry, how they handle inclement weather and which ports they frequent. With 90% of trade transported by sea, this data is critical to keeping the global supply chain on track, but it can be difficult to disentangle and act on. Windward fills this niche by providing actionable intelligence with real-time ETA tracking, carrier performance insights, risk monitoring and mitigation and more.

In 2022, Windward embarked on a number of changes to its application, prompting a reconsideration of its underlying data stack. For one, the company decided to invest in an API Insights Lab where customers and partners across suppliers, carriers, governments and insurance companies could use maritime data as part of their internal systems and workflows. This enabled each of these players to use the maritime data in distinct ways, with insurance companies determining price and assessing risk and governments monitoring illegal activities. As a result, Windward wanted an underlying data stack that took an API-first approach.

Windward also expanded its AI insights to include risks related to illegal, unreported and unregulated (IUU) fishing, and to identify shadow fleets that obscure the transport of sanctioned Russian oil/wet cargo. To support this, Windward’s data platform needed to enable rapid iteration so the team could quickly innovate and build more AI capabilities.

Finally, Windward wanted to move its entire platform from batch-based data infrastructure to streaming. This transition supports new use cases that require faster event analysis than was needed until now.

In this blog, we’ll describe the new data platform for Windward and how it is API first, enables rapid product iteration and is architected for real-time, streaming data.

Data Challenges

Windward tracks vessel positions generated by AIS transmissions at sea. Over 100M AIS transmissions are added every day to track each vessel’s location at any given point in time. If a vessel makes a turn, Windward can use a minimal number of AIS transmissions to chart its path. This data can also be used to determine the speed, ports visited and other variables that are part of the journey. AIS transmission data is somewhat unreliable, however, which makes it challenging to associate a transmission with the correct vessel. As a result, about 30% of all data ends up triggering data changes and deletions.

In addition to the AIS transmission data, there are other data sources for enrichment, including weather, nautical charts, ownership and more. This enrichment data has changing schemas, and new data providers are constantly being added to enhance the insights, making it challenging for Windward to support with relational databases that enforce strict schemas.

Using real-time and historical data, Windward runs behavioral analysis to examine maritime activities, economic performance and deceptive shipping practices. The team also creates AI models that are used to determine environmental risk, sanctions compliance risk, operational risk and more. All of these assessments go back to the AI insights initiative that led Windward to re-examine its data stack.

Because Windward operated a batch-based data stack, it stored raw data in S3. It used MongoDB as its metadata store to capture vessel and company data. The vessel positions data, which is by nature a time series geospatial data set, was stored in both PostgreSQL and Cassandra to support different use cases. Windward also used specialized databases like Elasticsearch for specific functionality such as text search. When Windward took inventory of its data architecture, it had five different databases, making it challenging to support new use cases, achieve performant contextual queries and scale the database systems.

Furthermore, as Windward introduced new use cases, it started to hit limitations with its data stack. In the words of Benny Keinan, Vice President of R&D at Windward, “We were stuck on feature development and working too hard on features that should have been easy to build. The data stack and model that we started Windward with twelve years ago was not ideal for the search and analytical features needed to digitally and intelligently transform the maritime industry.”

Benny and team decided to embark on a new data stack that could better support the logistics tracking needs of their customers and the maritime industry. They started by considering new product requests from prospects and customers that would be hard to support in the existing stack, which limited the opportunity to generate significant new revenue. These included:

- Geo queries: Customers wanted to create custom polygons to monitor particular maritime areas of interest. They wanted to be able to search historical data for newly defined polygons and get results within seconds.

- Vessel search: Customers wanted to search for a specific vessel and see all of the contextual information, including AIS transmissions, ownership, activities and relations between activities (for example, the sequence of activities). Search and join queries were hard to support in a timely manner in the application experience.

- Partial and fuzzy word search: The customer might only have a partial vessel name, so the database needs to support partial word searches.
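To make the geo and name-search requests in the list above concrete, here is a minimal in-memory sketch in Python. It is only an illustration: the `Position` record, the vessel names, the polygon and the 0.8 similarity threshold are all hypothetical, and a production system would push these predicates into an indexed database rather than scan records in application code.

```python
from dataclasses import dataclass
from difflib import SequenceMatcher


@dataclass(frozen=True)
class Position:
    vessel: str  # hypothetical vessel name
    lon: float
    lat: float


def point_in_polygon(lon, lat, polygon):
    """Ray-casting test; polygon is a list of (lon, lat) vertices."""
    inside = False
    n = len(polygon)
    for i in range(n):
        x1, y1 = polygon[i]
        x2, y2 = polygon[(i + 1) % n]
        # Does a horizontal ray starting at the point cross this edge?
        if (y1 > lat) != (y2 > lat):
            x_cross = x1 + (lat - y1) * (x2 - x1) / (y2 - y1)
            if lon < x_cross:
                inside = not inside
    return inside


def vessels_in_area(history, polygon):
    """Names of vessels with at least one historical position inside the polygon."""
    return sorted({p.vessel for p in history
                   if point_in_polygon(p.lon, p.lat, polygon)})


def search_names(names, query, threshold=0.8):
    """Partial match by substring, fuzzy match by similarity ratio."""
    q = query.lower()
    hits = []
    for name in names:
        candidate = name.lower()
        if q in candidate or SequenceMatcher(None, q, candidate).ratio() >= threshold:
            hits.append(name)
    return hits


# Made-up sample data for illustration only
history = [
    Position("OCEAN STAR", 1.0, 1.0),
    Position("NORTHERN LIGHT", 5.0, 5.0),
    Position("OCEAN STAR", 3.0, 1.5),
]
area = [(0.0, 0.0), (4.0, 0.0), (4.0, 3.0), (0.0, 3.0)]
names = ["OCEAN STAR", "NORTHERN LIGHT", "OCEAN PEARL"]

print(vessels_in_area(history, area))
print(search_names(names, "ocean"))
print(search_names(names, "ocaen star"))
```

The polygon is defined after the positions were recorded, which mirrors the request to run newly drawn areas against historical data. A linear scan like this would not return in seconds on billions of records, which is why the requirement points toward geospatial and search indexing.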

Windward realized that the database should support both search and analytics on streaming data to meet its current and future product development needs.

Requirements for Next-Generation Database

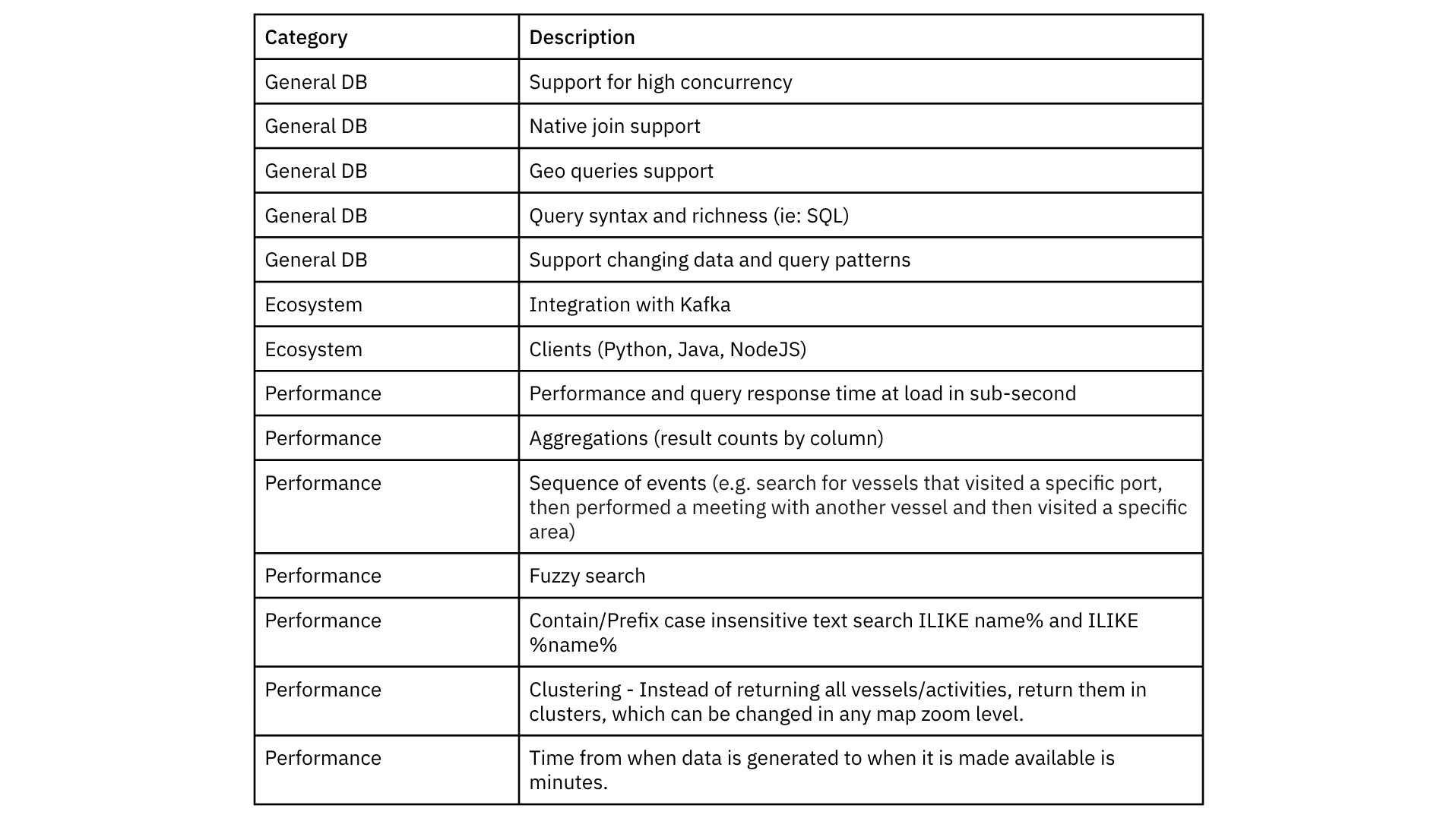

The number of databases under management and the challenges of supporting new use case requirements prompted Windward to consolidate its data stack. Taking a use-case-centric approach, Windward was able to identify the following requirements:

After coming up with the requirements, Windward evaluated more than 10 different databases, of which only Rockset and Snowflake were capable of supporting the main use cases for search and analytics in its application.

Rockset was short-listed for the evaluation because it is designed for fast search and analytics on streaming data and takes an API-first approach. Additionally, Rockset supports in-place updates, making it efficient to process changes to AIS transmissions and their associated vessels. With support for SQL on deeply nested semi-structured data, Windward saw the potential to consolidate geo data and time series data into one system and query it using SQL. Because one of the limitations of the existing systems was their inability to perform fast searches, Windward liked Rockset’s Converged Index, which indexes the data in a search index, a columnar store and a row store to support a wide range of query patterns out of the box.
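The idea of keeping the same data in a row store, a columnar store and a search index, with updates applied in place, can be sketched with a toy in-memory structure. This is not Rockset’s implementation or API; the class `ToyConvergedIndex`, its methods and the field names are hypothetical and exist only to show why each layout suits a different query pattern.

```python
from collections import defaultdict


class ToyConvergedIndex:
    """Keeps every document in a row store, a column store and an inverted index."""

    def __init__(self):
        self.rows = {}                                         # doc_id -> full document
        self.columns = defaultdict(dict)                       # field -> {doc_id: value}
        self.inverted = defaultdict(lambda: defaultdict(set))  # field -> value -> doc_ids

    def upsert(self, doc_id, doc):
        # Remove stale entries first so an update replaces the old version in place
        self.delete(doc_id)
        self.rows[doc_id] = dict(doc)
        for field, value in doc.items():
            self.columns[field][doc_id] = value
            self.inverted[field][value].add(doc_id)

    def delete(self, doc_id):
        old = self.rows.pop(doc_id, None)
        if old is None:
            return
        for field, value in old.items():
            del self.columns[field][doc_id]
            self.inverted[field][value].discard(doc_id)

    def get(self, doc_id):
        # Row store: fetch one whole document
        return self.rows.get(doc_id)

    def search(self, field, value):
        # Inverted index: selective lookup without scanning
        return sorted(self.inverted[field][value])

    def average(self, field):
        # Column store: aggregate over a single field
        values = list(self.columns[field].values())
        return sum(values) / len(values) if values else None


# Hypothetical AIS-like records
index = ToyConvergedIndex()
index.upsert("t1", {"vessel": "A", "speed_knots": 10.0})
index.upsert("t2", {"vessel": "A", "speed_knots": 14.0})

# A transmission is re-associated with a different vessel: one in-place update
index.upsert("t2", {"vessel": "B", "speed_knots": 14.0})

print(index.search("vessel", "A"))
print(index.search("vessel", "B"))
print(index.average("speed_knots"))
```

The re-association step is the case the text describes: when a transmission turns out to belong to another vessel, all three structures are corrected by a single write instead of a batch rebuild.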

Snowflake was evaluated for its columnar store and its ability to support large-scale aggregations and joins on historical data. Both Snowflake and Rockset are cloud-native and fully managed, minimizing infrastructure operations for the Windward engineering team so that it can focus on building new AI insights and capabilities into its maritime application.

Performance Evaluation of Rockset and Snowflake

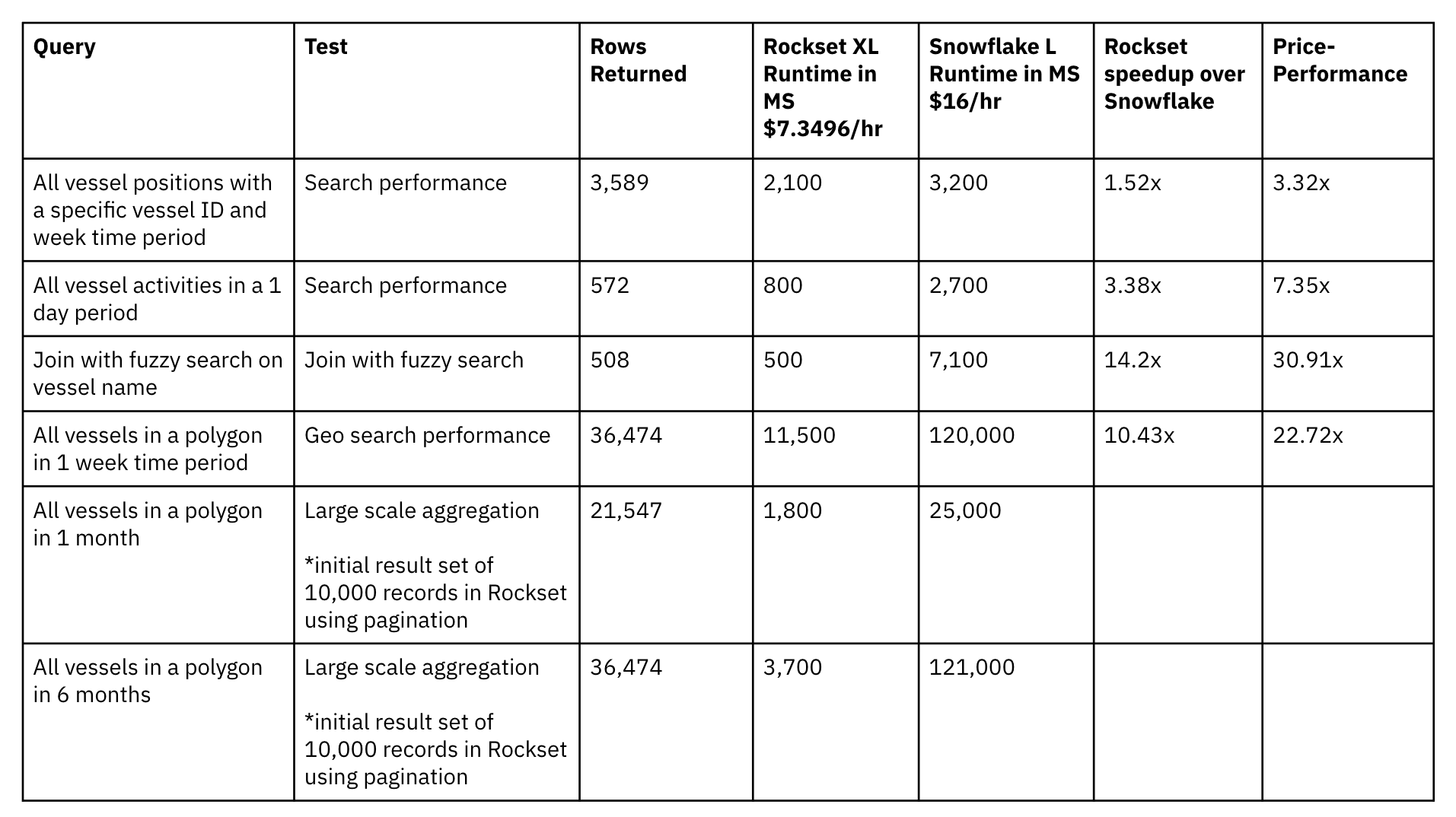

Windward evaluated the query performance of the systems on a suite of 6 typical queries, including search, geosearch, fuzzy matching and large-scale aggregations, on a dataset of ~2B records.

The performance of Rockset was evaluated on an XL Virtual Instance, an allocation of 32 vCPU and 256 GB RAM, which costs $7.3496/hr in the AWS US-West region. The performance of Snowflake was evaluated on a Large virtual data warehouse, which costs $16/hr in AWS US-West.

The performance tests show that Rockset is able to achieve faster query performance at less than half the price of Snowflake. Rockset saw up to a 30.91x price-performance advantage over Snowflake for Windward’s use case. The query speed gains over Snowflake are due to Rockset’s Converged Indexing technology, in which a number of indexes are leveraged in parallel to achieve fast performance on large-scale data.
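The relationship between the hourly prices and the price-performance figure can be worked through in a few lines of Python. The text does not say how price-performance was computed, so this sketch assumes it is the ratio of per-query cost, with per-query cost taken as query latency multiplied by the hourly price. The function names are hypothetical, and the per-query latencies are not given in the text, so none are invented here.

```python
ROCKSET_PRICE_PER_HOUR = 7.3496   # XL Virtual Instance, from the text
SNOWFLAKE_PRICE_PER_HOUR = 16.0   # Large warehouse, from the text
QUOTED_ADVANTAGE = 30.91          # best-case price-performance figure, from the text


def cost_per_query(latency_seconds, price_per_hour):
    """Assumed cost model: compute time consumed by one query, priced per hour."""
    return latency_seconds * price_per_hour / 3600.0


def price_performance_advantage(latency_a, price_a, latency_b, price_b):
    """How many times cheaper one query is on system A than on system B."""
    return cost_per_query(latency_b, price_b) / cost_per_query(latency_a, price_a)


# Hourly price of Rockset relative to Snowflake (below 0.5, i.e. less than half)
price_ratio = ROCKSET_PRICE_PER_HOUR / SNOWFLAKE_PRICE_PER_HOUR

# With identical latency, the advantage would come from price alone
price_only_advantage = price_performance_advantage(
    1.0, ROCKSET_PRICE_PER_HOUR, 1.0, SNOWFLAKE_PRICE_PER_HOUR
)

# Under the assumed model, the quoted figure implies this latency speedup
implied_speedup = QUOTED_ADVANTAGE * price_ratio

print(f"price ratio: {price_ratio:.4f}")
print(f"price-only advantage: {price_only_advantage:.2f}x")
print(f"implied speedup: {implied_speedup:.2f}x")
```

Under this assumption, the hourly price gap alone accounts for roughly a 2x advantage, so most of the quoted best-case figure would have to come from lower query latency rather than from pricing.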

This performance testing made Windward confident that Rockset could meet the seconds-level query latency the application requires while staying within budget today and into the future.

Iterating in an Ocean of Data

With Rockset, Windward is able to support the rapidly shifting needs of the maritime ecosystem, giving its customers the visibility and AI insights to respond and stay compliant.

Analytic capabilities that used to take down Windward’s PostgreSQL database or, at a minimum, take 40 minutes to load are now provided to customers within seconds. Additionally, Windward is consolidating three databases into Rockset to simplify operations and make it easier to support new product requirements. This gives Windward’s engineering team time back to develop new AI insights.

Benny Keinan describes how product development shifted with Rockset: “We’re able to offer new capabilities to our customers that weren’t possible before Rockset. As a result, maritime leaders leverage AI insights to navigate their supply chains through the Coronavirus pandemic, War in the Ukraine, decarbonization initiatives and more. Rockset has helped us handle the changing needs of the maritime industry, all in real time.”

You can learn more about the foundational pieces and principles of Windward’s AI on their blog: A Look into the “Engine Room” of Windward’s AI.