We’re thrilled to introduce keras3, the next version of the Keras R package. keras3 is a ground-up rebuild of {keras}, maintaining the beloved features of the original while refining and simplifying the API based on valuable insights gathered over the past few years.

Keras provides a complete toolkit for building deep learning models in R; it’s never been easier to build, train, evaluate, and deploy deep learning models.

Installation

To install Keras 3:
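A minimal installation sketch, assuming a standard CRAN setup and default arguments: install.packages() fetches the R package, and install_keras() then installs the Python side (Keras 3 plus a backend) into an environment managed by reticulate. Check ?install_keras for the backend and GPU options available in your version.

```r
# Install the {keras3} R package from CRAN
install.packages("keras3")

# Attach the package and install the Python dependencies
# (Keras 3 plus a backend) using the default settings
library(keras3)
install_keras()
```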

What’s new:

Documentation

Great documentation is essential, and we’ve worked hard to make sure that keras3 has excellent documentation, both now and in the future.

Keras 3 comes with a full refresh of the website: https://keras.posit.co. There, you will find guides, tutorials, reference pages with rendered examples, and a new examples gallery. All the reference pages and guides are also available via R’s built-in help system.

In a fast-moving ecosystem like deep learning, creating great documentation and wrappers once is not enough. There also need to be workflows that ensure the documentation stays up-to-date with upstream dependencies. To accomplish this, {keras3} includes two new maintainer features that ensure the R documentation and function wrappers will stay up-to-date:

- We now take snapshots of the upstream documentation and API surface. With each release, all R documentation is rebased on upstream updates. This workflow ensures that all R documentation (guides, examples, vignettes, and reference pages) and R function signatures stay up-to-date with upstream. This snapshot-and-rebase functionality is implemented in a new standalone R package, {doctether}, which may be useful for R package maintainers needing to keep documentation in parity with dependencies.

- All examples and vignettes can now be evaluated and rendered during a package build. This ensures that no stale or broken example code makes it into a release. It also means all user-facing example code now additionally serves as an extended suite of snapshot unit and integration tests.

  Evaluating code in vignettes and examples is still not permitted according to CRAN restrictions. We work around the CRAN restriction by adding additional package build steps that pre-render examples and vignettes.

Combined, these two features will make it substantially easier for Keras in R to maintain feature parity and up-to-date documentation with the Python API to Keras.

Multi-backend support

Soon after its launch in 2015, Keras featured support for the most popular deep learning frameworks: TensorFlow, Theano, MXNet, and CNTK. Over time, the landscape shifted; Theano, MXNet, and CNTK were retired, and TensorFlow surged in popularity. In 2021, three years ago, TensorFlow became the premier and only supported Keras backend. Now, the landscape has shifted again.

Keras 3 brings the return of multi-backend support. Choose a backend by calling:
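A short sketch of backend selection, assuming the chosen framework (here Jax) was installed alongside Keras. The backend should be set before Keras is first used in the R session; config_backend() then reports which backend is active.

```r
library(keras3)

# Select the backend before any Keras function is used in this session.
# Other choices include "tensorflow" (the default) and "torch".
use_backend("jax")

# Report the active backend
config_backend()
```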

The default backend continues to be TensorFlow, which is the best choice for most users today; for small-to-medium sized models this is still the fastest backend. However, each backend has different strengths, and being able to switch easily will let you adapt to changes as your project, or the frameworks themselves, evolve.

Today, switching to the Jax backend can, for some model types, bring substantial speed improvements. Jax is also the only backend that has support for a new model parallelism distributed training API. Switching to Torch can be helpful during development, often producing simpler tracebacks while debugging.

Keras 3 also lets you incorporate any pre-existing Torch, Jax, or Flax module as a standard Keras layer by using the appropriate wrapper, letting you build atop existing projects with Keras. For example, train a Torch model using the Keras high-level training API (compile() + fit()), or include a Flax module as a component of a larger Keras model. The new multi-backend support lets you use Keras à la carte.

The ‘Ops’ family

{keras3} introduces a new “Operations” family of functions. The Ops family, currently with over 200 functions, provides a comprehensive suite of operations typically needed when operating on nd-arrays for deep learning. The Operations family supersedes and greatly expands on the former family of backend functions prefixed with k_ in the {keras} package.

The Ops functions let you write backend-agnostic code. They provide a uniform API, regardless of whether you’re working with TensorFlow Tensors, Jax Arrays, Torch Tensors, Keras Symbolic Tensors, NumPy arrays, or R arrays.

The Ops functions:

- all start with the prefix op_ (e.g., op_stack())
- all are pure functions (they produce no side effects)
- all use consistent 1-based indexing, and coerce doubles to integers as needed
- all are safe to use with any backend (tensorflow, jax, torch, numpy)
- all are safe to use in both eager and graph/jit/tracing modes

The Ops API includes:

- The entirety of the NumPy API (numpy.*)
- The TensorFlow NN API (tf.nn.*)
- Common linear algebra functions (a subset of scipy.linalg.*)
- A subfamily of image transformers
- A comprehensive set of loss functions
- And more!
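To illustrate the properties listed above, here is a small sketch that should run unchanged on any backend. It assumes only that {keras3} is installed; the input is a plain R matrix, and all axes are given 1-based.

```r
library(keras3)

# Ops accept plain R arrays; convert a 2 x 3 integer matrix to a float tensor
x <- op_convert_to_tensor(matrix(1:6, nrow = 2), dtype = "float32")

# Axes are 1-based: axis = 1 stacks along a new first dimension -> shape (2, 2, 3)
stacked <- op_stack(list(x, x), axis = 1)

# NumPy-style ops with the same behavior on every backend
gram <- op_matmul(x, op_transpose(x))  # x %*% t(x) -> shape (2, 2)
col_sums <- op_sum(x, axis = 1)        # sum over the first (row) axis -> shape (3)

as.array(gram)
```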

Ingest tabular data with layer_feature_space()

keras3 provides a new set of functions for building models that ingest tabular data: layer_feature_space() and a family of feature transformer functions (prefix feature_) for building Keras models that can work with tabular data, either as inputs to a Keras model, or as preprocessing steps in a data loading pipeline (e.g., a tfdatasets::dataset_map()).

See the reference page and an example usage in a full end-to-end example to learn more.
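A minimal sketch of the workflow, using a hypothetical two-column dataset (the age and city columns are made up for illustration). It assumes the {tfdatasets} package is installed to build the dataset passed to adapt(), which learns normalization statistics and vocabularies before the feature space is applied.

```r
library(keras3)

# Hypothetical toy tabular data: one numeric and one string column
raw_data <- list(
  age  = c(23, 35, 47, 59),
  city = c("Paris", "Tokyo", "Paris", "Lima")
)

feature_space <- layer_feature_space(
  features = list(
    age  = feature_float_normalized(),   # standardize the numeric column
    city = feature_string_categorical()  # encode the string column as categories
  ),
  output_mode = "concat"                 # concatenate all features into one vector
)

# Learn the normalization statistics and the string vocabulary from the data
dataset <- tfdatasets::tensor_slices_dataset(raw_data)
feature_space |> adapt(dataset)

# Apply the fitted preprocessing to the raw columns
encoded <- feature_space(raw_data)
```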

New Subclassing API

The subclassing API has been refined and extended to more Keras types.

Define subclasses simply by calling: Layer(), Loss(), Metric(), Callback(), Constraint(), Model(), and LearningRateSchedule(). Defining {R6} proxy classes is no longer necessary.

Additionally, the documentation page for each of the subclassing functions now contains a comprehensive listing of all the available attributes and methods for that type. Check out ?Layer to see what’s possible.
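As a sketch of the new style, the following defines a simple dense-like layer with Layer(). The class name Linear and the constructor name layer_linear are illustrative choices; the initialize(), build(), and call() methods and the self$add_weight() helper are assumed to follow the hooks documented in ?Layer.

```r
library(keras3)

# Illustrative layer computing inputs %*% w + b
layer_linear <- Layer(
  "Linear",
  initialize = function(units = 32) {
    super$initialize()
    self$units <- as.integer(units)
  },
  # build() runs once, when the input shape is first known,
  # so the weight shapes can depend on the last input dimension
  build = function(input_shape) {
    self$w <- self$add_weight(
      shape = shape(tail(input_shape, 1), self$units),
      initializer = "random_normal",
      trainable = TRUE
    )
    self$b <- self$add_weight(
      shape = shape(self$units),
      initializer = "zeros",
      trainable = TRUE
    )
  },
  call = function(inputs) {
    op_matmul(inputs, self$w) + self$b
  }
)

# Calling the generated constructor without an input returns a layer instance
linear <- layer_linear(units = 4)
y <- linear(op_ones(c(2, 3)))
```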

Saving and Export

Keras 3 brings a new model serialization and export API. It is now much simpler to save and restore models, and also to export them for serving.

- save_model()/load_model(): A new high-level file format (extension: .keras) for saving and restoring a full model. The file format is backend-agnostic. This means that you can convert trained models between backends, simply by saving with one backend and then loading with another. For example, train a model using Jax, and then convert to TensorFlow for export.

- export_savedmodel(): Export just the forward pass of a model as a compiled artifact for inference with TF Serving or (soon) Posit Connect. This is the easiest way to deploy a Keras model for efficient and concurrent inference serving, all without any R or Python runtime dependency.

- Lower-level entry points:
  - save_model_weights() / load_model_weights(): save just the weights as .h5 files.
  - save_model_config() / load_model_config(): save just the model architecture as a JSON file.

- register_keras_serializable(): Register custom objects so they can be serialized and deserialized.

- serialize_keras_object() / deserialize_keras_object(): Convert any Keras object to an R list of simple types that is safe to convert to JSON or rds.

- See the new Serialization and Saving vignette for more details and examples.
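A round-trip sketch, assuming the default backend: a small, hypothetical model is saved to a temporary .keras file and reloaded, and both copies should produce the same outputs. The architecture is arbitrary and only serves to exercise save_model() and load_model().

```r
library(keras3)

# A small, arbitrary model with 4 input features and 1 output
model <- keras_model_sequential(input_shape = 4) |>
  layer_dense(8, activation = "relu") |>
  layer_dense(1)

# Save the full model (architecture + weights) to a .keras file, then reload it
path <- tempfile(fileext = ".keras")
save_model(model, path)
restored <- load_model(path)

# The original and restored models should agree on the same inputs
x <- op_convert_to_tensor(matrix(runif(8), nrow = 2), dtype = "float32")
all.equal(as.array(model(x)), as.array(restored(x)))
```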

New random family

A new family of random tensor generators. Like the Ops family, these work with all backends. Additionally, all the RNG-using methods have support for stateless usage when you pass in a seed generator. This enables tracing and compilation by frameworks that have special support for stateless, pure functions, like Jax. See ?random_seed_generator() for example usage.
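A small sketch of both seeding styles, with arbitrary shapes and seed values: a seed generator carries RNG state, so successive draws from it differ, while an integer seed produces the same values on every call.

```r
library(keras3)

# A seed generator holds RNG state that advances with each draw
seed_gen <- random_seed_generator(seed = 42L)
a <- random_normal(c(2, 3), seed = seed_gen)
b <- random_normal(c(2, 3), seed = seed_gen)  # state advanced, so a different draw

# An integer seed gives the same, reproducible draw on each call
u1 <- random_uniform(c(2, 3), seed = 1L)
u2 <- random_uniform(c(2, 3), seed = 1L)
```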

Other additions:

- New shape() function, a one-stop utility for working with tensor shapes in all contexts.

- New and improved print(model) and plot(model) methods. See some examples of output in the Functional API guide.

- All-new fit() progress bar and live metrics viewer output, including new dark-mode support in the RStudio IDE.

- New config family, a curated set of functions for getting and setting Keras global configurations.

- All the other function families have expanded with new members.
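A brief sketch of shape() alongside a few members of the config family; the values noted in comments are the usual defaults and may differ in a customized setup.

```r
library(keras3)

# shape() builds a tensor shape; NA marks an unknown dimension (e.g. the batch size)
s <- shape(NA, 32)
print(s)

# op_shape() returns the shape of an existing tensor
x <- op_zeros(c(8, 32))
op_shape(x)

# The config family reads (and, via config_set_*() functions, sets) global settings
config_backend()  # usually "tensorflow" unless another backend was selected
config_floatx()   # default float dtype, usually "float32"
```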

Migrating from {keras} to {keras3}

{keras3} supersedes the {keras} package.

If you’re writing new code today, you can start using {keras3} right away.

If you have legacy code that uses {keras}, you are encouraged to update the code for {keras3}. For many high-level API functions, such as layer_dense(), fit(), and keras_model(), minimal to no changes are required. However, there is a long tail of small changes that you might need to make when updating code that made use of the lower-level Keras API. Some of those are documented here: https://keras.io/guides/migrating_to_keras_3/.

If you’re running into issues or have questions about updating, don’t hesitate to ask on https://github.com/rstudio/keras/issues or https://github.com/rstudio/keras/discussions.

The {keras} and {keras3} packages will coexist while the community transitions. During the transition, {keras} will continue to receive patch updates for compatibility with Keras v2, which continues to be published to PyPI under the package name tf-keras. After tf-keras is no longer maintained, the {keras} package will be archived.

Summary

In summary, {keras3} is a robust update to the Keras R package, incorporating new features while preserving the ease of use and functionality of the original. The new multi-backend support, comprehensive suite of Ops functions, refined model serialization API, and updated documentation workflows enable users to easily take advantage of the latest developments in the deep learning community.

Whether you’re a seasoned Keras user or just starting your deep learning journey, Keras 3 provides the tools and flexibility to build, train, and deploy models with ease and confidence. As we transition from Keras 2 to Keras 3, we’re committed to supporting the community and ensuring a smooth migration. We invite you to explore the new features, check out the updated documentation, and join the conversation on our GitHub discussions page. Welcome to the next chapter of deep learning in R with Keras 3!


Posts additionally accessible at r-bloggers

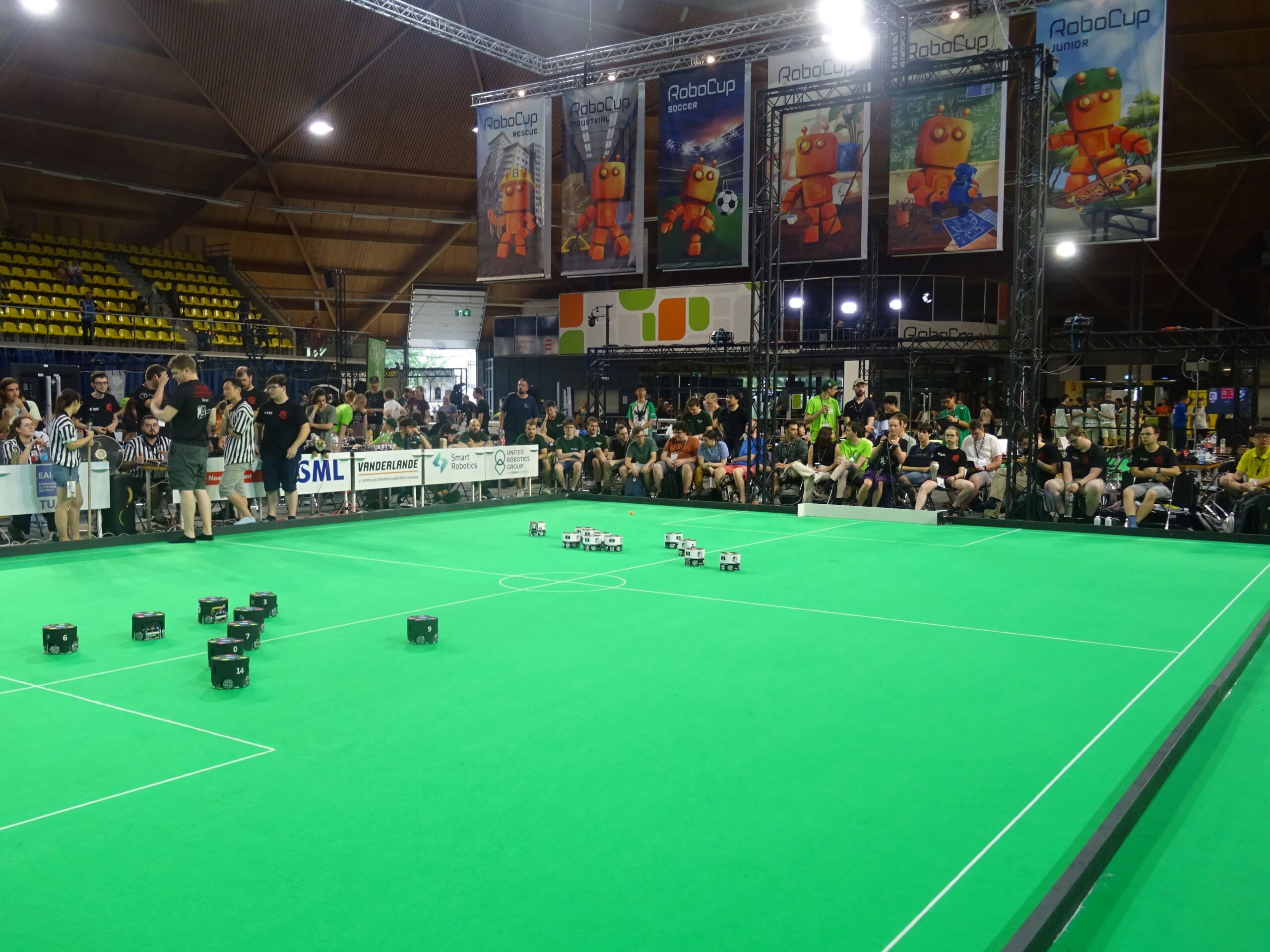

A break in play throughout a Small Measurement League match.

A break in play throughout a Small Measurement League match. The RedbackBots travelling workforce for 2024 (L-to-R: Murray Owens, Sam Griffiths, Tom Ellis, Dr Timothy Wiley, Mark Discipline, Jasper Avice Demay). Photograph credit score: Dr Timothy Wiley.

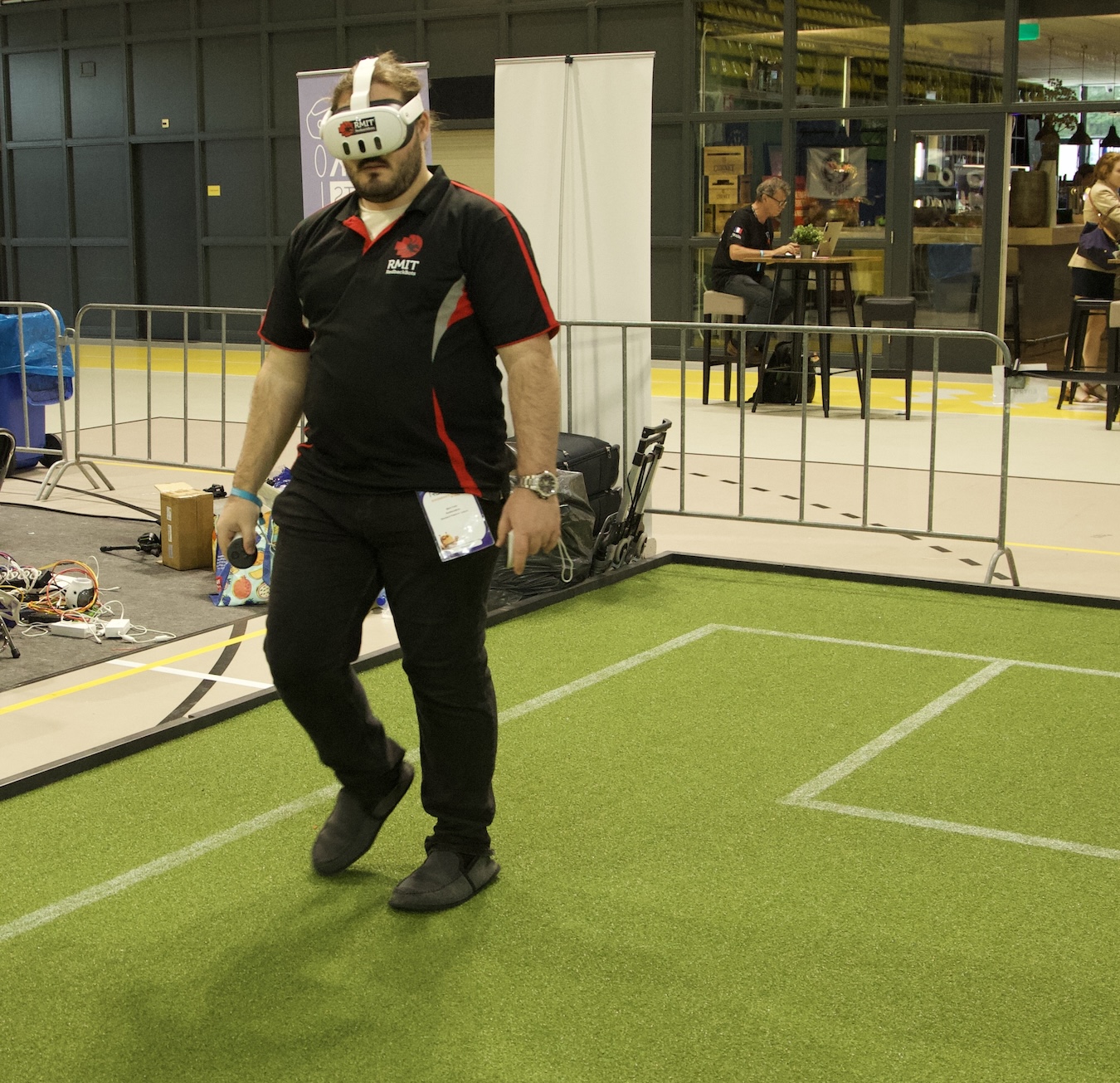

The RedbackBots travelling workforce for 2024 (L-to-R: Murray Owens, Sam Griffiths, Tom Ellis, Dr Timothy Wiley, Mark Discipline, Jasper Avice Demay). Photograph credit score: Dr Timothy Wiley. Mark Discipline organising the MetaQuest3 to make use of the augmented actuality system. Photograph credit score: Dr Timothy Wiley.

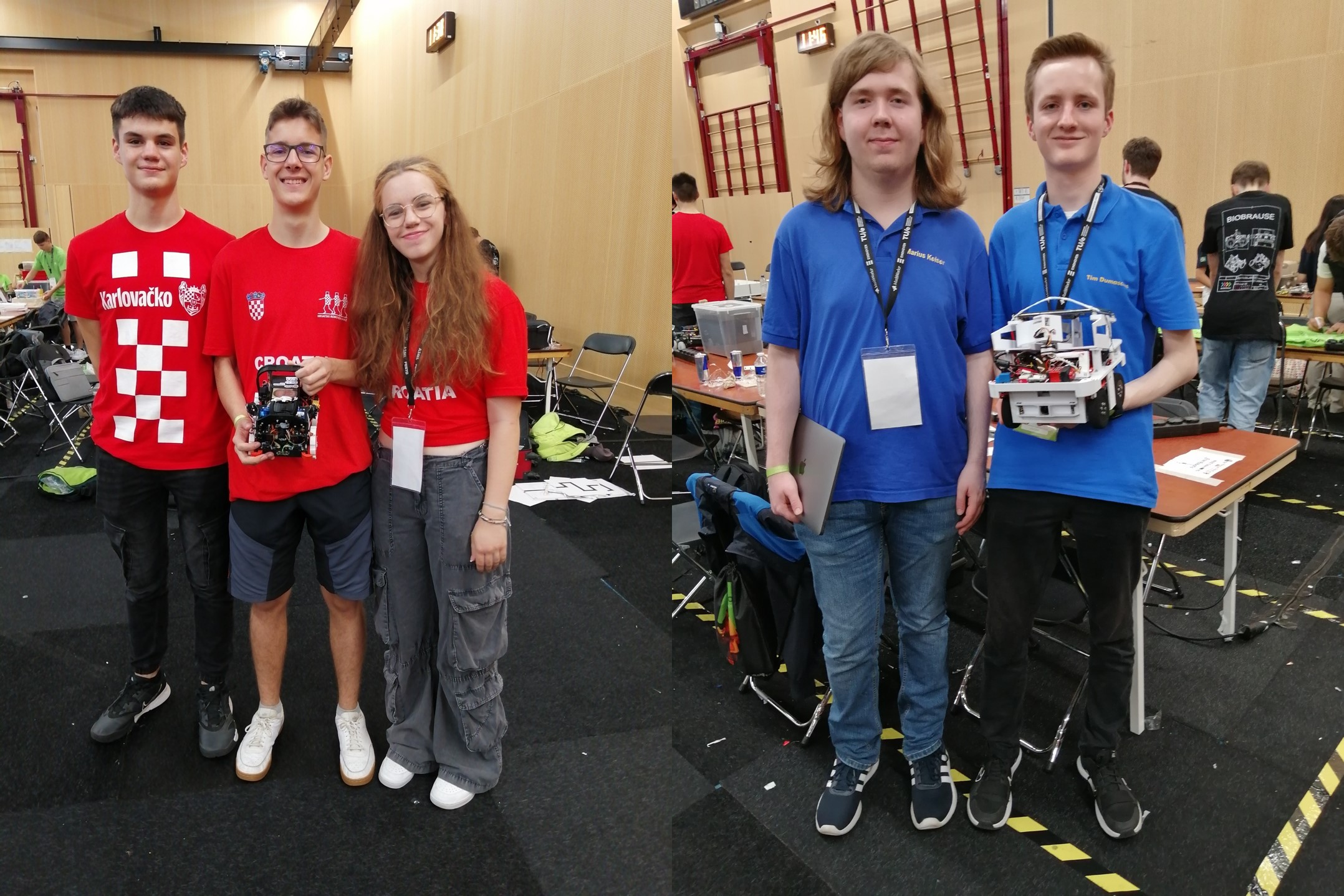

Mark Discipline organising the MetaQuest3 to make use of the augmented actuality system. Photograph credit score: Dr Timothy Wiley. Left: Workforce Skollska Knijgia. Proper: Workforce Overengeniering2.

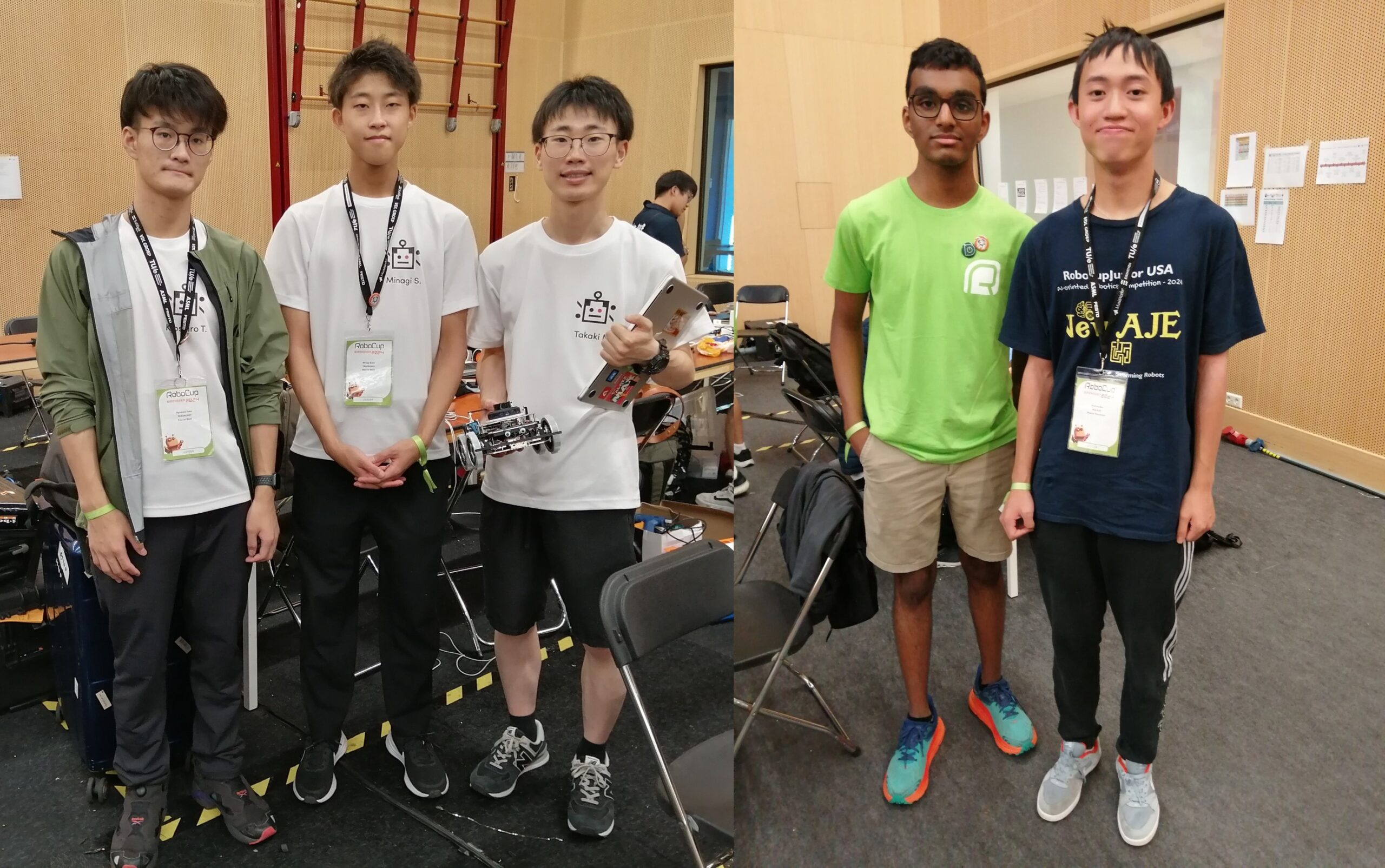

Left: Workforce Skollska Knijgia. Proper: Workforce Overengeniering2. Left: Workforce Tanorobo! Proper: Workforce New Aje.

Left: Workforce Tanorobo! Proper: Workforce New Aje. Left: Workforce Medic Bot Proper: Workforce Jam Session.

Left: Workforce Medic Bot Proper: Workforce Jam Session. Standing room solely to see the Grownup Measurement Humanoids.

Standing room solely to see the Grownup Measurement Humanoids.