Recent analysis by cybersecurity company ESET provides details about a new attack campaign targeting Android smartphone users.

The cyberattack, which relies on both a complex social engineering scheme and new Android malware, is capable of stealing users' near field communication (NFC) data to withdraw cash from NFC-enabled ATMs.

Constant technical improvements from the threat actor

As noted by ESET, the threat actor initially exploited progressive web app (PWA) technology, which allows an app to be installed from any website outside of the Play Store. This technology can be used with supported browsers such as Chromium-based browsers on desktops, or Firefox, Chrome, Edge, Opera, Safari, Orion, and Samsung Internet Browser.

PWAs, accessed directly through browsers, are flexible and don't generally suffer from compatibility problems. Once installed on a system, PWAs can be recognized by their icon, which displays an additional small browser icon.

Cybercriminals use PWAs to lead unsuspecting users to full-screen phishing websites to collect their credentials or credit card information.

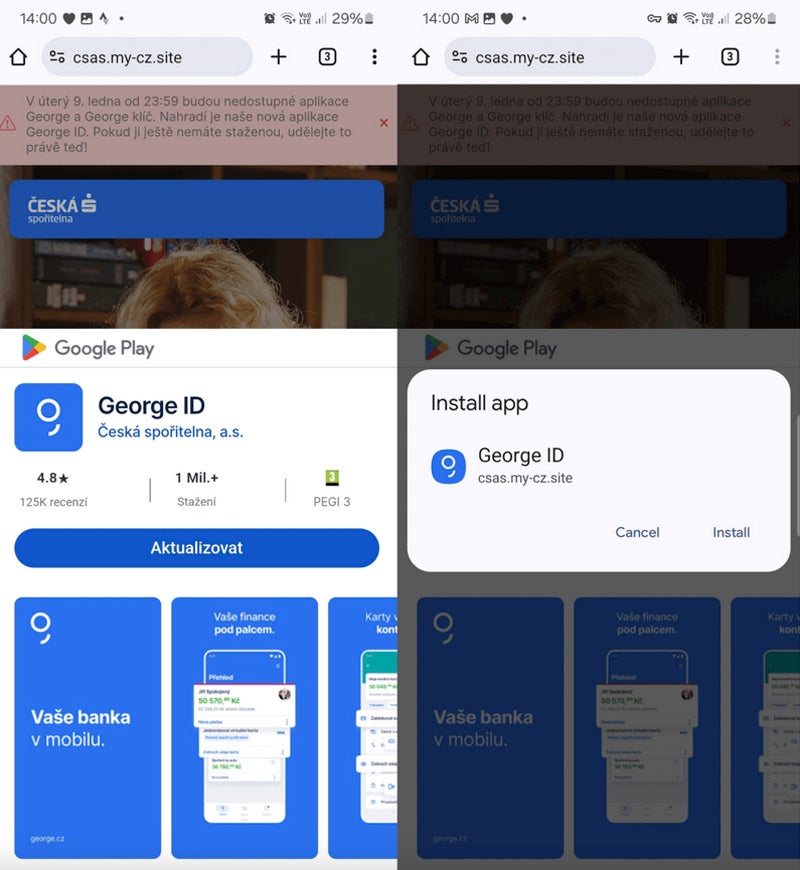

The threat actor involved in this campaign switched from PWAs to WebAPKs, a more advanced type of PWA. The difference is subtle: PWAs are apps built using web technologies, whereas WebAPKs package a PWA as a native Android application.

From the attacker's perspective, using WebAPKs is stealthier because their icons no longer display a small browser icon.

The victim downloads and installs a standalone app from a phishing website. The user is not asked for any additional permission to install the app from a third-party website.

These fraudulent websites often mimic parts of the Google Play Store to create confusion and make the user believe the installation comes from the Play Store, when it actually comes directly from the fraudulent website.

NGate malware

On March 6, the same distribution domains used for the observed PWA and WebAPK phishing campaigns suddenly started spreading new malware called NGate. Once installed and executed on the victim's phone, it opens a fake website asking for the user's banking information, which is sent to the threat actor.

But the malware also embeds a tool called NFCGate, a legitimate tool that relays NFC data between two devices without requiring the device to be rooted.

Once the user has provided banking information, they receive a request to activate the NFC feature on their smartphone and to place their credit card against the back of the phone until the app successfully recognizes the card.

Complete social engineering

While activating NFC for an app and having a payment card recognized might initially seem suspicious, the social engineering techniques deployed by the threat actors explain why victims go along with it.

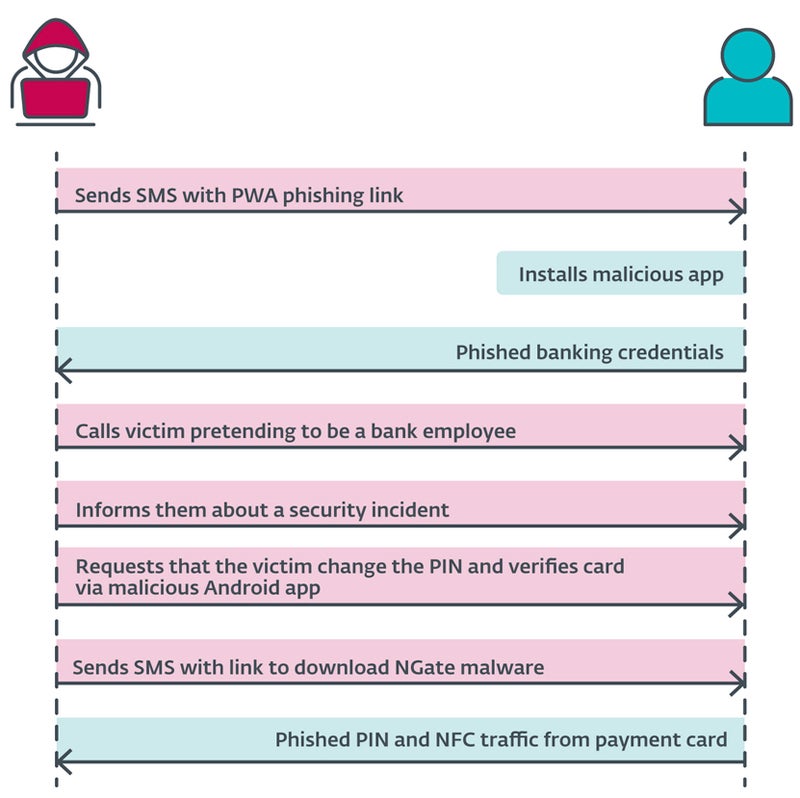

The cybercriminal sends an SMS message to the user, mentioning a tax return and including a link to a phishing website that impersonates banks and leads to a malicious PWA. Once installed and executed, the app requests banking credentials from the user.

At this point, the threat actor calls the user, impersonating the bank. The victim is told that their account has been compromised, likely because of the previous SMS. The user is then prompted to change their PIN and verify their bank card details using a mobile application to protect their bank account.

The user then receives a new SMS with a link to the NGate malware application.

Once installed, the app asks the user to activate the NFC feature and to have the credit card recognized by pressing it against the back of the smartphone. The data is sent to the attacker in real time.

Monetizing the stolen information

The information stolen by the attacker allows for classic fraud: withdrawing funds from the bank account or using credit card information to buy goods online.

However, the NFC data stolen by the attacker allows them to emulate the original credit card and withdraw money from NFC-enabled ATMs, a previously unreported attack vector.

Attack scope

ESET's research found attacks in the Czech Republic, as only banks in that country were targeted.

A 22-year-old suspect has been arrested in Prague. He was holding about €6,000 ($6,500 USD). According to the Czech Police, that money came from thefts against the last three victims, which suggests the threat actor stole much more during this attack campaign.

However, as ESET researchers wrote, "the possibility of its expansion into other regions or countries cannot be ruled out."

More cybercriminals will likely use similar techniques in the near future to steal money via NFC, especially as NFC becomes increasingly popular with developers.

How to protect against this threat

To avoid falling victim to this campaign, users should:

- Verify the source of the applications they download and carefully examine URLs to ensure they are legitimate.

- Avoid downloading software from outside official sources such as the Google Play Store.

- Never share their payment card PIN. No bank will ever ask for this information.

- Use digital versions of traditional physical cards, as these virtual cards are stored securely on the device and can be protected by additional security measures such as biometric authentication.

- Install security software on mobile devices to detect malware and unwanted applications on the phone.
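Examining a URL mostly comes down to checking the exact hostname rather than whether a familiar name appears somewhere in the link. The following is a minimal sketch in Python, assuming a hypothetical allowlist that contains only play.google.com; the function name is_official_store_link and the package id com.example.bank are made up for illustration. A matching hostname only shows the link points to the real store; it does not prove the app itself is safe.

```python
from urllib.parse import urlsplit

# Hypothetical allowlist for this sketch: exact hostnames treated as official app sources.
OFFICIAL_HOSTS = {"play.google.com"}


def is_official_store_link(url: str) -> bool:
    """Return True only if the URL uses HTTPS and its exact hostname is allowlisted."""
    parts = urlsplit(url.strip())
    if parts.scheme != "https":
        return False
    # .hostname drops any "user@" prefix and ":port" suffix and lowercases the host,
    # so "play.google.com@evil.example" resolves to "evil.example".
    host = (parts.hostname or "").rstrip(".")
    # Exact match: "play.google.com.example.net" is a different domain and is rejected.
    return host in OFFICIAL_HOSTS


if __name__ == "__main__":
    for link in [
        "https://play.google.com/store/apps/details?id=com.example.bank",  # True
        "https://play.google.com.example-bank-support.net/app",            # False
        "http://play.google.com/store",                                    # False
    ]:
        print(link, is_official_store_link(link))
```

An exact-match allowlist is used instead of a substring or "endswith" test because lookalike phishing domains typically embed the trusted name inside a longer hostname.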

Users should also deactivate NFC on their smartphones when it is not in use, which protects them from further data theft. Attackers can read card data through unattended purses, wallets, and backpacks in public places and use it for small contactless payments. Protective cases can also create an effective barrier against unwanted scans.

If there is any doubt when someone claiming to be a bank employee calls, hang up and call the bank's usual contact number, preferably from another phone.

Disclosure: I work for Trend Micro, but the views expressed in this article are mine.