It might sound obvious to any business leader that the success of enterprise AI initiatives rests on the availability, quantity, and quality of the data an organization possesses. It’s not particular code or some magic technology that makes an AI system successful, but rather the data. An AI project is primarily a data project. Large volumes of high-quality training data are fundamental to training accurate AI models.

However, according to Forbes, only somewhere between 20-40% of companies are using AI successfully. Furthermore, merely 14% of high-ranking executives claim to have access to the data they need for AI and ML initiatives. The point is that obtaining training data for machine learning projects can be quite challenging. This may be due to a number of reasons, including compliance requirements, privacy and security risk factors, organizational silos, legacy systems, or simply because the data doesn’t exist.

With training data being so hard to acquire, synthetic data generation using generative AI may be the answer.

Given that synthetic data generation with generative AI is a relatively new paradigm, turning to a generative AI consulting company for expert advice and support is the best way to navigate this new, intricate landscape. However, before consulting GenAI specialists, you may want to read our article on the transformative power of generative AI synthetic data. This blog post explains what synthetic data is, how to create it, and how synthetic data generation using generative AI helps develop more efficient enterprise AI solutions.

What’s synthetic data, and how does it differ from mock data?

Before we delve into the specifics of synthetic data generation using generative AI, we need to explain what synthetic data means and compare it to mock data. Many people confuse the two, even though they are distinct approaches, each serving a different purpose and generated through different methods.

Synthetic data refers to data created by deep generative algorithms trained on real-world data samples. To generate synthetic data, algorithms first learn the patterns, distributions, correlations, and statistical characteristics of the sample data and then replicate genuine data by reconstructing these properties. As we mentioned above, real-world data may be scarce or inaccessible, which is particularly true for sensitive domains like healthcare and finance, where privacy concerns are paramount. Synthetic data generation largely removes privacy issues and the need for access to sensitive or proprietary information while producing large amounts of safe and highly functional artificial data for training machine learning models.

Mock data, in turn, is typically created manually or with tools that generate random or semi-random data based on predefined rules for testing and development purposes. It is used to simulate various scenarios, validate functionality, and evaluate the usability of applications without relying on actual production data. It may resemble real data in structure and format but lacks the nuanced patterns and variability found in actual datasets.

Overall, mock data is prepared manually or semi-automatically to mimic real data for testing and validation, whereas synthetic data is generated algorithmically to replicate real data patterns for training AI models and running simulations.
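To make the distinction concrete, here is a minimal Python sketch, assuming a single numeric column such as patient age. The mock generator only follows a hand-written rule (any integer from 18 to 90), while the toy "synthetic" generator learns the mean and standard deviation of a sample and draws new values from a normal distribution with those parameters. A fitted normal distribution is a deliberately simple stand-in for the deep generative models discussed in this article, and the function names and numbers are illustrative assumptions.

```python
import random
import statistics


def make_mock_ages(n, low=18, high=90, seed=0):
    """Mock data: values follow a predefined rule (a plausible age range).
    Nothing is learned from real records, so real-world patterns are absent."""
    rng = random.Random(seed)
    return [rng.randint(low, high) for _ in range(n)]


def make_synthetic_ages(sample, n, seed=0):
    """Toy synthetic data: learn the sample's mean and spread, then draw new
    values from a normal distribution with those learned parameters."""
    mu = statistics.fmean(sample)
    sigma = statistics.stdev(sample)
    rng = random.Random(seed)
    return [rng.gauss(mu, sigma) for _ in range(n)]


# Hypothetical "real" sample: ages clustered around 40
sample_rng = random.Random(1)
real_ages = [sample_rng.gauss(40, 5) for _ in range(500)]

mock = make_mock_ages(1000)
synthetic = make_synthetic_ages(real_ages, 1000)

print(f"real mean:      {statistics.fmean(real_ages):.1f}")
print(f"mock mean:      {statistics.fmean(mock):.1f}")       # near the middle of 18..90
print(f"synthetic mean: {statistics.fmean(synthetic):.1f}")  # close to the real mean
```

The mock values are structurally valid ages but ignore how real ages are distributed, while the synthetic values track the statistics of the sample they were learned from.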

Key use cases for GenAI-produced synthetic data

- Enhancing training datasets and balancing classes for ML model training

In some cases, the dataset may be too small, which can hurt the ML model’s accuracy, or the data in a dataset may be imbalanced, meaning that not all classes have an equal number of samples and one class is significantly underrepresented. Upsampling minority groups with synthetic data helps balance the class distribution by increasing the number of instances in the underrepresented class, thereby improving model performance. Upsampling means generating synthetic data points that resemble the original data and adding them to the dataset.
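One widely used way to upsample a minority class is SMOTE (Synthetic Minority Over-sampling Technique), which creates new points by interpolating between a minority sample and one of its nearest minority-class neighbors. It is a classic interpolation method rather than a generative AI model, but it shows the idea of upsampling in a few lines. The sketch below is a simplified, illustrative version in plain Python; the `smote` function and the sample points are our own assumptions, and production work would typically rely on a maintained library such as imbalanced-learn.

```python
import math
import random


def smote(minority, n_new, k=3, seed=0):
    """Return n_new synthetic points, each placed at a random position on the
    segment between a minority sample and one of its k nearest
    minority-class neighbors (simplified SMOTE)."""
    if len(minority) < 2:
        raise ValueError("SMOTE needs at least two minority samples")
    k = min(k, len(minority) - 1)
    rng = random.Random(seed)
    synthetic = []
    for _ in range(n_new):
        i = rng.randrange(len(minority))
        base = minority[i]
        # k nearest neighbors of `base` among the other minority samples
        others = [p for j, p in enumerate(minority) if j != i]
        neighbors = sorted(others, key=lambda p: math.dist(p, base))[:k]
        neighbor = rng.choice(neighbors)
        gap = rng.random()  # 0 -> base, close to 1 -> neighbor
        synthetic.append(tuple(b + gap * (q - b) for b, q in zip(base, neighbor)))
    return synthetic


# Hypothetical imbalanced dataset: 8 majority points vs. 4 minority points
majority = [(5.0, 5.0), (5.5, 4.8), (6.1, 5.2), (4.9, 6.0),
            (5.7, 5.9), (6.3, 4.7), (5.2, 5.4), (6.0, 6.1)]
minority = [(1.0, 1.0), (1.2, 0.9), (0.8, 1.1), (1.1, 1.3)]

new_points = smote(minority, n_new=len(majority) - len(minority))
balanced_minority = minority + new_points
print(len(majority), len(balanced_minority))  # 8 8
```

Because every new point lies between two real minority samples, the upsampled class stays inside the region the real data already occupies. That is safe but also limits how much genuinely new variety SMOTE can add.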

- Replacing real-world training data to stay compliant with industry- and region-specific regulations

Synthetic data generation using generative AI is widely used to design and validate ML algorithms without compromising sensitive tabular data in industries such as healthcare, banking, and the legal sector. Synthetic training data mitigates the privacy concerns associated with real-world data because it doesn’t correspond to real individuals or entities. This allows organizations to stay compliant with industry- and region-specific regulations, such as healthcare IT standards and regulations, without sacrificing data utility. Synthetic patient data, synthetic financial data, and synthetic transaction data are examples of privacy-driven synthetic data. Consider, for example, a scenario in which a medical research team generates synthetic data from a live dataset: all names, addresses, and other personally identifiable patient information are fictitious, but the synthetic data retains the same proportions of biological characteristics and genetic markers as the original dataset.
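As a minimal sketch of that scenario, assuming records with a name and a single genetic-marker field (both hypothetical), the function below never copies real identifiers, assigns fictitious IDs, and samples the marker from its observed frequencies so the proportions carry over. Real generators model many columns jointly and need a formal privacy evaluation. This toy preserves only one column's distribution.

```python
import random
from collections import Counter


def synthesize_patients(real_records, n, seed=0):
    """Create n synthetic records. Real names and IDs are never copied; the
    'marker' field is drawn from its empirical frequencies in the real data."""
    rng = random.Random(seed)
    freq = Counter(r["marker"] for r in real_records)
    markers = list(freq)
    weights = [freq[m] for m in markers]
    return [
        {
            "patient_id": f"SYN-{i:05d}",  # fictitious ID, not linked to anyone
            "marker": rng.choices(markers, weights=weights)[0],
        }
        for i in range(n)
    ]


# Hypothetical source data: 25% of patients carry marker_A
real = [
    {"name": f"Patient {i}", "marker": "marker_A" if i % 4 == 0 else "marker_B"}
    for i in range(400)
]

synthetic = synthesize_patients(real, 10_000)
share_a = sum(r["marker"] == "marker_A" for r in synthetic) / len(synthetic)
print(f"marker_A share: real 0.25, synthetic {share_a:.3f}")
```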

- Creating realistic test scenarios

Generative AI synthetic data can simulate real-world environments, such as weather conditions, traffic patterns, or market fluctuations, for testing autonomous systems, robotics, and predictive models without real-world consequences. This is especially helpful in applications where testing in harsh environments is necessary yet impractical or risky, such as autonomous vehicles, aircraft, and healthcare. Besides, synthetic data allows for the creation of edge cases and uncommon scenarios that may not exist in real-world data, which is essential for validating the resilience and robustness of AI systems. This covers extreme conditions, outliers, and anomalies.
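To illustrate how rare scenarios can be added on purpose, the sketch below simulates a week of hourly temperature readings and then injects a few extreme spikes, returning their positions as anomaly labels. It uses a simple rule-based simulation (a daily sine cycle plus noise) rather than a generative model, and every name and number in it is an assumption chosen for illustration.

```python
import math
import random


def simulate_temperature(hours, seed=0):
    """Baseline scenario: a daily temperature cycle (in °C) plus sensor noise."""
    rng = random.Random(seed)
    return [
        15 + 8 * math.sin(2 * math.pi * h / 24) + rng.gauss(0, 0.5)
        for h in range(hours)
    ]


def inject_edge_cases(series, n_events, magnitude=25.0, seed=0):
    """Copy `series` and add n_events extreme spikes at random positions.
    Returns the modified series and the sorted spike indices (anomaly labels)."""
    rng = random.Random(seed)
    stressed = list(series)
    spike_idx = sorted(rng.sample(range(len(stressed)), n_events))
    for i in spike_idx:
        # sudden heat wave (+) or cold snap (-) that rarely shows up in real logs
        stressed[i] += rng.choice([-1, 1]) * magnitude
    return stressed, spike_idx


base = simulate_temperature(24 * 7)  # one week of hourly readings
stressed, anomaly_hours = inject_edge_cases(base, n_events=3)
print("injected anomalies at hours:", anomaly_hours)
```

Keeping the labels next to the stressed series lets the same data serve both as a robustness test and as training data for an anomaly detector.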

- Strengthening cybersecurity

Synthetic data generation using generative AI can bring significant value to cybersecurity. The quality and diversity of training data are critical for AI-powered security solutions such as malware classifiers and intrusion detection systems. Generative AI-produced synthetic data can cover a wide range of cyberattack scenarios, including phishing attempts, ransomware attacks, and network intrusions. This variety in training data helps AI systems identify security vulnerabilities and thwart cyber threats, including ones they have not encountered before.

How generative AI synthetic data helps create better, more efficient models

Gartner estimates that by 2030, synthetic data will completely overshadow real data in AI models. The benefits of synthetic data generation using generative AI extend far beyond preserving data privacy. It underpins advancements in AI, experimentation, and the development of robust and reliable machine learning solutions. Some of the most critical advantages that significantly impact various domains and applications are:

- Breaking the privacy-utility dilemma

Access to data is essential for creating highly efficient AI models. However, data use is limited by privacy, safety, copyright, or other regulations. AI-generated synthetic data provides an answer to this problem by overcoming the privacy-utility trade-off. Companies no longer need to use traditional anonymization techniques, such as data masking, and sacrifice data utility for data confidentiality, as synthetic data generation preserves privacy while giving access to as much useful data as needed.
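The trade-off can be illustrated with a small experiment, assuming a hypothetical table of ages and incomes. Shuffling the income column (one common data-masking technique) hides which income belongs to whom but destroys the age-income relationship, while sampling from a bivariate normal distribution fitted to the data keeps that relationship. A fitted normal distribution is a simplified stand-in for a generative model, and matching summary statistics alone does not prove privacy; a real project would also test for re-identification risk.

```python
import math
import random
import statistics


def pearson(xs, ys):
    """Pearson correlation coefficient of two equally long columns."""
    mx, my = statistics.fmean(xs), statistics.fmean(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    vx = sum((x - mx) ** 2 for x in xs)
    vy = sum((y - my) ** 2 for y in ys)
    return cov / math.sqrt(vx * vy)


def mask_by_shuffling(column, seed=0):
    """Masking by shuffling: values stay realistic individually, but their
    link to the other columns of the same row is broken."""
    rng = random.Random(seed)
    out = list(column)
    rng.shuffle(out)
    return out


def synthesize_bivariate(xs, ys, n, seed=0):
    """Fit a bivariate normal distribution (means, standard deviations,
    correlation) and sample n brand-new (x, y) rows from it."""
    mx, my = statistics.fmean(xs), statistics.fmean(ys)
    sx, sy = statistics.stdev(xs), statistics.stdev(ys)
    r = pearson(xs, ys)
    rng = random.Random(seed)
    rows = []
    for _ in range(n):
        z1, z2 = rng.gauss(0, 1), rng.gauss(0, 1)
        # Correlated standard normals: corr(z1, r*z1 + sqrt(1-r^2)*z2) = r
        rows.append((mx + sx * z1, my + sy * (r * z1 + math.sqrt(1 - r * r) * z2)))
    return rows


# Hypothetical table: income rises with age, plus noise
rng = random.Random(42)
ages = [rng.gauss(45, 10) for _ in range(2000)]
incomes = [1000 * a + rng.gauss(0, 8000) for a in ages]

real_r = pearson(ages, incomes)
masked_r = pearson(ages, mask_by_shuffling(incomes))
synthetic_rows = synthesize_bivariate(ages, incomes, 2000)
synthetic_r = pearson([a for a, _ in synthetic_rows], [i for _, i in synthetic_rows])

print(f"real: {real_r:.2f}  masked: {masked_r:.2f}  synthetic: {synthetic_r:.2f}")
```

The masked column keeps the same values, so simple per-column checks look fine, yet any model trained on it would learn that age and income are unrelated.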

- Enhancing data flexibility

Synthetic data is much more flexible than production data. It can be produced and shared on demand. Besides, you can alter the data to fit certain characteristics, downsize large datasets, or create richer versions of the original data. This degree of customization allows data scientists to produce datasets that cover a variety of scenarios and edge cases not easily found in real-world data. For example, synthetic data can be used to mitigate biases embedded in real-world data.

- Cutting costs

Traditional methods of collecting data are costly, time-consuming, and resource-intensive. Companies can significantly lower the total cost of ownership of their AI projects by building datasets from synthetic data. It reduces the overhead of collecting, storing, formatting, and labeling data, especially for extensive machine learning initiatives.

- Accelerating time to data

One of the most apparent benefits of generative AI synthetic data is its ability to speed up business processes and reduce red tape. Data collection and training frequently hold up the creation of precise workflows. Synthetic data generation drastically shortens the time to data and allows for faster model development and deployment. You can obtain labeled and organized data on demand without having to process raw data from scratch.

How does the process of synthetic data generation using generative AI unfold?

Synthetic data generation using generative AI involves several key steps and techniques. Here is a general rundown of how the process unfolds:

– Collecting sample data

Synthetic data is sample-based data, so the first step is to collect real-world data samples that will serve as a guide for creating synthetic data.

– Model selection and training

Choose an appropriate generative model based on the type of data to be generated. The most popular deep generative models, such as Variational Autoencoders (VAEs), Generative Adversarial Networks (GANs), diffusion models, and transformer-based models like large language models (LLMs), can deliver plausible results from a comparatively small amount of real-world sample data. Here’s how they differ in the context of synthetic data generation:

- VAEs work best for probabilistic modeling and reconstruction tasks, such as anomaly detection and privacy-preserving synthetic data generation

- GANs are best suited for generating high-quality images, videos, and other media with precise details and realistic characteristics, as well as for style transfer and domain adaptation

- Diffusion models are currently among the best models for generating high-quality images and videos; an example is generating synthetic image datasets for computer vision tasks like traffic vehicle detection

- LLMs are primarily used for text generation tasks, including natural language responses, creative writing, and content creation

– Actual synthetic data generation

Once trained, the generative model can create synthetic data by sampling from the learned distribution. For instance, a language model like GPT produces text token by token, while a GAN typically generates an entire image from a random noise vector in a single forward pass. Techniques like latent space manipulation (for GANs and VAEs) make it possible to generate data with particular traits or characteristics in a controlled way. This allows the synthetic data to be modified and tailored to the required parameters.
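Token-by-token sampling can be shown with a deliberately tiny model. The sketch below "trains" a bigram model by recording which word follows which in a few hypothetical sentences, then generates new text one token at a time, each conditioned on the previous token. A bigram model is a simplified stand-in for an LLM, which conditions on the whole preceding context with a neural network, but the sampling loop has the same autoregressive shape.

```python
import random
from collections import defaultdict


def train_bigram(corpus):
    """Record every observed (previous token -> next token) transition."""
    follow = defaultdict(list)
    for sentence in corpus:
        tokens = ["<s>"] + sentence.split() + ["</s>"]
        for prev, nxt in zip(tokens, tokens[1:]):
            follow[prev].append(nxt)
    return follow


def generate(follow, max_tokens=20, seed=0):
    """Autoregressive sampling: each new token is drawn conditioned on the
    previous one, in proportion to how often that transition was observed."""
    rng = random.Random(seed)
    token, out = "<s>", []
    for _ in range(max_tokens):
        token = rng.choice(follow[token])
        if token == "</s>":
            break
        out.append(token)
    return " ".join(out)


# Hypothetical training sentences
corpus = [
    "the patient reported mild pain",
    "the patient reported no symptoms",
    "the doctor noted mild fever",
]
model = train_bigram(corpus)
for seed in range(3):
    print(generate(model, seed=seed))
```

Even this toy can produce word sequences that never appear verbatim in the corpus (for example, a sentence combining "the doctor" with "reported"), which is the basic mechanism behind text-based synthetic data.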

– Quality assessment

Assess the quality of the synthetic data by comparing its statistical measures (such as mean, variance, and covariance) with those of the original data. Use data analysis tools such as statistical tests and visualization techniques to evaluate the authenticity and realism of the synthetic data.
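A minimal version of this check, assuming two numeric columns and plain Python, compares means, variances, and the covariance between columns. It also adds a two-sample Kolmogorov-Smirnov (KS) statistic per column, which measures the largest gap between the real and synthetic empirical distributions. The data below is hypothetical, and what counts as "close enough" is a project-specific judgment. In practice, libraries such as SciPy provide KS tests with p-values.

```python
import random
import statistics


def covariance(xs, ys):
    """Sample covariance between two equally long columns."""
    mx, my = statistics.fmean(xs), statistics.fmean(ys)
    return sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / (len(xs) - 1)


def ks_statistic(a, b):
    """Two-sample KS statistic: the largest vertical gap between the empirical
    CDFs of a and b (0 = identical samples, 1 = completely separated)."""
    a, b = sorted(a), sorted(b)
    i = j = 0
    d = 0.0
    while i < len(a) and j < len(b):
        x = min(a[i], b[j])
        # advance past every value <= x so i/len(a) and j/len(b) are the CDFs at x
        while i < len(a) and a[i] <= x:
            i += 1
        while j < len(b) and b[j] <= x:
            j += 1
        d = max(d, abs(i / len(a) - j / len(b)))
    return d


def quality_report(real, synthetic):
    """real, synthetic: lists of (x, y) rows. Tuples are (real, synthetic)."""
    rx, ry = zip(*real)
    sx, sy = zip(*synthetic)
    return {
        "mean_x": (statistics.fmean(rx), statistics.fmean(sx)),
        "mean_y": (statistics.fmean(ry), statistics.fmean(sy)),
        "var_x": (statistics.variance(rx), statistics.variance(sx)),
        "var_y": (statistics.variance(ry), statistics.variance(sy)),
        "cov_xy": (covariance(rx, ry), covariance(sx, sy)),
        "ks_x": ks_statistic(rx, sx),
        "ks_y": ks_statistic(ry, sy),
    }


def make_rows(n, shift=0.0, seed=0):
    """Hypothetical data generator: y depends linearly on x plus noise."""
    rng = random.Random(seed)
    rows = []
    for _ in range(n):
        x = rng.gauss(shift, 1)
        rows.append((x, 2 * x + rng.gauss(0, 1)))
    return rows


real = make_rows(1000, seed=0)
good_report = quality_report(real, make_rows(1000, seed=1))             # same process
bad_report = quality_report(real, make_rows(1000, shift=1.0, seed=2))   # drifted

for name, report in [("similar", good_report), ("drifted", bad_report)]:
    print(name, "KS(x) =", round(report["ks_x"], 3),
          "cov(x, y) real/synthetic =", tuple(round(v, 2) for v in report["cov_xy"]))
```

Note that the drifted data still has almost the same covariance as the real data; only the KS statistic and the means reveal the shift, which is why several complementary checks are worth running together.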

– Iterative improvement and deployment

Integrate synthetic data into applications, workflows, or systems for training machine learning models, testing algorithms, or conducting simulations. Improve the quality and applicability of synthetic data over time by iteratively updating and refining the generative models in response to new data and changing requirements.

This is just a general overview of the essential stages companies need to go through on their way to synthetic data. If you need assistance with synthetic data generation using generative AI, ITRex offers a full spectrum of generative AI development services, including synthetic data creation for model training. To help you synthesize data and create an efficient AI model, we will:

- assess your needs,

- recommend suitable GenAI models,

- help collect sample data and prepare it for model training,

- train and optimize the models,

- generate and pre-process the synthetic data,

- integrate the synthetic data into existing pipelines,

- and provide comprehensive deployment support.

To sum up

Synthetic data generation using generative AI represents a revolutionary approach to producing data that closely resembles real-world distributions and expands the possibilities for creating more efficient and accurate ML models. It enhances dataset diversity by generating additional samples that complement existing datasets while also addressing data privacy challenges. Generative AI can simulate complex scenarios, edge cases, and rare events that may be difficult or costly to observe in real-world data, which supports innovation and scenario testing.

By employing advanced AI and ML techniques, enterprises can unleash the potential of synthetic data generation to spur innovation and achieve more robust and scalable AI solutions. This is where we can help. With extensive expertise in data management, analytics, strategy implementation, and all AI domains, from classic ML to deep learning and generative AI, ITRex will help you identify specific use cases and scenarios where synthetic data can add value.

Want to ensure production data privacy while preserving the ability to use the data freely? Is real data scarce or non-existent? ITRex offers synthetic data generation solutions that address a broad spectrum of business use cases. Drop us a line.

The post Synthetic Data Generation Using Generative AI appeared first on Datafloq.

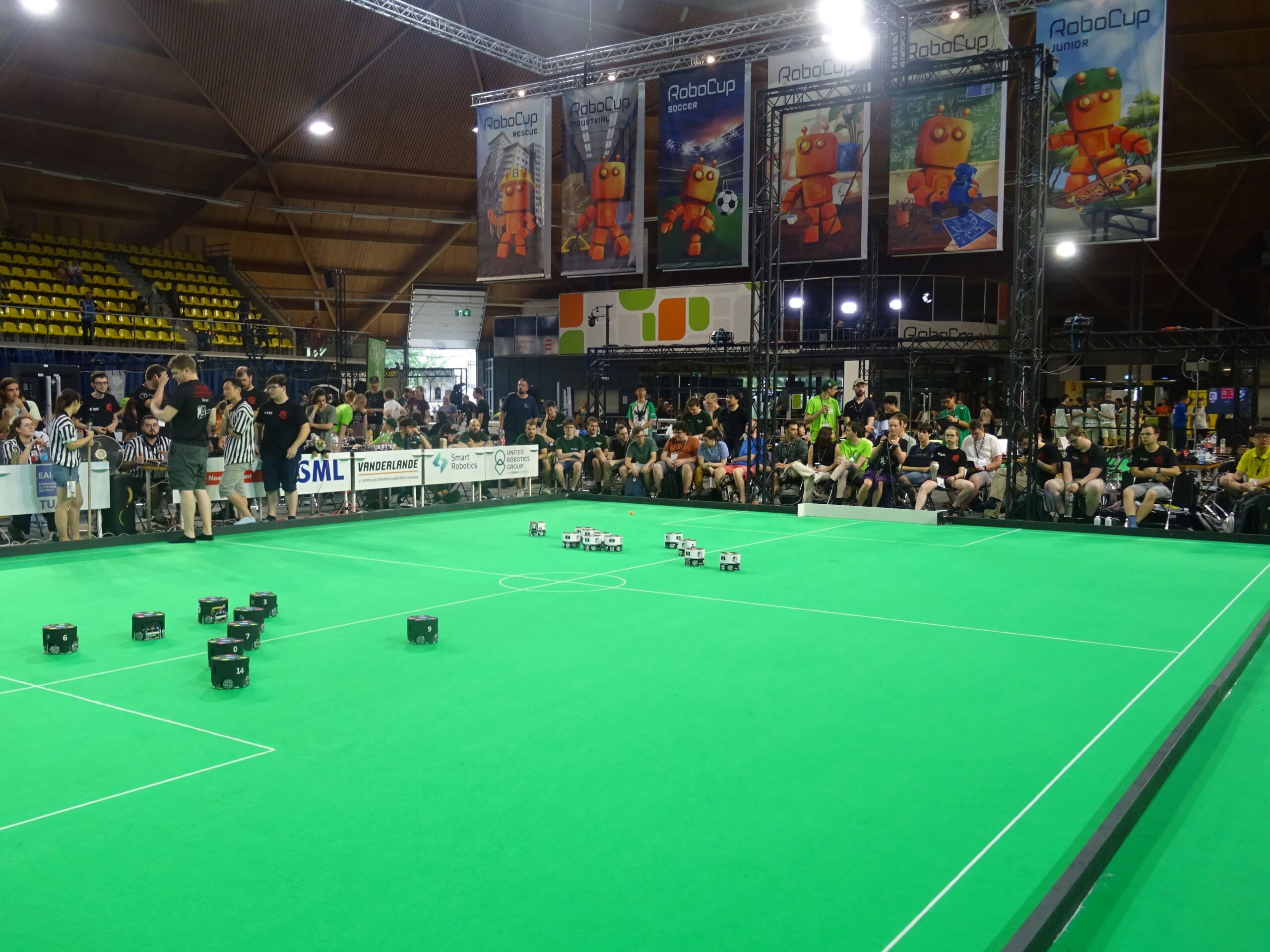

A break in play throughout a Small Measurement League match.

A break in play throughout a Small Measurement League match. The RedbackBots travelling workforce for 2024 (L-to-R: Murray Owens, Sam Griffiths, Tom Ellis, Dr Timothy Wiley, Mark Discipline, Jasper Avice Demay). Photograph credit score: Dr Timothy Wiley.

The RedbackBots travelling workforce for 2024 (L-to-R: Murray Owens, Sam Griffiths, Tom Ellis, Dr Timothy Wiley, Mark Discipline, Jasper Avice Demay). Photograph credit score: Dr Timothy Wiley. Mark Discipline organising the MetaQuest3 to make use of the augmented actuality system. Photograph credit score: Dr Timothy Wiley.

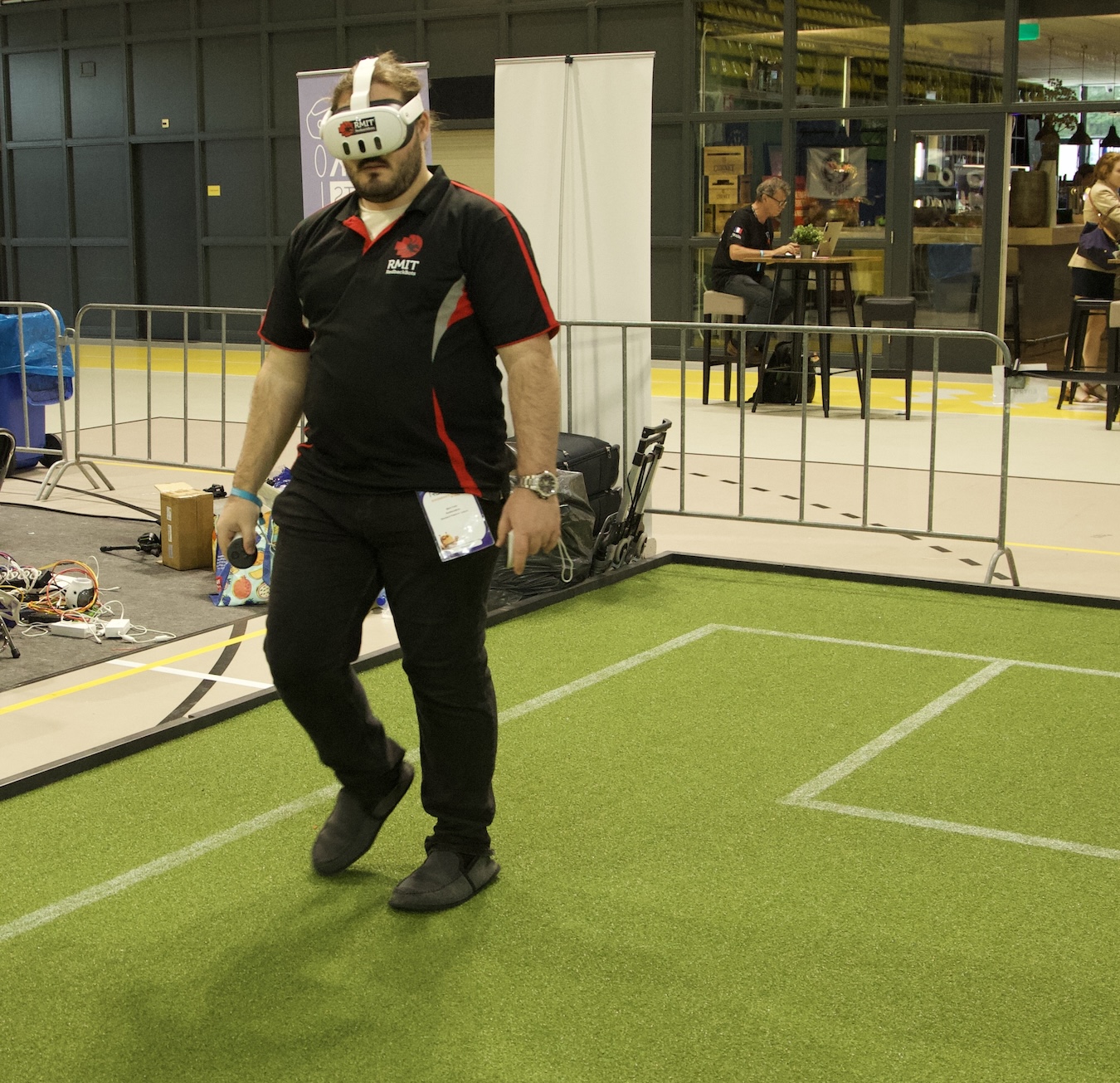

Mark Discipline organising the MetaQuest3 to make use of the augmented actuality system. Photograph credit score: Dr Timothy Wiley. Left: Workforce Skollska Knijgia. Proper: Workforce Overengeniering2.

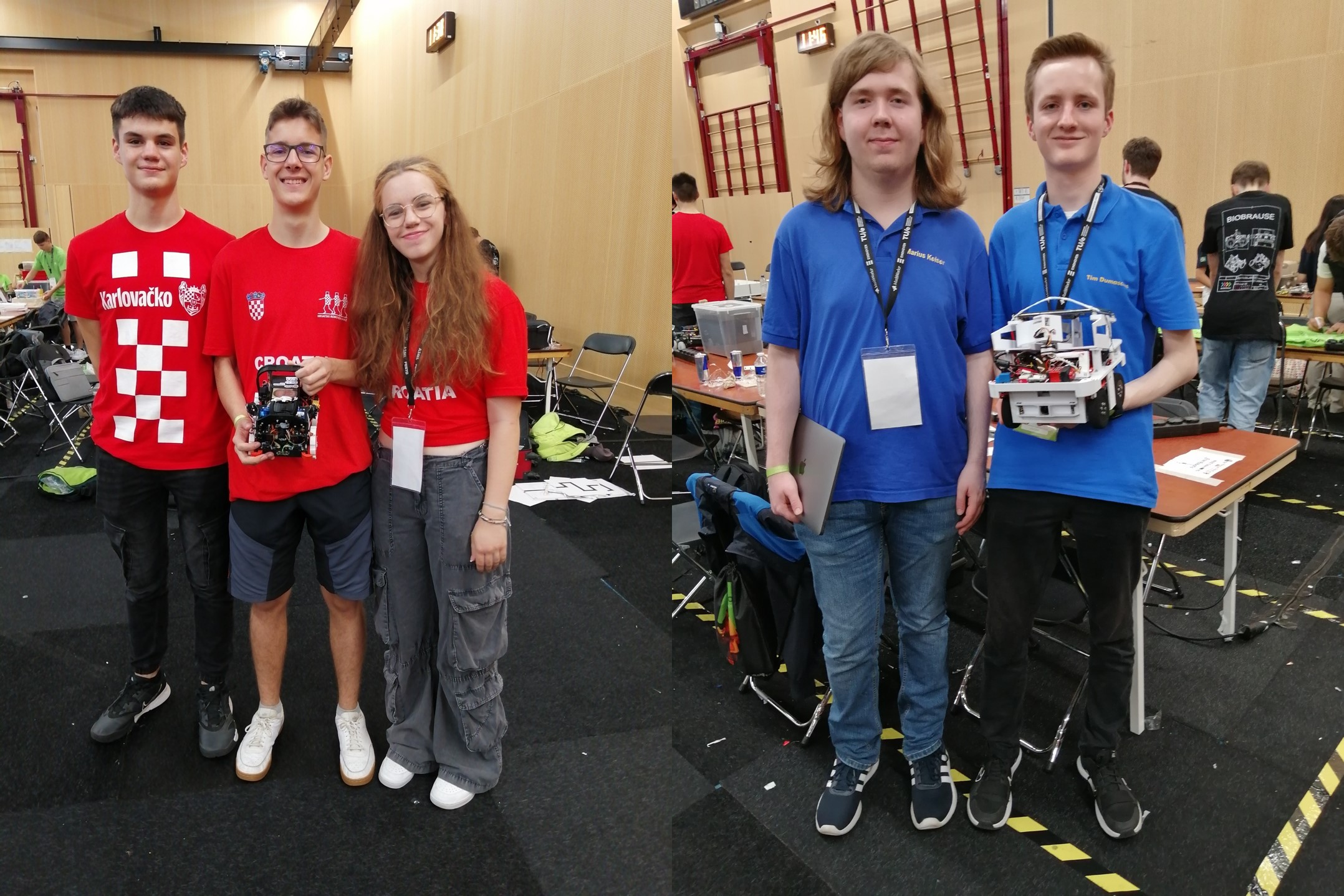

Left: Workforce Skollska Knijgia. Proper: Workforce Overengeniering2. Left: Workforce Tanorobo! Proper: Workforce New Aje.

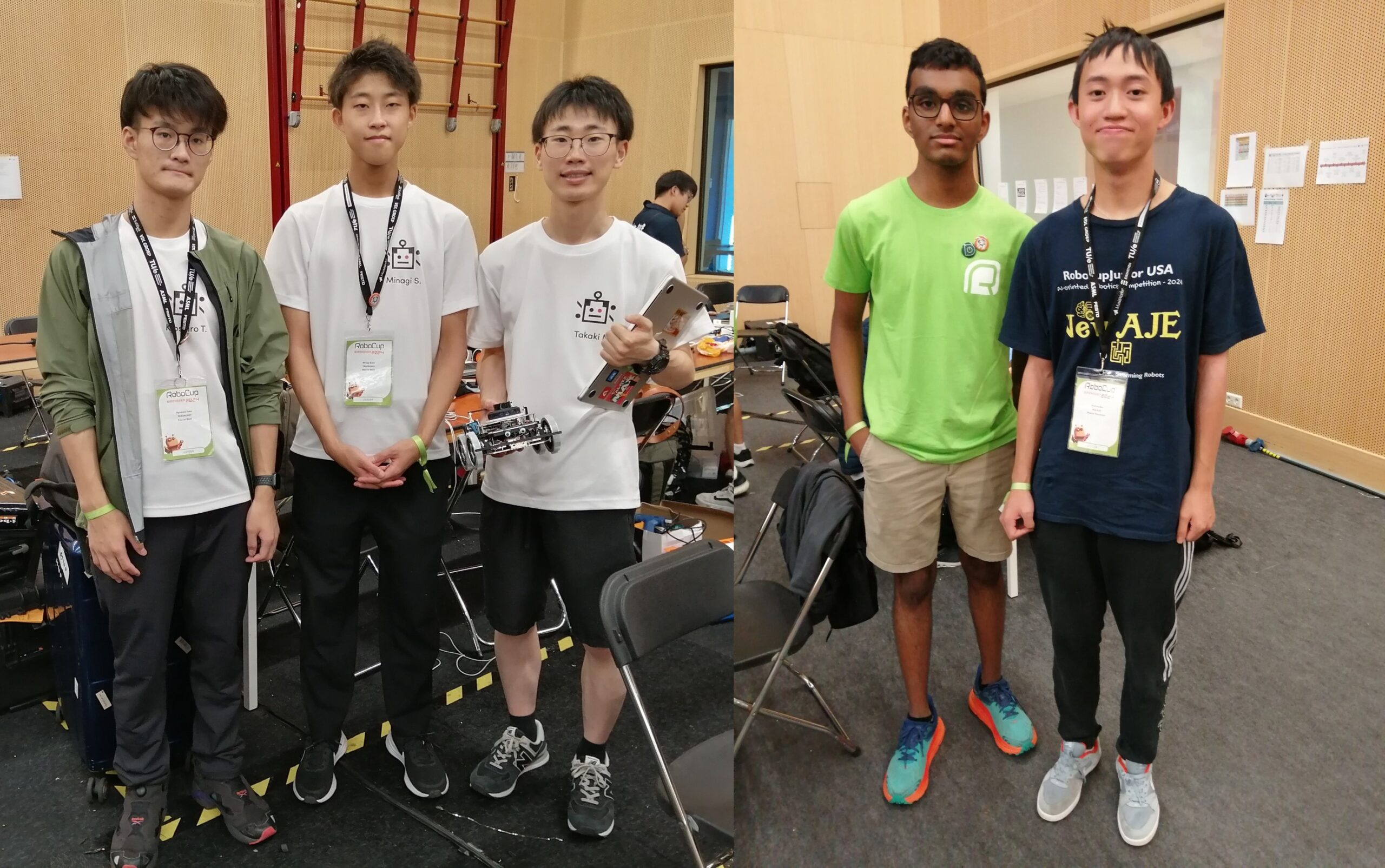

Left: Workforce Tanorobo! Proper: Workforce New Aje. Left: Workforce Medic Bot Proper: Workforce Jam Session.

Left: Workforce Medic Bot Proper: Workforce Jam Session. Standing room solely to see the Grownup Measurement Humanoids.

Standing room solely to see the Grownup Measurement Humanoids.