Have you ever wondered why a bot on a website instantly understands you, even if you misspell words or write informally? It’s thanks to NLP: Natural Language Processing.

It’s a smart algorithm that “reads” your text almost like a human being: it recognizes the meaning, determines your intent, and selects an appropriate response. It combines linguistics, machine learning, and modern language models like GPT.

Introduction to NLP Chatbots

Today’s users don’t want to wait: they expect clear, instant answers without unnecessary clicks. That’s exactly what NLP chatbots are built for. They understand human language, process natural-language queries, and instantly deliver the information users are looking for.

They connect with CRMs, recognize emotions, understand context, and learn from every interaction. That’s why they’re now essential for modern AI-powered customer service, in everything from online shopping to digital banking and healthcare support.

More and more companies are using chatbots as the first point of contact with customers, a moment that needs to be as clear, helpful, and trustworthy as possible.

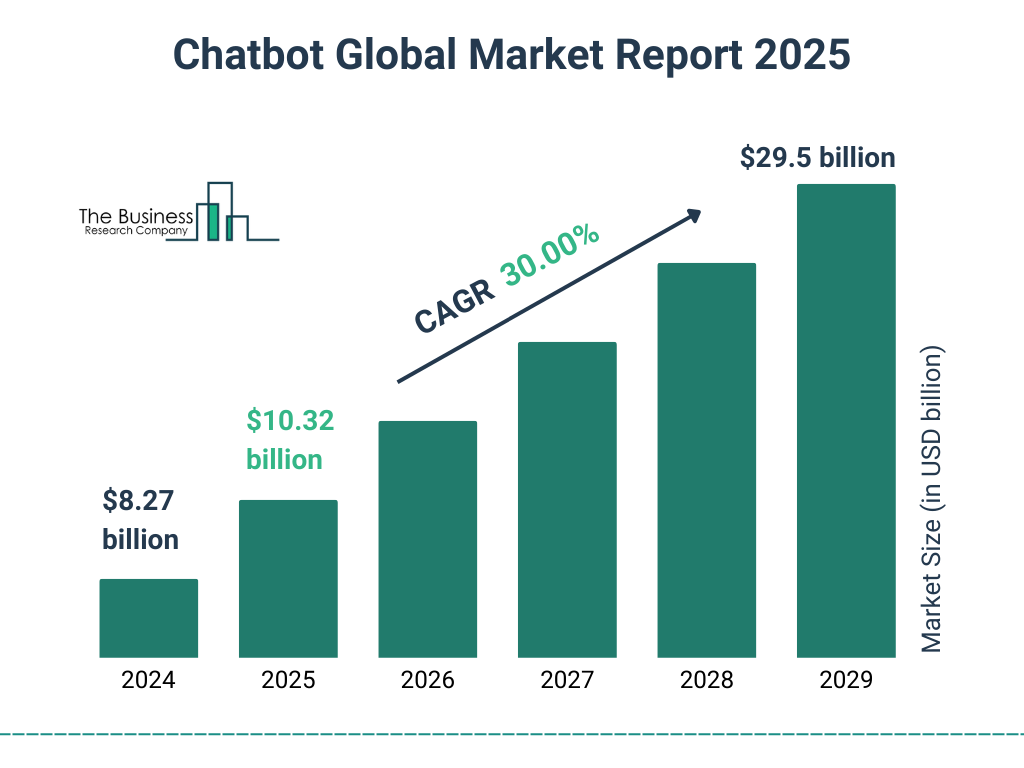

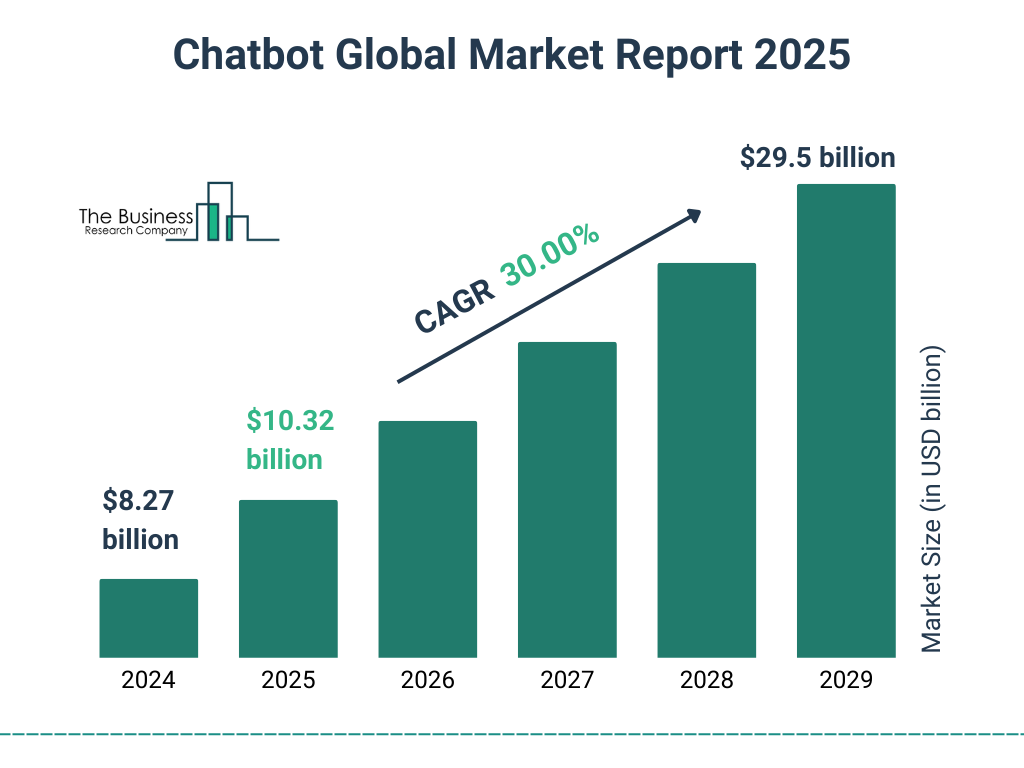

The Business Research Company published a report that shows how quickly the chatbot industry is growing. The market was valued at $10.32 billion in 2025 and is forecast to reach $29.5 billion by 2029, a strong compound annual growth rate of roughly 30%.

Chatbot market 2025, The Business Research Company
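You can check the roughly 30% figure with the standard compound annual growth rate formula: CAGR = (end value / start value)^(1 / years) − 1. Below is a minimal Python sketch that uses only the two market values quoted above. The function name `cagr` is just illustrative.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate: (end / start) ** (1 / years) - 1."""
    if start_value <= 0 or years <= 0:
        raise ValueError("start_value and years must be positive")
    return (end_value / start_value) ** (1 / years) - 1


# Market size in billions of USD, as quoted from The Business Research Company report.
rate = cagr(start_value=10.32, end_value=29.5, years=2029 - 2025)
print(f"Implied CAGR 2025-2029: {rate:.1%}")
```

This prints an implied rate of about 30.0% per year, which matches the figure in the report.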

What Is Natural Language Processing (NLP)?

Natural Language Processing (NLP) helps computers work with human language. It’s not just about reading words. It’s about getting the meaning behind them: what someone is trying to say, what they want, and sometimes even how they feel.

NLP is used in almost every kind of application:

- Modern word processors can predict how your sentence ends and suggest the rest.

- You tell your voice assistant, “Play something relaxing,” and it understands what you want because it interprets context.

- A customer writes in a chat, “Where’s my order?” or “My package hasn’t shown up,” and the bot understands that it’s a delivery question and responds appropriately.

- Google hasn’t relied on keyword matching alone for years. It interprets the contextual meaning of your query even when the query is vague, for example, “the movie where the guy loses his memory.”

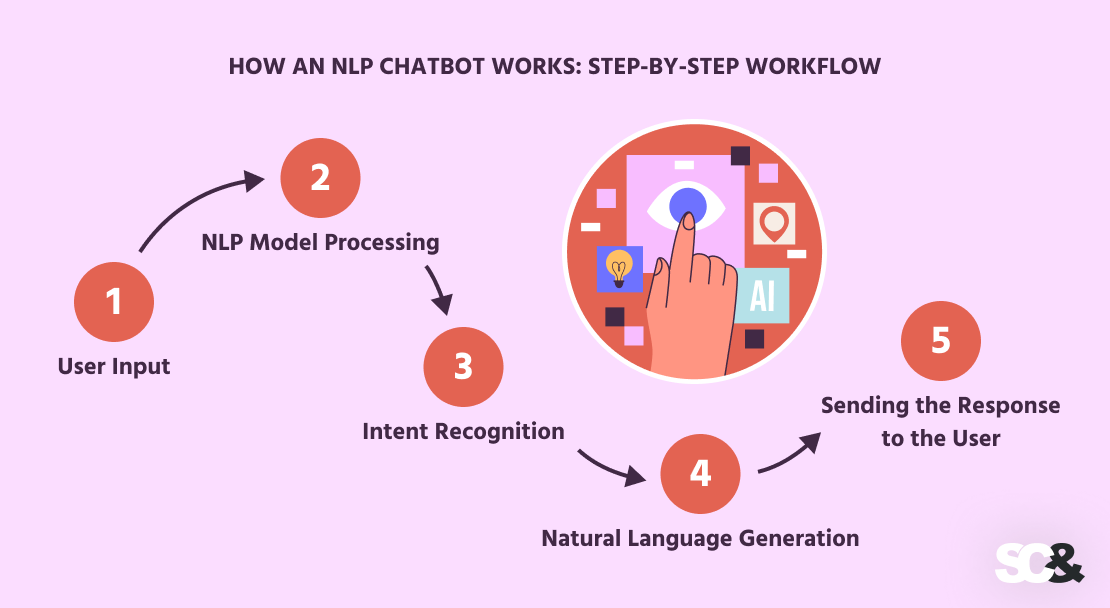

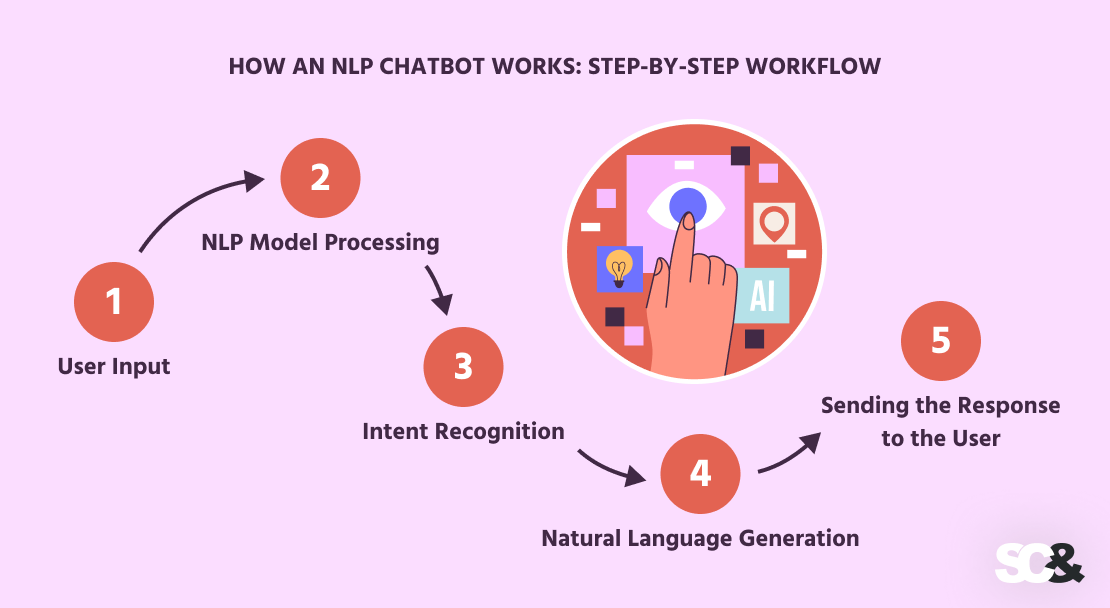

How an NLP Chatbot Works: Step-by-Step Workflow

A conversation with an NLP chatbot is more than a question-and-answer exercise. Behind the scenes, a sequence of operations turns human language into a meaningful bot response. Here’s how it works, step by step:

1. User Input

The user enters a message in the chat, for example: “I want to cancel my order.”

This can be:

- Free text with typos or slang

- A question in unstructured form

- A command phrased in different ways: “Please cancel the order,” “Cancel the purchase,” and so on.

2. NLP Model Processing

The bot analyzes the message using NLP components:

- Tokenization: splitting the text into words and phrases

- Lemmatization: converting words to their base form

- Syntax analysis: identifying parts of speech and sentence structure

- Named Entity Recognition (NER): extracting key data (e.g., order number, date)

Thanks to NLP, the bot understands that “cancel” is the action and “order” is the object.
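Here is a deliberately simplified Python sketch that makes these components concrete. It covers tokenization, lemmatization, and entity extraction for the example message. Several parts are assumptions made for illustration:

- the lemma table,
- the action and object word lists,
- the rule that an order number is a run of five or more digits.

Syntax analysis is skipped entirely. A production bot would use a trained NLP library (spaCy, for example) rather than hand-written rules.

```python
import re

# Toy lemma table (assumption); real pipelines use a trained lemmatizer.
LEMMAS = {"canceled": "cancel", "cancelled": "cancel", "cancelling": "cancel",
          "orders": "order", "wants": "want", "wanted": "want"}
ACTIONS = {"cancel", "track", "return"}            # assumed action verbs
OBJECTS = {"order", "subscription", "package"}     # assumed business objects


def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into word-like tokens."""
    return re.findall(r"[a-z0-9']+", text.lower())


def lemmatize(tokens: list[str]) -> list[str]:
    """Map each token to its base form if the toy table knows it."""
    return [LEMMAS.get(token, token) for token in tokens]


def extract_entities(text: str) -> dict[str, str]:
    """Toy NER: treat a run of 5+ digits (optionally after '#') as an order number."""
    match = re.search(r"#?(\d{5,})", text)
    return {"order_id": match.group(1)} if match else {}


def analyze(text: str) -> dict:
    lemmas = lemmatize(tokenize(text))
    return {
        "lemmas": lemmas,
        "action": next((w for w in lemmas if w in ACTIONS), None),
        "object": next((w for w in lemmas if w in OBJECTS), None),
        "entities": extract_entities(text),
    }


print(analyze("I want to cancel my order #12345"))
```

For the sample message, this finds the action `cancel`, the object `order`, and the entity `{'order_id': '12345'}`.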

3. Intent Recognition

The chatbot determines what the user wants. In this case, the intent is order cancellation.

In addition, it analyzes:

- Emotional tone (irritation, urgency)

- Conversation history (context)

- Whether clarifying questions are needed (if information is insufficient)
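A simple way to picture intent recognition: compare the incoming message with example phrases for each intent. The bot accepts the best match only if its similarity clears a threshold. Otherwise, it asks a clarifying question.

The Python sketch below uses bag-of-words cosine similarity as a stand-in for a trained classifier. The intent names, example phrases, and the 0.5 threshold are assumptions for illustration. Emotional tone and conversation history are left out.

```python
import math
import re
from collections import Counter
from typing import Optional, Tuple

# Hypothetical intents with a few example phrases each.
INTENT_EXAMPLES = {
    "cancel_order": ["i want to cancel my order", "please cancel the order",
                     "cancel the purchase", "i no longer need the item"],
    "order_status": ["where is my order", "my package hasn't shown up",
                     "track my order", "when will my order arrive"],
}


def vectorize(text: str) -> Counter:
    """Bag-of-words vector: word -> count."""
    return Counter(re.findall(r"[a-z']+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(count * b[word] for word, count in a.items())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def recognize_intent(text: str, threshold: float = 0.5) -> Tuple[Optional[str], float]:
    """Return (best intent, score), or (None, score) if no intent is similar enough."""
    query = vectorize(text)
    best_intent, best_score = None, 0.0
    for intent, examples in INTENT_EXAMPLES.items():
        for example in examples:
            score = cosine(query, vectorize(example))
            if score > best_score:
                best_intent, best_score = intent, score
    return (best_intent, best_score) if best_score >= threshold else (None, best_score)


def reply(text: str) -> str:
    intent, _ = recognize_intent(text)
    if intent is None:
        # Not confident enough: ask instead of guessing.
        return "Sorry, did you want to cancel an order or check its status?"
    return f"Detected intent: {intent}"
```

For example, `recognize_intent("Please cancel my order")` matches `cancel_order` with a score of 0.75. An off-topic message falls below the threshold and gets the clarifying question instead.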

4. Natural Language Generation

Based on the intent and the extracted data, the bot generates a meaningful, clear response. This could be:

- A static, template-based reply

- Text generated dynamically by the NLG module

- A response built through CRM/API integration (e.g., retrieving the order status)

Example response:

“Got it! I’ve canceled order №12345. The refund will be processed within 3 business days.”
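For the template-based option, response generation can be as simple as filling slots in prepared templates after a backend lookup. The Python sketch below makes two assumptions:

- a hypothetical in-memory `ORDERS` dictionary stands in for the CRM/order API;
- the 3-day refund window is taken from the example response.

A real integration would call your own backend instead.

```python
from dataclasses import dataclass


@dataclass
class Order:
    order_id: str
    status: str


# Stand-in for a CRM/order API (assumption): order number -> order record.
ORDERS = {"12345": Order("12345", "processing")}

TEMPLATES = {
    "cancel_order.success": ("Got it! I've canceled order №{order_id}. "
                             "The refund will be processed within {refund_days} business days."),
    "cancel_order.not_found": "I couldn't find order №{order_id}. Could you double-check the number?",
    "cancel_order.missing_id": "Sure! Which order would you like to cancel? Please share the order number.",
}


def generate_response(intent: str, entities: dict, refund_days: int = 3) -> str:
    """Pick a template based on the intent and the backend result, then fill its slots."""
    order_id = entities.get("order_id")
    if order_id is None:
        return TEMPLATES[f"{intent}.missing_id"]
    order = ORDERS.get(order_id)
    if order is None:
        return TEMPLATES[f"{intent}.not_found"].format(order_id=order_id)
    order.status = "canceled"  # the "action" side of the integration
    return TEMPLATES[f"{intent}.success"].format(order_id=order_id, refund_days=refund_days)


print(generate_response("cancel_order", {"order_id": "12345"}))
```

Keeping the wording in templates makes replies predictable and easy to review. The NLG-module option would replace the template lookup with generated text.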

5. Sending the Response to the User

In the final step, the bot sends the finished response to the interface, where the user can:

- Continue the conversation

- Confirm or cancel the action

- Move on to the next question

NLP Chatbots vs. Rule-Based Chatbots: Key Differences

When developing a chatbot, it is important to choose the right approach. That choice determines how useful, flexible, and adaptable the bot will be in real-life scenarios. Broadly, chatbots fall into two types: rule-based and NLP-driven.

The first works according to predefined rules, while the second uses natural language processing and machine learning. Below is a comparison of the key differences between these approaches:

| Aspect | Rule-Based Chatbots | NLP Chatbots |
|---|---|---|
| How they work | Use fixed rules: “if this, then that.” | Use AI to work out what the user actually means. |
| Conversation style | Follow strict commands. | Can handle different ways of asking the same thing. |
| Language skills | Don’t actually “understand”; they just match keywords. | Understand the message as a whole, not just individual words. |
| Learning ability | Don’t learn: once set up, that’s how they stay. | Can get smarter over time by learning from new interactions. |
| Context awareness | Don’t keep track of previous messages. | Remember the flow of the conversation and respond accordingly. |
| Setup | Easy to build and launch quickly. | Take longer to develop but offer more depth and flexibility. |
| Example request | “1: cancel order” | “I’d like to cancel my order. I don’t need it anymore.” |

Key Differences Between Rule-Based and NLP Chatbots

Strengths and Limitations

Both rule-based and NLP chatbots have their pros and cons. The best option depends on what you’re building, your budget, and the kind of customer experience your users expect. Here’s a closer look at what each type brings to the table, and where things can get tricky.

Advantages of Rule-Based Chatbots:

- Easy to build and manage

- Reliable for handling standard, predictable flows

- Work well for FAQs and menu-based navigation

Limitations of Rule-Based Chatbots:

- Struggle with unusual or unexpected queries

- Can’t process natural language

- Lack understanding of context and user intent

Advantages of NLP Chatbots:

- Understand free-form text and different ways of phrasing

- Can recognize intent, emotions, and even typos and mistakes

- Support natural conversations and remember context

- Learn and improve over time

Limitations of NLP Chatbots:

- More complex to develop and test

- Require high-quality training data

- May give suboptimal answers if not trained well

When to Use Each Type

There’s no one-size-fits-all solution when it comes to chatbots. The best choice really depends on what you need the bot to do. For simple, well-defined tasks, a basic rule-based bot might be all you need. But if you’re dealing with more open-ended conversations, or you want the bot to understand natural language and context, an NLP-based solution makes a lot more sense.

Here’s a quick comparison to help you decide which type of chatbot fits different use cases:

| Use Case | Recommended Chatbot Type | Why |
|---|---|---|
| Simple navigation (menus, buttons) | Rule-Based | Doesn’t require language understanding; easy to implement |
| Frequently Asked Questions (FAQ) | Rule-Based or Hybrid | Scenarios can be defined in advance |
| Support with a wide range of queries | NLP Chatbot | Requires flexibility and context awareness |
| E-commerce (order help, returns) | NLP Chatbot | Users phrase requests differently; personalization is important |
| Temporary campaigns, promo offers | Rule-Based | Quick setup; limited, specific flows |
| Voice assistants, voice input | NLP Chatbot | Needs to understand natural speech |

Chatbot Use Cases and Best-Fit Technologies

Machine Learning and Training Data

Machine learning is what makes NLP chatbots truly intelligent. Unlike bots that stick to rigid scripts, a trainable model can understand what people mean, however they phrase it, and adapt to the way real users talk.

At the core is training on large datasets of real conversations, known as training data. Each user message in the dataset is labeled with three things:

- what the user wants (the intent),
- what information the message contains (the entities),
- what the correct response should be.

For example, the bot learns that “I want to cancel my order,” “Please cancel my order,” and “I no longer need the item” all express the same intent, even though the wording is different. Generally, the more varied examples it sees, the more accurately the model performs.


But it’s not just about piling up chat logs. To train a chatbot well, that data needs to be cleaned up and organized. That means:

- figuring out what the user actually wants (the intent),
- picking out key details like names or dates,
- noticing common typos or quirks,
- capturing all the different ways people might say the same thing.

It’s a team effort: analysts, linguists, and data scientists all play a part in making sure the bot really gets how people talk.
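What does “labeled” mean in practice? Each example pairs the raw text with an intent and with character-offset spans that mark the entities. The Python sketch below uses a generic, hypothetical format; each platform, such as Rasa or Dialogflow, has its own.

It adds two simple quality checks:

- entity spans must fall inside the text and must not include stray whitespace;
- examples are counted per intent, so gaps are easy to spot.

```python
from collections import Counter

# Hypothetical annotated examples: entity spans are (start, end, label) character offsets.
TRAINING_DATA = [
    {"text": "I want to cancel my order 12345", "intent": "cancel_order",
     "entities": [(26, 31, "order_id")]},
    {"text": "Please cancel my order", "intent": "cancel_order", "entities": []},
    {"text": "I no longer need the item", "intent": "cancel_order", "entities": []},
    {"text": "Where is order 67890?", "intent": "order_status",
     "entities": [(15, 20, "order_id")]},
]


def validate_spans(examples: list[dict]) -> list[str]:
    """Report entity spans that are out of range or include surrounding whitespace."""
    problems = []
    for i, example in enumerate(examples):
        text = example["text"]
        for start, end, label in example["entities"]:
            if not 0 <= start < end <= len(text):
                problems.append(f"example {i}: {label} span ({start}, {end}) is out of range")
            elif text[start:end] != text[start:end].strip():
                problems.append(f"example {i}: {label} span {text[start:end]!r} has extra whitespace")
    return problems


def examples_per_intent(examples: list[dict]) -> Counter:
    """Count labeled examples per intent to reveal under-covered intents."""
    return Counter(example["intent"] for example in examples)


print(validate_spans(TRAINING_DATA))       # an empty list means no problems found
print(examples_per_intent(TRAINING_DATA))
```

Here `order_status` has only one example. That is exactly the kind of gap the count is meant to surface before training.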

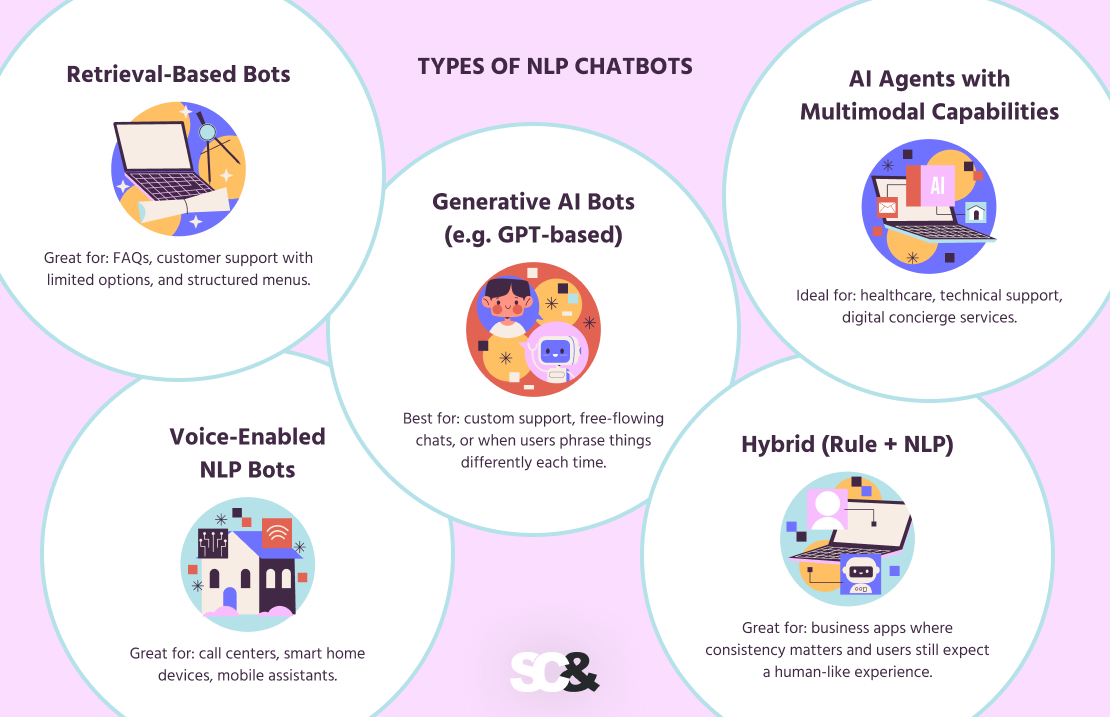

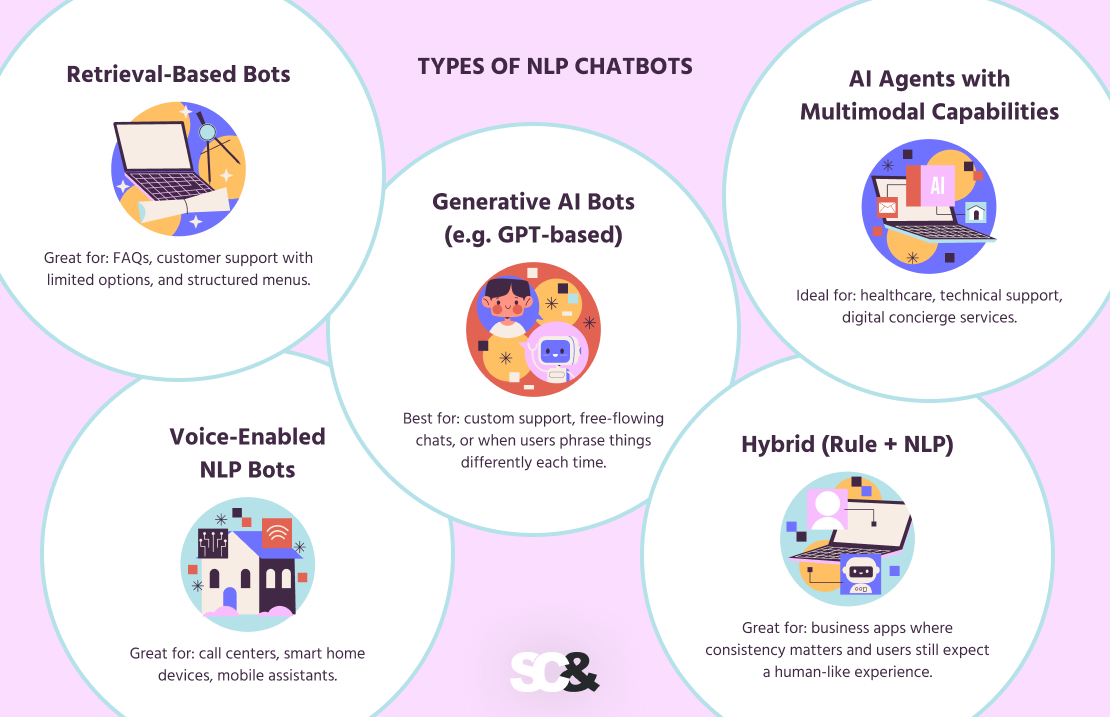

Types of NLP Chatbots

Not all chatbots are built the same. Some follow simple rules; others feel almost like real people. Your business may need quick answers, deep conversations, or even voice and image support, and there’s a type of chatbot for each. Here’s a quick guide to the most common types you’ll come across in 2025:

Retrieval-Based Bots

These bots are like smart librarians. They don’t invent anything; they just pick the best response from a list of answers you’ve already given them. If someone asks a question that’s been asked before, they give an instant answer. Great for: FAQs, customer support with limited options, and structured menus.
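A retrieval-based bot fits in a few lines of code:

1. Normalize the question.
2. Find the closest known question.
3. Return its prepared answer, or nothing if no known question is close enough.

This Python sketch uses the standard library’s `difflib.get_close_matches`, which measures character-level similarity and so also tolerates small typos. It is a simple stand-in for the embedding or TF-IDF search a production system would more likely use. The FAQ entries and the 0.6 cutoff are assumptions.

```python
import difflib
import re
from typing import Optional

# Hypothetical knowledge base: normalized known question -> prepared answer.
FAQ = {
    "what are your opening hours": "We're open Monday to Friday, 9:00 to 18:00.",
    "how do i return an item": "You can return any item within 30 days from your account page.",
    "do you ship internationally": "Yes, we ship to most countries.",
}


def normalize(text: str) -> str:
    """Lowercase, drop punctuation, and collapse whitespace."""
    return " ".join(re.sub(r"[^a-z0-9' ]+", " ", text.lower()).split())


def answer(question: str, cutoff: float = 0.6) -> Optional[str]:
    """Return the prepared answer for the closest known question, or None."""
    matches = difflib.get_close_matches(normalize(question), list(FAQ), n=1, cutoff=cutoff)
    return FAQ[matches[0]] if matches else None


print(answer("How do I retrun an item?"))  # tolerates the typo
```

A `None` result is the bot’s cue to fall back, for example by asking the user to rephrase or handing off to a person.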

Generative AI Bots (e.g., GPT-based)

These are the ones that can truly hold a conversation. Instead of replying with predetermined responses, they generate their own based on your input. They perform best in non-linear conversations, adapt closely to the user’s conversational style, and can match almost any tone, style, and humor.

Best for: personalized support, free-flowing conversations, or situations where users almost never say things the same way twice.

AI Agents with Multimodal Capabilities

These bots can do more than just read text. You can chat with them, send an email, or upload a document, and they know how to handle it. Think of them as digital assistants with superpowers: they can “see,” “hear,” and “understand” at the same time. Ideal for: healthcare, technical support, virtual concierge services.

Voice-Enabled NLP Bots

These are the bots you speak to, and they speak back. They use speech-to-text to understand your voice and text-to-speech to reply. Perfect when you’re on the go, multitasking, or simply prefer talking to typing. Great for: call centers, smart home devices, mobile assistants.

Hybrid (Rule + NLP)

Why choose between simple and smart? Hybrid bots combine rule-based logic for easy tasks (like “press 1 to cancel”) with NLP to handle more natural, complex messages.

They’re flexible, scalable, and reliable. Great for: enterprise apps where consistency matters and users still expect a human-like experience.
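You can model the hybrid idea as a router. Deterministic rules are checked first, and only messages that match no rule go to the NLP model. In this Python sketch, the rule patterns are assumptions. `keyword_classifier` is a hypothetical placeholder for a trained model; it is passed in as a function so it can be swapped out.

```python
import re
from typing import Callable, Tuple

# Deterministic rules, checked first (patterns are illustrative assumptions).
RULES = [
    (re.compile(r"^\s*1\s*$"), "cancel_order"),             # menu option "press 1 to cancel"
    (re.compile(r"^\s*(menu|start)\s*$", re.IGNORECASE), "show_menu"),
]


def keyword_classifier(text: str) -> str:
    """Placeholder for a trained NLP model (hypothetical)."""
    return "cancel_order" if "cancel" in text.lower() else "fallback"


def route(text: str, classify: Callable[[str], str] = keyword_classifier) -> Tuple[str, str]:
    """Return (intent, source): a matching rule wins; otherwise ask the NLP model."""
    for pattern, intent in RULES:
        if pattern.match(text):
            return intent, "rule"
    return classify(text), "nlp"


print(route("1"))                                    # ('cancel_order', 'rule')
print(route("I'd like to cancel, I don't need it"))  # ('cancel_order', 'nlp')
```

Returning the source alongside the intent also makes it easy to log how often each path is used.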

How to Build an NLP Chatbot

Creating an NLP chatbot is a process that combines business logic, linguistic analysis, and technical implementation. Here are the key stages of development:

Define Use Cases and Intent Structure

The first step is to determine why you need a chatbot and what tasks it will perform: handling requests, customer support, bookings, answering frequently asked questions, and so on.

Next, you define the intent structure: a list of user intentions, for example “check order status,” “cancel subscription,” or “ask a question about delivery.” Each intent should be clearly described and covered with example phrases that users might use to express it.
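One way to keep the intent structure explicit is to store each intent with a description, example phrases, and the data (slots) it needs, then check the definitions automatically. The Python sketch below uses a hypothetical format, not any platform’s schema. The three-example minimum is an arbitrary assumption.

The check flags two problems:

- intents with too few examples;
- phrases that appear under two intents, a common source of overlap.

```python
from dataclasses import dataclass, field


@dataclass
class Intent:
    name: str
    description: str
    examples: list[str]
    required_slots: list[str] = field(default_factory=list)  # data the bot must collect


INTENTS = [
    Intent("check_order_status", "User asks where their order is",
           ["where is my order", "track my package", "has my order shipped"], ["order_id"]),
    Intent("cancel_subscription", "User wants to stop a recurring subscription",
           ["cancel my subscription", "stop my plan", "i want to unsubscribe"]),
    Intent("delivery_question", "General questions about delivery options",
           ["how long does delivery take", "do you deliver on weekends",
            "what are the shipping costs"]),
]


def check_intents(intents: list[Intent], min_examples: int = 3) -> list[str]:
    """Flag intents with too few examples and phrases claimed by more than one intent."""
    issues = []
    owner = {}  # normalized phrase -> name of the first intent that used it
    for intent in intents:
        if len(intent.examples) < min_examples:
            issues.append(f"{intent.name}: {len(intent.examples)} example(s), need at least {min_examples}")
        for phrase in intent.examples:
            key = phrase.lower().strip()
            if key in owner and owner[key] != intent.name:
                issues.append(f"{phrase!r} is used by both {owner[key]} and {intent.name}")
            owner.setdefault(key, intent.name)
    return issues


print(check_intents(INTENTS))  # [] when the definitions are consistent
```

Running a check like this whenever intents change helps catch overlaps before they turn into misclassifications.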

Choose NLP Engines (ChatGPT, Dialogflow, Rasa, etc.)

The next step is to choose a natural language processing platform or engine. Options include:

- Dialogflow: a popular Google solution with a user-friendly visual interface

- Rasa: an open-source framework with self-hosted deployment and flexible customization

- ChatGPT API: powerful LLMs from OpenAI, suited to complex and flexible dialogs

- Amazon Lex, Microsoft LUIS, IBM Watson Assistant: enterprise platforms with deep integration

The choice depends on the level of control you need, your privacy requirements, and integration with other systems.

Train with Sample Dialogues and Feedback Loops

After selecting a platform, the bot is trained on example conversations. It is important to collect as many variants as possible of the phrases users use to express the same intent.

It is also advisable to set up a process for feedback and retraining. The system should “learn” from new data: improve recognition accuracy and natural language understanding, account for typical errors, and update the entity dictionary.
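A feedback loop usually starts with deciding which conversations a person should review before the next retraining run. This Python sketch selects turns where the model was unsure or the user rated the answer as unhelpful. It then exports them as JSON Lines for labeling. The field names and the 0.6 confidence cutoff are assumptions.

```python
import json
from dataclasses import asdict, dataclass
from typing import Optional


@dataclass
class Turn:
    text: str
    predicted_intent: Optional[str]
    confidence: float
    helpful: Optional[bool] = None  # user rating: True / False, or None if not rated


def select_for_review(turns: list[Turn], min_confidence: float = 0.6) -> list[Turn]:
    """Low-confidence predictions and answers rated unhelpful go to human review."""
    return [t for t in turns if t.confidence < min_confidence or t.helpful is False]


def to_jsonl(turns: list[Turn]) -> str:
    """One JSON object per line, a common format for labeling queues."""
    return "\n".join(json.dumps(asdict(t)) for t in turns)


log = [
    Turn("cancel my order pls", "cancel_order", 0.92, helpful=True),
    Turn("the thing i bought is broken", None, 0.31),
    Turn("where's my parcel", "order_status", 0.81, helpful=False),
]
print(to_jsonl(select_for_review(log)))
```

After labeling, the corrected examples are added to the training data and the model is retrained. How often to do that is a product decision.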

Integrate with Frontend (Web, Mobile, Voice)

The next stage is to integrate the chatbot with user channels: website, mobile app, messenger, or voice assistant. The interface should be intuitive and easily adaptable to different devices.

It is also important to ensure fast data exchange with backend systems: CRM, databases, payment systems, and other external services.

Add Fallbacks and Human Handoff Logic

Even the smartest bot won’t be able to process 100% of requests. That’s why it’s essential to implement fallback mechanics. If the bot doesn’t understand the user, it asks again, offers options, or passes the conversation to an operator.

Human handoff (transferring the conversation to a live agent) is a critical element in complex or sensitive situations. It increases trust in the system and helps avoid a negative user experience.
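The fallback-and-handoff behavior described above can be expressed as a small policy:

- the first miss asks the user to rephrase;
- the second miss offers options;
- further misses, or any sensitive topic, hand the conversation to a human.

In this Python sketch, the list of sensitive intents and the limit of two failed attempts are assumptions.

```python
from dataclasses import dataclass
from typing import Optional

SENSITIVE_INTENTS = {"complaint", "payment_dispute"}  # hypothetical intent names


@dataclass
class Session:
    failed_attempts: int = 0


def next_action(session: Session, intent: Optional[str], max_failures: int = 2) -> str:
    """Decide what the bot does next; intent is None when it didn't understand the user."""
    if intent in SENSITIVE_INTENTS:
        return "handoff"                       # go straight to a live agent
    if intent is None:
        session.failed_attempts += 1
        if session.failed_attempts > max_failures:
            return "handoff"
        return "ask_to_rephrase" if session.failed_attempts == 1 else "offer_options"
    session.failed_attempts = 0                # understood: reset the counter
    return "answer"


session = Session()
print([next_action(session, None) for _ in range(3)])  # ['ask_to_rephrase', 'offer_options', 'handoff']
```

Resetting the counter after a successful turn means one earlier misunderstanding doesn’t push the user toward handoff later in the conversation.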

Tools and Technologies for NLP Chatbots

These days, chatbots can carry on real conversations, guide people through tasks, and make things feel simple and natural. What makes that possible? Carefully chosen tools that help teams build chatbots users can actually rely on: clear, helpful, and easy to talk to.

To make it easier to choose the right platform, here’s a comparison table highlighting key features:

| Platform | Access Type | Customization Level | Language Support | Integrations | Best For |
|---|---|---|---|---|---|
| OpenAI / GPT-4 | Cloud (API) | Medium | Multilingual | Via API | AI assistants, text generation |
| Google Dialogflow | Cloud | Medium | Multilingual | Google Cloud, messaging platforms | Rapid development of conversational bots |
| Rasa | On-prem / Cloud | High | Multilingual | REST API | Custom on-premise solutions |
| Microsoft Bot Framework | Cloud | High (via code) | Multilingual | Azure, Teams, Skype, others | Enterprise-level chatbot applications |
| AWS Lex | Cloud | Medium | Limited | AWS Lambda, DynamoDB | Voice and text bots within the AWS ecosystem |
| IBM Watson Assistant | Cloud | Medium | Multilingual | IBM Cloud, CRM, external APIs | Enterprise analytics and customer support |

Comparison of Leading NLP Chatbot Development Platforms

Best Practices for NLP Chatbot Development

Building an efficient NLP chatbot depends not only on the quality of the model but also on how that model is trained, tested, and improved. The following core practices help make the bot accurate, useful, and sustainable in the real world.

Keep Training Data Updated

Regularly updated training data helps the chatbot adapt to changes in user behavior and language patterns. Up-to-date data increases the accuracy of intent recognition and reduces errors in query processing.

Use Clear Intent Definitions

Well-defined intents remove ambiguity, overlap, and conflicts between contexts. A well-organized intent model understands queries more reliably and helps the bot respond faster.

Monitor Conversations for Edge Cases

Analyzing real dialogs lets you identify non-standard cases the bot fails to handle. Spotting these edge cases helps you make adjustments quickly and makes the dialog logic more robust.

Combine Rule-Based Chatbot Logic for Safety

A chatbot that combines NLP with a few well-placed rules is much better at staying on track. In tricky or high-stakes situations, it can avoid errors and stick to your business logic without going off script.

Test with Real Users

Testing with live users reveals weaknesses that can’t be reproduced in an isolated environment. User feedback helps you better understand expectations and behavior, which in turn helps improve the user experience.

Track Metrics (Fallback Rate, CSAT, Resolution Time)

Keeping an eye on metrics like fallback rate, customer satisfaction (CSAT), and how long it takes to resolve queries shows you how well your chatbot is doing and where there’s room to improve.
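The three metrics can be computed from conversation logs. The Python sketch below assumes a hypothetical per-conversation record and one common set of definitions:

- fallback rate: the share of bot turns that hit the fallback;
- CSAT: the percentage of 4 or 5 ratings on a 1-to-5 survey;
- resolution time: averaged over resolved conversations only.

Your analytics platform may define these differently.

```python
from dataclasses import dataclass
from statistics import mean
from typing import Optional


@dataclass
class Conversation:
    bot_turns: int
    fallback_turns: int                  # turns where the bot didn't understand
    csat: Optional[int]                  # survey score 1-5, None if skipped
    resolution_minutes: Optional[float]  # None if the issue was never resolved


def chatbot_metrics(conversations: list[Conversation]) -> dict:
    total_turns = sum(c.bot_turns for c in conversations)
    scores = [c.csat for c in conversations if c.csat is not None]
    times = [c.resolution_minutes for c in conversations if c.resolution_minutes is not None]
    return {
        "fallback_rate": sum(c.fallback_turns for c in conversations) / total_turns if total_turns else 0.0,
        "csat_percent": 100 * sum(score >= 4 for score in scores) / len(scores) if scores else None,
        "avg_resolution_minutes": mean(times) if times else None,
    }


logs = [
    Conversation(bot_turns=10, fallback_turns=1, csat=5, resolution_minutes=4.0),
    Conversation(bot_turns=6, fallback_turns=2, csat=3, resolution_minutes=None),
    Conversation(bot_turns=4, fallback_turns=1, csat=None, resolution_minutes=8.0),
]
print(chatbot_metrics(logs))
```

For these three sample conversations, the fallback rate is 0.2 (4 of 20 turns), CSAT is 50%, and the average resolution time is 6 minutes.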

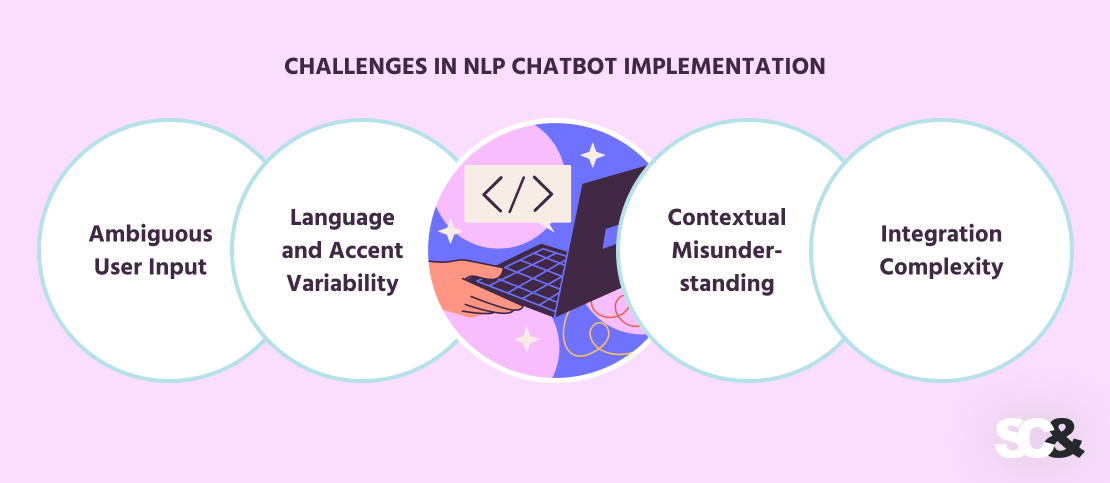

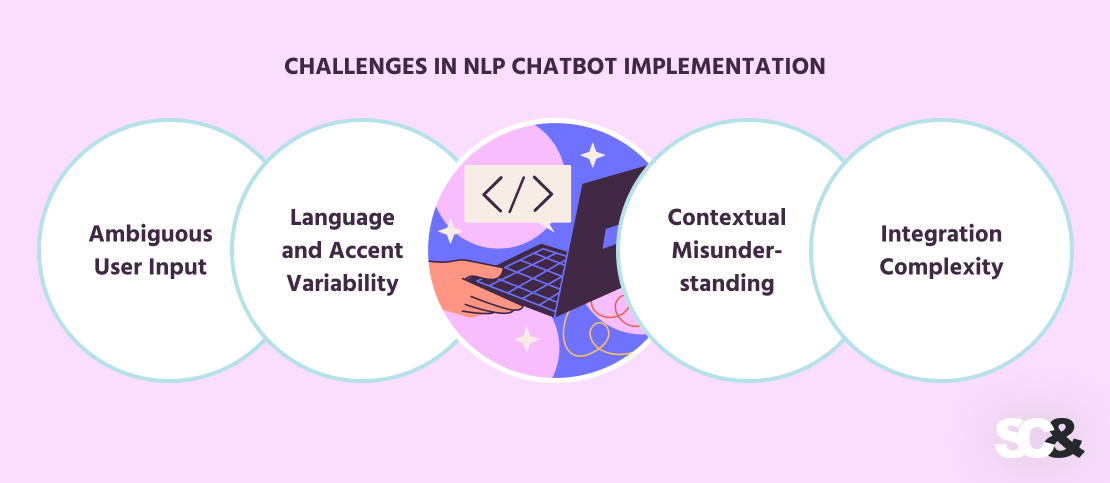

Challenges in NLP Chatbot Implementation

Although modern NLP chatbots are highly capable, bringing them into real-world use comes with its own set of challenges. Knowing about these hurdles ahead of time helps you plan better and build a chatbot that’s more reliable and effective.

Ambiguous User Input

People don’t always say things clearly. Messages can be vague, carry double meanings, or lack context. That makes it harder for the chatbot to understand the user’s intent and can lead to wrong replies. To reduce this risk, include clarifying questions and have a well-thought-out fallback strategy.

Language and Accent Variability

A chatbot needs to recognize different languages, dialects, and accents, especially when voice input is involved. If the system isn’t trained well enough on these variations, it can misinterpret what’s being said and break the user experience.

Contextual Misunderstanding

Long or complex conversations can be tricky. If a user changes the topic or uses pronouns like “it” or “that,” the chatbot might lose track of what’s being discussed. This can lead to awkward or irrelevant replies. To avoid this, it’s essential to implement context tracking and session memory.
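Context tracking can start very simply. The bot remembers entities from earlier turns and reuses them when the user refers back with “it” or “that.”

The Python sketch below is a naive, assumed approach, not real coreference resolution:

- pronouns are detected with a word-list check;
- only one value is remembered per entity type.

Even so, it shows what session memory buys you.

```python
import re
from typing import Optional


class SessionMemory:
    """Remembers the most recent intent and entity values within one conversation."""

    def __init__(self) -> None:
        self.last_intent: Optional[str] = None
        self.entities: dict = {}

    def update(self, intent: Optional[str], entities: dict) -> None:
        if intent is not None:
            self.last_intent = intent
        self.entities.update(entities)

    def resolve(self, text: str, entities: dict) -> dict:
        """Fill in a missing order number when the user refers back with a pronoun."""
        refers_back = re.search(r"\b(it|that|this one)\b", text.lower())
        if "order_id" not in entities and refers_back and "order_id" in self.entities:
            return {**entities, "order_id": self.entities["order_id"]}
        return entities


memory = SessionMemory()
memory.update("order_status", {"order_id": "12345"})    # "Where is order 12345?"
print(memory.resolve("Actually, just cancel it", {}))   # {'order_id': '12345'}
```

The word-boundary pattern keeps words like “item” from being mistaken for “it”.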

Integration Complexity

Connecting a chatbot to tools like CRMs, databases, or APIs often requires extra development work and careful attention to data security, permissions, and sync processes. Without proper integration, the bot won’t be able to perform useful tasks in real business scenarios.

At SCAND, we don’t just build software; we build long-term technology partnerships. We bring over 20 years of experience and deep roots in AI, deep learning, and natural language processing. We design chatbots that do more than answer questions: they understand your users, support your teams, and improve customer experiences. Whether you’re just starting out or scaling fast, we’re the AI chatbot development company that can help you turn automation into real business value. Let’s create something your customers will love.

Frequently Asked Questions (FAQs)

What’s the difference between NLP and AI chatbots?

Think of AI (Artificial Intelligence) as the big umbrella: it covers all kinds of smart technologies that try to mimic human thinking.

NLP (Natural Language Processing) is one specific part of AI. It focuses on how machines understand and work with human language, whether written or spoken. So, while all NLP is AI, not all AI is NLP.

Are NLP chatbots the same as LLMs?

Not exactly, though they’re closely related. LLMs (Large Language Models), like GPT, are the engine behind many advanced NLP chatbots. An NLP chatbot may be powered by an LLM, which helps it generate replies, understand complex messages, and even match your tone.

But not all NLP bots use LLMs. Some rely on simpler models focused on specific tasks. So it’s more like this: some NLP chatbots are built on LLMs, but not all of them.

How do NLP bots learn from users?

They learn the way people do: from experience. Every time users interact with a chatbot, the system can collect feedback. Did the bot understand the request? Was the answer helpful?

Over time, developers (and sometimes the bots themselves) analyze these patterns, retrain the model with real examples, and fine-tune it to make future conversations smoother. It’s a kind of feedback loop: the more you talk to it, the smarter it gets (assuming it’s set up to learn, of course).

Is NLP only for text, or also for voice?

It’s not limited to text at all. NLP works with voice input, too. In fact, many smart assistants, like Alexa or Siri, use NLP to understand what you’re saying and figure out how to respond.

The process usually starts with speech recognition, which turns your voice into text. Then NLP kicks in to interpret the message. So yes, NLP works just fine with voice, and it’s a big part of modern voice tech.

How much does it cost to build an NLP chatbot?

If you’re building a basic chatbot on an off-the-shelf platform, the cost can be fairly low, especially if you handle setup in-house. A custom AI-powered assistant is a bigger investment: one that understands natural language, remembers past conversations, and integrates with your tools. Costs vary based on complexity, training data, integrations, and ongoing support.