You’ve most likely heard of the Bored Ape Yacht Membership assortment, the place the worth of a single NFT can attain tons of of 1000’s of {dollars}. However what when you may personal only a fraction of such a token, investing not $200,000 however, say, $200? That is precisely what fractional NFTs provide — the chance to purchase a “piece” of an costly digital asset, divided into a number of shares, and earn alongside different buyers if its worth will increase.

What Are Fractional NFTs and Why Are They Changing into In style

An NFT (Non-Fungible Token) is a novel digital asset on the blockchain that verifies possession of a selected merchandise, comparable to a picture, music video, in-game merchandise, or digital piece of land. The problem is that the rarest and most beneficial NFTs can price tens and even tons of of 1000’s of {dollars}, making them unaffordable for most individuals.

The issue is solved by fractional NFTs. The know-how permits an NFT to be fractionalized into many smaller tokens, representing a share of that asset. An investor can purchase solely part of a token and revel in its value development in proportion to their share. It really works similar to shares in a enterprise: you don’t personal the entire enterprise; nonetheless, you could have rights to a few of its worth and potential income.

The Function of Fractional Possession within the Progress of the NFT Market

Fractional possession — the place a number of folks share possession of an NFT — is already serving to to develop the NFT market — it’s opening up digital property to individuals who couldn’t beforehand put money into high-value NFTs and likewise rising buying and selling exercise throughout the area, as fractional shares are extra sensible to purchase and promote.

As well as, fractional possession permits for the event of separate platforms to commerce fractional NFTs, giving buyers user-friendly instruments to find, commerce, and handle their possession of every asset. It additionally stimulates technological innovation that helps fractionalization and collective funding methods broaden.

As the costs of premium NFTs proceed to extend, fractional possession will develop in reputation, and these platforms will change into an enormous a part of the NFT ecosystem.

How Fractional NFTs Work

Once we speak about fractional NFTs, we imply a know-how that permits a single digital asset to be divided amongst a number of house owners. The precept works very similar to shopping for shares of an organization or co-owning actual property.

As an alternative of 1 investor paying tons of of 1000’s of {dollars} for an unique NFT, its worth could be break up into many components, every confirming possession of a share of the asset.

Ideas of Fractional Possession

Right here’s how the method works: the NFT proprietor decides to separate their token and deploys a particular good contract on the blockchain. This contract locks the unique NFT and points a selected variety of new tokens, for instance, 1,000 items. Every of those tokens represents a fraction of the underlying asset and could be freely purchased or bought on NFT marketplaces.

When an investor purchases a number of of those fractions, they change into a co-owner of the NFT. If the worth of the unique token will increase, the worth of every fraction additionally grows.

Generally, fractional holders acquire voting rights to take part in selections concerning the asset — for example, whether or not to promote the complete NFT or proceed holding it. When the NFT is ultimately bought, income are distributed amongst all fractional house owners in proportion to their shares.

This mannequin considerably simplifies participation in high-value offers, will increase market liquidity, and opens new funding alternatives. As an alternative of ready for a single rich purchaser, an NFT proprietor can shortly promote the asset in components, whereas buyers get the possibility to entry premium digital property with minimal investments.

NFT fractionalization basically transforms uncommon tokens into extra accessible and simply tradable digital property, broadening market participation and fueling business development.

Key Benefits and Advantages of Fractional NFTs

The advantages of fractional NFTs affect three key teams: buyers, creators and collectors, and the NFT marketplaces themselves.

The desk under summarizes the important thing advantages of fractional possession and makes the market accessible, liquid, and interesting for all individuals.

| For whom |

Advantages of fractional possession of NFTs |

| Traders |

– Alternative to put money into costly NFTs with small quantities.

– Decrease dangers via portfolio diversification.

– Quick shopping for and promoting of fractions on marketplaces.

– Entry to premium collections and property that had been beforehand unattainable.

|

| Creators and collectors |

– Elevated liquidity and sooner sale of high-value NFTs.

– Attracting a bigger pool of patrons.

– Capability to monetize an asset in fractions with out promoting it solely.

– New fashions of viewers engagement, comparable to collective possession.

|

| NFT marketplaces |

– Expanded person base due to inexpensive fractional tokens.

– Progress in buying and selling volumes and secondary market exercise.

– Emergence of latest funding instruments and platform use circumstances.

– Strengthened belief and engagement throughout the NFT neighborhood.

|

Benefits of fractional possession of NFTs

NFT Market Overview and Progress of the Fractional NFT Market

In line with a number one supply, The Enterprise Analysis Firm, the worldwide NFT market was valued at $43.08 billion in 2024 and will likely be price $247.41 billion by 2029, or develop at a CAGR of roughly 41.9%.

This fast growth is fueled by the changeover from the market being primarily speculative buying and selling to extra utilitarian and useful use circumstances. In-game property, metaverse properties, collectibles, branded tokens, and so forth. This places NFTs firmly within the digital economic system.

Why Extra and Extra Individuals Need to Put money into Fractional NFTs

An growing variety of buyers are turning to fractional NFTs as a result of one of these possession opens up high-value digital property that might not be afforded earlier than, and makes investments extra versatile and simpler to handle. These are the primary the reason why fractional NFTs have gotten a sexy funding instrument for the plenty:

- Accessibility: As an alternative of shopping for an NFT price tens or tons of of 1000’s of {dollars}, buyers can buy a fraction for simply tons of and even tens of {dollars}.

- Liquidity and adaptability: Promoting a part of an asset is faster and simpler than promoting the entire NFT without delay.

- Having a voice in huge selections: Shareholders can have a say in vital selections, comparable to whether or not to promote an NFT or maintain an asset.

- Extra methods to take a position: Fractionalization permits for constructing a group of NFT shares as an alternative of placing all funds right into a single token.

What Is a Fractional NFT Market?

A fractional NFT market is a digital buying and selling platform particularly designed for operations with fractional possession of NFTs.

On such a platform, the proprietor of a high-value token can “break up” it utilizing a good contract into tons of and even 1000’s of components, issued as fungible tokens (generally ERC-20). Traders can then freely purchase and promote these fractions, gaining partial possession of the unique NFT.

Not like an ordinary NFT market, the place the transaction mannequin is “one token — one purchaser,” a fractional market permits dozens and even tons of of individuals to co-own a single NFT, take part in its potential worth development, and have a say in selections concerning its administration.

The Function of the Platform in Simplifying Fractional NFT Possession and Buying and selling Operations

And not using a specialised market, the method of fractionalizing an NFT could be difficult and insecure, requiring handbook token issuance, possession monitoring, and purchaser searches. A fractional NFT market automates these processes by offering:

- Sensible contract deployment: The proprietor uploads an NFT to the platform, chooses what number of fractions to create, and the contract points the corresponding variety of tokens.

- Safe storage: The unique NFT is locked in a wise contract whereas its fractions are in circulation.

- Clear buying and selling: Traders can purchase and promote fractions at any time, similar to common cryptocurrency tokens.

- Collective decision-making: Fraction holders can vote on what to do with the NFT — promote it solely, hire it out, or use it within the metaverse.

- Entry to premium property: Even high-priced NFTs change into inexpensive to a wider viewers due to decrease entry prices enabled by fractionalization.

Selecting a Fractional NFT Market Improvement Firm

When choosing a workforce to construct your NFT platform, it’s vital to take a look at their expertise.

Search for builders with a robust background in creating decentralized apps and buying and selling platforms — NFT growth goes far past merely writing code. It requires a deep understanding of good contracts, tokenomics, fractional possession, and the flexibility to work seamlessly throughout a number of blockchains.

An organization that has efficiently delivered NFT and DeFi initiatives earlier than is much extra doubtless to supply a safe, reliable resolution tailor-made to your imaginative and prescient.

It’s additionally essential to accomplice with professionals who know the ins and outs of NFT growth. Errors in good contract design or weak safety practices can price buyers their funds and critically harm your platform’s repute. Expert groups assist you keep away from these dangers, providing smarter methods to scale, add performance, and ship a easy person expertise.

Earlier than committing to a partnership, take time to debate the necessities. Ask what tech stack they’ll use and whether or not it really works along with your most well-liked blockchain. Make clear what safety measures and contract audits they carry out, and the way they plan to guard person information and transactions.

Go over timelines, prices, post-launch help, and future function upgrades. A dependable signal you’re speaking to the appropriate folks is a robust portfolio with actual examples of profitable NFT initiatives they’ve already constructed.

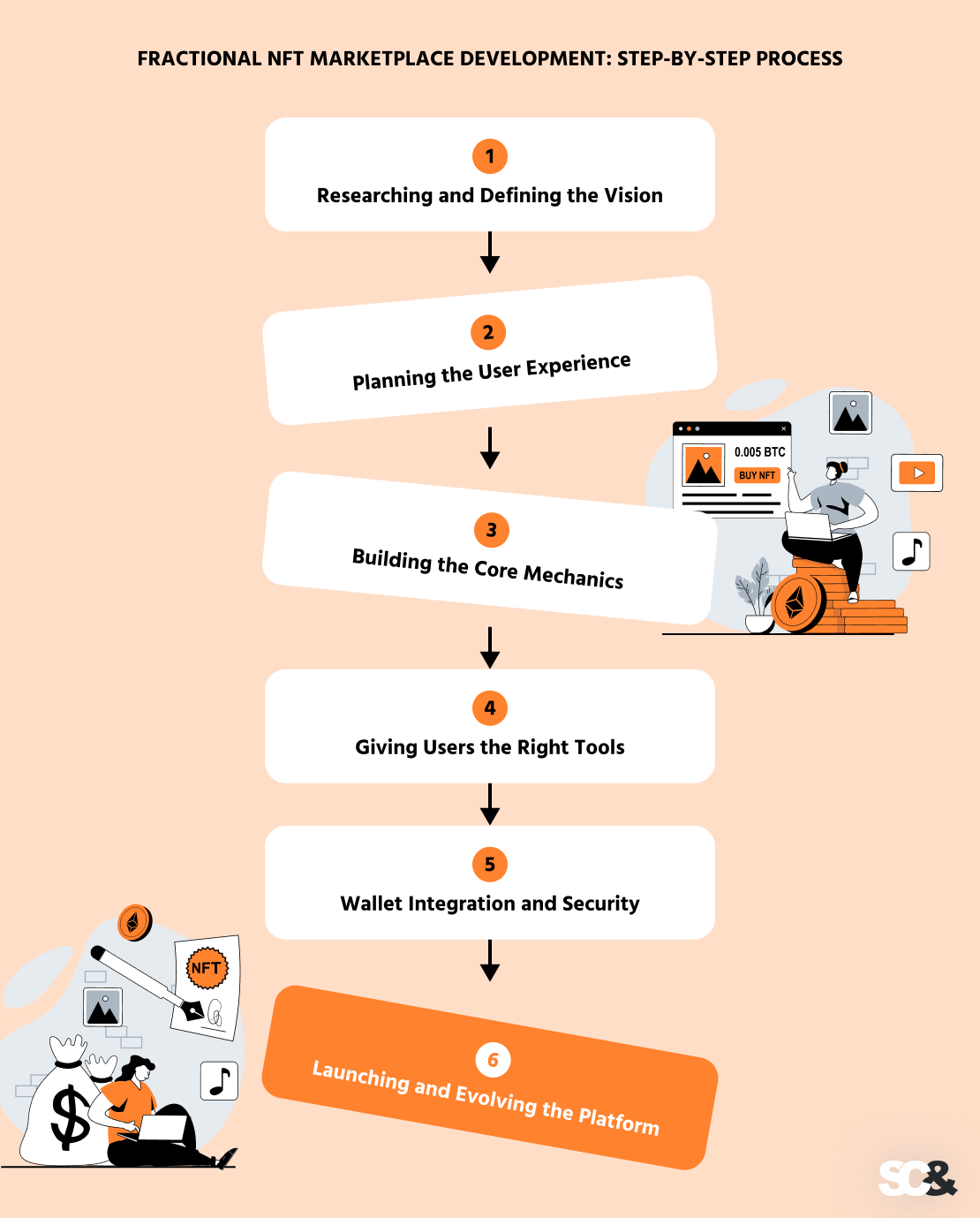

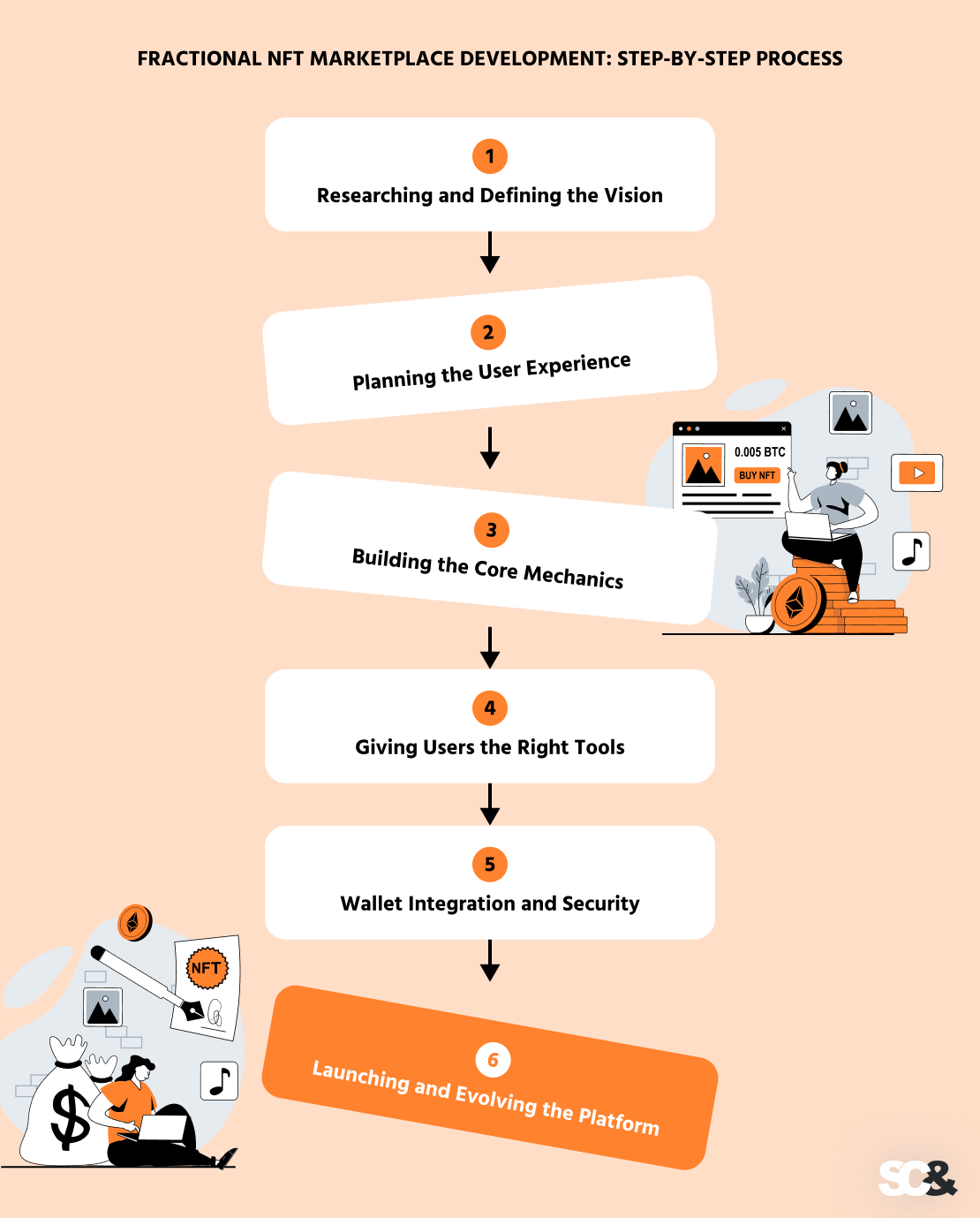

Fractional NFT Market Improvement: Step-by-Step Course of

Constructing a fractional NFT market isn’t nearly writing code — it’s about turning a imaginative and prescient right into a platform folks really need to use. The method takes planning, teamwork, and a transparent concept of what collectors, buyers, and creators want. Right here’s the way it often unfolds:

1. Researching and Defining the Imaginative and prescient

Every little thing begins with understanding the market and your viewers. Who will use the platform? What issues will it resolve? Which blockchain will present the most effective stability between pace, charges, and ecosystem help? These early selections form every thing that follows.

2. Planning the Consumer Expertise

The following step is designing how {the marketplace} will work. From issuing fractional NFTs to operating auctions, each element issues. Early mockups deal with simplicity — so even somebody utterly new to NFTs can determine issues out in minutes.

3. Constructing the Core Mechanics

That is the place good contracts are available. Builders create the logic for fractionalizing tokens, dealing with trades, and managing auctions. The objective? A safe, clear, and automatic course of that works flawlessly.

4. Giving Customers the Proper Instruments

Individuals want a straightforward method to add NFTs, break up them into shares, commerce fractions, and monitor their investments. The interface is designed to really feel pure and intuitive, eradicating any pointless complexity.

5. Pockets Integration and Safety

market simply connects with crypto wallets like MetaMask or WalletConnect. Safety is a prime precedence — contracts should be audited, comprise no dangers, and shield each funds and information.

6. Launching and Evolving the Platform

After thorough testing, the platform goes dwell. However that’s only the start. Steady updates, new options, and dependable help maintain {the marketplace} aggressive because it grows.

Why Accomplice with SCAND for Fractional NFT Market Improvement

Selecting an skilled growth workforce is a key consider constructing a dependable and in-demand platform for fractional NFT buying and selling. SCAND has been working within the international IT marketplace for over 25 years, offering purchasers with modern and technologically strong options.

Our workforce consists of greater than 250 extremely expert professionals, and through the years, we’ve efficiently delivered 900+ initiatives worldwide, together with blockchain- and NFT-based options.

SCAND’s experience in NFT market growth covers the complete venture lifecycle — from structure design and good contract growth to integration with varied blockchains and cryptocurrency wallets. We’ve got in-depth data of fractional NFT growth and perceive easy methods to set up collective possession of digital property safely and transparently.

Each venture undergoes thorough auditing to make sure reliability, robust safety measures, and person belief in your platform.

We create fractional NFT marketplaces which might be scalable, helpful, and straightforward to make use of in an effort to broaden your viewers, increase asset liquidity, and attract new buyers. Your platform can adapt to altering market developments due to our adaptable structure, which makes it doable to combine further options like auctions, DAO-based voting techniques, fractional possession administration instruments, and DeFi interactions.

You get greater than merely a technical service supplier while you work with SCAND; you get a strategic accomplice with many years of expertise and worldwide data. We’ll help you in growing a multipurpose fractional NFT market that can make what you are promoting stand out from the competitors and allow you to totally make the most of fractional NFT know-how.

Know-how Stack for Fractionalized NFT Market Improvement

At SCAND, we select a technological stack based mostly on the distinctive necessities of every consumer to create reliable, safe, and scalable NFT marketplaces. We’re in a position to design adaptable and absolutely useful platforms for NFT buying and selling and administration as a result of our options are based mostly on established blockchain platforms and modern growth instruments.

- Blockchain networks: We work with Ethereum, Polygon, Binance Sensible Chain, Solana, and different networks, choosing the optimum infrastructure based mostly on transaction pace, gasoline charges, and future scalability necessities.

- Sensible contracts: Our consultants design and audit good contracts to make sure safe NFT fractionalization, correct administration of fractional possession, automated revenue distribution, and clear transaction processing.

- APIs and integrations: We combine cryptocurrency wallets (MetaMask, WalletConnect, and others), cost gateways, analytics instruments, and exterior NFT marketplaces to broaden your platform’s capabilities.

- Safety and help: We implement superior information safety and transaction safety protocols, design a modular structure for seamless scalability, and supply complete technical help at each stage of your venture.

Value and Timeline of Fractional NFT Market Improvement

The price and timeline for growing a fractional NFT market rely upon a number of key components that instantly affect the complexity of implementation and venture supply pace:

- Characteristic complexity: Primary platforms with easy fractionalization and buying and selling features take considerably much less time to develop than superior options with DAO mechanisms, auctions, DeFi integrations, and cross-chain operations.

- Blockchain choice: Totally different blockchains fluctuate in transaction pace, gasoline charges, and technical capabilities, which may have an effect on each growth prices and long-term upkeep bills.

- Third-party integrations: Connecting exterior cost gateways, NFT marketplaces, analytics instruments, and APIs provides growth time and will increase the general funds.

- Consumer-specific necessities: Customized UI/UX design, branded options, and distinctive possession and revenue distribution mechanics require further assets and tailor-made growth efforts.

At SCAND, we fastidiously analyze every of those components throughout the venture’s discovery part to supply correct price estimates and real looking supply timelines. This method permits us to optimize bills, choose essentially the most appropriate applied sciences, and guarantee a quick, safe, and scalable launch on your fractional NFT market.

Way forward for Fractionalized NFTs and NFT Marketplaces

Fractionalized NFT know-how has already begun to vary the digital asset business, however there may be nonetheless a lot extra potential to uncover. Sooner or later, we will anticipate this mannequin to develop shortly all through varied sectors. In artwork and collectibles, fractionalization will diversify possession of uncommon and invaluable digital property amongst an increasing number of folks. Fractionalization will result in extra gathering, much less greed.

In metaverses and gaming initiatives, co-ownership of digital land plots and distinctive in-game objects will allow gamers and buyers to pool their assets for larger-scale initiatives.

Within the monetary sector and the realm of real-world property, fractional NFTs may function a bridge between conventional investments and the blockchain economic system, enabling the tokenization of actual property, revenue rights, and even firm shares.

Fractional NFT platforms will play a vital position within the mass adoption of NFTs as they eradicate the primary barrier to entry — the excessive price of premium property. With these platforms, buyers will be capable to buy fractions of top-tier NFTs as simply as they purchase cryptocurrency or shares immediately.

Their performance will proceed to broaden, providing options comparable to lending towards NFT shares, automated administration of funding swimming pools, and integration with DeFi instruments. This may open up new incomes alternatives and improve the general liquidity of the NFT market.

Fractional NFT possession is progressively changing into a full-fledged funding instrument. As an alternative of risking massive sums of cash on a single costly NFT, buyers will be capable to construct diversified portfolios consisting of fractions from varied collections and initiatives, decreasing dangers whereas growing potential returns. This mannequin paves the best way for the emergence of latest monetary services and products on the intersection of NFTs and conventional funding markets.

Conclusion

In case you are planning to launch your personal platform for buying and selling fractional NFT shares or need to combine fractional possession know-how into an present product, we’re open to collaboration and may present end-to-end growth companies — from concept evaluation and structure design to platform launch and ongoing help.

Accomplice with SCAND to show your idea into an environment friendly and worthwhile digital product able to securing a robust place within the NFT market.