Despite the growth of the information security market, traditional cybersecurity has long been based on perimeter defenses. The idea was simple: if you’re inside the network (whether through a VPN or physical access), you’re trusted.

Firewalls, intrusion prevention systems, and virtual private networks were all built around this idea of a secure inner world separated from an untrusted outer world.

But as companies moved to cloud-first infrastructure, hybrid work, and complex partner ecosystems, these traditional security perimeters began to break down. An end user could be sitting in a home office, a coffee shop, or halfway around the world and still need secure access to enterprise systems.

At the same time, cyberattacks became more sophisticated, exploiting vulnerabilities from the inside once perimeter security had been compromised.

What Is Zero Trust Architecture?

Zero Trust is a security framework built on one simple principle: never trust, always verify.

Unlike earlier models that trust users and devices by default once they are inside the network, Zero Trust evaluates every access request: where it is coming from, what device is used, and who the user is.

It relies on the assumption that nothing in the system is entirely secure by design, and it continually authenticates access using multiple dynamic factors.

| Traditional Security | Zero Trust Architecture |
| --- | --- |
| Trusts internal users and devices | Trusts no one by default |
| Focuses on perimeter security | Focuses on identity and access control |
| Flat internal network | Segmented network with least privilege access |
| Occasional security checks | Continuous verification and monitoring |

How ZTA Differs from Traditional Security

Core Principles of Zero Trust Architecture

A Zero Trust network relies on a well-defined, practical set of principles that maintain the system’s security posture regardless of where users or devices connect from.

1. Identity Verification

Before anyone can access anything, their identity must be verified. It’s no longer just a password; it may include multi-factor authentication (MFA), device authentication, and even biometric authentication.

The goal is to ensure that the person (or device) trying to connect is really who they say they are.
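As a minimal sketch of what "more than a password" can look like, the snippet below checks a password hash and then a time-based one-time code (TOTP, RFC 6238), which is one common MFA factor. The function names, the PBKDF2 round count, and the 30-second time step are illustrative assumptions, not a description of any particular product.

```python
import hashlib
import hmac
import struct
import time
from typing import Optional

PBKDF2_ROUNDS = 100_000  # assumed work factor for this sketch


def hotp(secret: bytes, counter: int, digits: int = 6) -> str:
    """HMAC-based one-time password as described in RFC 4226."""
    digest = hmac.new(secret, struct.pack(">Q", counter), hashlib.sha1).digest()
    offset = digest[-1] & 0x0F  # dynamic truncation offset
    code = struct.unpack(">I", digest[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(code % 10 ** digits).zfill(digits)


def totp(secret: bytes, at: Optional[float] = None, step: int = 30) -> str:
    """Time-based one-time password (RFC 6238) built on top of HOTP."""
    now = time.time() if at is None else at
    return hotp(secret, int(now // step))


def verify_identity(password: str, stored_hash: bytes, salt: bytes,
                    submitted_code: str, secret: bytes,
                    at: Optional[float] = None) -> bool:
    """Both factors must pass: something you know and something you have."""
    candidate = hashlib.pbkdf2_hmac("sha256", password.encode(), salt, PBKDF2_ROUNDS)
    password_ok = hmac.compare_digest(candidate, stored_hash)
    code_ok = hmac.compare_digest(submitted_code.encode(), totp(secret, at).encode())
    return password_ok and code_ok
```

A production system would normally delegate this to an identity provider and would also tolerate small clock drift; the sketch only shows that a correct password alone is not enough.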

2. Least Privilege Access

Once a user has been authenticated, they are given access only to the specific data or systems they need, and nothing more. This limits the potential damage if an account is ever compromised.

This way, users hold only enough privileges to get their work done, with no extra permissions.
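One way to picture least privilege is a deny-by-default permission table: anything not explicitly granted is refused. The roles, resources, and actions below are hypothetical names chosen for illustration.

```python
# Hypothetical role-to-permission table; anything not listed is denied.
ROLE_PERMISSIONS = {
    "financial_analyst": {("reports", "read")},
    "finance_admin": {("reports", "read"), ("reports", "write"), ("settings", "write")},
}


def is_allowed(role: str, resource: str, action: str) -> bool:
    """Deny by default: unknown roles and unlisted permissions are refused."""
    return (resource, action) in ROLE_PERMISSIONS.get(role, frozenset())
```

Because the lookup falls back to an empty set, a new role has no access at all until someone grants it something explicitly.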

3. Continuous Monitoring

A Zero Trust security model doesn’t stop checking after the first login. It keeps watching user behavior, device state, location, and other signals in real time. If something looks suspicious, the system can shut down access or trigger a new check.
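The idea of re-evaluating a session after login can be sketched as a simple risk score. The signals, weights, and thresholds here are assumptions picked for readability; a real deployment would tune them from its own telemetry.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SessionSignals:
    device_compliant: bool
    new_location: bool
    failed_attempts: int
    bulk_download: bool


def risk_score(signals: SessionSignals) -> int:
    """Add up illustrative weights for each suspicious signal."""
    score = 0
    if not signals.device_compliant:
        score += 50
    if signals.new_location:
        score += 20
    score += min(signals.failed_attempts, 3) * 10  # cap the contribution at 30
    if signals.bulk_download:
        score += 30
    return score


def evaluate_session(signals: SessionSignals,
                     step_up_at: int = 30, revoke_at: int = 60) -> str:
    """Return 'allow', 'step_up' (ask for a new check), or 'revoke'."""
    score = risk_score(signals)
    if score >= revoke_at:
        return "revoke"
    if score >= step_up_at:
        return "step_up"
    return "allow"
```

The function would be called repeatedly during a session, not just once at login, so a change in any signal can trigger a fresh check or cut off access.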

4. Microsegmentation

Instead of having one big network with everything connected, Zero Trust separates things into small segments. Each segment has its own set of controls and rules. So even if an intruder gets into one segment, they can’t easily move to another one.
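Microsegmentation can be thought of as an explicit allow-list of flows between segments, with everything else blocked. The segment names and ports in this sketch are hypothetical.

```python
from typing import NamedTuple


class Flow(NamedTuple):
    source: str
    destination: str
    port: int


# Hypothetical allow-list: only these segment-to-segment flows are permitted.
ALLOWED_FLOWS = frozenset({
    Flow("web", "app", 8443),
    Flow("app", "database", 5432),
})


def is_flow_allowed(source: str, destination: str, port: int) -> bool:
    """Any flow that is not explicitly listed is blocked."""
    return Flow(source, destination, port) in ALLOWED_FLOWS
```

With this table, a compromised host in the `web` segment cannot open a connection straight to `database`; it would first have to compromise something in `app`.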

How Zero Trust Security Architecture Works in Practice

Zero Trust isn’t only a security principle; it’s a practical way of protecting systems. Let’s see how it works in the real world with an example.

Example: A Remote Employee Accessing a Company System

Let’s take the case of a remote worker who needs to log in to the organization’s internal financial application.

The employee tries to log in with their username and password.

- User and Device Are Verified

Before letting them in, the system checks:

- Is this really the right person? (using, for instance, multi-factor authentication)

- Is the device safe and approved? (e.g., up-to-date software, no signs of tampering)

- Is the request coming from a secure location?

These checks are handled by identity and access management and software-defined perimeter tools.

- Access Is Granted, But Only What’s Needed

If everything looks good, access is allowed, but only to what the employee needs. A financial analyst, for example, can view reports but not modify system settings. This is the least privilege principle.

As the user works, their behavior is tracked in real time by a security information and event management (SIEM) system. If something suspicious happens (like attempting to download large files or access restricted areas), access can be denied or audited.

Even if someone breaks in, they won’t get far. Microsegmentation places each part of the system in an isolated environment, so attackers cannot simply move from one piece to another.
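The login flow in this example can be sketched as a single decision function that runs the checks in order and explains any refusal. The field names, the grant table, and the reason strings are assumptions made for illustration; in practice these checks are spread across IAM, SDP, and SIEM tooling.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AccessRequest:
    user: str
    role: str
    mfa_passed: bool
    device_compliant: bool
    location_trusted: bool
    resource: str
    action: str


# Hypothetical grants: the analyst may view reports and nothing else.
GRANTS = {"financial_analyst": {("reports", "view")}}


def decide(request: AccessRequest) -> tuple[bool, str]:
    """Run the checks from the example in order; the first failure wins."""
    if not request.mfa_passed:
        return False, "identity not verified"
    if not request.device_compliant:
        return False, "device not compliant"
    if not request.location_trusted:
        return False, "untrusted location"
    if (request.resource, request.action) not in GRANTS.get(request.role, set()):
        return False, "not permitted for role"
    return True, "granted"
```

Returning a reason alongside the decision makes it easier to log refusals for later audit, which is the kind of event a SIEM system would consume.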

Tools That Make It Work

As seen above, Zero Trust access is built on a set of integrated tools that talk to one another to verify users, secure the network, prevent breaches, and monitor activity in real time:

- IAM (Identity and Access Management): Manages user logins and permissions.

- SDP (Software-Defined Perimeter): Grants or blocks app access depending on who users are and where they are connecting from.

- MFA (Multi-Factor Authentication): Adds extra verification steps such as a text code or app approval.

- SIEM (Security Information and Event Management): Monitors for suspicious behavior in real time.

Benefits of Zero Trust for Enterprise Systems

Two years ago, implementing a Zero Trust architecture was a priority for a majority of companies.

By 2032, the total Zero Trust market is estimated to reach around $133 billion, up from around $32 billion in 2023. But what are the real, tangible benefits for modern enterprise networks?

One of the greatest benefits is that Zero Trust network access shrinks the attack surface. Because no user and no device is trusted by default, every access request must be authenticated.

That means it’s much harder for attackers to make their way through the system, even if they do manage to get in.

Also, ZTA gives companies more control over user access. Instead of relying only on static user roles, Zero Trust takes into account the full context (i.e., the person’s identity, device, location, and behavior) to decide what they should be able to see or do.

Third, Zero Trust is well suited to remote and hybrid work. Employees can access enterprise systems from any location, without using a VPN or being in the office.

Finally, Zero Trust provides better visibility across the system. Because it is always focused on the user and the device, security teams can identify potentially malicious activity early and react quickly.

Examples of Zero Trust Security Solutions in Action

Many prominent companies and sectors use Zero Trust Architecture to improve security while allowing remote access. These examples illustrate how the practice works in real life.

Google

Google’s BeyondCorp is quite possibly the best-known example. In response to a major cyberattack in 2009, Google moved away from relying on VPNs and began working on an architecture in which access is based on user context and identity, not on network location.

That enabled employees to work securely from anywhere without having to be on a trusted internal network. BeyondCorp was one of the first real-world Zero Trust architectures.

Microsoft

Microsoft also uses Zero Trust across its Microsoft 365 and Azure offerings. It relies on strong identity checks, continuous monitoring, and tools such as Conditional Access and Defender for Identity to manage access and stop threats in real time. Users only get access to what they need, and the system watches for unusual activity around the clock.

Other Organizations

Many less prominent financial, healthcare, and government organizations are also adopting Zero Trust. For example, healthcare facilities control access to patient records by role and device security.

Banks segment their networks so that if one segment is attacked, the attack cannot easily spread to the others. This protects sensitive data without interrupting day-to-day processes.

Challenges and Considerations of Zero Trust Systems

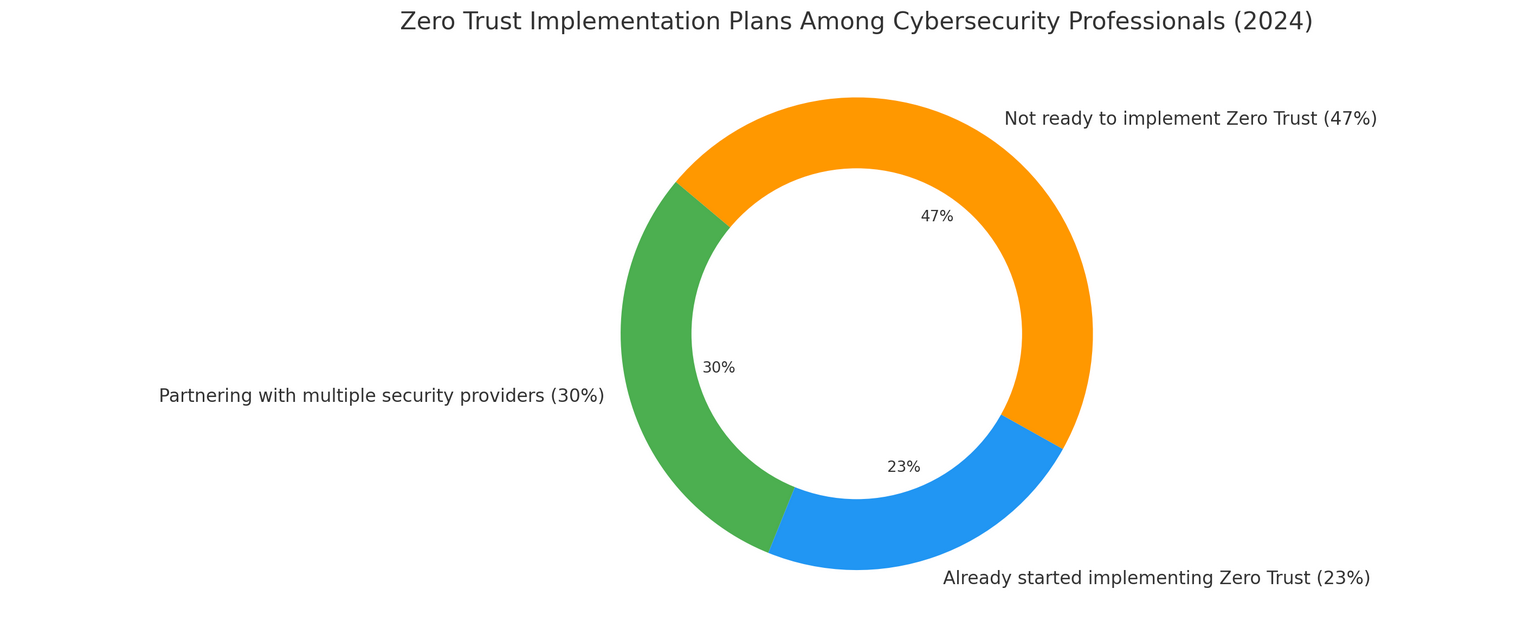

In 2024, when asked about their plans to adopt Zero Trust, 30% of respondents (all cybersecurity professionals) said they were working with multiple security vendors to develop a plan to adopt Zero Trust and reduce security risks.

Another 23% of respondents stated that they had already started implementing Zero Trust. By contrast, 47% of respondents said they were not yet ready to adopt Zero Trust. But why?

Zero Trust Implementation Among Cybersecurity Professionals in 2024

First, Zero Trust often means rethinking how your systems are built. Traditional security models were based on trusting everything inside the network.

With Zero Trust, that model changes completely. You’ll need to redesign how users, devices, and applications connect, which may include reorganizing access rules, segmenting the network, and updating older systems.

Second, you’ll likely need to invest in new tools and processes. Zero Trust usually involves adding identity and access management, multi-factor authentication, endpoint protection, monitoring tools, and software-defined perimeter solutions. These tools must work together, which can take time and planning.

Another point is that a Zero Trust environment isn’t an install-and-forget configuration. It requires regular updates: access policies and security measures must be adjusted when teams grow, roles shift, or new tools are added.

It also requires good communication across business units, security teams, and IT to make sure the system works well for all parties.

Sometimes, companies also face resistance to change. Users have probably gotten used to open access or simplified login processes, so stricter controls may initially feel like a nuisance.

That’s why it pays to roll out Zero Trust policies in stages, clearly state the reasons for doing so, and provide training for your staff.

How to Implement Zero Trust Architecture

It’s fair to say that Zero Trust isn’t an instant fix; it’s an ongoing process. In 2024, most companies had already started adopting a Zero Trust strategy or working with security vendors to create a roadmap. But how do you begin the process in a way that actually works?

The main thing to remember before implementing Zero Trust is that it doesn’t happen all at once; it’s a gradual process that takes careful planning and the right tools.

The first step is to audit your current environment. That means identifying all users, endpoints, applications, and data in your organization.

From there, you can begin to put stricter identity and access controls in place using solutions such as identity and access management and multi-factor authentication.

Next, implement the least privilege principle: users should only have the access they actually need. No more. At the same time, deploy microsegmentation to compartmentalize the most critical parts of your system, so even if something goes wrong, it’s easier to contain the issue.

As you move forward, it’s important to monitor activity in real time. Security information and event management systems can help you:

- Spot unusual user behavior before it becomes a problem.

- Catch threats early and respond quickly.

- Update access controls automatically based on changing risk levels.
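The last point, adjusting access controls as risk changes, can be sketched as a lookup from a risk level to a stricter or looser policy. The alert thresholds, risk levels, and policy fields below are hypothetical; they only illustrate the shape of such a rule.

```python
from dataclasses import dataclass
from enum import Enum


class RiskLevel(Enum):
    LOW = 1
    MEDIUM = 2
    HIGH = 3


@dataclass(frozen=True)
class AccessPolicy:
    session_minutes: int
    mfa_every_login: bool
    sensitive_data_allowed: bool


# Hypothetical policies: controls tighten as the risk level rises.
POLICY_BY_RISK = {
    RiskLevel.LOW: AccessPolicy(480, False, True),
    RiskLevel.MEDIUM: AccessPolicy(60, True, True),
    RiskLevel.HIGH: AccessPolicy(15, True, False),
}


def risk_level_from_alerts(open_alerts: int) -> RiskLevel:
    """Map a count of open monitoring alerts to a risk level (assumed thresholds)."""
    if open_alerts >= 3:
        return RiskLevel.HIGH
    if open_alerts >= 1:
        return RiskLevel.MEDIUM
    return RiskLevel.LOW


def current_policy(open_alerts: int) -> AccessPolicy:
    """Pick the access policy that matches the current risk level."""
    return POLICY_BY_RISK[risk_level_from_alerts(open_alerts)]
```

Keeping the policies in a table means the response to rising risk is reviewed and agreed in advance, so the automated change is predictable.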

We recognize that building a comprehensive Zero Trust solution may seem overwhelming, especially if your company lacks the in-house resources or time to do it on your own.

That’s where SCAND comes in. We help organizations develop and implement Zero Trust software tailored to their exact needs, whether you’re starting a greenfield project or upgrading an existing implementation.

In projects involving remote access, sensitive data, or hybrid environments, we:

- Use IAM frameworks to enforce least privilege access.

- Integrate MFA and SDP tools for identity- and context-aware authentication.

- Design infrastructure with microsegmentation, container isolation, and secure APIs.

- Continuously monitor and improve security policies using SIEM and behavior analytics tools.

- Apply secure DevOps practices that align with the Zero Trust approach, from development to deployment.

- Embed generative AI in cybersecurity to further improve protection.

If you are rethinking your enterprise architecture or moving to the cloud, now is the perfect moment to learn about Zero Trust principles. Get in touch with SCAND to find out how we can help you design robust security systems that help prevent attacks.

Frequently Asked Questions (FAQs)

Is Zero Trust only for large enterprises?

No. Any organization can adopt and benefit from Zero Trust, especially if it needs cloud security to support remote work.

Do I need to get rid of my VPN and other security tools before implementing a Zero Trust system?

Not necessarily, but VPNs often represent a single point of trust. Zero Trust works as a more dynamic and secure alternative.

How long does it take to implement ZTA?

It depends on your security infrastructure, but most organizations adopt it gradually, starting with identity and access security controls.

Can SCAND help implement Zero Trust security systems?

Absolutely. Whether you want to start from scratch, modernize traditional perimeter-based security, or extend an existing Zero Trust deployment, we can offer the right approach for your business.