At its Data + AI Summit, Databricks announced several new tools and platforms designed to better support enterprise customers who are trying to leverage their data to create company-specific AI applications and agents.

Lakebase

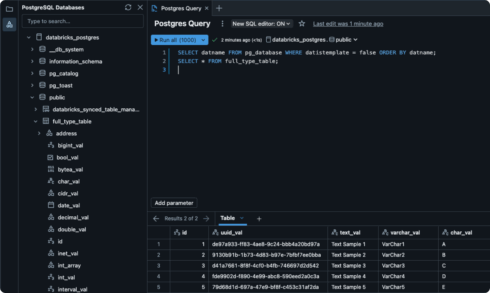

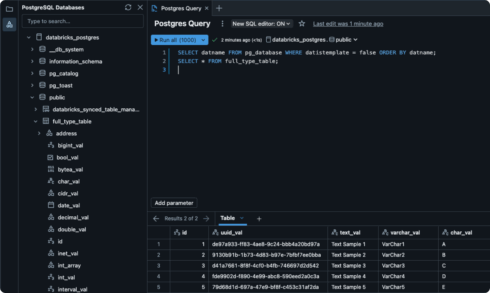

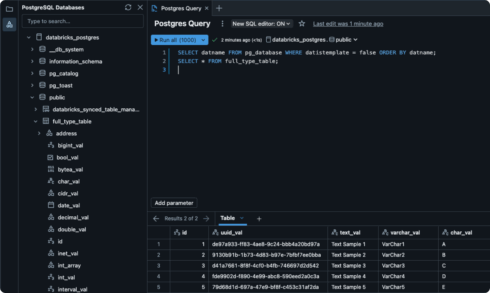

Lakebase is a managed Postgres database designed for running AI apps and agents. It adds an operational database layer to Databricks’ Data Intelligence Platform.

According to the company, operational databases are an important foundation for modern applications, but they’re based on an outdated architecture that’s better suited to slowly changing apps, which is no longer the reality, especially with the introduction of AI.

Lakebase attempts to solve this problem by bringing continuous autoscaling to operational databases to support agent workloads and unify operational and analytical data.

According to Databricks, the key benefits of Lakebase are that it separates compute and storage, is built on open source (Postgres), has a unique branching capability ideal for agent development, offers automatic syncing of data to and from lakehouse tables, and is fully managed by Databricks.

It’s launching with several supported partners to facilitate third-party integration, business intelligence, and governance tools. These include Accenture, Airbyte, Alation, Anomalo, Atlan, Boomi, CData, Celebal Technologies, Cloudflare, Collibra, Confluent, Dataiku, dbt Labs, Deloitte, EPAM, Fivetran, Hightouch, Immuta, Informatica, Lovable, Monte Carlo, Omni, Posit, Qlik, Redis, Retool, Sigma, Snowplow, Spotfire, Striim, Superblocks, ThoughtSpot and Tredence.

Lakebase is currently available as a public preview, and the company expects to add several significant improvements over the next few months.

“We’ve spent the past few years helping enterprises build AI apps and agents that can reason on their proprietary data with the Databricks Data Intelligence Platform,” said Ali Ghodsi, co-founder and CEO of Databricks. “Now, with Lakebase, we’re creating a new category in the database market: a modern Postgres database, deeply integrated with the lakehouse and today’s development stacks. As AI agents reshape how businesses operate, Fortune 500 companies are ready to replace outdated systems. With Lakebase, we’re giving them a database built for the demands of the AI era.”

Lakeflow Designer

Coming soon as a preview, Lakeflow Designer is a no-code ETL capability for creating production data pipelines.

It features a drag-and-drop UI and an AI assistant that allows users to describe what they want in natural language.

“There’s a lot of pressure for organizations to scale their AI efforts. Getting high-quality data to the right places accelerates the path to building intelligent applications,” said Ghodsi. “Lakeflow Designer makes it possible for more people in an organization to create production pipelines so teams can move from idea to impact faster.”

It’s based on Lakeflow, the company’s solution for data engineers building data pipelines. Lakeflow is now generally available, with new features such as Declarative Pipelines, a new IDE, new point-and-click ingestion connectors for Lakeflow Connect, and the ability to write directly to the lakehouse using Zerobus.

Agent Bricks

This is Databricks’ new tool for creating agents for business use cases. Users can describe the task they want the agent to do, connect their enterprise data, and Agent Bricks handles the creation.

Behind the scenes, Agent Bricks will create synthetic data based on the customer’s data in order to supplement the agent’s training. It also uses a range of optimization techniques to refine the agent.

“For the first time, businesses can go from idea to production-grade AI on their own data with speed and confidence, with control over quality and cost tradeoffs,” said Ghodsi. “No manual tuning, no guesswork and all the security and governance Databricks has to offer. It’s the breakthrough that finally makes enterprise AI agents both practical and powerful.”

And everything else…

Databricks One is a new platform that brings data intelligence to business teams. Users can ask questions about their data in natural language, leverage AI/BI dashboards, and use custom-built Databricks apps.

The company announced the Databricks Free Edition and is making its self-paced courses in Databricks Academy free as well. These changes were made with students and aspiring professionals in mind.

Databricks also announced a public preview for full support of Apache Iceberg tables in Unity Catalog. Other upcoming Unity Catalog features include new metrics, a curated internal marketplace of certified data products, and integration of Databricks’ AI Assistant.

Finally, the company donated its declarative ETL framework to the Apache Spark project, where it will now be known as Apache Spark Declarative Pipelines.