Bringing an existing codebase into compliance with the SEI CERT Coding Standard requires a cost of time and effort. The typical way of assessing this cost is to run a static analysis tool on the codebase (noting that installing and maintaining the static analysis tool may incur its own costs). A simple metric for estimating this cost is therefore to count the number of static analysis alerts that report a violation of the CERT guidelines. (This assumes that fixing any one alert typically has no impact on other alerts, though occasionally a single issue may trigger multiple alerts.) But those who are familiar with static analysis tools know that the alerts are not always reliable: there are false positives that must be detected and disregarded. Some guidelines are inherently easier than others for detecting violations.

This year, we plan on making some exciting updates to the SEI CERT C Coding Standard. This blog post is about one of our ideas for improving the standard. This change would update the standard to better harmonize with the current state of the art for static analysis tools, as well as simplify the process of source code security auditing.

For this post, we are asking our readers and users to provide us with feedback. Would the changes that we propose to our Risk Assessment metric disrupt your work? How much effort would they impose on you, our readers? If you would like to comment, please send an email to information@sei.cmu.edu.

The premise for our changes is that some violations are easier to repair than others. In the SEI CERT Coding Standard, we assign each guideline a Remediation Cost metric, which is defined as follows:

| Value | Meaning | Detection | Correction |
| --- | --- | --- | --- |
| 1 | High | Manual | Manual |
| 2 | Medium | Automatic | Manual |
| 3 | Low | Automatic | Automatic |

Furthermore, each guideline also has a Priority metric, which is the product of the Remediation Cost and two other metrics that assess severity (how consequential is it to not comply with the rule?) and likelihood (how likely is it that violating the rule leads to an exploitable vulnerability?). All three metrics can be represented as numbers ranging from 1 to 3, which can produce a product between 1 and 27 (that is, 3*3*3), where low numbers imply greater cost.
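The Priority arithmetic can be sketched in a few lines of Python. This is only an illustration of the product described above; the function name `priority` and its parameter names are assumptions made for this example, not part of the CERT standard.

```python
def priority(severity: int, likelihood: int, remediation_cost: int) -> int:
    """Return the Priority as the product of three metrics, each in 1..3.

    The names used here are hypothetical; only the product rule itself
    comes from the text above.
    """
    metrics = {
        "severity": severity,
        "likelihood": likelihood,
        "remediation_cost": remediation_cost,
    }
    for name, value in metrics.items():
        if value not in (1, 2, 3):
            raise ValueError(f"{name} must be 1, 2, or 3, got {value!r}")
    return severity * likelihood * remediation_cost


# Every distinct Priority value that three metrics in 1..3 can produce.
ALL_PRIORITIES = sorted(
    {priority(s, l, r) for s in (1, 2, 3) for l in (1, 2, 3) for r in (1, 2, 3)}
)
```

Enumerating the products shows that the scale is sparse: only ten distinct values between 1 and 27 can occur.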

The above table could alternatively be represented this way:

| Is Automatically… | Not Repairable | Repairable |
| --- | --- | --- |
| Not Detectable | 1 (High) | 1 (High) |
| Detectable | 2 (Medium) | 3 (Low) |
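As a minimal sketch, the same mapping can be written as a small Python function. The name `old_remediation_cost` and the boolean parameters are assumptions chosen for this illustration.

```python
def old_remediation_cost(detectable: bool, repairable: bool) -> int:
    """Original Remediation Cost: 1 = High, 2 = Medium, 3 = Low.

    Hypothetical helper mirroring the table above: anything that is not
    automatically detectable costs High, whether or not it is repairable.
    """
    if not detectable:
        return 1  # High: repairability is ignored in this row
    return 3 if repairable else 2
```

Note that the `repairable` argument has no effect when `detectable` is false, which is the loss of information that the rest of this post sets out to address.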

This Remediation Cost metric was conceived back in 2006 when the SEI CERT C Coding Standard was first created. We did not use more precise definitions of detectable or repairable at the time. But we did assume that some guidelines would be automatically detectable while others would not. Likewise, we assumed that some guidelines would be repairable while others would not. Finally, a guideline that was repairable but not detectable would be assigned a High cost on the grounds that it was not worthwhile to repair code if we could not detect whether or not it complied with a guideline.

We also reasoned that the questions of detectability and repairability should be considered in theory. That is, is a satisfactory detection or repair heuristic possible? When considering if such a heuristic exists, you can ignore whether a commercial or open source product claims to implement the heuristic.

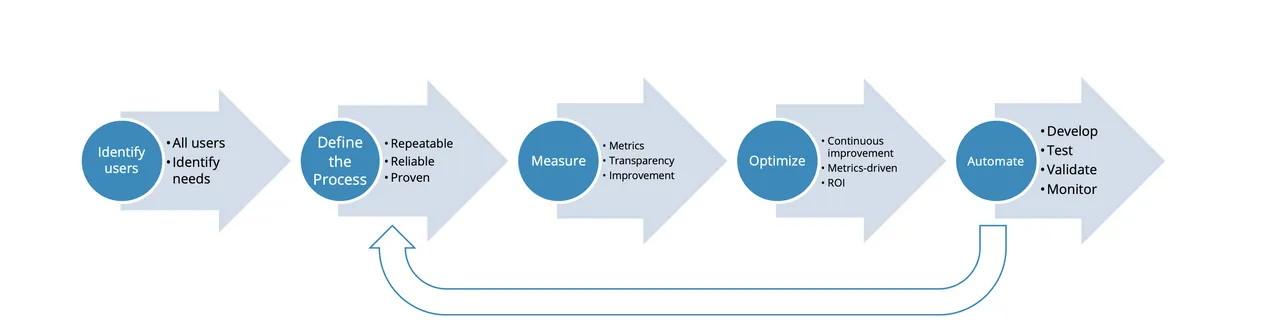

Today, the situation has changed, and therefore we need to update our definitions of detectable and repairable.

Detectability

A recent major change has been to add an Automated Detection section to every CERT guideline. This identifies the analysis tools that claim to detect (and repair) violations of the guideline. For example, Parasoft claims to detect violations of every rule and recommendation in the SEI CERT C Coding Standard. If a guideline's Remediation Cost is High, indicating that the guideline is non-detectable, does that create incompatibility with all the tools listed in the Automated Detection section?

The answer is that the tools listed for such a guideline may be subject to false positives (that is, providing alerts on code that actually complies with the guideline), or false negatives (that is, failing to report some truly noncompliant code), or both. It is easy to construct an analyzer with no false positives (simply never report any alerts) or no false negatives (simply alert that every line of code is noncompliant). But for many guidelines, detection with no false positives and no false negatives is, in theory, undecidable. Some attributes are easier to analyze, but in general practical analyses are approximate, suffering from false positives, false negatives, or both. (A sound analysis is one that has no false negatives, though it might have false positives. Most practical tools, however, have both false negatives and false positives.) For example, EXP34-C, the C rule that forbids dereferencing null pointers, is not automatically detectable by this stricter definition. As a counterexample, violations of rule EXP45-C (do not perform assignments in selection statements) can be detected reliably.

A suitable definition of detectable is: Can a static analysis tool determine if code violates the guideline with both a low false positive rate and a low false negative rate? We do not require that there can never be false positives or false negatives, but we can require that they both be small, meaning that a tool's alerts are complete and accurate for practical purposes.
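To make this definition concrete, here is a hedged Python sketch that classifies a guideline from audit counts. Everything specific in it is an assumption made for illustration: the `AuditCounts` type, the way the two rates are computed (false positives as a share of all alerts, false negatives as a share of all real violations), and the 5% thresholds. The text above only requires that both rates be small; it does not fix a number, and counting false negatives presumes some ground truth about the code that is usually hard to obtain.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AuditCounts:
    """Hypothetical audit results for one guideline and one tool."""
    true_positives: int   # alerts confirmed as real violations
    false_positives: int  # alerts on code that actually complies
    false_negatives: int  # real violations the tool did not report


def is_detectable(counts: AuditCounts,
                  max_fp_rate: float = 0.05,
                  max_fn_rate: float = 0.05) -> bool:
    """Return True if both error rates are at or below the thresholds.

    The default thresholds are illustrative assumptions, not CERT values.
    """
    alerts = counts.true_positives + counts.false_positives
    violations = counts.true_positives + counts.false_negatives
    fp_rate = counts.false_positives / alerts if alerts else 0.0
    fn_rate = counts.false_negatives / violations if violations else 0.0
    return fp_rate <= max_fp_rate and fn_rate <= max_fn_rate
```

Under these assumptions, an analyzer that never reports anything has a perfect false positive rate but fails the false negative test as soon as the code contains a real violation, which matches the trivial analyzers described earlier.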

Most guidelines, including EXP34-C, will, by this definition, be undetectable using the current crop of tools. This does not mean that tools cannot report violations of EXP34-C; it just means that any such alert might be a false positive, the tool might miss some violations, or both.

Repairability

Our notion of what is repairable has been shaped by recent advances in Automated Program Repair (APR) research and technology, such as the Redemption project. Specifically, the Redemption project and tool consider a static analysis alert repairable regardless of whether it is a false positive. Repairing a false positive should, in theory, not alter the code behavior. Furthermore, in Redemption, a single repair should be restricted to a local region and not distributed throughout the code. For example, changing the number or types of a function's parameter list requires modifying every call to that function, and function calls can be distributed throughout the code. Such a change would therefore not be local.

With that said, our definition of repairable can be expressed as: Code is repairable if an alert can be reliably fixed by an APR tool, and the only modifications to code are near the site of the alert. Furthermore, repairing a false positive alert must not break the code. For example, the null-pointer-dereference rule (EXP34-C) is repairable because a pointer dereference can be preceded by an automatically inserted null check. In contrast, CERT rule MEM31-C requires that all dynamic memory be freed exactly once. An alert that complains that some pointer goes out of scope without being freed seems repairable by inserting a call to free(pointer). However, if the alert is a false positive, and the pointer's pointed-to memory was already freed, then the APR tool may have just created a double-free vulnerability, in essence converting working code into vulnerable code. Therefore, rule MEM31-C is not, with current capabilities, (automatically) repairable.
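The contrast between the two rules can be illustrated with a toy model. The sketch below is written in Python rather than C, and every name in it (`ToyHeap`, `DoubleFreeError`, the two `length_*` functions, and the "repaired" variants) is hypothetical. It does not show how Redemption or any real APR tool works; it only models why a guard inserted on a false positive is harmless while a `free` inserted on a false positive is not.

```python
class DoubleFreeError(Exception):
    """Raised by the toy heap when a block is freed more than once."""


class ToyHeap:
    """A stand-in for malloc/free that tracks which blocks are live."""

    def __init__(self):
        self._live = set()
        self._next_id = 0

    def malloc(self) -> int:
        self._next_id += 1
        self._live.add(self._next_id)
        return self._next_id

    def free(self, block: int) -> None:
        if block not in self._live:
            raise DoubleFreeError(f"block {block} is not live")
        self._live.remove(block)


def length_original(text):
    return len(text)


def length_with_inserted_guard(text):
    # Analogue of an EXP34-C repair: an inserted null check. For any
    # non-None input (the false positive case) behavior is unchanged.
    if text is None:
        return 0  # assumed fallback; a real repair might abort instead
    return len(text)


def release_original(heap: ToyHeap) -> None:
    block = heap.malloc()
    heap.free(block)  # already correct: freed exactly once


def release_after_false_positive_repair(heap: ToyHeap) -> None:
    block = heap.malloc()
    heap.free(block)
    heap.free(block)  # inserted in response to a false "leak" alert
```

In this model, `length_with_inserted_guard("abc")` returns the same value as the original, whereas `release_after_false_positive_repair` raises `DoubleFreeError` on code that was previously correct.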

The New Remediation Cost

While the previous Remediation Cost metric did treat detectability and repairability as interrelated, we now believe they are independent and interesting metrics by themselves. A rule that was neither detectable nor repairable was given the same remediation cost as one that was repairable but not detectable, and we now believe the difference between these two rules should be reflected in our metrics. We are therefore considering replacing the old Remediation Cost metric with two metrics: Detectable and Repairable. Both metrics are simple yes/no questions.

There is still the question of how to generate the Priority metric. As noted above, this was the product of the Remediation Cost, expressed as an integer from 1 to 3, with two other integers from 1 to 3. We can therefore derive a new Remediation Cost metric from the Detectable and Repairable metrics. The most obvious solution would be to assign a 2 to each yes and a 1 to each no, and multiply the two values. Thus, we have created a metric similar to the old Remediation Cost using the following table:

| Is Automatically… | Not Repairable | Repairable |
| --- | --- | --- |
| Not Detectable | 1 | 2 |
| Detectable | 2 | 4 |

However, we decided that a value of 4 is problematic. First, the old Remediation Cost metric had a maximum of 3, and having a maximum of 4 skews our product. Now the highest priority would be 3*3*4=36 instead of 27. This would also make the new remediation cost more important than the other two metrics. We decided that replacing the 4 with a 3 solves these problems:

| Is Automatically… | Not Repairable | Repairable |
| --- | --- | --- |
| Not Detectable | 1 | 2 |
| Detectable | 2 | 3 |
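A minimal Python sketch of this derivation follows. It reads the table values as each "no" contributing 1 and each "yes" contributing 2, with the product capped at 3. The function name `new_remediation_cost` and the constant `MAX_REMEDIATION_COST` are assumptions made for this example.

```python
MAX_REMEDIATION_COST = 3  # keeps the Priority product within 1..27


def new_remediation_cost(detectable: bool, repairable: bool) -> int:
    """Proposed Remediation Cost derived from two yes/no metrics.

    Hypothetical helper: 1 per "no", 2 per "yes", multiplied, then
    capped at 3 so that a yes/yes guideline scores 3 rather than 4.
    """
    raw = (2 if detectable else 1) * (2 if repairable else 1)
    return min(raw, MAX_REMEDIATION_COST)
```

With the cap in place, the largest possible Priority remains 3*3*3=27, and unlike the old metric, a guideline that is repairable but not detectable now scores differently from one that is neither.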

Next Steps

Next will come the task of examining each guideline to replace its Remediation Cost with new Detectable and Repairable metrics. We must also update the Priority and Level metrics for guidelines where the Detectable and Repairable metrics disagree with the old Remediation Cost.

Tools and processes that incorporate the CERT metrics will need to update their metrics to reflect CERT's new Detectable and Repairable metrics. For example, CERT's own SCALe project provides software security audits ranked by Priority, and future rankings of the CERT C rules will change.

Here are the old and new metrics for the C Integer Rules:

| Rule | Detectable | Repairable | New REM | Old REM | Title |
| --- | --- | --- | --- | --- | --- |
| INT30-C | No | Yes | 2 | 3 | Ensure that unsigned integer operations do not wrap |
| INT31-C | No | Yes | 2 | 3 | Ensure that integer conversions do not result in lost or misinterpreted data |
| INT32-C | No | Yes | 2 | 3 | Ensure that operations on signed integers do not result in overflow |
| INT33-C | No | Yes | 2 | 2 | Ensure that division and remainder operations do not result in divide-by-zero errors |
| INT34-C | No | Yes | 2 | 2 | Do not shift an expression by a negative number of bits or by greater than or equal to the number of bits that exist in the operand |
| INT35-C | No | No | 1 | 2 | Use correct integer precisions |
| INT36-C | Yes | No | 2 | 3 | Converting a pointer to integer or integer to pointer |

In this table, New REM (Remediation Cost) is the metric we would produce from the Detectable and Repairable metrics, and Old REM is the current Remediation Cost metric. Clearly, only INT33-C and INT34-C have the same New REM values as Old REM values. This means that their Priority and Level metrics remain unchanged, but the other rules would have revised Priority and Level metrics.
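The comparison in the table can be reproduced with a short Python sketch. The Detectable, Repairable, and Old REM values are copied from the table above; the dictionary layout, the helper `new_remediation_cost`, and the variable names are assumptions made for this illustration.

```python
def new_remediation_cost(detectable: bool, repairable: bool) -> int:
    """Hypothetical helper: 1 per "no", 2 per "yes", product capped at 3."""
    return min((2 if detectable else 1) * (2 if repairable else 1), 3)


# rule -> (detectable, repairable, old Remediation Cost), from the table
INTEGER_RULES = {
    "INT30-C": (False, True, 3),
    "INT31-C": (False, True, 3),
    "INT32-C": (False, True, 3),
    "INT33-C": (False, True, 2),
    "INT34-C": (False, True, 2),
    "INT35-C": (False, False, 2),
    "INT36-C": (True, False, 3),
}

# Rules whose derived New REM equals the Old REM keep their Priority.
unchanged = [
    rule
    for rule, (detectable, repairable, old_rem) in INTEGER_RULES.items()
    if new_remediation_cost(detectable, repairable) == old_rem
]
changed = [rule for rule in INTEGER_RULES if rule not in unchanged]
```

Here `unchanged` contains only INT33-C and INT34-C, so the other five integer rules would need their Priority recomputed.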

Once we have computed the new Risk Assessment metrics for the CERT C Secure Coding Rules, we would next address the C recommendations, which also have Risk Assessment metrics. We would then proceed to update these metrics for the remaining CERT standards: C++, Java, Android, and Perl.

Auditing

The new Detectable and Repairable metrics also alter how source code security audits should be conducted.

Any alert from a guideline that is automatically repairable could, in fact, not be audited at all. Instead, it could be immediately repaired. If an automated repair tool is not available, it could instead be repaired manually by developers, who may not care whether or not it is a true positive. An organization may choose whether to apply all of the potential repairs or to review them; it could apply additional effort to review automated repairs, but this may only be necessary to satisfy its standards of software quality and its trust in the APR tool.

Any alert from a guideline that is automatically detectable should also, in fact, not be audited. It should be repaired automatically with an APR tool or sent to the developers for manual repair.

This raises a potential question: Detectable guidelines should, in theory, almost never yield false positives. Is this actually true? The alert might be false due to bugs in the static analysis tool or bugs in the mapping (between the tool and the CERT guideline). We could conduct a series of source code audits to confirm that a guideline truly is automatically detectable and revise guidelines that are not, in fact, automatically detectable.

Only guidelines that are neither automatically detectable nor automatically repairable should actually be manually audited.

Given the large number of static analysis (SA) alerts generated by most code in the DoD, any optimizations to the auditing process should result in more alerts being audited and repaired. This will lessen the effort required in addressing alerts. Many organizations do not address all alerts, and they consequently accept the risk of unresolved vulnerabilities in their code. So instead of reducing effort, this improved process reduces risk.

This improved process can be summed up by the following pseudocode:

- For each alert:
  - If alert is repairable:
    - If we have an APR tool to repair alert:
      - Use APR tool to repair alert
    - else (no APR tool):
      - Send alert to developers for manual repair
  - else (alert is not repairable):
    - If alert is detectable:
      - Send alert to developers for manual repair
    - else (alert is not detectable):
      - Manually audit alert
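The triage process can also be sketched as runnable Python. This is an illustration only: the `Action` enum, the `triage` function, and its parameter names are hypothetical, and the final branch (manual audit for alerts that are neither repairable nor detectable) follows the statement earlier in this section that only such guidelines should be manually audited.

```python
from enum import Enum


class Action(Enum):
    """Hypothetical outcomes of triaging one static analysis alert."""
    AUTO_REPAIR = "use APR tool to repair alert"
    MANUAL_REPAIR = "send alert to developers for manual repair"
    MANUAL_AUDIT = "manually audit alert"


def triage(repairable: bool, detectable: bool, have_apr_tool: bool) -> Action:
    """Route an alert using its guideline's two yes/no metrics."""
    if repairable:
        # Repairable alerts skip auditing whether or not they are real.
        return Action.AUTO_REPAIR if have_apr_tool else Action.MANUAL_REPAIR
    if detectable:
        # Detectable alerts are trusted and go straight to developers.
        return Action.MANUAL_REPAIR
    # Neither repairable nor detectable: a human must audit the alert.
    return Action.MANUAL_AUDIT
```

For example, `triage(repairable=False, detectable=False, have_apr_tool=True)` yields `Action.MANUAL_AUDIT`, since having an APR tool does not help when the guideline is not repairable.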

Your Feedback Needed

We are publishing this specific plan to solicit feedback. Would these changes to our Risk Assessment metric disrupt your work? How much effort would they impose on you? If you would like to comment, please send an email to information@sei.cmu.edu.