OpenAI is joining the open-weight model game with the launch of gpt-oss-120b and gpt-oss-20b.

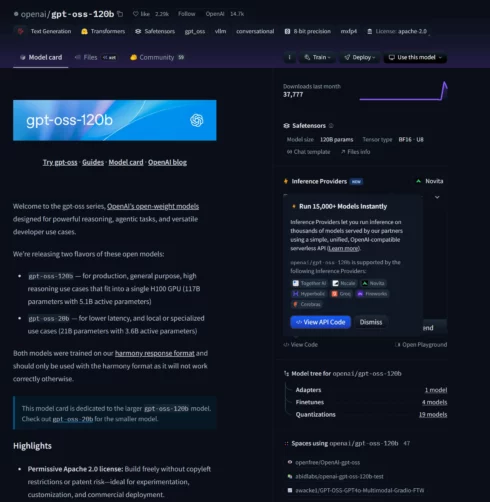

The gpt-oss-120b model is optimized for production and high-reasoning use cases, while gpt-oss-20b is designed for lower-latency or local use cases.

According to the company, these open models are comparable to its closed models in performance and capability, but at a much lower cost. For example, gpt-oss-120b running on an 80 GB GPU achieved similar performance to o4-mini on core reasoning benchmarks, while gpt-oss-20b running on an edge device with 16 GB of memory was comparable to o3-mini on several common benchmarks.

“Releasing gpt-oss-120b and gpt-oss-20b marks a significant step forward for open-weight models,” OpenAI wrote in a post. “At their size, these models deliver meaningful advancements in both reasoning capabilities and safety. Open models complement our hosted models, giving developers a wider range of tools to accelerate cutting-edge research, foster innovation and enable safer, more transparent AI development across a wide range of use cases.”

The new open models are ideal for developers who want to customize and deploy models in their own environment. Developers looking for multimodal support, built-in tools, and integration with OpenAI’s platform may be better served by the company’s closed models.

Both new models are available under the Apache 2.0 license. They are compatible with OpenAI’s Responses API, can be used within agentic workflows, and provide full chain-of-thought.

According to OpenAI, these models were trained using its advanced pre- and post-training techniques, with a focus on reasoning, efficiency, and real-world usability across various types of deployment environments.

Both models are available for download on Hugging Face and are quantized in MXFP4, which enables gpt-oss-120b to run with 80 GB of memory and gpt-oss-20b to run with 16 GB. OpenAI also created a playground for developers to experiment with the models online.
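To see why MXFP4 makes these memory budgets plausible, here is a rough back-of-the-envelope sketch. In the OCP Microscaling (MX) format, MXFP4 stores each weight as a 4-bit float (E2M1), and every block of 32 weights shares one 8-bit (E8M0) scale. That works out to about 4.25 bits per parameter.

The sketch rests on several assumptions, which are not official OpenAI figures:

- Every weight is stored in MXFP4. In practice, some layers may be kept at higher precision.
- Parameter counts are the nominal sizes in the model names.
- Activations, KV cache, and runtime overhead are ignored.

```python
# Rough weight-memory estimate for MXFP4 vs. BF16 storage.
# Assumptions (illustrative only): all weights in MXFP4, nominal parameter
# counts taken from the model names, and no activation/KV-cache/runtime overhead.

FP4_BITS = 4      # each element is a 4-bit E2M1 float
SCALE_BITS = 8    # one shared 8-bit E8M0 scale per block
BLOCK_SIZE = 32   # number of elements that share one scale


def mxfp4_bits_per_param() -> float:
    """Effective storage cost per weight, amortizing the shared block scale."""
    return FP4_BITS + SCALE_BITS / BLOCK_SIZE


def weight_gb(num_params: float, bits_per_param: float) -> float:
    """Convert a parameter count and bits-per-parameter into gigabytes (1e9 bytes)."""
    return num_params * bits_per_param / 8 / 1e9


# (model name, nominal parameter count, memory budget quoted in the article in GB)
MODELS = [("gpt-oss-120b", 120e9, 80), ("gpt-oss-20b", 20e9, 16)]

for name, params, budget_gb in MODELS:
    mx = weight_gb(params, mxfp4_bits_per_param())
    bf16 = weight_gb(params, 16)
    fits = "fits" if mx <= budget_gb else "does not fit"
    print(f"{name}: ~{mx:.2f} GB in MXFP4 vs ~{bf16:.0f} GB in BF16 "
          f"({fits} in {budget_gb} GB)")
```

Under these assumptions, the weights alone come to roughly 64 GB for the larger model and about 11 GB for the smaller one. Both leave headroom within 80 GB and 16 GB respectively. The same weights in BF16 would need about 240 GB and 40 GB.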

The company partnered with several deployment providers for these models, including Azure, vLLM, Ollama, llama.cpp, LM Studio, AWS, Fireworks, Together AI, Baseten, Databricks, Vercel, Cloudflare, and OpenRouter. It also worked with NVIDIA, AMD, Cerebras, and Groq to help ensure consistent performance across different systems.

As part of the initial launch, Microsoft will be bringing GPU-optimized versions of the smaller model to Windows devices.

“A healthy open model ecosystem is one dimension to helping make AI widely accessible and beneficial for everyone. We invite developers and researchers to use these models to experiment, collaborate and push the boundaries of what’s possible. We look forward to seeing what you build,” the company wrote.