Events are messages that a system sends to notify operators or other systems about a change in its domain. With event-driven architectures powered by systems like Apache Kafka becoming more prominent, many applications in the modern software stack now rely on events and messages to operate effectively. In this blog, we will examine the use of three different data backends for event data: Apache Druid, Elasticsearch and Rockset.

Using Event Data

Events are commonly used by systems in the following ways:

- Reacting to changes in other systems: e.g. when a payment is completed, send the user a receipt.

- Recording changes that can then be used to recompute state as needed: e.g. a transaction log.

- Supporting separation of data access (read/write) mechanisms like CQRS.

- Helping understand and analyze the current and past state of a system.
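As a rough illustration of the second use above, the sketch below replays a small transaction log to recompute state. The event types (`PaymentCompleted`, `RefundCompleted`) and field names (`account`, `amountCents`) are hypothetical and chosen only for this example.

```python
from collections import defaultdict


def replay(events):
    """Recompute per-account balances by replaying a transaction log in order."""
    balances = defaultdict(int)
    for event in events:
        if event["type"] == "PaymentCompleted":
            balances[event["account"]] += event["amountCents"]
        elif event["type"] == "RefundCompleted":
            balances[event["account"]] -= event["amountCents"]
        # Unknown event types are skipped so that new types do not break replay.
    return dict(balances)


# Hypothetical log: two payments and one refund.
log = [
    {"type": "PaymentCompleted", "account": "a1", "amountCents": 5000},
    {"type": "PaymentCompleted", "account": "a2", "amountCents": 1200},
    {"type": "RefundCompleted", "account": "a1", "amountCents": 2000},
]
state = replay(log)
```

Because the state is derived entirely from the log, it can be rebuilt at any time, or rebuilt differently if the replay logic changes.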

We will focus on the use of events to help understand, analyze and diagnose bottlenecks in applications and business processes, using Druid, Elasticsearch and Rockset in conjunction with a streaming platform like Kafka.

Types of Event Data

Applications emit events that correspond to important actions or state changes in their context. Some examples of such events are:

- For an airline price aggregator, events generated when a user books a flight, when the reservation is confirmed with the airline, when the user cancels their reservation, when a refund is completed, etc.

// an example event generated when a reservation is confirmed with an airline.
{
  "type": "ReservationConfirmed",
  "reservationId": "RJ4M4P",
  "passengerSequenceNumber": "ABC123",
  "underName": {
    "name": "John Doe"
  },
  "reservationFor": {
    "flightNumber": "UA999",
    "provider": {
      "name": "Continental",
      "iataCode": "CO"
    },
    "seller": {
      "name": "United",
      "iataCode": "UA"
    },
    "departureAirport": {
      "name": "San Francisco Airport",
      "iataCode": "SFO"
    },
    "departureTime": "2019-10-04T20:15:00-08:00",
    "arrivalAirport": {
      "name": "John F. Kennedy International Airport",
      "iataCode": "JFK"
    },
    "arrivalTime": "2019-10-05T06:30:00-05:00"
  }
}

- For an e-commerce website, events generated as the shipment goes through each stage, from being dispatched from the distribution center to being received by the buyer.

// an example event generated when a shipment is dispatched.
{
  "type": "ParcelDelivery",
  "deliveryAddress": {
    "type": "PostalAddress",
    "name": "Pickup Corner",
    "streetAddress": "24 Ferry Bldg",
    "addressLocality": "San Francisco",
    "addressRegion": "CA",
    "addressCountry": "US",
    "postalCode": "94107"
  },
  "expectedArrivalUntil": "2019-10-12T12:00:00-08:00",
  "carrier": {
    "type": "Organization",
    "name": "FedEx"
  },
  "itemShipped": {
    "type": "Product",
    "name": "Google Chromecast"
  },
  "partOfOrder": {
    "type": "Order",
    "orderNumber": "432525",
    "merchant": {
      "type": "Organization",
      "name": "Bob Dole"
    }
  }
}

- For an IoT platform, events generated when a device registers, comes online, reports healthy, requires repair/replacement, etc.

// an example event generated from an IoT edge device.
{
  "deviceId": "529d0ea0-e702-11e9-81b4-2a2ae2dbcce4",
  "timestamp": "2019-10-04T23:56:59+0000",
  "status": "online",
  "acceleration": {
    "accelX": "0.522",
    "accelY": "-.005",
    "accelZ": "0.4322"
  },
  "temp": 77.454,
  "potentiometer": 0.0144
}

These types of events can provide visibility into a particular system or business process. They can help answer questions about a specific entity (user, shipment, or device), and they support quick analysis and diagnosis of potential issues, in aggregate, over a specific time range.
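Since events from different sources may carry different UTC offsets, analysis over a time range usually starts by normalizing timestamps. The following is a minimal sketch of that step, assuming timestamps look like the ones in the examples above; the helper names `parse_ts` and `in_range` are illustrative only.

```python
from datetime import datetime, timezone


def parse_ts(value):
    """Parse an ISO-8601 timestamp with a UTC offset and normalize it to UTC."""
    # On Python 3.7+, %z accepts both "+0000" and "-08:00" style offsets.
    return datetime.strptime(value, "%Y-%m-%dT%H:%M:%S%z").astimezone(timezone.utc)


def in_range(events, field, start, end):
    """Return the events whose timestamp field falls in [start, end)."""
    return [e for e in events if start <= parse_ts(e[field]) < end]


events = [
    {"deviceId": "d1", "timestamp": "2019-10-04T23:56:59+0000"},
    {"deviceId": "d2", "timestamp": "2019-10-04T20:15:00-08:00"},  # 04:15 UTC on Oct 5
]
start = datetime(2019, 10, 5, tzinfo=timezone.utc)
end = datetime(2019, 10, 6, tzinfo=timezone.utc)
matched = in_range(events, "timestamp", start, end)
```

Here only the second event falls on October 5 in UTC, even though its local date is October 4.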

Building Event Analytics

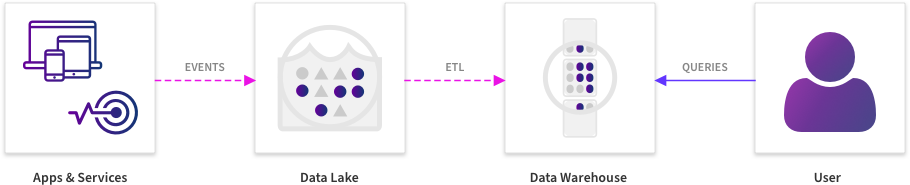

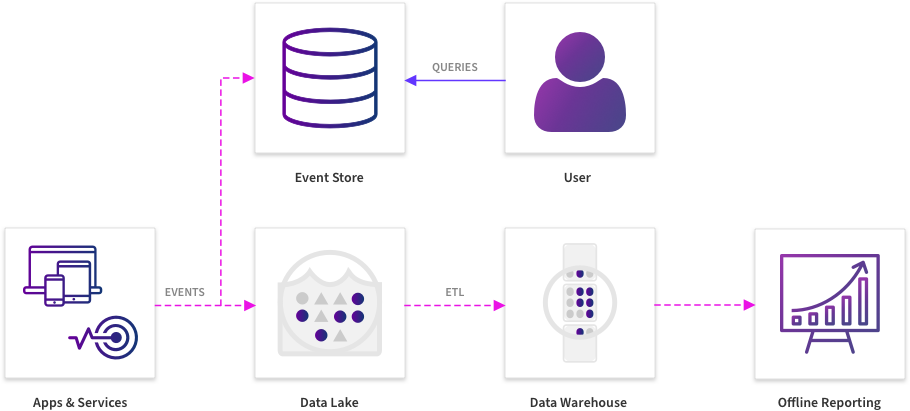

In the past, events like these would stream into a data lake, get ingested into a data warehouse, and be handed off to a BI/data science engineer to mine the data for patterns.

Earlier than

After

This has changed with a new generation of data infrastructure, because responding quickly to changes in these events is becoming critical to success. In a situation where every second of unavailability can rack up revenue losses, understanding patterns and mitigating issues that adversely affect system or process health have become time-critical exercises.

When analysis and diagnosis need to be as real-time as possible, the requirements of a system that performs event analytics must be rethought. There are tools that specialize in event analytics for specific domains, such as product analytics and clickstream analytics. Given the specific needs of a business, however, we often want to build custom tooling that is specific to the business or process, allowing its users to quickly understand these events and take action as required. In many of these cases, such systems are built in-house by combining different pieces of technology, including streaming pipelines, lakes and warehouses. When it comes to serving queries, this needs an analytics backend that has the following properties:

- Fast Ingestion: Even with hundreds of thousands of events flowing every second, a backend that facilitates event data analytics must be able to keep up with that rate. Complex offline ETL processes are not preferable, as they would add minutes to hours before the data is available to query.

- Interactive Latencies: The system must allow ad-hoc queries and drilldowns in real time. Sometimes understanding a pattern in the events requires being able to group by different attributes in the events to try to understand the correlations in real time.

- Complex Queries: The system must support an expressive query language that can express value lookups, filtering on a predicate, aggregate functions, and joins.

- Developer-Friendly: The system must come with libraries and SDKs that allow developers to write custom applications on top of it, as well as support dashboarding.

- Configurable and Scalable: This includes being able to control the time for which records are retained and the number of replicas of data being queried, and being able to scale up to support more data with minimal operational overhead.
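To make the "Complex Queries" property concrete, here is a small sketch of a single query that combines a predicate filter, aggregate functions, and a join. It uses Python's built-in `sqlite3` module purely as a stand-in SQL engine; the `devices` and `events` tables and their columns are assumptions made up for this example.

```python
import sqlite3

# In-memory database used only to illustrate the query shape.
conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE devices (device_id TEXT PRIMARY KEY, region TEXT);
CREATE TABLE events (device_id TEXT, status TEXT, temp REAL);
INSERT INTO devices VALUES ('d1', 'us-west'), ('d2', 'us-east');
INSERT INTO events VALUES
  ('d1', 'online', 77.0), ('d1', 'online', 80.0),
  ('d2', 'online', 70.0), ('d2', 'offline', 65.0);
""")

# Filter on a predicate, join to a dimension table, then aggregate per region.
rows = conn.execute("""
SELECT d.region, COUNT(*) AS n, AVG(e.temp) AS avg_temp
FROM events e
JOIN devices d ON e.device_id = d.device_id
WHERE e.status = 'online'
GROUP BY d.region
ORDER BY d.region
""").fetchall()
```

A backend that lacks any one of these pieces (for example, joins) pushes that work into preprocessing or application code.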

Druid

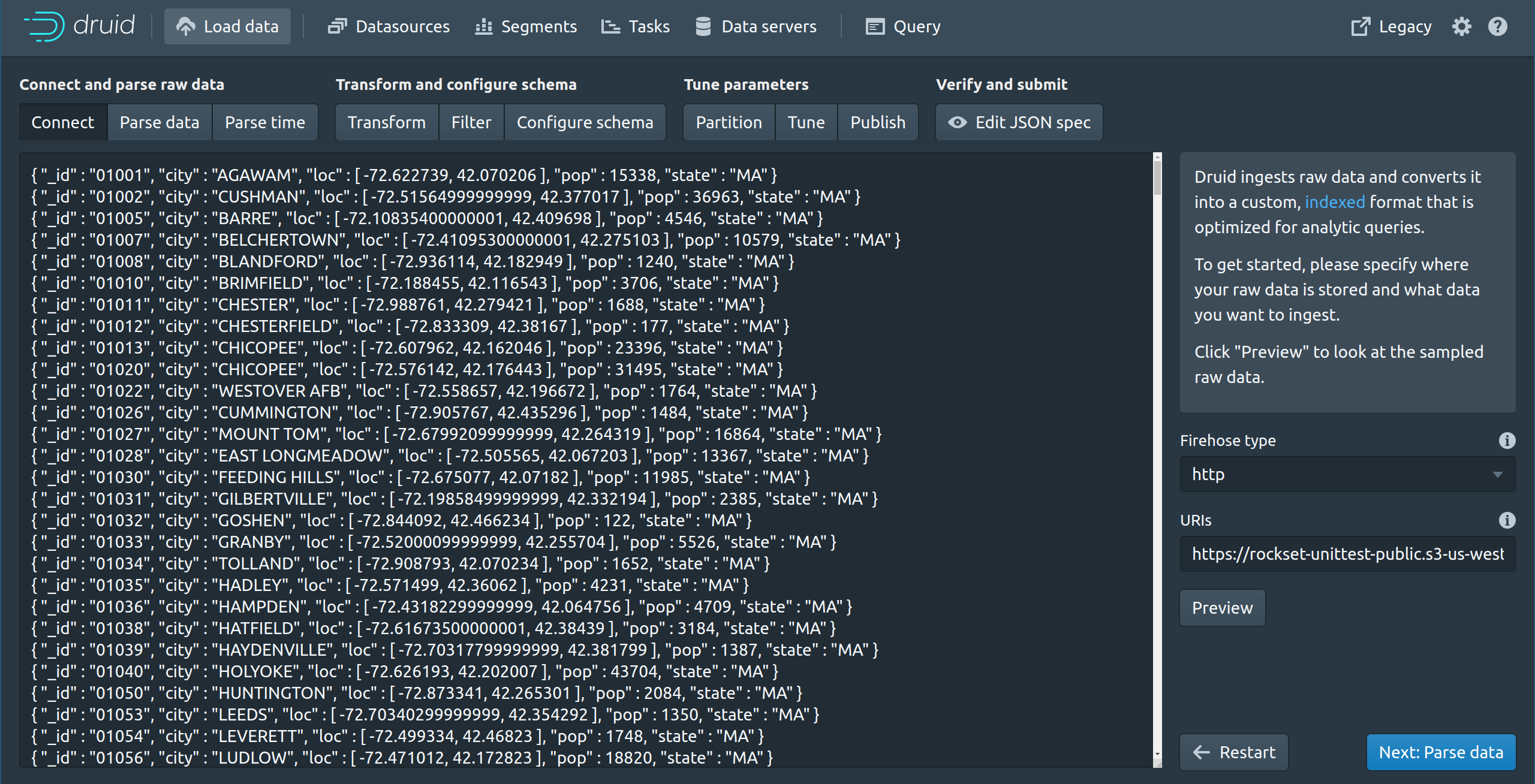

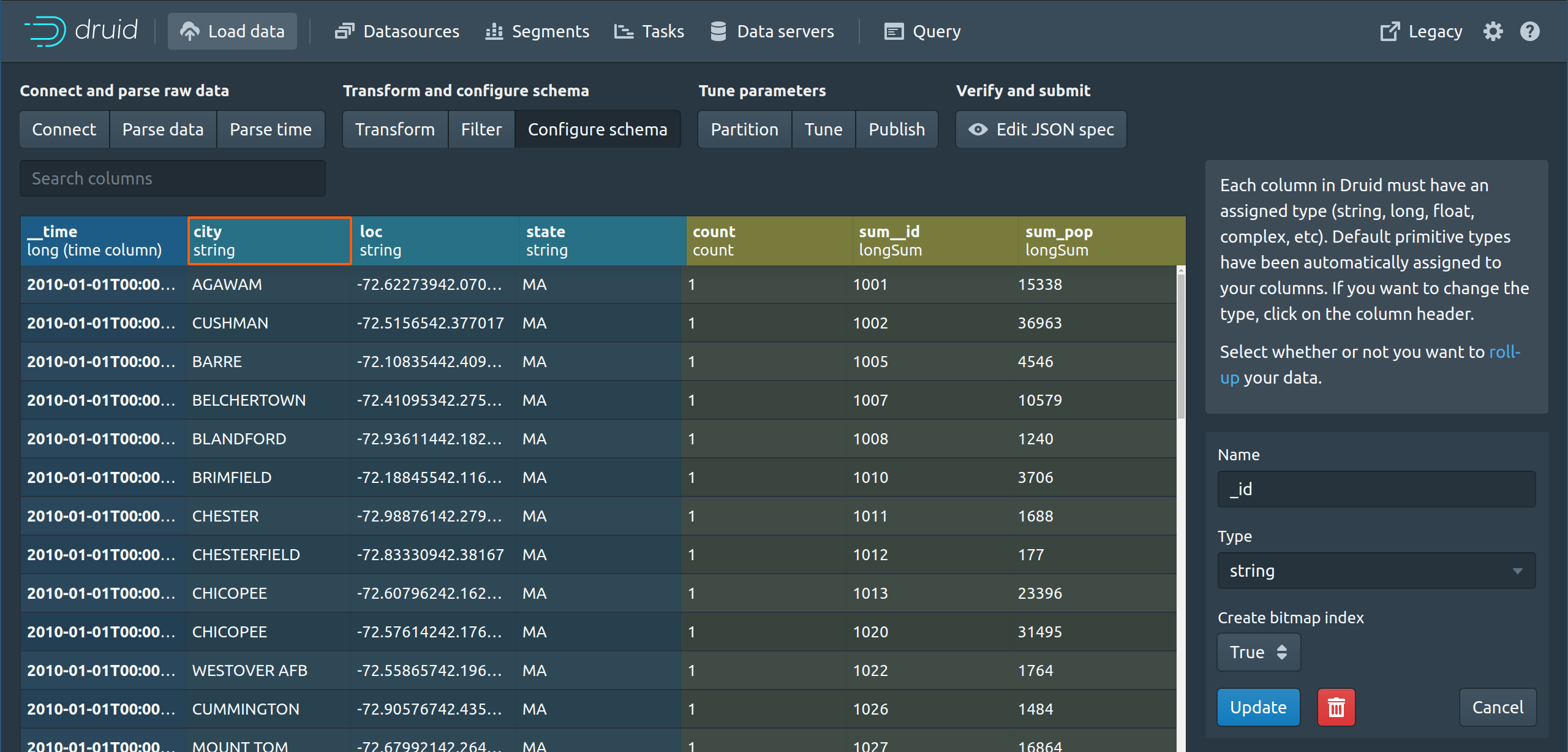

Apache Druid is a column-oriented distributed data store for serving fast queries over data. Druid supports streaming data sources, Apache Kafka and Amazon Kinesis, through an indexing service that ingests the data coming in through these streams, as well as batch ingestion from Hadoop and data lakes for historical events. Tools like Apache Superset are commonly used to analyze and visualize the data in Druid. It is possible to configure aggregations in Druid that are performed at ingestion time to turn a range of records into a single record that is then written.

In this example, we are inserting a set of JSON events into Druid. Druid does not natively support nested data, so we need to flatten nested fields and arrays in our JSON events by providing a flattenSpec, or by doing some preprocessing before the event lands in it.
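The preprocessing option could look roughly like the sketch below, which flattens nested objects into dotted column names before the event is sent on. This is an illustrative helper written for this post, not Druid's flattenSpec; the `flatten` function and the dotted naming scheme are assumptions, and arrays are passed through unchanged.

```python
def flatten(event, prefix="", sep="."):
    """Flatten nested dicts into a single-level dict with dotted keys."""
    flat = {}
    for key, value in event.items():
        name = f"{prefix}{sep}{key}" if prefix else key
        if isinstance(value, dict):
            # Recurse into nested objects, carrying the parent path along.
            flat.update(flatten(value, name, sep))
        else:
            flat[name] = value
    return flat


event = {
    "deviceId": "529d0ea0-e702-11e9-81b4-2a2ae2dbcce4",
    "status": "online",
    "acceleration": {"accelX": "0.522", "accelY": "-.005"},
    "temp": 77.454,
}
flat = flatten(event)
```

After flattening, `acceleration.accelX` and `acceleration.accelY` become ordinary top-level columns.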

Druid assigns types to columns: string, long, float, complex, etc. Type enforcement at the column level can be restrictive if the incoming data presents mixed types for a particular field or fields. Each column except the timestamp can be of type dimension or metric. One can filter and group by on dimension columns, but not on metric columns. This requires some forethought when picking which columns to pre-aggregate and which ones will be used for slice-and-dice analyses.
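The trade-off between dimensions and pre-aggregated metrics can be sketched in plain Python. The `rollup` function below is a simplified stand-in for ingestion-time aggregation, not Druid's implementation; the hourly bucket format and field names are assumptions for illustration.

```python
from collections import defaultdict
from datetime import datetime


def rollup(events, dimensions, metric, granularity="%Y-%m-%dT%H:00"):
    """Collapse events sharing a time bucket and dimension values into one row."""
    totals = defaultdict(lambda: {"count": 0, "sum": 0.0})
    for e in events:
        # Truncate the timestamp to the bucket (hourly by default).
        bucket = datetime.strptime(e["timestamp"], "%Y-%m-%dT%H:%M:%S").strftime(granularity)
        key = (bucket,) + tuple(e[d] for d in dimensions)
        totals[key]["count"] += 1
        totals[key]["sum"] += e[metric]
    return dict(totals)


events = [
    {"timestamp": "2019-10-04T23:56:59", "status": "online", "temp": 77.0},
    {"timestamp": "2019-10-04T23:58:10", "status": "online", "temp": 73.0},
    {"timestamp": "2019-10-05T00:01:00", "status": "online", "temp": 70.0},
]
rows = rollup(events, dimensions=["status"], metric="temp")
```

Three events become two stored rows. The individual `temp` readings are no longer available after the rollup, which is why the choice of dimensions has to be made up front.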

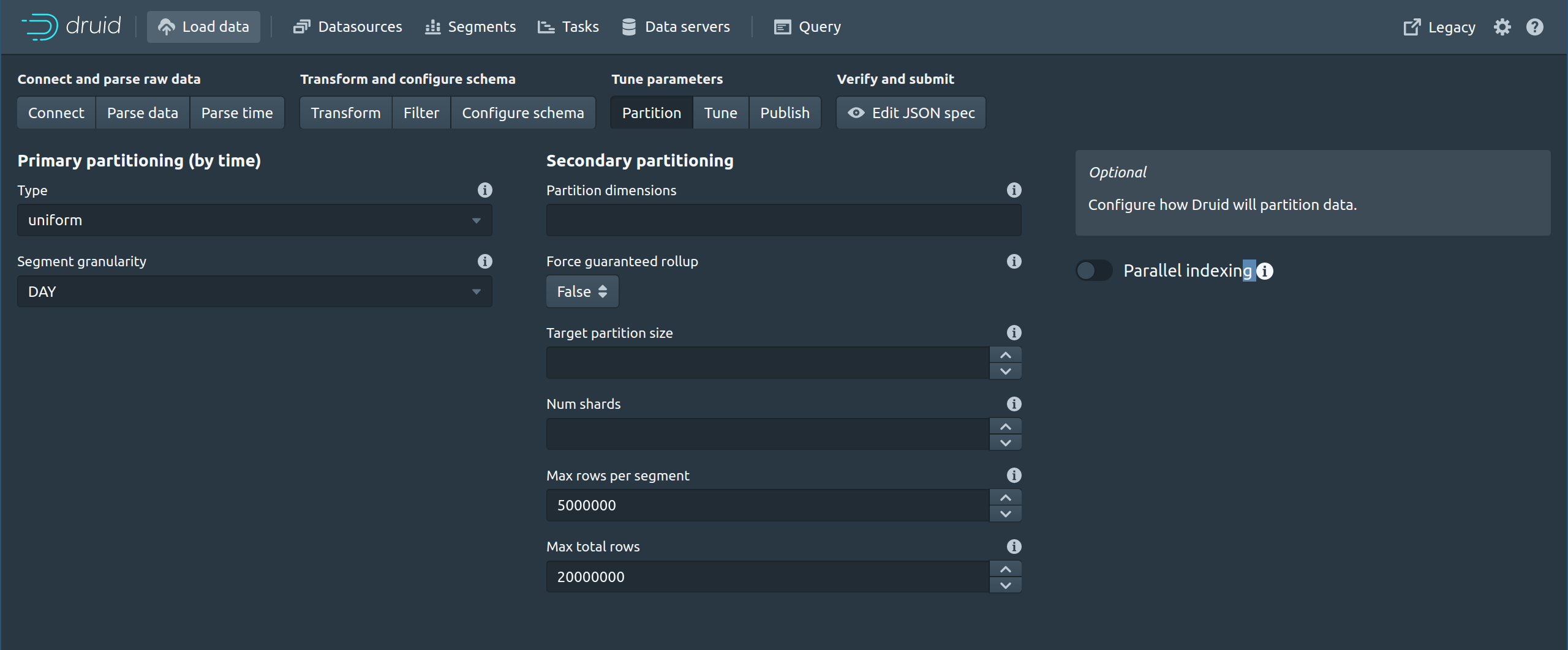

Partition keys must be picked carefully for load balancing and scaling up. Streaming new updates to the table after creation requires using one of the supported ways of ingesting: Kafka, Kinesis or Tranquility.

Druid works well for event analytics in environments where the data is somewhat predictable and rollups and pre-aggregations can be defined a priori. It involves some maintenance and tuning overhead in terms of engineering, but for event analytics that does not involve complex joins, it can serve queries with low latency and scale up as required.

Summary:

- Low latency analytical queries over the column store

- Ingest time aggregations can help reduce the volume of data written

- Good support for SDKs and libraries in different programming languages

- Works well with Hadoop

- Type enforcement at the column level can be restrictive with mixed types

- Medium to high operational overhead at scale

- Estimating resources and capacity planning is difficult at scale

- Lacks support for nested data natively

- Lacks support for SQL JOINs

Elasticsearch

Elasticsearch is a search and analytics engine that can also be used for queries over event data. Most popular for queries over system and machine logs because of its full-text search capabilities, Elasticsearch can be used for ad hoc analytics in some specific cases. Built on top of Apache Lucene, Elasticsearch is often used in conjunction with Logstash for ingesting data, and Kibana as a dashboard for reporting on it. When used together with Kafka, the Kafka Connect Elasticsearch sink connector is used to move data from Kafka to Elasticsearch.

Elasticsearch indexes the ingested data, and these indexes are typically replicated and used to serve queries. The Elasticsearch query DSL is mostly used for development purposes, although there is SQL support in X-Pack that covers some types of SQL analytical queries against indices in Elasticsearch. This matters because for event analytics, we want to query in a flexible manner.
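For a sense of what the query DSL looks like, the sketch below builds a request body that filters events by a time range and counts them per status using a `terms` aggregation. It only constructs and prints the JSON body, which would typically be sent to an index's `_search` endpoint; the field names `timestamp` and `status.keyword` and the helper name `status_counts_query` are assumptions about a hypothetical index mapping.

```python
import json


def status_counts_query(start, end, field="status.keyword"):
    """Build a query DSL body: filter by time range, count events per status."""
    return {
        "size": 0,  # return only aggregation results, no individual hits
        "query": {"range": {"timestamp": {"gte": start, "lt": end}}},
        "aggs": {"by_status": {"terms": {"field": field}}},
    }


body = status_counts_query("2019-10-04T00:00:00Z", "2019-10-05T00:00:00Z")
print(json.dumps(body, indent=2))
```

The nested JSON structure is expressive for search and bucketed aggregations, but it reads quite differently from the equivalent SQL `GROUP BY`.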

Elasticsearch SQL works well for basic SQL queries but cannot currently be used to query nested fields, or to run queries that involve more complex analytics like relational JOINs. This is partly due to the underlying data model.

It is possible to use Elasticsearch for some basic event analytics, and Kibana is an excellent visual exploration tool to go with it. However, the limited support for SQL means that the data may need to be preprocessed before it can be queried effectively. Also, there is non-trivial overhead in running and maintaining the ingestion pipeline and Elasticsearch itself as it scales up. Therefore, while it suffices for basic analytics and reporting, its data model and restricted querying capabilities make it fall short of being a fully featured analytics engine for event data.

Summary:

- Excellent support for full-text search

- Highly performant for point lookups because of the inverted index

- Rich SDKs and library support

- Lacks support for JOINs

- SQL support for analytical queries is nascent and not fully featured

- High operational overhead at scale

- Estimating resources and capacity planning is difficult

Rockset

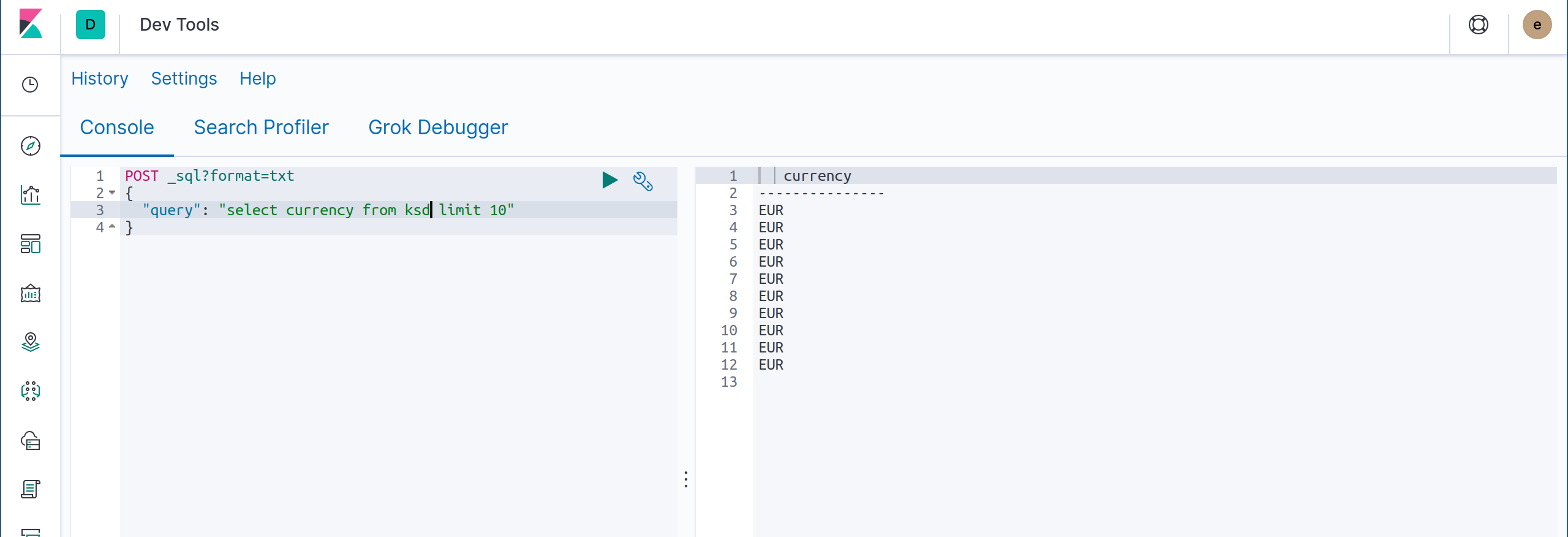

Rockset is a backend for event stream analytics that can be used to build custom tools that facilitate visualizing, understanding, and drilling down. Built on top of RocksDB, it is optimized for running search and analytical queries over tens to hundreds of terabytes of event data.

Ingesting events into Rockset can be done via integrations that require nothing more than read permissions when the data is in the cloud, or directly by writing into Rockset using the JSON Write API.

These events are processed within seconds, indexed and made available for querying. It is possible to pre-process data using field mappings and SQL-function-based transformations during ingestion time. However, no preprocessing is required for any complex event structure, since nested fields and mixed-type columns are supported natively.

Rockset supports using SQL with the ability to execute complex JOINs. There are APIs and language libraries that let custom code connect to Rockset and use SQL to build an application that can do custom drilldowns and other custom features. Using Rockset’s Converged Index™, ad-hoc queries run to completion very fast.

Making use of the ALT architecture, the system automatically scales up different tiers (ingest, storage and compute) as the size of the data or the query load grows when building a custom dashboard or application feature, thereby removing most of the need for capacity planning and operational overhead. It does not require partition or shard management, or tuning, because optimizations and scaling are automatically handled under the hood.

For fast ad-hoc analytics over real-time event data, Rockset can help by serving queries using full SQL, with connectors to tools like Tableau, Redash, Superset and Grafana, as well as programmatic access via REST APIs and SDKs in different languages.

Summary:

- Optimized for point lookups as well as complex analytical queries

- Support for full SQL including distributed JOINs

- Built-in connectors to streams and data lakes

- No capacity estimation needed; scales automatically

- Supports SDKs and libraries in different programming languages

- Low operational overhead

- Free forever for small datasets

- Offered as a managed service

Visit our Kafka solutions page for more information on building real-time dashboards and APIs on Kafka event streams.
