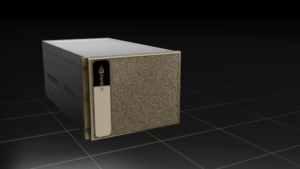

The newest DGX from Nvidia

Customers have a lot of options when it comes to building their generative AI stacks to train, fine-tune, and run AI models. In some cases, the number of options may be overwhelming. To help simplify the decision-making and reduce the all-important time it takes to train your first model, Nvidia offers DGX Cloud, which arrived on AWS last week.

Nvidia’s DGX systems are considered the gold standard for GenAI workloads, including training large language models (LLMs), fine-tuning them, and running inference workloads in production. The DGX systems are equipped with the latest GPUs, including Nvidia H100s and H200s, as well as the company’s enterprise AI stack, such as Nvidia Inference Microservices (NIMs), Riva, NeMo, and RAPIDS frameworks, among other tools.

With its DGX Cloud offering, Nvidia is giving customers the array of GenAI development and production capabilities that come with DGX systems, but delivered via the cloud. It previously offered DGX Cloud on Microsoft Azure, Google Cloud, and Oracle Cloud Infrastructure, and last week at re:Invent 2024, it announced the availability of DGX Cloud on AWS.

“When you think about DGX Cloud, we’re offering a managed service that gets you the best of the best,” said Alexis Bjorlin, the vice president of DGX Cloud at Nvidia. “It’s more of an opinionated solution to optimize the AI performance and the pipelines.”

There’s a lot that goes into building a GenAI system beyond just requisitioning Nvidia GPUs, downloading Llama-3, and throwing some data at it. There are often additional steps, like data curation, fine-tuning of a model, and synthetic data generation, that a customer must integrate into an end-to-end AI workflow and protect with guardrails, Bjorlin said. How much accuracy do you need? Do you need to shrink the models?

Nvidia has a significant amount of experience building these AI pipelines on a variety of different types of infrastructure, and it shares that experience with customers through its DGX Cloud service. That allows it to cut down on the complexity the customer is exposed to, thereby accelerating the GenAI development and deployment lifecycle, Bjorlin said.

“Getting up and running with time-to-first-train is a key metric,” Bjorlin told BigDATAwire in an interview last week at re:Invent. “How long does it take you to get up and fine tune a model and have a model that’s your own customized model that you can then choose what you do with? That’s one of the metrics we hold ourselves accountable to: developer velocity.”

But the expertise extends beyond just getting that first training or fine-tuning workload up and running. With DGX Cloud, Nvidia can also provide expert assistance in some of the finer aspects of model development, such as optimizing the training routines, Bjorlin said.

“Sometimes we’re working with customers and they want more efficient training,” she said. “So they want to move from FP16 or BF16 to FP8. Maybe it’s the quantization of the data? How do you take and train a model and shard it across the infrastructure using four types of parallelism, whether it’s data parallel, pipeline, model parallel, or expert parallel.

“We look at the model and we help architect…it to run on the infrastructure,” she continued. “All of this is fairly complex because you’re trying to do an overlap of both your compute and your comms and your memory timelines. So you’re trying to get the maximum efficiency. That’s why we’re offering outcome-based capabilities.”
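To put a rough number on the precision move Bjorlin describes, here is a back-of-the-envelope sketch in Python. It is an illustration only, not Nvidia’s tooling: the 70-billion-parameter model size and the eight-way shard count are hypothetical, and the estimate covers weights alone, ignoring activations, gradients, and optimizer state.

```python
# Storage width of one parameter in each numeric format, in bytes.
BYTES_PER_PARAM = {"FP16": 2, "BF16": 2, "FP8": 1}


def weight_memory_gb(num_params: int, fmt: str) -> float:
    """Approximate memory for the model weights only, in GB (1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[fmt] / 1e9


def per_gpu_weight_gb(num_params: int, fmt: str, shards: int) -> float:
    """Weights held by each GPU if they are split evenly across `shards` GPUs."""
    return weight_memory_gb(num_params, fmt) / shards


# Hypothetical values chosen for illustration.
NUM_PARAMS = 70_000_000_000
SHARDS = 8

for fmt in ("FP16", "BF16", "FP8"):
    total = weight_memory_gb(NUM_PARAMS, fmt)
    each = per_gpu_weight_gb(NUM_PARAMS, fmt, SHARDS)
    print(f"{fmt}: {total:.0f} GB total, {each:.2f} GB per GPU across {SHARDS} GPUs")
```

Under these assumptions, halving the bytes per parameter halves the weight footprint, which is one reason a move to FP8 can free up memory and bandwidth for the rest of the training pipeline.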

With DGX Cloud running on AWS, Nvidia is supporting H100 GPUs running on EC2 P5 instances (in the future, it will be supported on the new P6 instances that AWS announced at the conference). That will give customers of all sizes the processing oomph to train, fine-tune, and run some of the largest LLMs.

AWS has a variety of types of customers using DGX Cloud. It has a few very large companies using it to train foundation models, and a larger number of smaller firms fine-tuning pre-trained models using their own data, Bjorlin said. Nvidia needs to maintain the flexibility to accommodate all of them.

“More and more people are consuming compute through the cloud. And we need to be experts at understanding that to continuously optimize our silicon, our systems, our data center scale designs and our software stack,” she said.

One of the advantages of using DGX Cloud, aside from the time-to-first-train, is that customers can get access to a DGX system with as little as a one-month commitment. That’s helpful for AI startups, such as the members of Nvidia’s Inception program, who are still testing their AI ideas and perhaps aren’t ready to go into production.

Nvidia has 9,000 Inception partners, and having DGX Cloud available on AWS will help them succeed, Bjorlin said. “It’s a proving ground,” she said. “They get a lot of developers in a company saying, ‘I’m going to try out a few instances of DGX cloud on AWS.’”

“Nvidia is a very developer-centric company,” she added. “Developers around the world are coding and working on Nvidia systems, and so it’s an easy way for us to bring them in and have them build an AI application, and then they can go and serve on AWS.”

Related Items:

Nvidia Introduces New Blackwell GPU for Trillion-Parameter AI Models

NVIDIA Is Increasingly the Secret Sauce in AI Deployments, But You Still Need Experience

The Generative AI Future Is Now, Nvidia’s Huang Says