Large Language Models (LLMs) will be at the core of many groundbreaking AI solutions for enterprise organizations. Here are just a few examples of the benefits of using LLMs in the enterprise for both internal and external use cases:

Optimize Costs. LLMs deployed as customer-facing chatbots can respond to frequently asked questions and simple queries. This lets customer service representatives focus their time and attention on higher-value interactions, leading to a more cost-efficient service model.

Save Time. LLMs deployed as internal enterprise-specific agents can help employees find internal documentation, data, and other company information, so organizations can easily extract and summarize important internal content.

Increase Productivity. LLMs deployed as code assistants accelerate developer efficiency within an organization and help ensure that code meets standards and coding best practices.

Several LLMs are publicly available through APIs from OpenAI, Anthropic, AWS, and others, which give developers instant access to industry-leading models that are capable of performing most generalized tasks. However, these LLM endpoints often can’t be used by enterprises for several reasons:

- Private Data Sources: Enterprises often need an LLM that knows where and how to access internal company data, and users often can’t share this data with an open LLM.

- Company-specific Formatting: LLMs are sometimes required to provide a very nuanced, formatted response specific to an enterprise’s needs, or to meet an organization’s coding standards.

- Hosting Costs: Even if an organization wants to host one of these large generic models in its own data centers, it is often limited by the compute resources available for hosting these models.

The Need for Fine Tuning

Fine tuning solves these issues. Fine tuning involves another round of training for a specific model to help guide the output of LLMs to meet the specific standards of an organization. Given some example data, LLMs can quickly learn new content that wasn’t available during the initial training of the base model. The benefits of using fine-tuned models in an organization are numerous:

- Meet Coding Formats and Standards: Fine tuning an LLM helps ensure the model generates specific coding formats and standards, or provides specific actions that can be taken from customer input to an agent chatbot.

- Reduce Training Time: AI practitioners can train “adapters” for base models, which only train a specific subset of parameters within the LLM. These adapters can be swapped freely between one another on the same model, so a single model can perform different roles based on the adapters.

- Achieve Cost Benefits: Smaller models that are fine-tuned for a specific task or use case can perform just as well as or better than a “generalized” larger LLM that’s an order of magnitude more expensive to operate.
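To make the training-time point concrete, here is a minimal sketch of why an adapter trains so few parameters. It assumes a LoRA-style low-rank adapter, which is one common adapter technique; the post does not say which adapter type is meant, and the layer size and rank below are illustrative numbers, not values taken from any particular model.

```python
def full_finetune_params(d_in: int, d_out: int) -> int:
    """Trainable weights when the whole d_out x d_in matrix is updated."""
    return d_in * d_out


def low_rank_adapter_params(d_in: int, d_out: int, rank: int) -> int:
    """Trainable weights for a rank-r adapter.

    Assumes the update is factored as B @ A, where A is (rank x d_in)
    and B is (d_out x rank); the base matrix itself stays frozen.
    """
    if rank <= 0:
        raise ValueError("rank must be a positive integer")
    return rank * (d_in + d_out)


def trainable_fraction(d_in: int, d_out: int, rank: int) -> float:
    """Share of the layer's weights that the adapter actually trains."""
    return low_rank_adapter_params(d_in, d_out, rank) / full_finetune_params(d_in, d_out)


if __name__ == "__main__":
    # Illustrative (hypothetical) layer: 1024 x 1024 weights, rank-8 adapter.
    d_in, d_out, rank = 1024, 1024, 8
    print("full fine tune:", full_finetune_params(d_in, d_out))
    print("adapter only:  ", low_rank_adapter_params(d_in, d_out, rank))
    print("fraction:      ", trainable_fraction(d_in, d_out, rank))
```

Because the base weights are untouched in this scheme, several small adapters can be stored next to one base model and swapped in per task.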

Although the benefits of fine tuning are substantial, the process of preparing, training, evaluating, and deploying fine-tuned LLMs is a lengthy LLMOps workflow that every organization handles differently. This leads to compatibility issues and a lack of consistency in data and model organization.

Introducing Cloudera’s Fine Tuning Studio

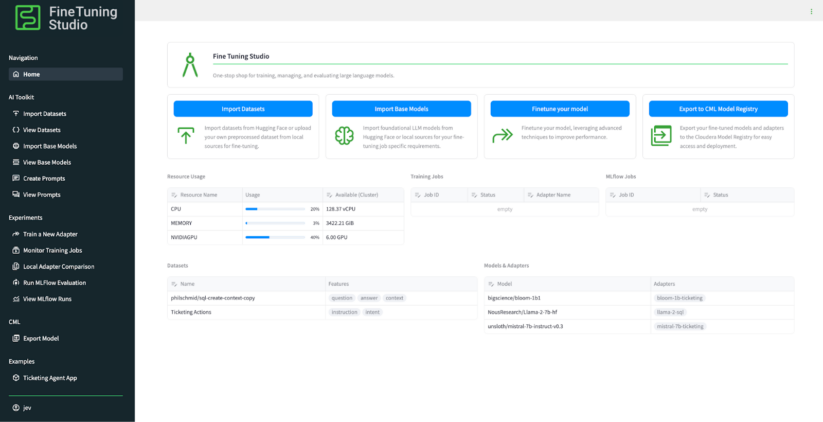

To help remedy these issues, Cloudera introduces Fine Tuning Studio, a one-stop-shop studio application that covers the entire workflow and lifecycle of fine tuning, evaluating, and deploying fine-tuned LLMs in Cloudera’s AI Workbench. Now, developers, data scientists, solution engineers, and all AI practitioners working within Cloudera’s AI ecosystem can easily organize data, models, training jobs, and evaluations related to fine tuning LLMs.

Fine Tuning Studio Key Capabilities

Once Fine Tuning Studio is deployed to an enterprise’s Cloudera AI Workbench, users gain instant access to powerful tools within Fine Tuning Studio to help organize data, test prompts, train adapters for LLMs, and evaluate the performance of these fine-tuning jobs:

- Track all your resources for fine tuning and evaluating LLMs. Fine Tuning Studio enables users to track the location of all datasets, models, and model adapters for training and evaluation. Datasets imported from Hugging Face or directly from a Cloudera AI project (such as a custom CSV), as well as models imported from multiple sources such as Hugging Face and Cloudera’s Model Registry, are all organized together and can be used throughout the tool, completely agnostic of their type or location.

- Build and test training and inference prompts. Fine Tuning Studio ships with powerful prompt templating features, so users can build and test the performance of different prompts to feed into different models and model adapters during training. Users can compare the performance of different prompts on different models.

- Train new adapters for an LLM. Fine Tuning Studio makes training new adapters for an LLM a breeze. Users can configure training jobs right within the UI, either leaving training jobs with their sensible defaults or fully configuring a training job down to custom parameters that are sent to the training job itself. The training jobs use Cloudera’s Workbench compute resources, and users can track the performance of a training job within the UI. Furthermore, Fine Tuning Studio comes with deep MLflow experiments integration, so every metric related to a fine tuning job can be viewed in Cloudera AI’s Experiments view.

- Evaluate the performance of trained LLMs. Fine Tuning Studio ships with several ways to test the performance of a trained model and compare the performance of models against one another, all within the UI. Fine Tuning Studio provides ways to quickly test the performance of a trained adapter with simple spot-checking, and also provides full MLflow-based evaluations comparing the performance of different models to one another using industry-standard metrics. The evaluation tools built into Fine Tuning Studio allow AI professionals to check the safety and performance of a model before it ever reaches production.

- Deploy trained LLMs to production environments. Fine Tuning Studio ships natively with deep integrations with Cloudera’s AI suite of tools to deploy, host, and monitor LLMs. Users can immediately export a fine-tuned model as a Cloudera Machine Learning Model endpoint, which can then be used in production-ready workflows. Users can also export fine-tuned models into Cloudera’s new Model Registry, which can later be used to deploy to Cloudera AI’s new AI Inferencing service running within a Workspace.

- No-code, low-code, and all-code solutions. Fine Tuning Studio ships with a convenient Python client that makes calls to Fine Tuning Studio’s core server. This means that data scientists can build and develop their own training scripts while still using Fine Tuning Studio’s compute and organizational capabilities. Anyone with any skill level can leverage the power of Fine Tuning Studio with or without code.

An End-to-End Example: Ticketing Support Agent

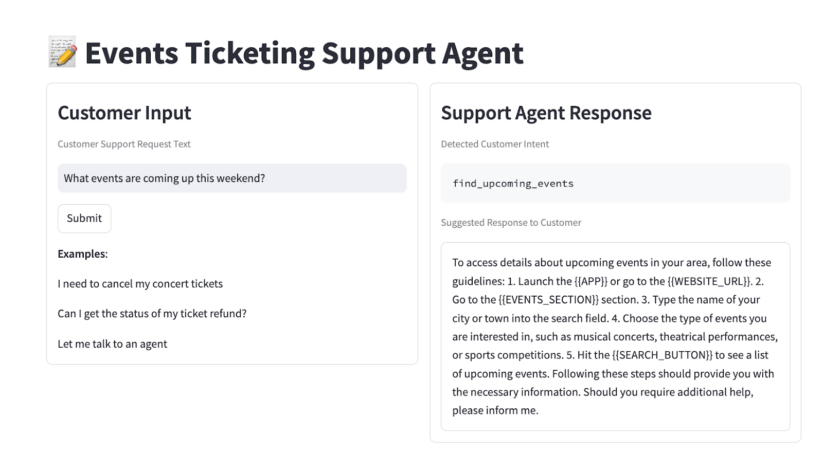

To show how easy it is for GenAI builders to build and deploy a production-ready application, let’s take a look at an end-to-end example: fine tuning an event ticketing customer support agent. The goal is to fine tune a small, cost-effective model that, based on customer input, can extract an appropriate “action” (think API call) that the downstream system should take for the customer. Given the cost constraints of hosting and infrastructure, the goal is to fine tune a model that is small enough to host on a consumer GPU and can provide the same accuracy as a larger model.
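As a rough illustration of what “extract an action, then let the downstream system act on it” could look like, here is a minimal sketch. Everything in it is an assumption made for illustration: the handler functions, the `cancel_ticket` action name, and the fallback behavior are hypothetical and are not part of Fine Tuning Studio. Only the `get_refund` action name comes from the example in this post.

```python
from typing import Callable, Dict


# Hypothetical downstream handlers; a real system would call its own APIs here.
def get_refund(customer_id: str) -> str:
    return f"refund flow started for {customer_id}"


def cancel_ticket(customer_id: str) -> str:
    return f"cancellation flow started for {customer_id}"


def route_to_human(customer_id: str) -> str:
    return f"routed {customer_id} to a human agent"


# Map of action names the model is expected to emit to their handlers.
ACTIONS: Dict[str, Callable[[str], str]] = {
    "get_refund": get_refund,
    "cancel_ticket": cancel_ticket,
}


def dispatch(model_output: str, customer_id: str) -> str:
    """Normalize the model's text output and run the matching handler.

    Unknown or malformed actions fall back to a human agent instead of failing.
    """
    action = model_output.strip().lower()
    handler = ACTIONS.get(action, route_to_human)
    return handler(customer_id)


if __name__ == "__main__":
    print(dispatch(" get_refund\n", "c-42"))
    print(dispatch("something unexpected", "c-43"))
```

A fixed table of known actions is one reason a small fine-tuned model can be enough for this kind of task: the model only has to choose among a limited set of labels.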

Data Preparation. For this example, we will use the bitext/Bitext-events-ticketing-llm-chatbot-training-dataset dataset available on Hugging Face, which contains pairs of customer input and desired intent/action output for a variety of customer inputs. We can import this dataset on the Import Datasets page.

Model Selection. To keep our inference footprint small, we will use the bigscience/bloom-1b1 model as our base model, which is also available on Hugging Face. We can import this model directly from the Import Base Models page. The goal is to train an adapter for this base model that gives it better predictive capabilities for our specific dataset.

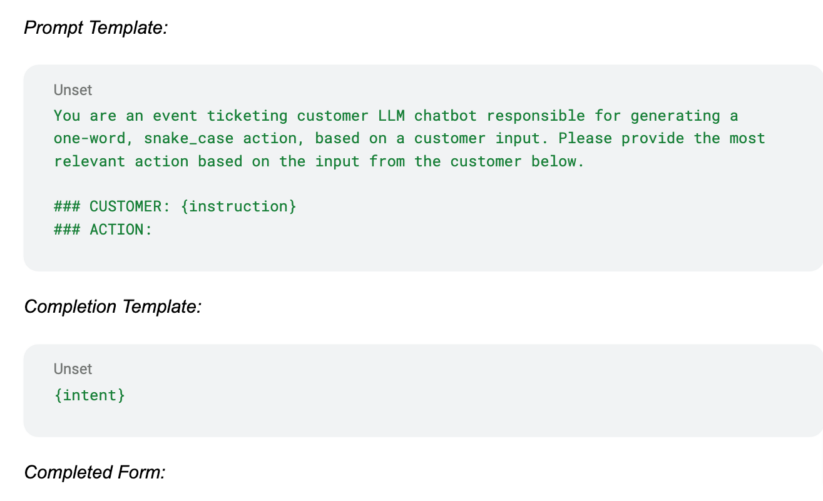

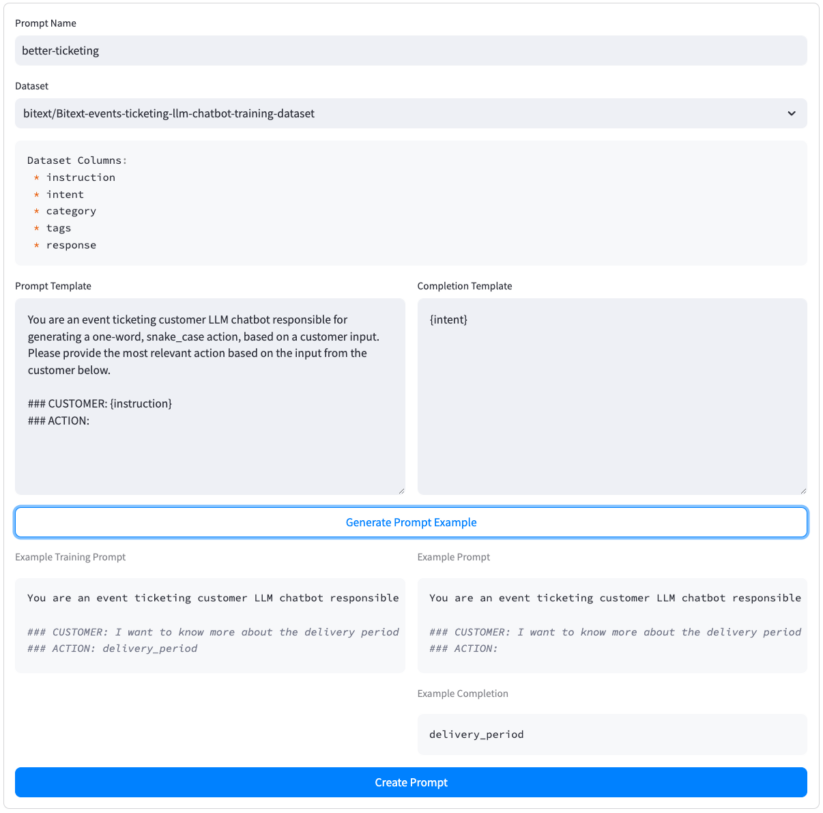

Creating a Training Prompt. Next, we’ll create a prompt for both training and inference. We can use this prompt to give the model more context on possible selections. Let’s name our prompt better-ticketing and use our bitext dataset as the base dataset for the prompt. The Create Prompts page enables us to create a prompt “template” based on the features available in the dataset. We can then test the prompt against the dataset to make sure everything is working properly. Once everything looks good, we hit Create Prompt, which activates our prompt for use throughout the tool. Our prompt template uses the instruction and intent fields from our dataset.
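For readers who want a feel for how a template built on the instruction and intent fields might behave, here is a minimal sketch in plain Python. The template wording, the function names, and the sample row are all hypothetical; this is not the better-ticketing template used in the post, and it does not use Fine Tuning Studio's own templating syntax.

```python
# Hypothetical prompt wording; only the field names `instruction` and `intent`
# come from the post.
PROMPT_PREFIX = (
    "You are an event ticketing customer support agent.\n"
    "Customer input: {instruction}\n"
    "Action:"
)

# During training the expected answer is appended so the model learns to emit it.
TRAINING_TEMPLATE = PROMPT_PREFIX + " {intent}"


def render_training_prompt(row: dict) -> str:
    """Fill the template with one dataset row, including the target intent."""
    return TRAINING_TEMPLATE.format(instruction=row["instruction"], intent=row["intent"])


def render_inference_prompt(instruction: str) -> str:
    """Fill the template without the answer, leaving the action for the model."""
    return PROMPT_PREFIX.format(instruction=instruction)


if __name__ == "__main__":
    # Hypothetical row shaped like the dataset's instruction/intent pairs.
    row = {
        "instruction": "how do I get my money back for a cancelled show",
        "intent": "get_refund",
    }
    print(render_training_prompt(row))
    print("---")
    print(render_inference_prompt(row["instruction"]))
```

Sharing one prefix between training and inference keeps the two prompts aligned, so the model sees at inference time the same framing it was trained on.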

Train a New Adapter. With a dataset, model, and prompt selected, let’s train a new adapter for our bloom-1b1 model, which can more accurately handle customer requests. On the Train a New Adapter page, we can fill out all relevant fields, including the name of our new adapter, the dataset to train on, and the training prompt to use. For this example, we had two L40S GPUs available for training, so we chose the Multi Node training type. We trained for 2 epochs on 90% of the dataset, leaving 10% available for evaluation and testing.
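The 90/10 split can be pictured with a short sketch. This is only an illustration of a seeded shuffle-and-cut split; how Fine Tuning Studio actually partitions the dataset is not described in this post, and the function name and seed are assumptions.

```python
import random
from typing import List, Sequence, Tuple, TypeVar

T = TypeVar("T")


def train_eval_split(
    rows: Sequence[T], train_fraction: float = 0.9, seed: int = 42
) -> Tuple[List[T], List[T]]:
    """Shuffle rows reproducibly, then cut them into train and eval portions."""
    if not 0.0 < train_fraction < 1.0:
        raise ValueError("train_fraction must be strictly between 0 and 1")
    shuffled = list(rows)
    # A dedicated Random instance keeps the split reproducible for a given seed
    # without touching the global random state.
    random.Random(seed).shuffle(shuffled)
    cut = round(len(shuffled) * train_fraction)
    return shuffled[:cut], shuffled[cut:]


if __name__ == "__main__":
    rows = list(range(100))  # stand-in for dataset rows
    train_rows, eval_rows = train_eval_split(rows)
    print(len(train_rows), len(eval_rows))
```

Fixing the seed matters for the later evaluation step: the held-out rows must be the same ones that were excluded from training, otherwise evaluation scores would be inflated.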

Monitor the Training Job. On the Monitor Training Jobs page we can track the status of our training job, and also follow the deep link to the Cloudera Machine Learning Job to view log outputs directly. With two L40S GPUs and 2 epochs of our bitext dataset, training completed in only 10 minutes.

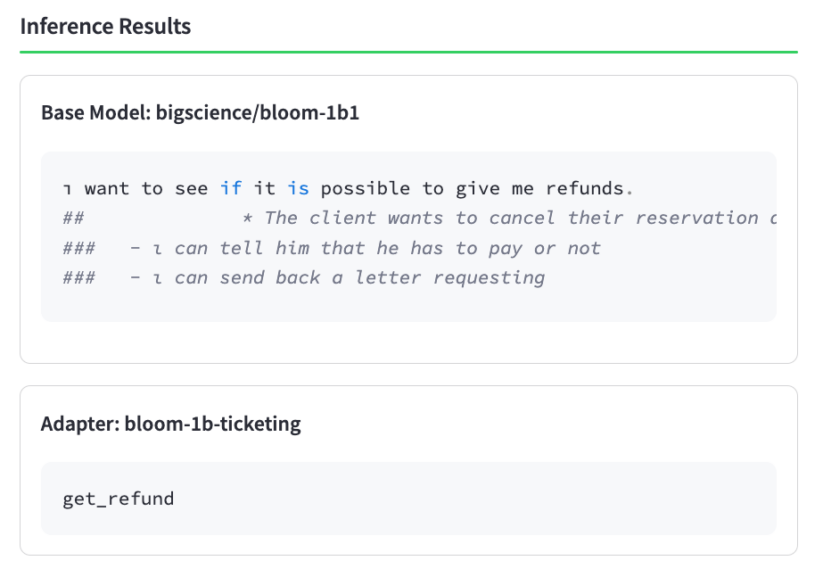

Check Adapter Performance. Once the training job completes, it’s helpful to “spot check” the performance of the adapter to make sure that it was trained successfully. Fine Tuning Studio offers a Local Adapter Comparison page to quickly compare the performance of a prompt between a base model and a trained adapter. Let’s try a simple customer input, pulled directly from the bitext dataset: “i have to get a refund i need assistance”, where the corresponding desired output action is get_refund. Looking at the output of the base model compared to the trained adapter, it’s clear that training had a positive impact on our adapter!

Evaluate the Adapter. Now that we’ve performed a spot check to make sure training completed successfully, let’s take a deeper look into the performance of the adapter. We can evaluate the performance against the “test” portion of the dataset from the Run MLFlow Evaluation page. This provides a more in-depth evaluation of any selected models and adapters. For this example, we will compare the performance of 1) just the bigscience/bloom-1b1 base model, 2) the same base model with our newly trained better-ticketing adapter activated, and finally 3) a larger mistral-7b-instruct model.

As we can see, the rougeL metric (which scores the longest common subsequence shared by the generated and reference text, a softer criterion than an exact match) of the 1B model adapter is significantly higher than the same metric for a 7B model that has not been fine tuned. As simple as that, we trained an adapter for a small, cost-effective model that outperforms a significantly larger model. Even though the larger 7B model may perform better on generalized tasks, the non-fine-tuned 7B model has not been trained on the available “actions” that the model can take given a specific customer input, and therefore would not perform as well as our fine-tuned 1B model in a production environment.
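To give a sense of what a ROUGE-L style score measures, here is a minimal sketch that computes an F1 score from the longest common subsequence (LCS) of two token lists. It is a simplification written for illustration: it tokenizes on whitespace and lowercases the text, whereas library implementations, including whatever the MLflow-based evaluation uses, may tokenize and normalize differently and so produce different numbers.

```python
from typing import List


def lcs_length(a: List[str], b: List[str]) -> int:
    """Length of the longest common subsequence, via row-by-row dynamic programming."""
    prev = [0] * (len(b) + 1)
    for token_a in a:
        curr = [0]
        for j, token_b in enumerate(b, start=1):
            if token_a == token_b:
                curr.append(prev[j - 1] + 1)
            else:
                curr.append(max(prev[j], curr[j - 1]))
        prev = curr
    return prev[-1]


def rouge_l_f1(prediction: str, reference: str) -> float:
    """LCS-based F1 between a model output and the expected text."""
    pred_tokens = prediction.lower().split()
    ref_tokens = reference.lower().split()
    if not pred_tokens or not ref_tokens:
        return 0.0
    lcs = lcs_length(pred_tokens, ref_tokens)
    if lcs == 0:
        return 0.0
    precision = lcs / len(pred_tokens)
    recall = lcs / len(ref_tokens)
    return 2 * precision * recall / (precision + recall)


if __name__ == "__main__":
    print(rouge_l_f1("get_refund", "get_refund"))
    print(rouge_l_f1("please get_refund now", "get_refund"))
    print(rouge_l_f1("cancel_ticket", "get_refund"))
```

For single-label outputs like the actions in this example, the score rewards a model that emits exactly the expected action and penalizes extra or unrelated text, which is why a model never trained on the action labels tends to score low.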

Accelerating Fine Tuned LLMs to Production

As we saw, Fine Tuning Studio enables anyone of any skill level to train a model for any enterprise-specific use case. Now, customers can incorporate cost-effective, high-performance, fine-tuned LLMs into their production-ready AI workflows more easily than ever, and expose models to customers while ensuring safety and compliance. After training a model, users can use the Export Model feature to export trained adapters as a Cloudera Machine Learning model endpoint, which is a production-ready model hosting service available to Cloudera AI (formerly known as Cloudera Machine Learning) customers. Fine Tuning Studio ships with a powerful example application showing how easy it is to incorporate a model that was trained within Fine Tuning Studio into a full-fledged production AI application.

How can I Get Started with Fine Tuning Studio?

Cloudera’s Fine Tuning Studio is available to Cloudera AI customers as an Accelerator for Machine Learning Projects (AMP), right from Cloudera’s AMP catalog. Install and try Fine Tuning Studio by following the instructions for deploying this AMP right from the workspace.

Want to see what’s under the hood? For advanced users, contributors, or other users who want to view or modify Fine Tuning Studio, the project is hosted on Cloudera’s GitHub here: https://github.com/cloudera/CML_AMP_LLM_Fine_Tuning_Studio.

Get Started Today!

Cloudera is excited to be working at the forefront of training, evaluating, and deploying LLMs to customers in production-ready environments. Fine Tuning Studio is under continuous development, and the team is eager to continue providing customers with a streamlined approach to fine tune any model, on any data, for any enterprise application. Get started today on your fine tuning needs; Cloudera AI’s team is ready to help make your enterprise’s vision for AI-ready applications a reality.