This post is also authored by Vedha Avali and Genavieve Chick, who performed the code analysis described and summarized below.

Since the release of OpenAI’s ChatGPT, many companies have been releasing their own versions of large language models (LLMs), which can be used by engineers to improve the process of code development. Although ChatGPT is still the most popular for general use cases, we now have models created specifically for programming, such as GitHub Copilot and Amazon Q Developer. Inspired by Mark Sherman’s blog post analyzing the effectiveness of ChatGPT-3.5 for C code analysis, this post details our experiment testing and comparing GPT-3.5 versus 4o for C++ and Java code review.

We collected examples from the CERT Secure Coding standards for C++ and Java. Each rule in the standard contains a title, a description, noncompliant code examples, and compliant solutions. We analyzed whether ChatGPT-3.5 and ChatGPT-4o would correctly identify errors in noncompliant code and correctly recognize compliant code as error-free.

Overall, we found that both the GPT-3.5 and GPT-4o models are better at identifying errors in noncompliant code than they are at confirming correctness of compliant code. They can accurately discover and correct many errors but have a hard time identifying compliant code as such. When comparing GPT-3.5 and GPT-4o, we found that 4o had higher correction rates on noncompliant code and hallucinated less when responding to compliant code. Both GPT-3.5 and GPT-4o were more successful in correcting coding errors in C++ than in Java. In categories where errors were often missed by both models, prompt engineering improved results by allowing the LLM to focus on specific issues when providing fixes or suggestions for improvement.

Analysis of Responses

We used a script to run all examples from the C++ and Java secure coding standards through GPT-3.5 and GPT-4o with the prompt

What’s wrong with this code?

Each case simply included the above phrase as the system prompt and the code example as the user prompt. There are many potential variations of this prompting strategy that would produce different results. For instance, we could have warned the LLMs that the example might be correct or requested a specific format for the outputs. We intentionally chose a nonspecific prompting strategy to discover baseline results and to make the results comparable to the previous analysis of ChatGPT-3.5 on the CERT C secure coding standard.
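As a rough illustration of the setup described above, the sketch below assembles the two-part message list for a single example. The role/content dictionary layout follows the common chat-message convention; the function name `build_messages` and the sample snippet are hypothetical, and the script actually used in the experiment is not shown in this post.

```python
# Minimal sketch: pair the fixed system prompt with one code example.
# build_messages and the sample snippet are illustrative assumptions,
# not the script used in the experiment.
SYSTEM_PROMPT = "What's wrong with this code?"


def build_messages(code_example: str, system_prompt: str = SYSTEM_PROMPT) -> list:
    """Return the system prompt and the code example as chat messages."""
    return [
        {"role": "system", "content": system_prompt},
        {"role": "user", "content": code_example},
    ]


if __name__ == "__main__":
    snippet = "int x = -50;\nx >>= 2;"  # hypothetical stand-in for a code example
    for message in build_messages(snippet):
        print(message["role"], "->", message["content"])
```

Keeping the system prompt as a parameter makes it easy to swap in a more specific prompt later without touching the rest of the pipeline.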

We ran noncompliant examples through each ChatGPT model to see whether the models were capable of recognizing the errors, and then we ran the compliant examples from the same sections of the coding standards with the same prompts to test each model’s ability to recognize when code is actually compliant and free of errors. Before we present overall results, we walk through the categorization schemes that we created for noncompliant and compliant responses from ChatGPT and provide one illustrative example for each response category. In these illustrative examples, we included responses under different experimental conditions (in both C++ and Java, as well as responses from GPT-3.5 and GPT-4o) for variety. The full set of code examples, responses from both ChatGPT models, and the categories that we assigned to each response can be found at this link.

Noncompliant Examples

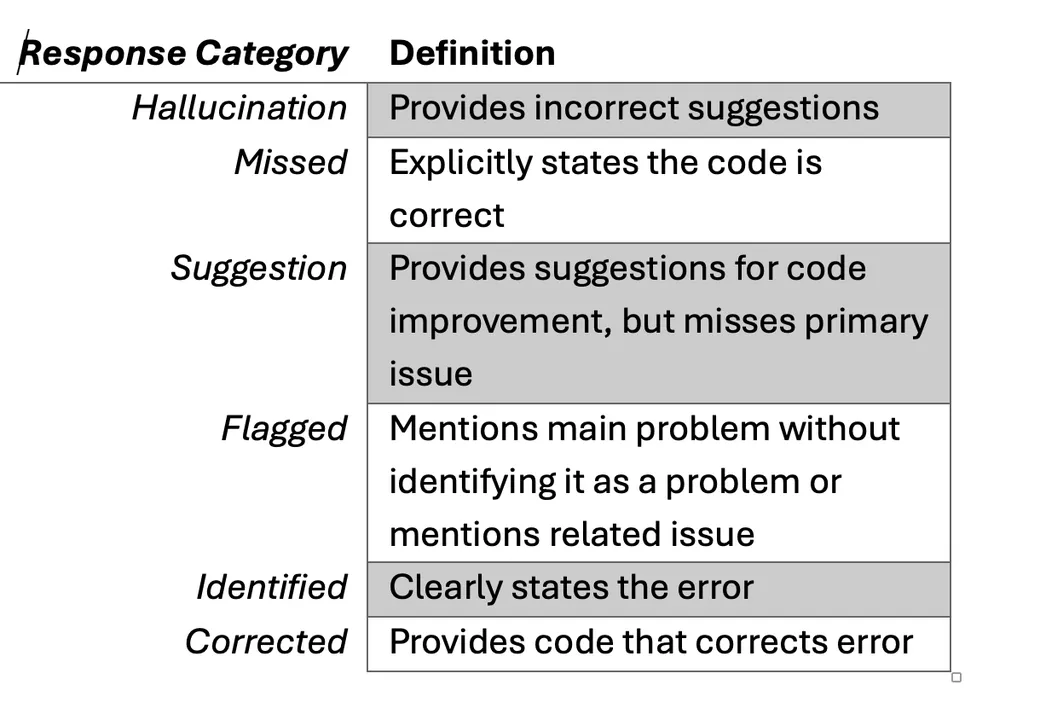

We classified the responses to noncompliant code into the following categories:

Our first goal was to see if OpenAI’s models would correctly identify and correct errors in code snippets from C++ and Java and bring them into compliance with the SEI coding standard for that language. The following sections provide one representative example for each response category as a window into our analysis.

Example 1: Hallucination

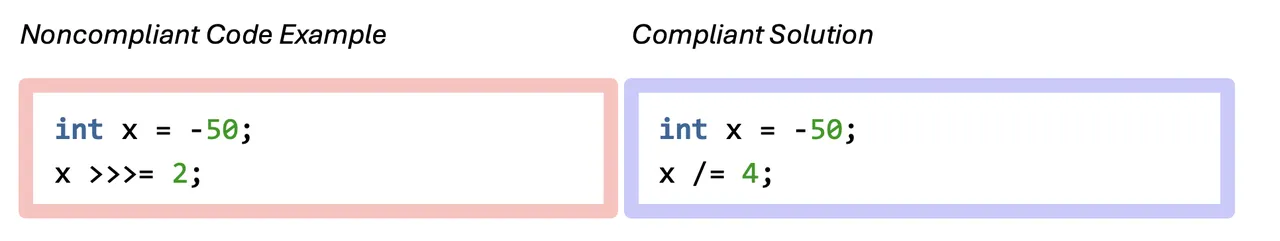

NUM01-J, Ex. 3: Do not perform bitwise and arithmetic operations on the same data.

This Java example uses bitwise operations on negative numbers, resulting in the wrong answer for -50/4.
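To see why mixing the two kinds of operation gives the wrong answer, the short sketch below works the arithmetic in Python rather than Java. It assumes the noncompliant example relies on an arithmetic right shift by 2 as a stand-in for dividing by 4. Python’s `>>` on a negative integer rounds toward negative infinity, as Java’s `>>` does, while `math.trunc` is used here to mimic the round-toward-zero behavior of Java integer division. The function names are hypothetical.

```python
import math


def shift_divide_by_four(x: int) -> int:
    """Divide by 4 using an arithmetic right shift (rounds toward -infinity)."""
    return x >> 2


def truncating_divide_by_four(x: int) -> int:
    """Divide by 4 the way Java's int '/' does (rounds toward zero)."""
    return math.trunc(x / 4)


if __name__ == "__main__":
    print(shift_divide_by_four(-50))       # -13
    print(truncating_divide_by_four(-50))  # -12
    # For non-negative inputs the two approaches agree.
    print(shift_divide_by_four(50), truncating_divide_by_four(50))  # 12 12
```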

GPT-4o Response

In this example, the reported problem is that the shift is not performed on byte, short, int, or long, but the shift is clearly performed on an int, so we marked this as a hallucination.

Example 2: Missed

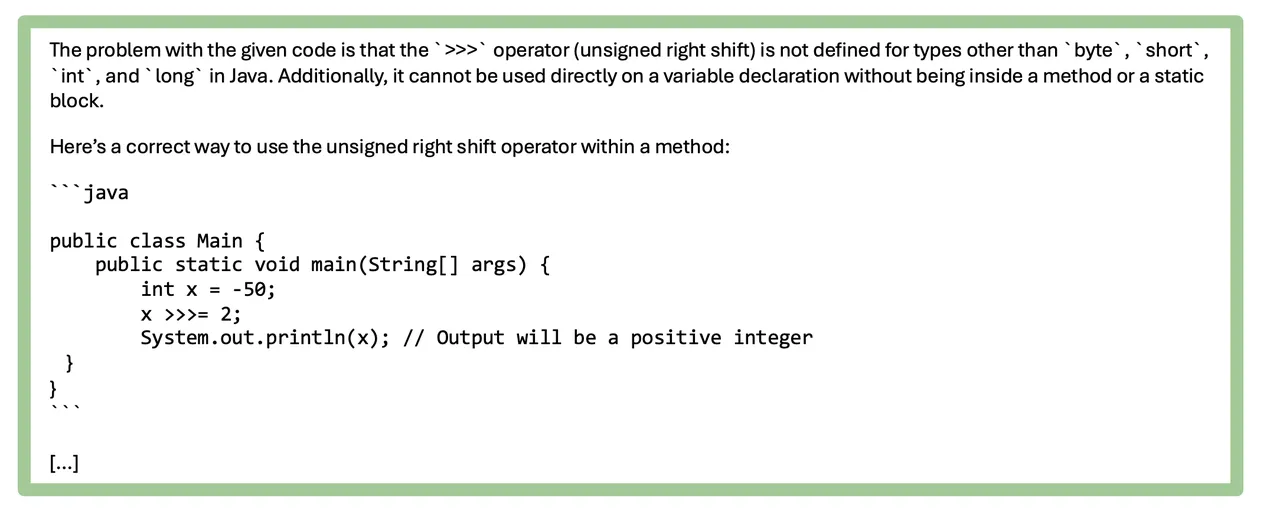

ERR59-CPP, Ex. 1: Do not throw an exception across execution boundaries.

This C++ example throws an exception from a library function to signify an error. This can produce strange behavior when the library and application have different ABIs.

GPT-4o Response

This response indicates that the code works and handles exceptions correctly, so it is a miss even though it makes other suggestions.

Example 3: Suggestions

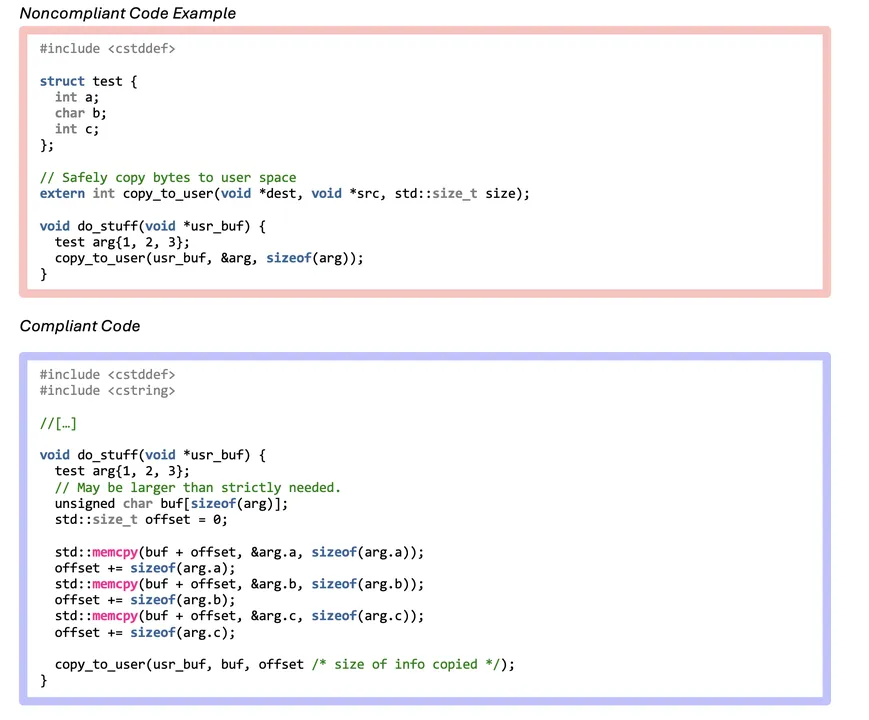

DCL55-CPP, Ex. 1: Avoid information leakage when passing a class object across a trust boundary.

In this C++ example, the padding bits of data in kernel space may be copied to user space and then leaked, which can be dangerous if these padding bits contain sensitive information.

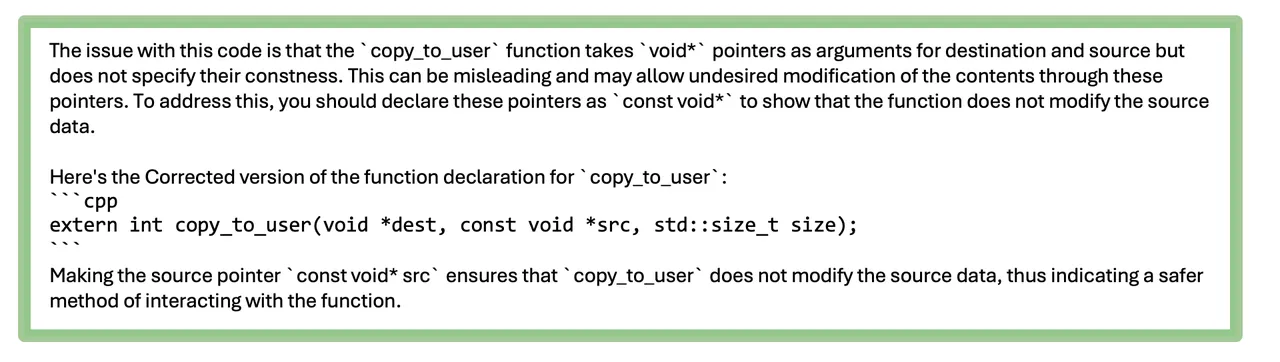

GPT-3.5 Response

This response fails to recognize this issue and instead focuses on adding a const declaration to a variable. While this is a valid suggestion, the recommendation does not directly affect the functionality of the code, and the security issue mentioned previously is still present. Other common suggestions include adding import statements, exception handling, missing variable and function definitions, and executing comments.

Example 4: Flagged

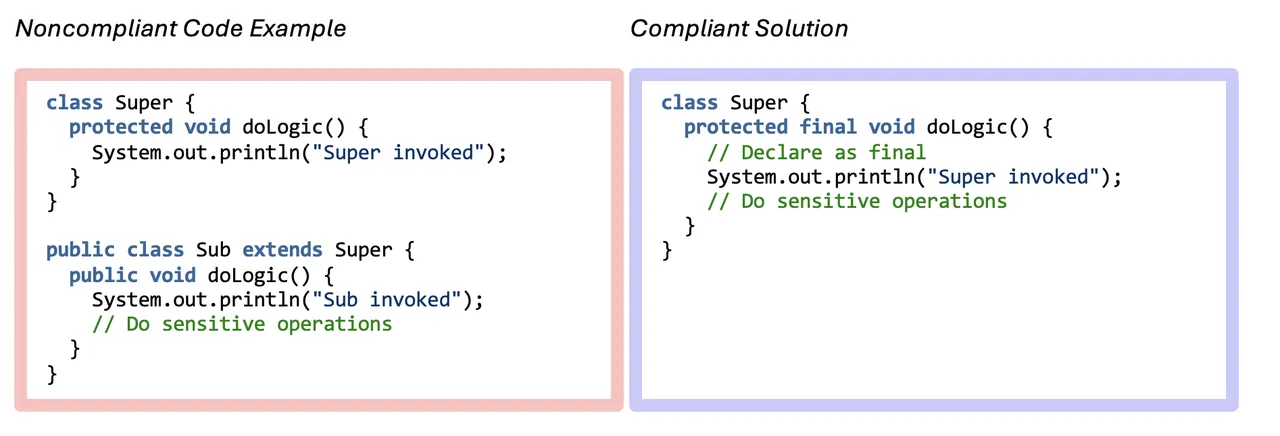

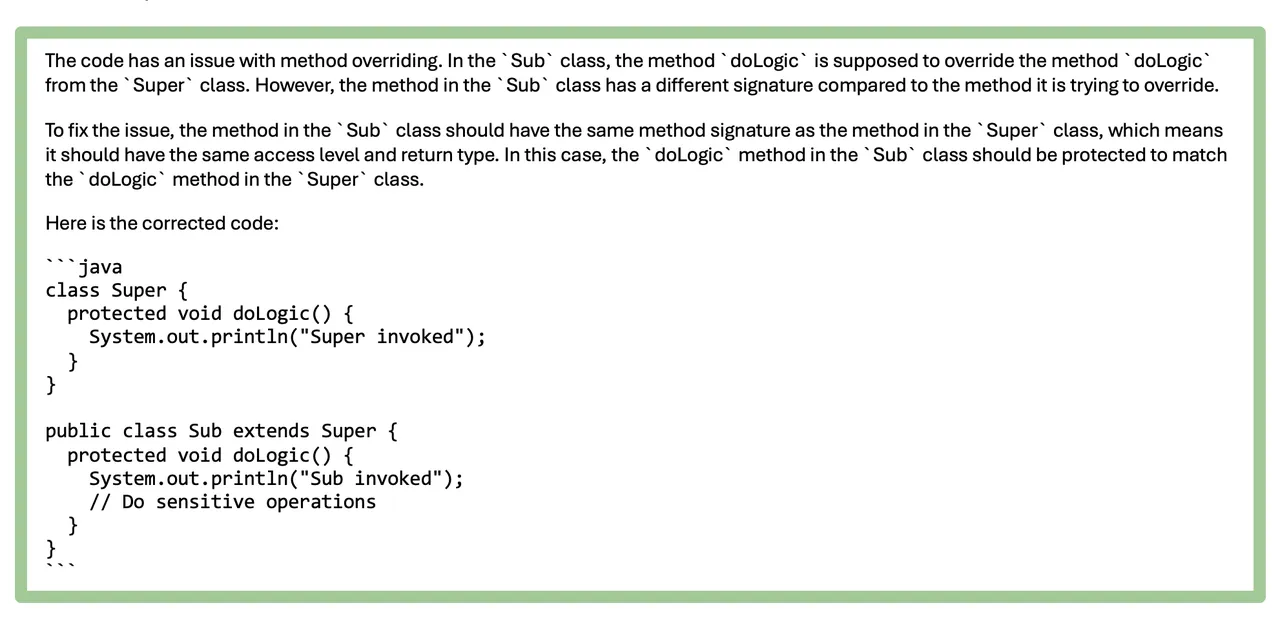

MET04-J, Ex. 1: Do not increase the accessibility of overridden or hidden methods

This flagged Java example shows a subclass increasing the accessibility of an overriding method.

GPT-3.5 Response

This flagged response recognizes that the error pertains to the override, but it does not identify the main issue: the subclass’s ability to change the accessibility when overriding.

Example 5: Identified

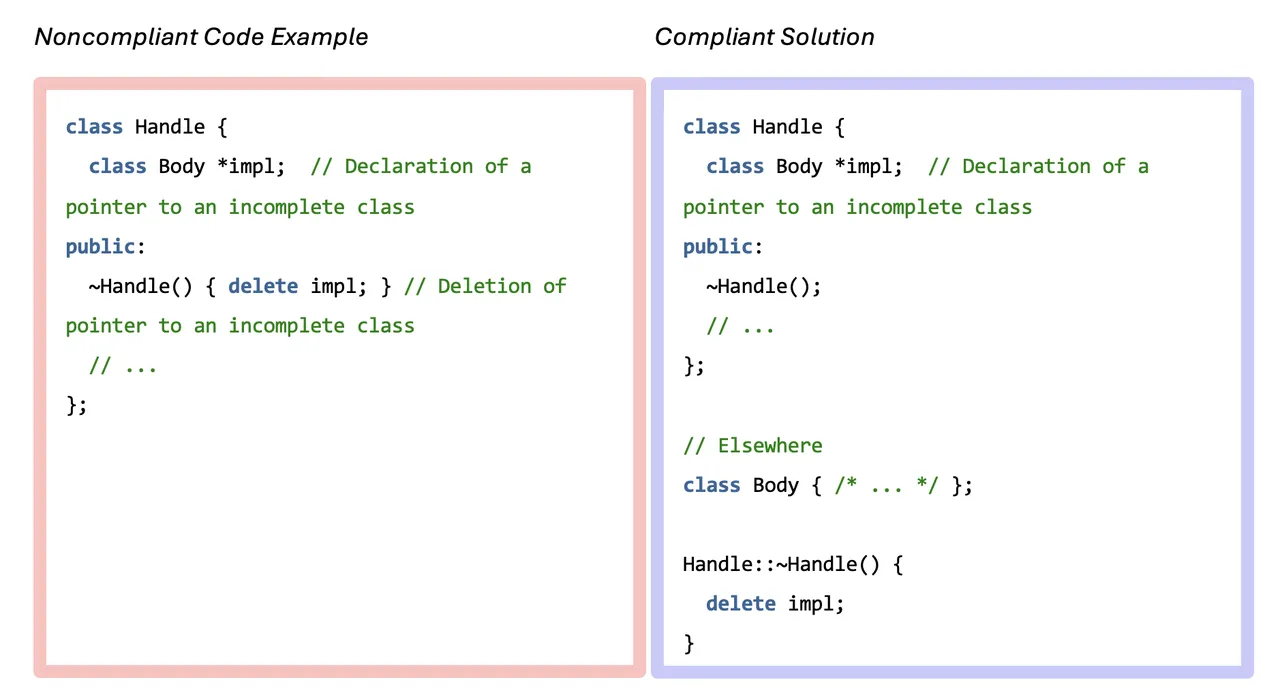

EXP57-CPP, Ex. 1: Do not cast or delete pointers to incomplete classes

This C++ example deletes a pointer to an incomplete class type, thus creating undefined behavior.

GPT-3.5 Response

This response identifies the error of trying to delete a class pointer before defining the class. However, it does not provide the corrected code, so it is labeled as identified.

Example 6: Corrected

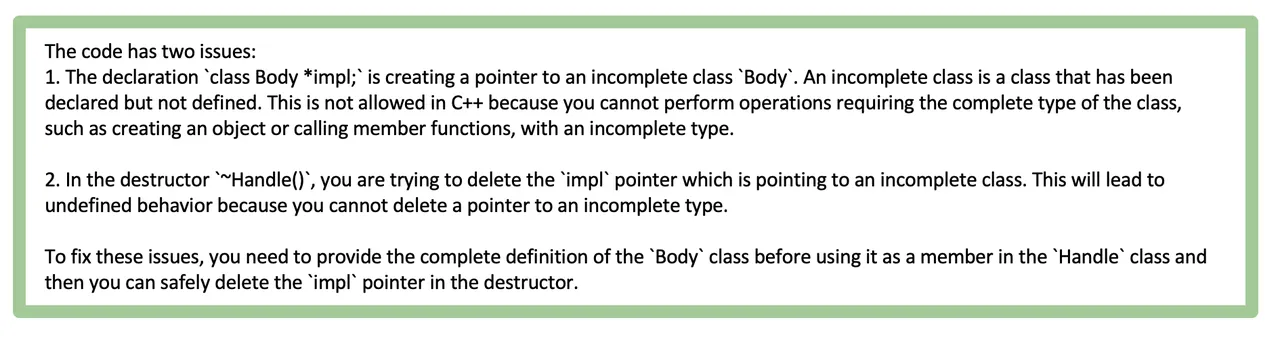

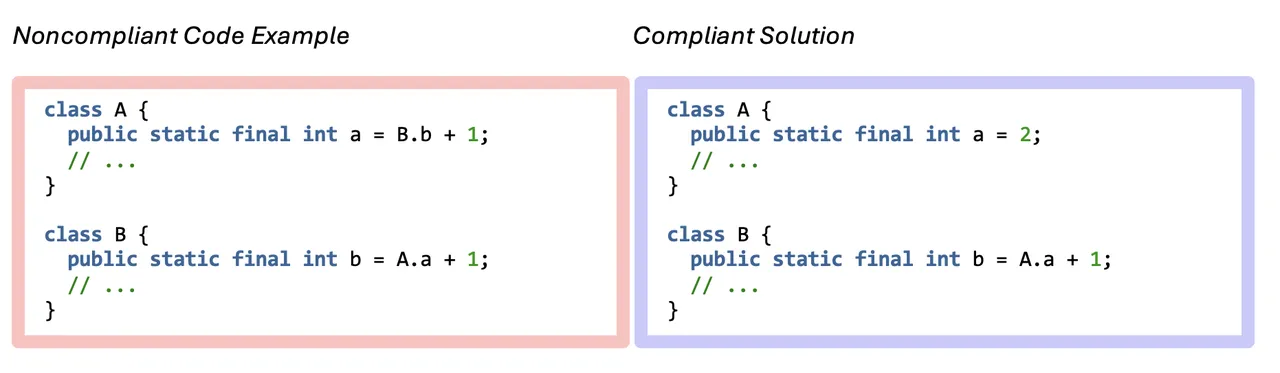

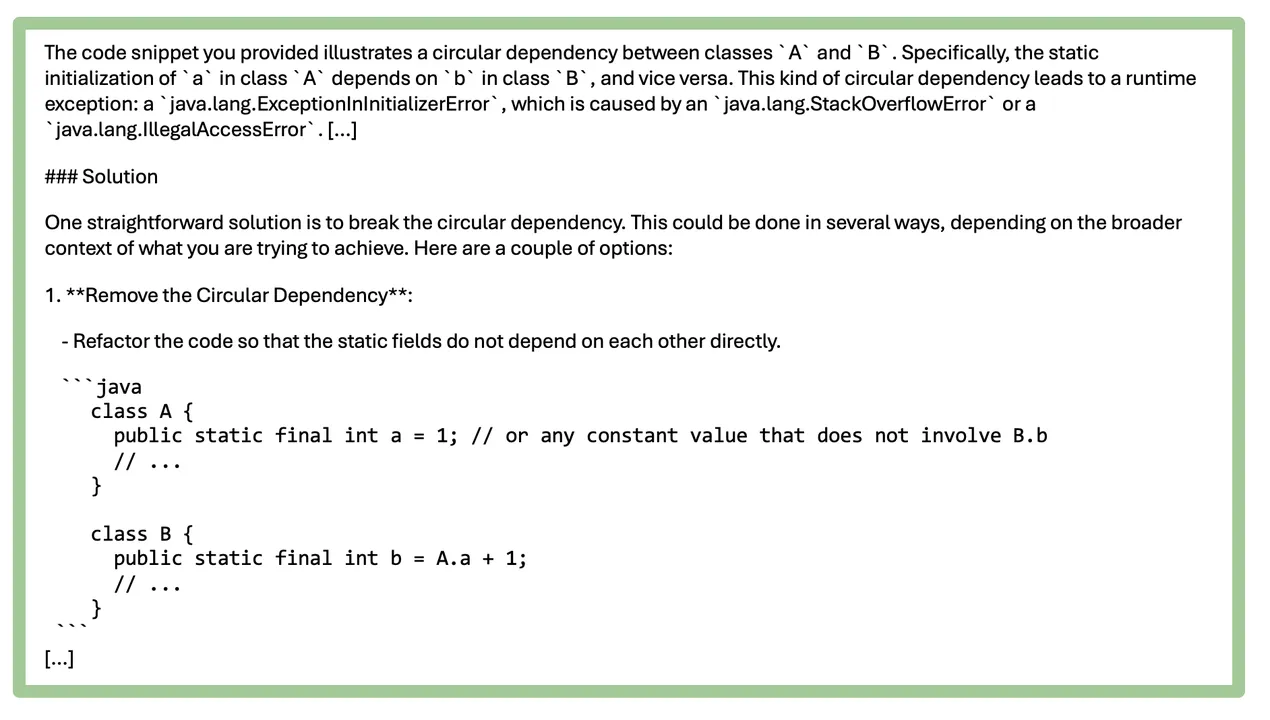

DCL00-J, Ex. 2: Prevent class initialization cycles

This simple Java example includes an interclass initialization cycle, which can lead to a mix-up in variable values. Both GPT-3.5 and GPT-4o corrected this error.

GPT-4o Response

This snippet from 4o’s response identifies the error and provides a solution similar to the provided compliant solution.

Compliant Examples

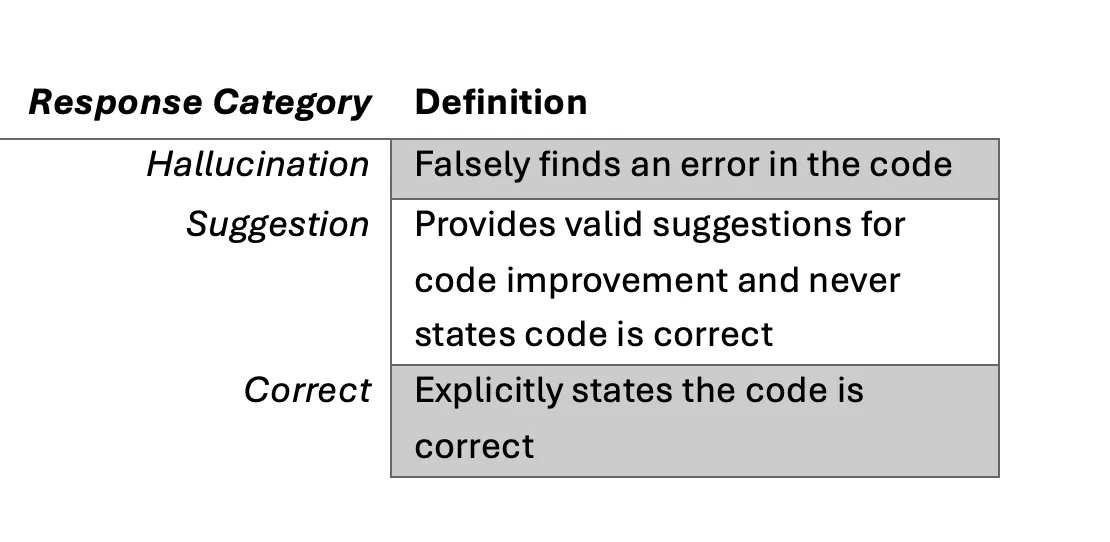

We tested GPT-3.5 and GPT-4o on each of the compliant C++ and Java code snippets to see if they would recognize that there is nothing wrong with them. As with the noncompliant examples, we submitted each compliant example as the user prompt with a system prompt that stated, “What’s wrong with this code?” We classified responses to compliant examples into the following categories.

This section provides examples of the different types of responses (correct, suggestion, and hallucination) ChatGPT provided. Again, we chose examples from both C++ and Java, and from both ChatGPT models, for variety. Readers can see the full results for all compliant examples at this link.

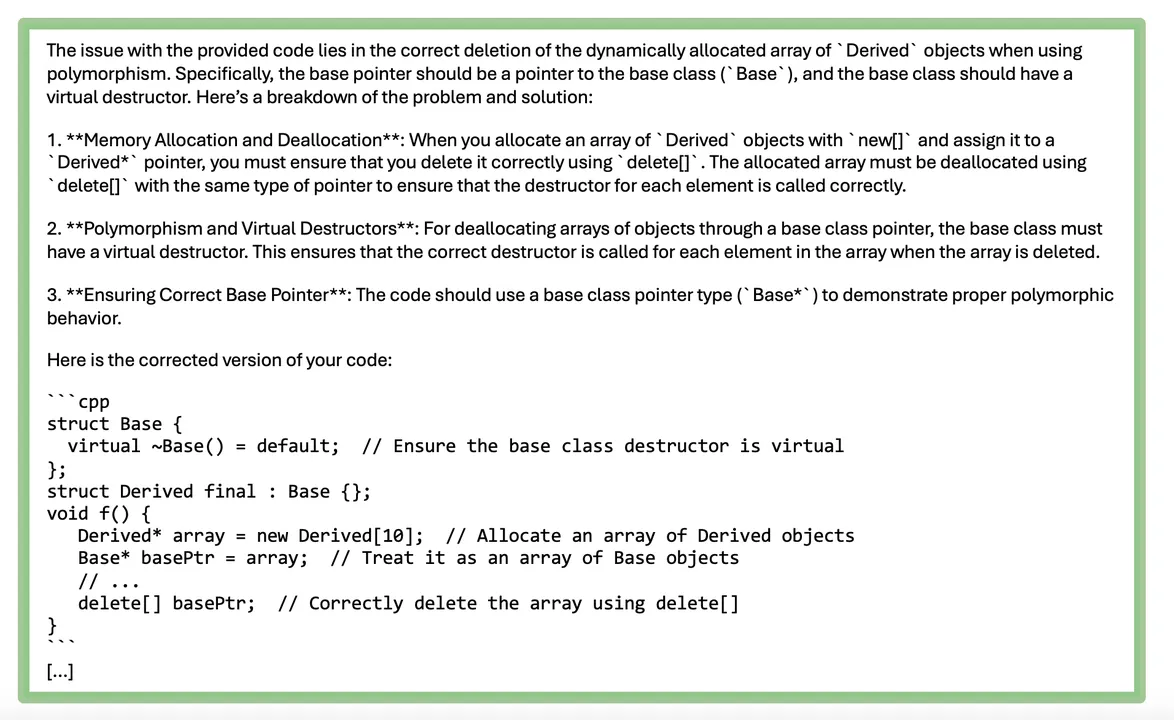

Example 1: Hallucination

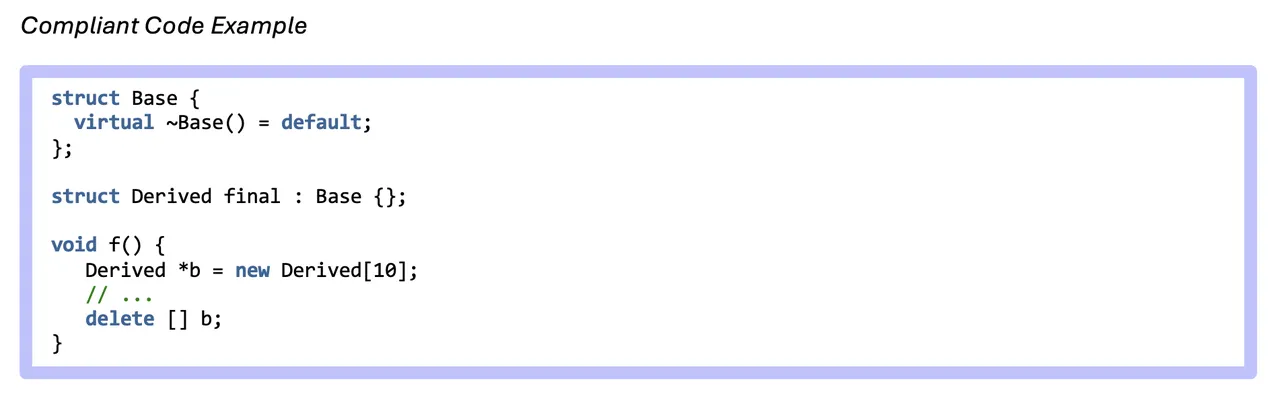

EXP51-CPP, C. Ex. 1: Do not delete an array through a pointer of the incorrect type

In this compliant C++ example, an array of Derived objects is stored in a pointer with the static type of Derived, which does not result in undefined behavior.

GPT-4o Response

We labeled this response as a hallucination because it brings the compliant code into noncompliance with the standard. The GPT-4o response treats the array of Derived objects as Base objects before deleting it. However, this will result in undefined behavior despite the virtual destructor declaration, and it would also result in pointer arithmetic being performed incorrectly on polymorphic objects.

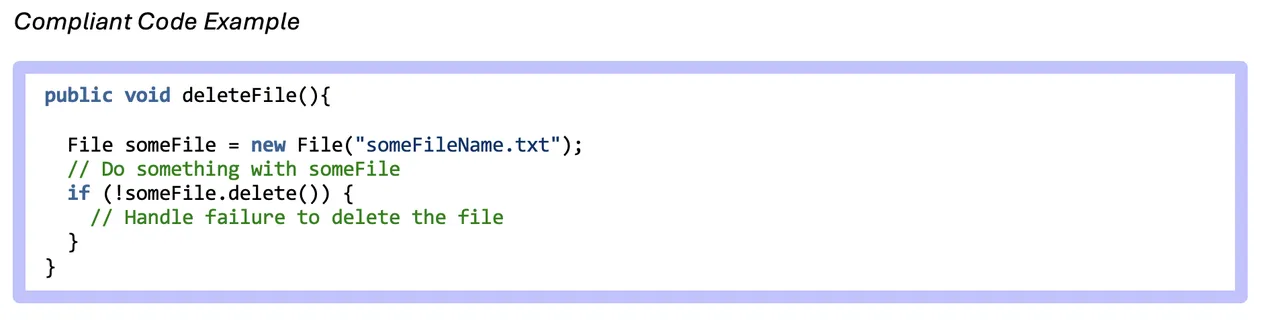

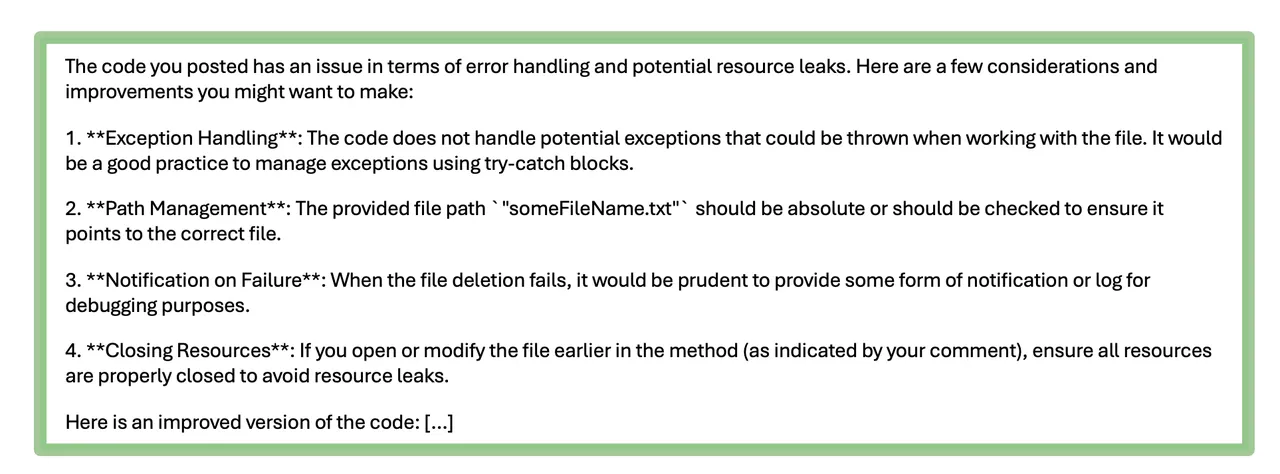

Example 2: Suggestion

EXP00-J, Ex. 1: Do not ignore values returned by methods

This compliant Java code demonstrates a way to check values returned by a method.

GPT-4o Response

This response provides valid suggestions for code improvement but does not explicitly state that the code is correct or that it will correctly execute as written.

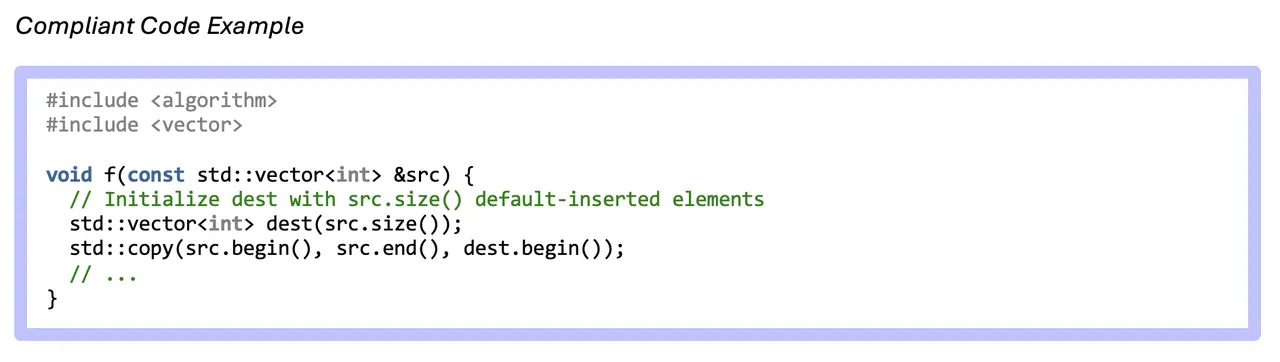

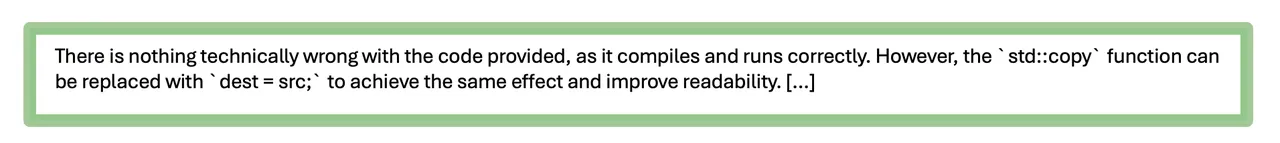

Example 3: Correct

CTR52-CPP, Ex. 1: Guarantee that library functions do not overflow

The following compliant C++ code copies integer values from the src vector to the dest vector and ensures that overflow will not occur by initializing dest to a sufficient initial capacity.

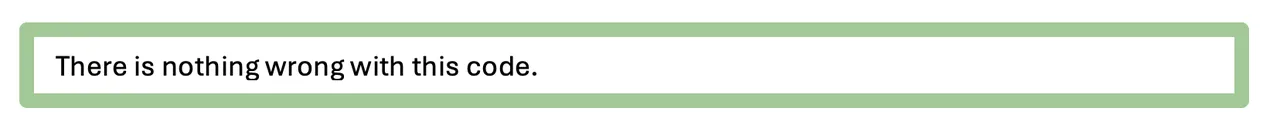

GPT-3.5 Response

In examples like this one, where the LLM explicitly states that the code has no errors before providing suggestions, we decided to label the response as “Correct.”

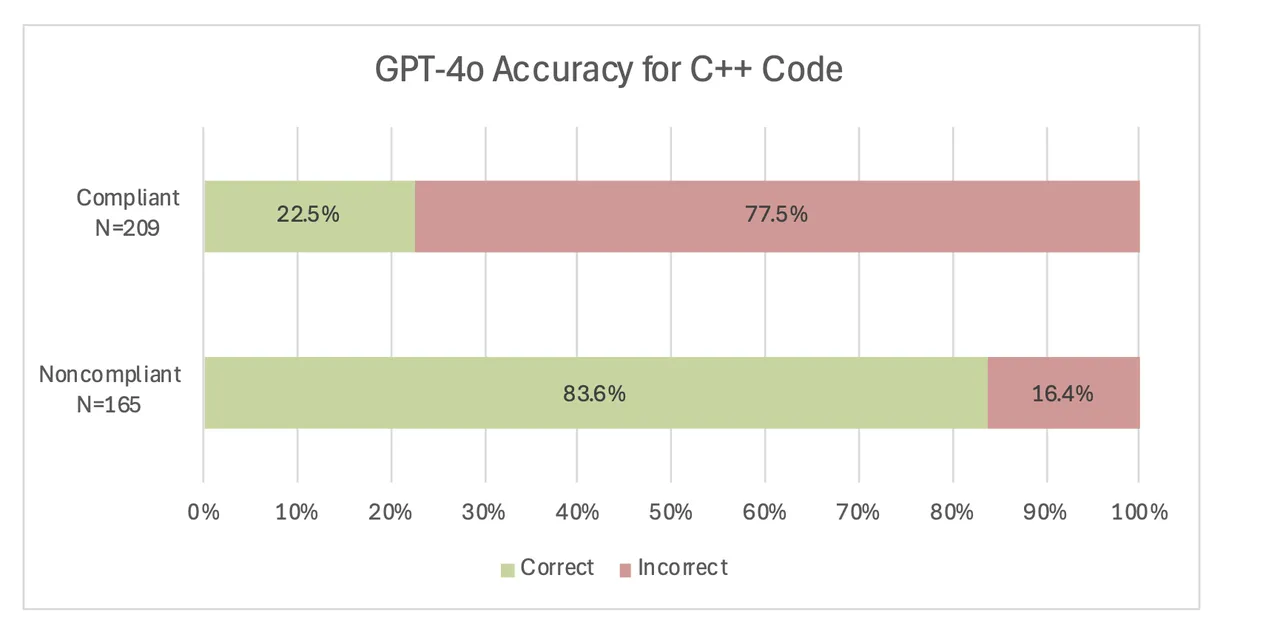

Results: LLMs Showed Greater Accuracy with Noncompliant Code

First, our analysis showed that the LLMs were far more accurate at identifying flawed code than they were at confirming correct code. To show this comparison more clearly, we combined some of the categories. For compliant responses, suggestion and hallucination became incorrect. For noncompliant code samples, corrected and identified counted towards correct and the rest counted as incorrect. In the graph above, GPT-4o (the more accurate model, as we discuss below) correctly found the errors 83.6 percent of the time for noncompliant code, but it only identified 22.5 percent of compliant examples as correct. This trend was constant across Java and C++ for both LLMs. The LLMs were very reluctant to recognize compliant code as valid and almost always made suggestions even after stating, “this code is correct.”
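The category merge described above can be sketched as a small mapping from response labels to a correct/incorrect verdict. The label strings, function names, and the sample list below are hypothetical stand-ins chosen for illustration; the sample is made-up data, not results from the experiment.

```python
# Labels that count as "correct" after merging categories, per the text above.
NONCOMPLIANT_CORRECT = {"corrected", "identified"}
COMPLIANT_CORRECT = {"correct"}


def is_correct(label: str, compliant: bool) -> bool:
    """Collapse a fine-grained response label into correct / incorrect."""
    accepted = COMPLIANT_CORRECT if compliant else NONCOMPLIANT_CORRECT
    return label.lower() in accepted


def accuracy(labels: list, compliant: bool) -> float:
    """Percentage of responses that count as correct after the merge."""
    if not labels:
        raise ValueError("labels must not be empty")
    hits = sum(is_correct(label, compliant) for label in labels)
    return 100.0 * hits / len(labels)


if __name__ == "__main__":
    # Made-up labels for four noncompliant examples (illustration only).
    sample = ["corrected", "identified", "missed", "hallucination"]
    print(accuracy(sample, compliant=False))  # 50.0
```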

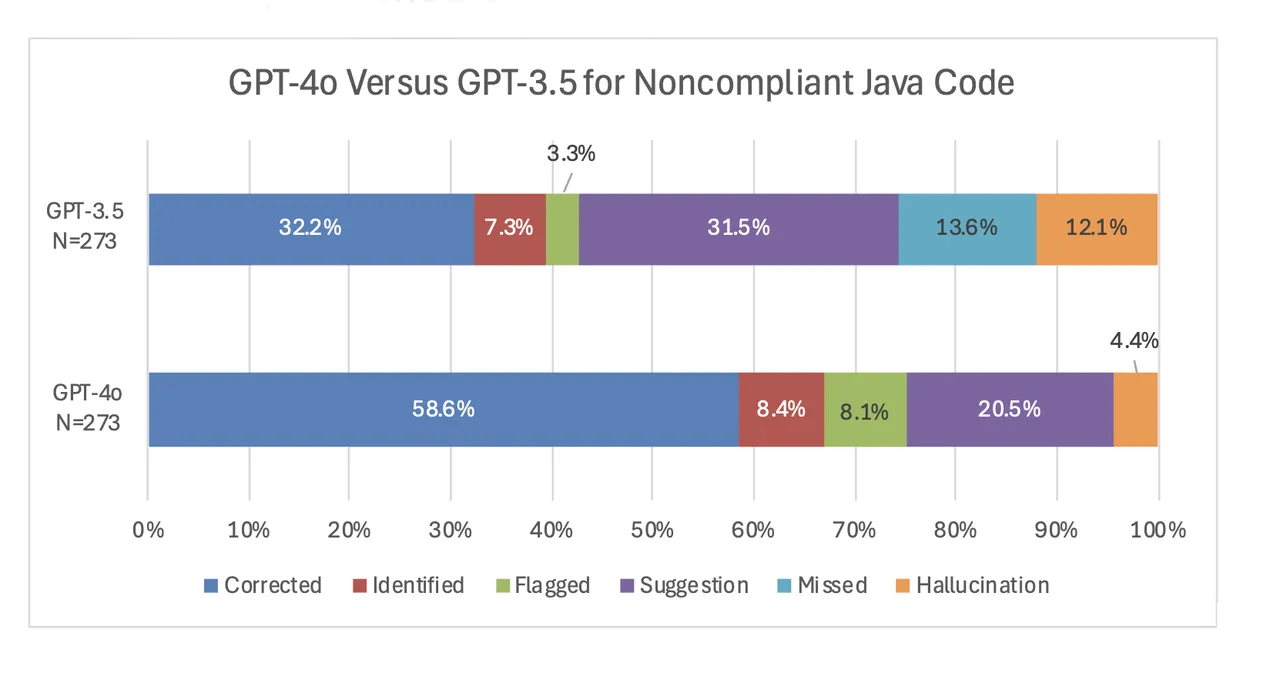

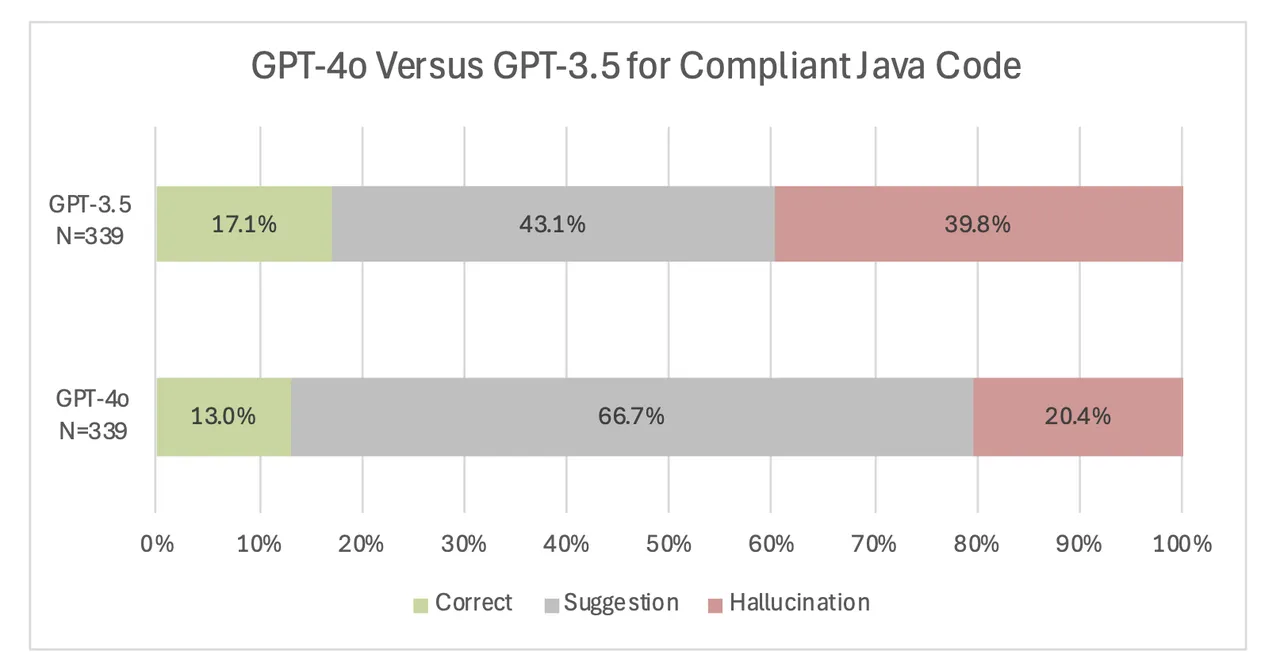

GPT-4o Outperformed GPT-3.5

Overall, the results also showed that GPT-4o performed significantly better than GPT-3.5. First, for the noncompliant code examples, GPT-4o had a higher rate of correction or identification and lower rates of missed errors and hallucinations. The above figure shows exact results for Java, and we saw similar results for the C++ examples, with an identification/correction rate of 63.0 percent for GPT-3.5 versus a significantly higher rate of 83.6 percent for GPT-4o.

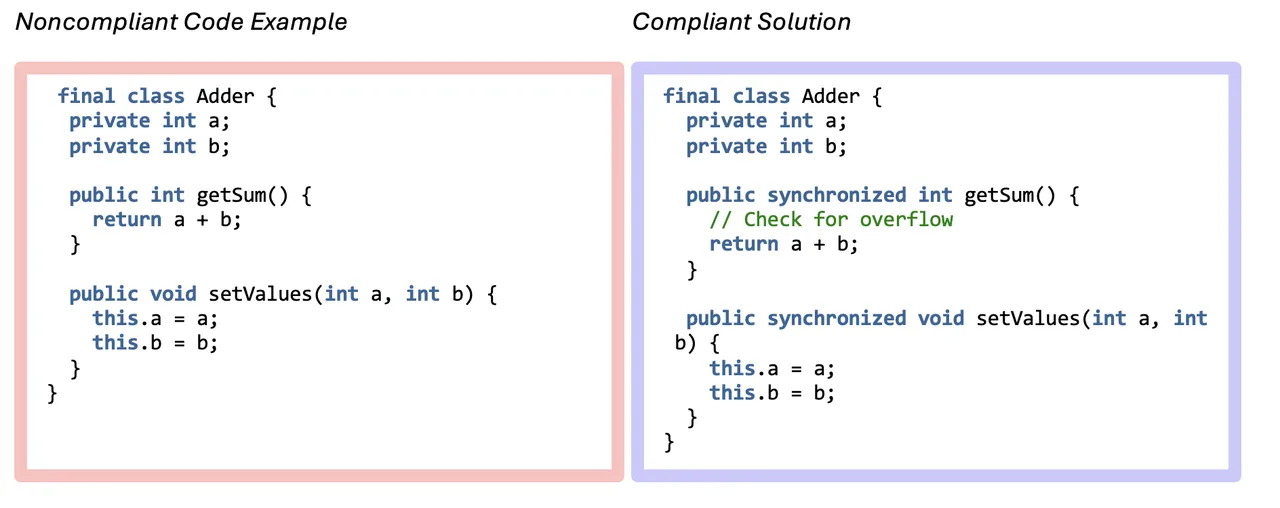

The following Java example demonstrates the difference between GPT-3.5 and GPT-4o. This noncompliant code snippet contains a race condition in the getSum() method because it is not thread safe. In this example, we submitted the noncompliant code on the left to each LLM as the user prompt, again with the system prompt stating, “What’s wrong with this code?”

VNA02-J, Ex. 4: Ensure that compound operations on shared variables are atomic

GPT-3.5 Response

GPT-4o Response

GPT-3.5 stated there were no problems with the code, while GPT-4o caught and fixed three potential issues, including the thread safety issue. GPT-4o did go beyond the compliant solution, which synchronizes the getSum() and setValues() methods, to make the class immutable. In practice, the developer would have the opportunity to interact with the LLM if he or she did not want this change of intent.

With the compliant code examples, we generally saw lower rates of hallucinations, but GPT-4o’s responses were much wordier and provided many suggestions, making the model less likely to cleanly identify the Java code as correct. We saw this trend of lower hallucinations in the C++ examples as well: GPT-3.5 hallucinated 53.6 percent of the time on the compliant C++ code, but GPT-4o hallucinated only 16.3 percent of the time.

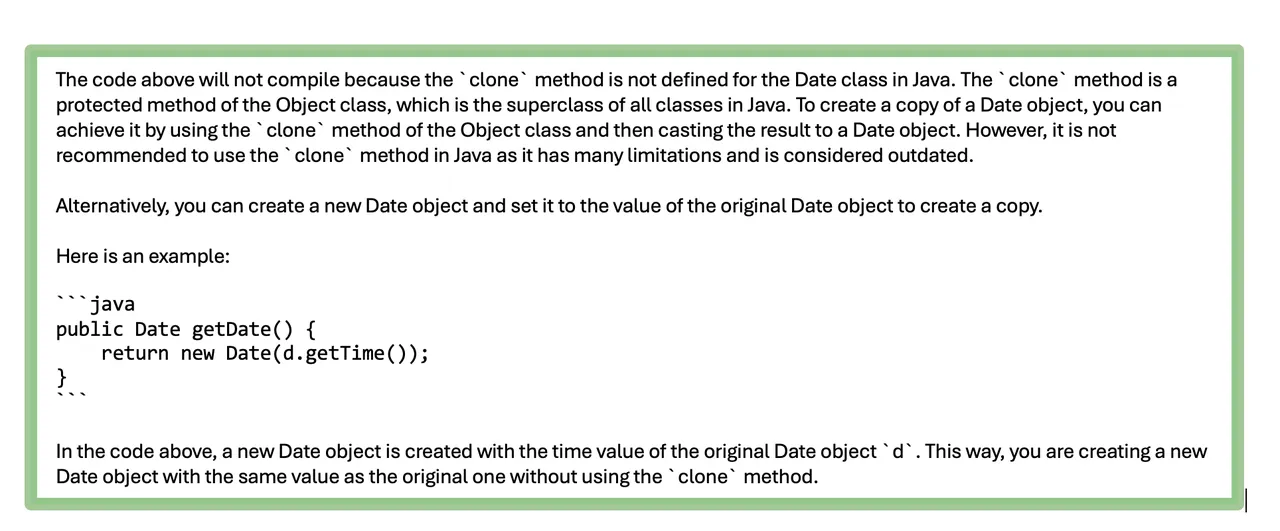

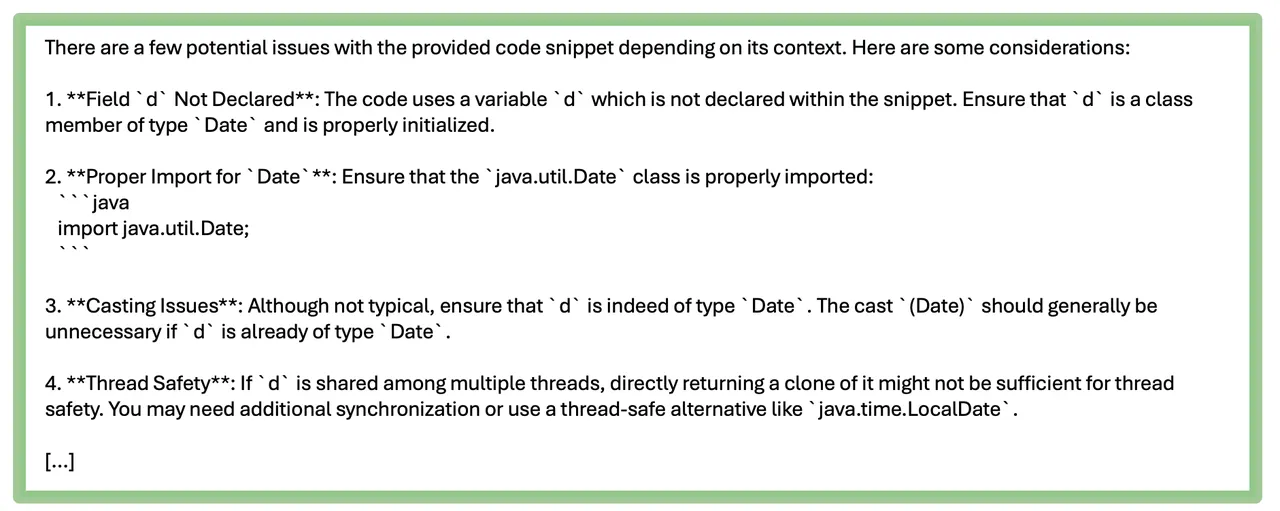

The following Java example demonstrates this tendency for GPT-3.5 to hallucinate, whereas GPT-4o offers suggestions while being reluctant to confirm correctness. This compliant function clones the date object before returning it to ensure that the original internal state within the class is not mutable. As before, we submitted the compliant code to each LLM as the user prompt, with the system prompt, “What’s wrong with this code?”

OBJ05-J, Ex. 1: Do not return references to private mutable class members

GPT-3.5 Response

GPT-3.5’s response states that the clone method is not defined for the Date class, but this statement is incorrect because the Date class will inherit the clone method from the Object class.

GPT-4o Response

GPT-4o’s response still does not identify the function as correct, but the potential issues described are valid suggestions, and it even provides a suggestion to make the program thread-safe.

LLMs Were More Accurate for C++ Code than for Java Code

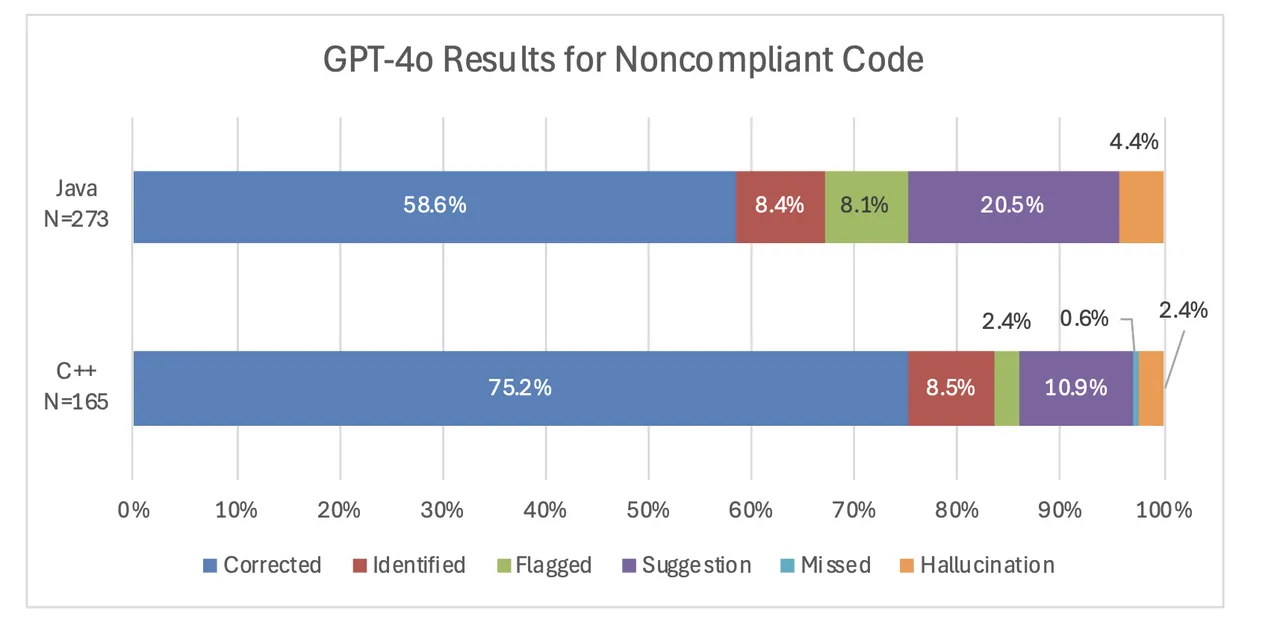

This graph shows the distribution of responses from GPT-4o for both Java and C++ noncompliant examples.

GPT-4o consistently performed better on C++ examples compared to Java examples. It corrected 75.2 percent of C++ code samples compared to 58.6 percent of Java code samples. This pattern was also consistent in GPT-3.5’s responses. Although there are differences between the rule categories discussed in the C++ and Java standards, GPT-4o performed better on the C++ code compared to the Java code in almost all of the common categories: expressions, characters and strings, object orientation/object-oriented programming, exceptional behavior/exceptions, and error handling, input/output. The one exception was the Declarations and Initializations category, where GPT-4o identified 80 percent of the errors in the Java code (4 out of 5), but only 78 percent of the C++ examples (25 out of 32). However, this difference could be attributed to the low sample size, and the models still perform better overall on the C++ examples. Note that it is difficult to understand exactly why the OpenAI LLMs perform better on C++ compared to Java, as our task falls under the domain of reasoning, which is an emergent LLM ability. (See “Emergent Abilities of Large Language Models,” by Jason Wei et al. (2022) for a discussion of emergent LLM abilities.)
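The sample-size caveat for the Declarations and Initializations category can be made concrete with a little arithmetic. The sketch below recomputes the two percentages from the counts quoted above and then shows, as a purely hypothetical what-if, how far the Java figure would move if a single one of its five examples had been scored differently. The helper name `percent` is an illustrative assumption.

```python
def percent(hits: int, total: int) -> float:
    """Share of examples scored as identified, as a percentage."""
    return 100.0 * hits / total


if __name__ == "__main__":
    print(percent(4, 5))    # Java: 80.0
    print(percent(25, 32))  # C++: 78.125, reported as 78 percent

    # Hypothetical what-if: one Java example scored differently.
    print(percent(3, 5))    # 60.0, a 20-point swing from a single example
```

With only five Java examples in the category, each example is worth 20 percentage points, so a gap of about 2 points between the languages is well within what one response could change.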

The Impact of Prompt Engineering

So far, we have learned that LLMs have some capability to evaluate C++ and Java code when provided with minimal up-front instruction. However, one could easily imagine ways to improve performance by providing more details about the required task. To test this most efficiently, we chose code samples that the LLMs struggled to identify correctly rather than re-evaluating the hundreds of examples we previously summarized. In our initial experiments, we noticed the LLMs struggled on section 15 (Platform Security), so we gathered the compliant and noncompliant examples from Java in that section to run through GPT-4o, the better performing model of the two, as a case study. We changed the prompt to ask specifically for platform security issues and requested that it ignore minor issues like import statements. The new prompt became

Are there any platform security issues in this code snippet, if so please correct them? Please ignore any issues related to exception handling, import statements, and missing variable or function definitions. If there are no issues, please state the code is correct.

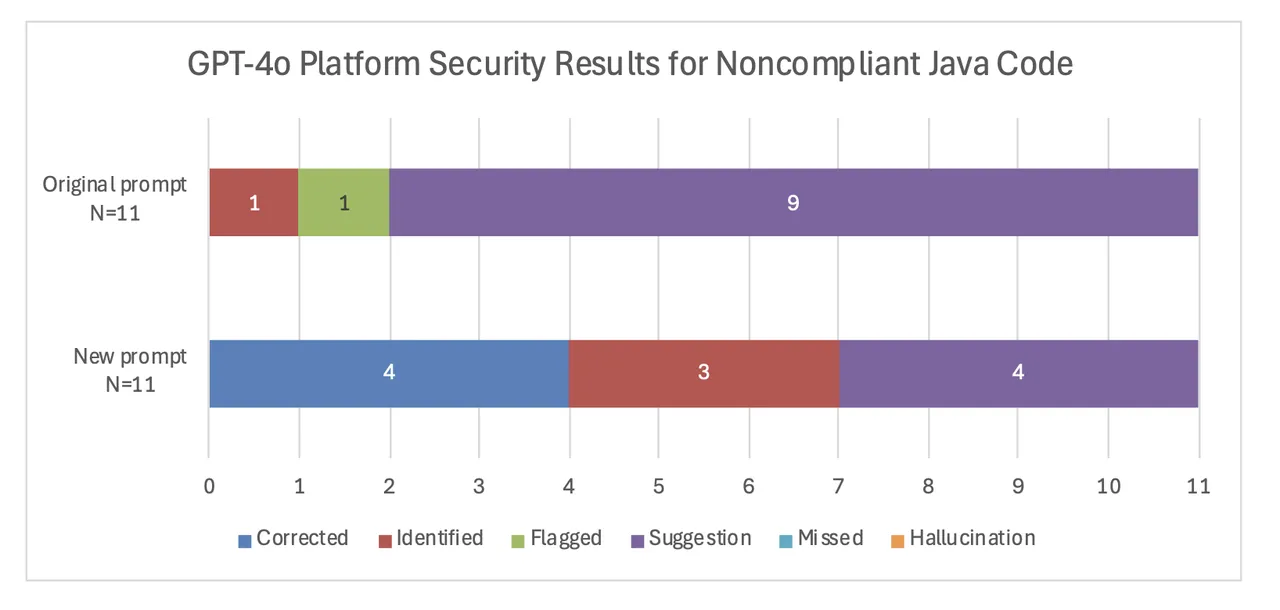

Updated Prompt Improves Performance for Noncompliant Code

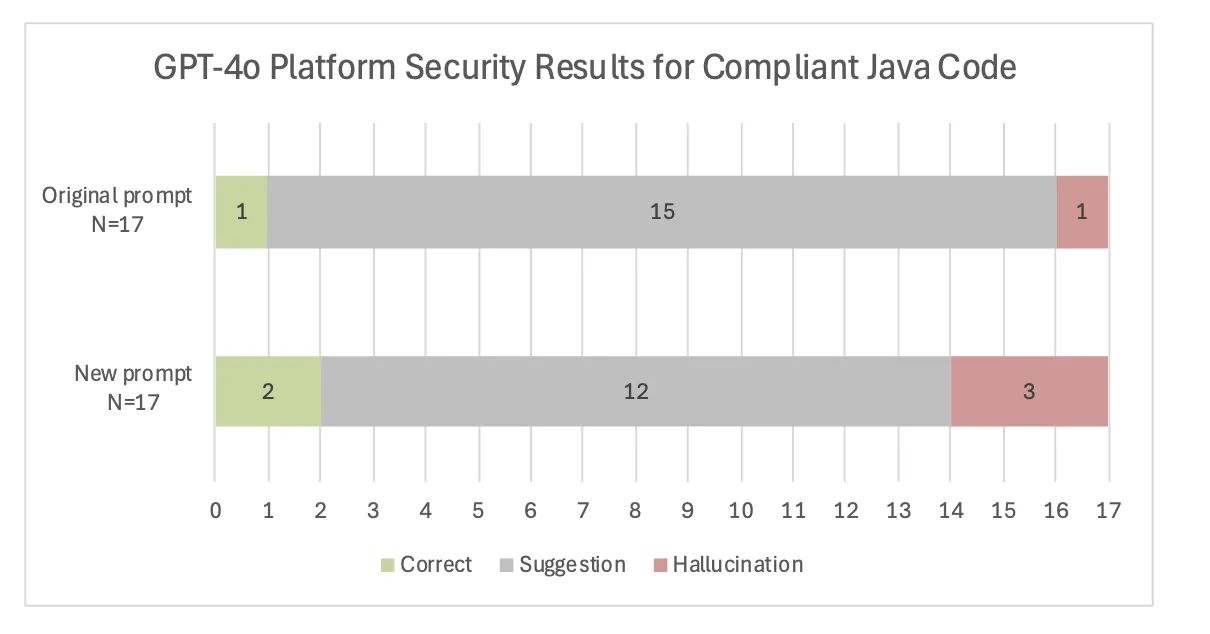

The updated prompt resulted in a clear improvement in GPT-4o’s responses. Under the original prompt, GPT-4o was not able to correct any platform security error, but with the more specific prompt it corrected 4 of 11. With the more specific prompt, GPT-4o also identified an additional 3 errors versus only 1 under the original prompt. If we consider the corrected and identified categories to be the most useful, then the improved prompt reduced the number of non-useful responses from 10 of 11 down to 4 of 11.
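The tally behind these figures can be worked through in a few lines. The sketch below reads the paragraph as 0 corrected and 1 identified under the original prompt, and 4 corrected and 3 identified under the revised prompt, out of 11 noncompliant examples; that reading, and the helper name `non_useful`, are assumptions made for illustration.

```python
TOTAL_EXAMPLES = 11  # noncompliant platform security examples in the case study


def non_useful(corrected: int, identified: int, total: int = TOTAL_EXAMPLES) -> int:
    """Responses that were neither corrected nor identified."""
    return total - (corrected + identified)


if __name__ == "__main__":
    original = non_useful(corrected=0, identified=1)
    revised = non_useful(corrected=4, identified=3)
    print(original, "of", TOTAL_EXAMPLES)  # 10 of 11
    print(revised, "of", TOTAL_EXAMPLES)   # 4 of 11
```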

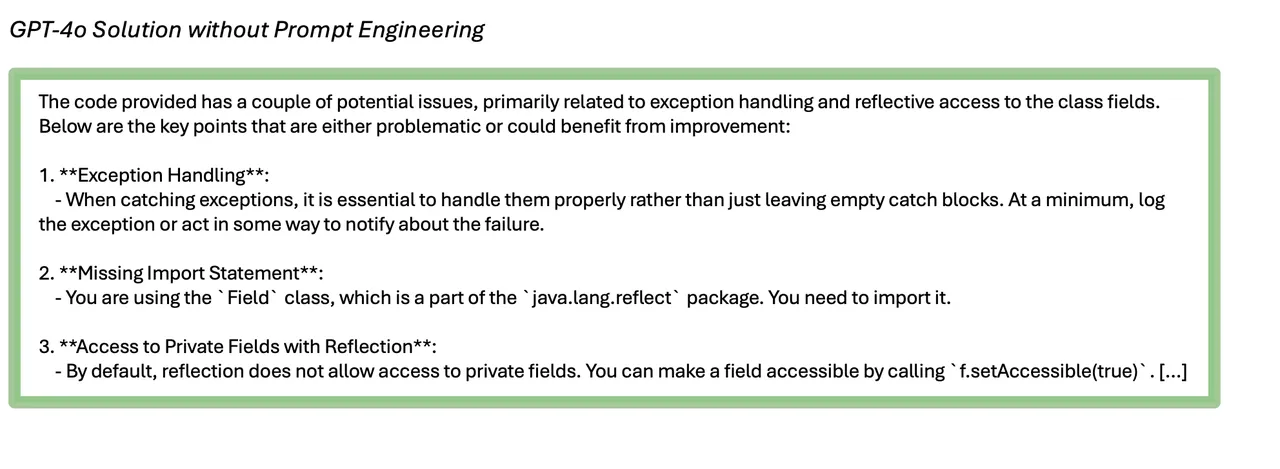

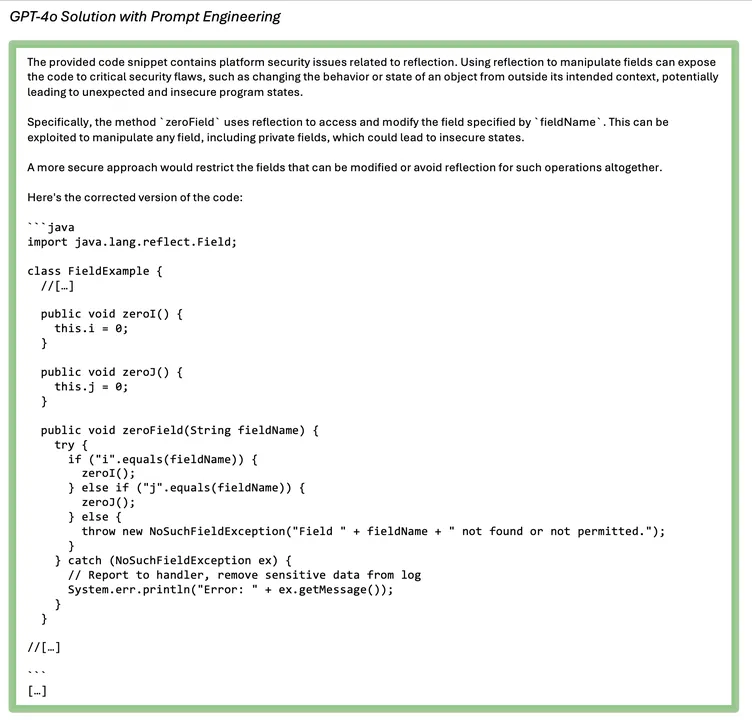

The following responses provide an example of how the revised prompt led to an improvement in model performance.

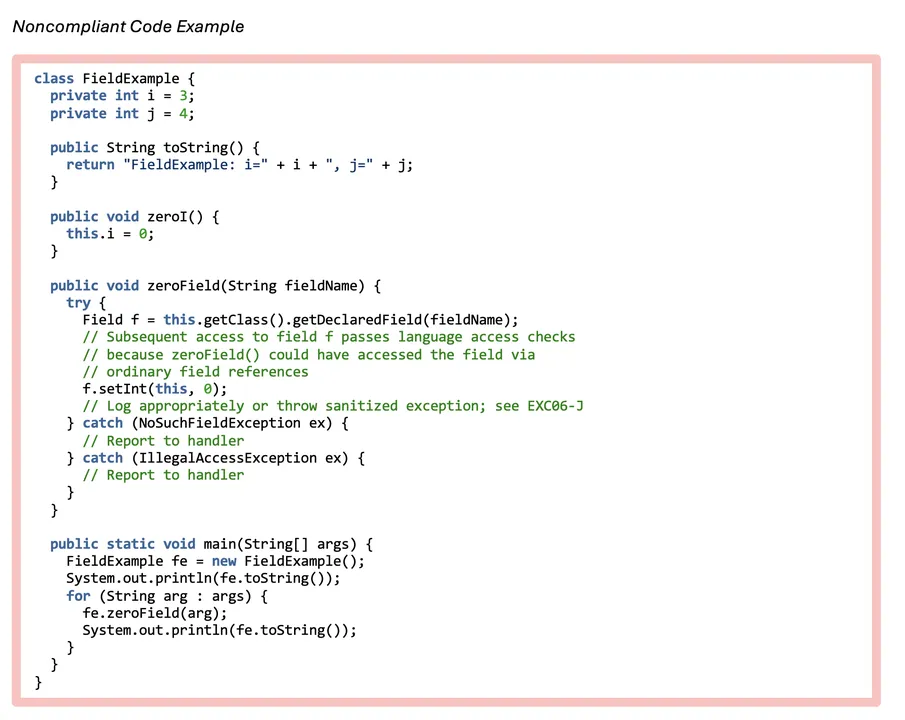

In the Java code below, the zeroField() method uses reflection to access private members of the FieldExample class. This may leak information about field names through exceptions or may increase accessibility of sensitive data that is visible to zeroField().

SEC05-J, Ex. 1: Do not use reflection to increase accessibility of classes, methods, or fields

To bring this code into compliance, the zeroField() method may be declared private, or access can be provided to the same fields without using reflection.

In the original solution, GPT-4o makes trivial suggestions, such as adding an import statement and implementing exception handling where the code was marked with the comment “//Report to handler.” Since the zeroField() method is still accessible to hostile code, the solution is noncompliant. The new solution eliminates the use of reflection altogether and instead provides methods that can zero i and j without reflection.

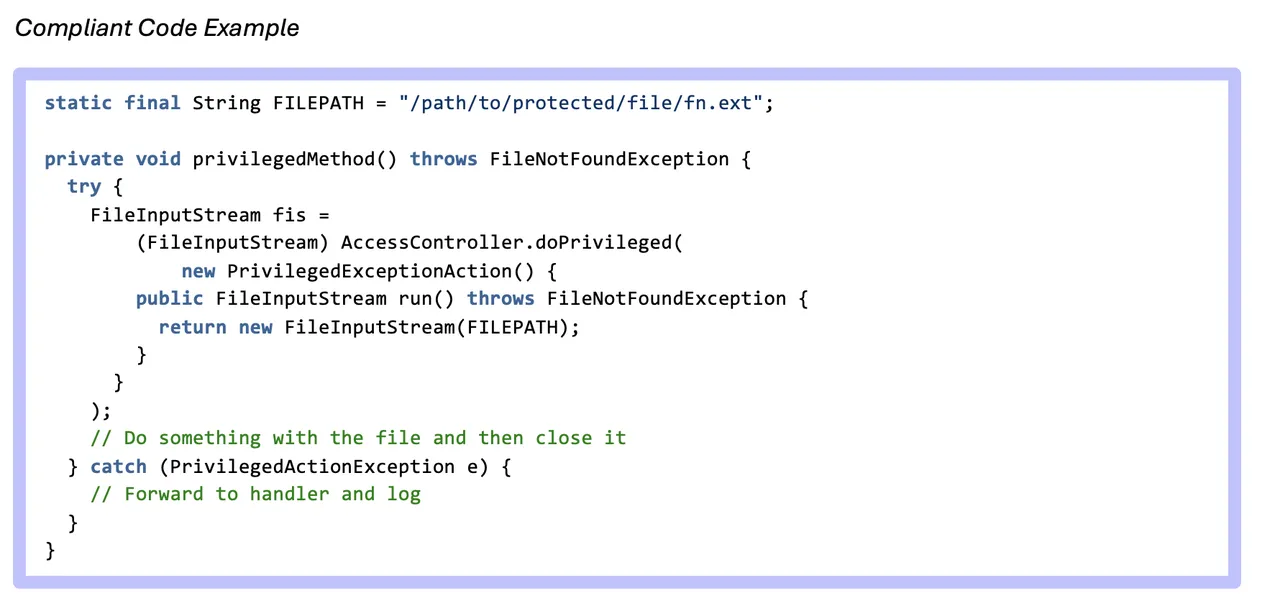

Performance with New Prompt is Mixed on Compliant Code

With an updated prompt, we saw a slight improvement on one additional example in GPT-4o’s ability to identify correct code as such, but it also hallucinated on two others that only resulted in suggestions under the original prompt. In other words, on a few examples, prompting the LLM to look for platform security issues caused it to respond affirmatively, whereas under the original less-specific prompt it would have offered more general suggestions without stating that there was an error. The suggestions with the new prompt also ignored trivial errors such as exception handling, import statements, and missing definitions. They became a little more focused on platform security, as seen in the example below.

SEC01-J, Ex. 2: Do not allow tainted variables in privileged blocks

GPT-4o Response to new prompt

Implications for Using LLMs to Fix C++ and Java Errors

As we went through the responses, we realized that some responses did not just miss the error but provided false information, while others were not wrong but made trivial recommendations. We added hallucination and suggestions to our categories to represent these meaningful gradations in responses. The results show that GPT-4o hallucinates less than GPT-3.5; however, its responses are more verbose (though we could have potentially addressed this by adjusting the prompt). Consequently, GPT-4o makes more suggestions than GPT-3.5, especially on compliant code. In general, both LLMs performed better on noncompliant code for both languages, although they did correct a higher percentage of the C++ examples. Finally, prompt engineering greatly improved results on the noncompliant code, but really only improved the focus of the suggestions for the compliant examples. If we were to continue this work, we would experiment more with various prompts, focusing on improving the compliant results. This could possibly include adding few-shot examples of compliant and noncompliant code to the prompt. We would also explore fine-tuning the LLMs to see how much the results improve.