Picture by Creator

Outliers are irregular observations that differ considerably from the remainder of your knowledge. They could happen attributable to experimentation error, measurement error, or just that variability is current throughout the knowledge itself. These outliers can severely affect your mannequin’s efficiency, resulting in biased outcomes – very similar to how a high performer in relative grading at universities can increase the typical and have an effect on the grading standards. Dealing with outliers is an important a part of the information cleansing process.

On this article, I am going to share how one can spot outliers and other ways to take care of them in your dataset.

Detecting Outliers

There are a number of strategies used to detect outliers. If I had been to categorise them, right here is the way it appears to be like:

- Visualization-Primarily based Strategies: Plotting scatter plots or field plots to see knowledge distribution and examine it for irregular knowledge factors.

- Statistics-Primarily based Strategies: These approaches contain z scores and IQR (Interquartile Vary) which provide reliability however could also be much less intuitive.

I will not cowl these strategies extensively to remain centered, on the subject. Nevertheless, I am going to embody some references on the finish, for exploration. We are going to use the IQR technique in our instance. Right here is how this technique works:

IQR (Interquartile Vary) = Q3 (seventy fifth percentile) – Q1 (twenty fifth percentile)

The IQR technique states that any knowledge factors under Q1 – 1.5 * IQR or above Q3 + 1.5 * IQR are marked as outliers. Let’s generate some random knowledge factors and detect the outliers utilizing this technique.

Make the required imports and generate the random knowledge utilizing np.random:

import pandas as pd

import numpy as np

import matplotlib.pyplot as plt

import seaborn as sns

# Generate random knowledge

np.random.seed(42)

knowledge = pd.DataFrame({

'worth': np.random.regular(0, 1, 1000)

})

Detect the outliers from the dataset utilizing the IQR Technique:

# Operate to detect outliers utilizing IQR

def detect_outliers_iqr(knowledge):

Q1 = knowledge.quantile(0.25)

Q3 = knowledge.quantile(0.75)

IQR = Q3 - Q1

lower_bound = Q1 - 1.5 * IQR

upper_bound = Q3 + 1.5 * IQR

return (knowledge < lower_bound) | (knowledge > upper_bound)

# Detect outliers

outliers = detect_outliers_iqr(knowledge['value'])

print(f"Variety of outliers detected: {sum(outliers)}")

Output ⇒ Variety of outliers detected: 8

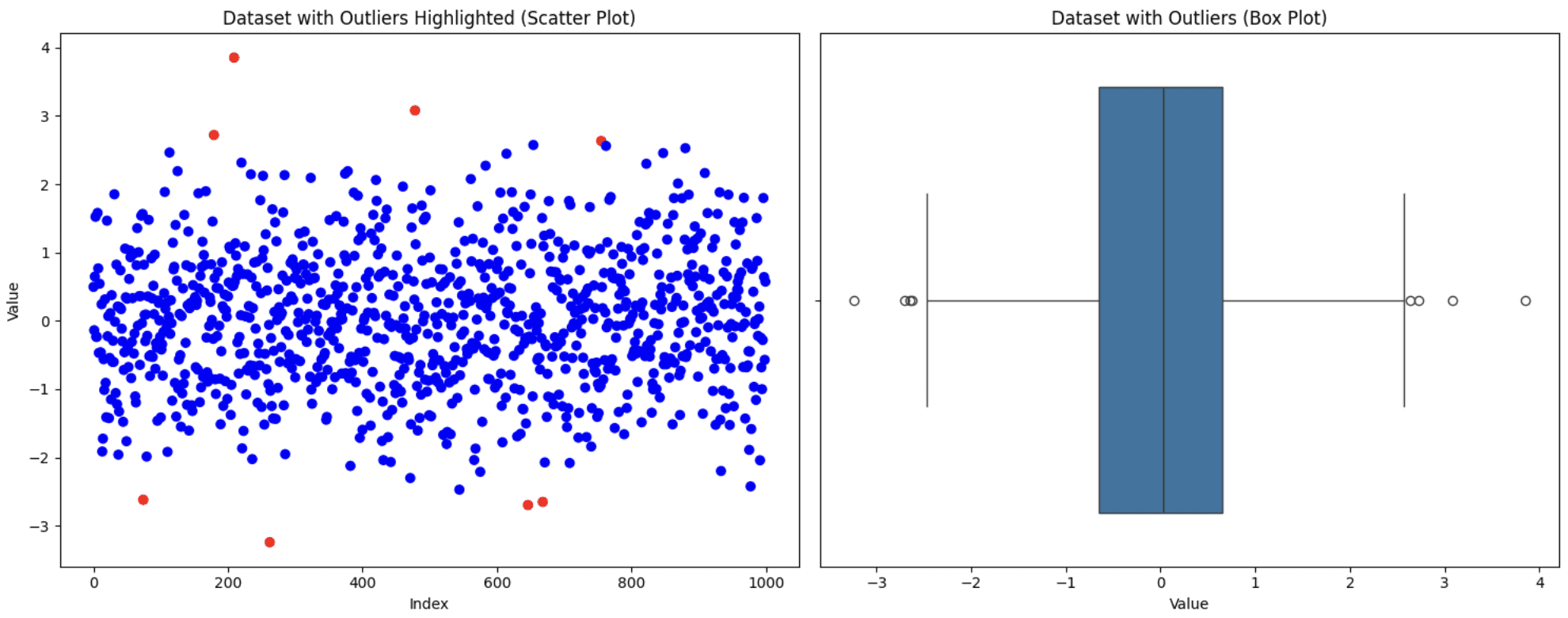

Visualize the dataset utilizing scatter and field plots to see the way it appears to be like

# Visualize the information with outliers utilizing scatter plot and field plot

fig, (ax1, ax2) = plt.subplots(1, 2, figsize=(15, 6))

# Scatter plot

ax1.scatter(vary(len(knowledge)), knowledge['value'], c=['blue' if not x else 'red' for x in outliers])

ax1.set_title('Dataset with Outliers Highlighted (Scatter Plot)')

ax1.set_xlabel('Index')

ax1.set_ylabel('Worth')

# Field plot

sns.boxplot(x=knowledge['value'], ax=ax2)

ax2.set_title('Dataset with Outliers (Field Plot)')

ax2.set_xlabel('Worth')

plt.tight_layout()

plt.present()

Authentic Dataset

Now that we’ve detected the outliers, let’s focus on a few of the other ways to deal with the outliers.

Dealing with Outliers

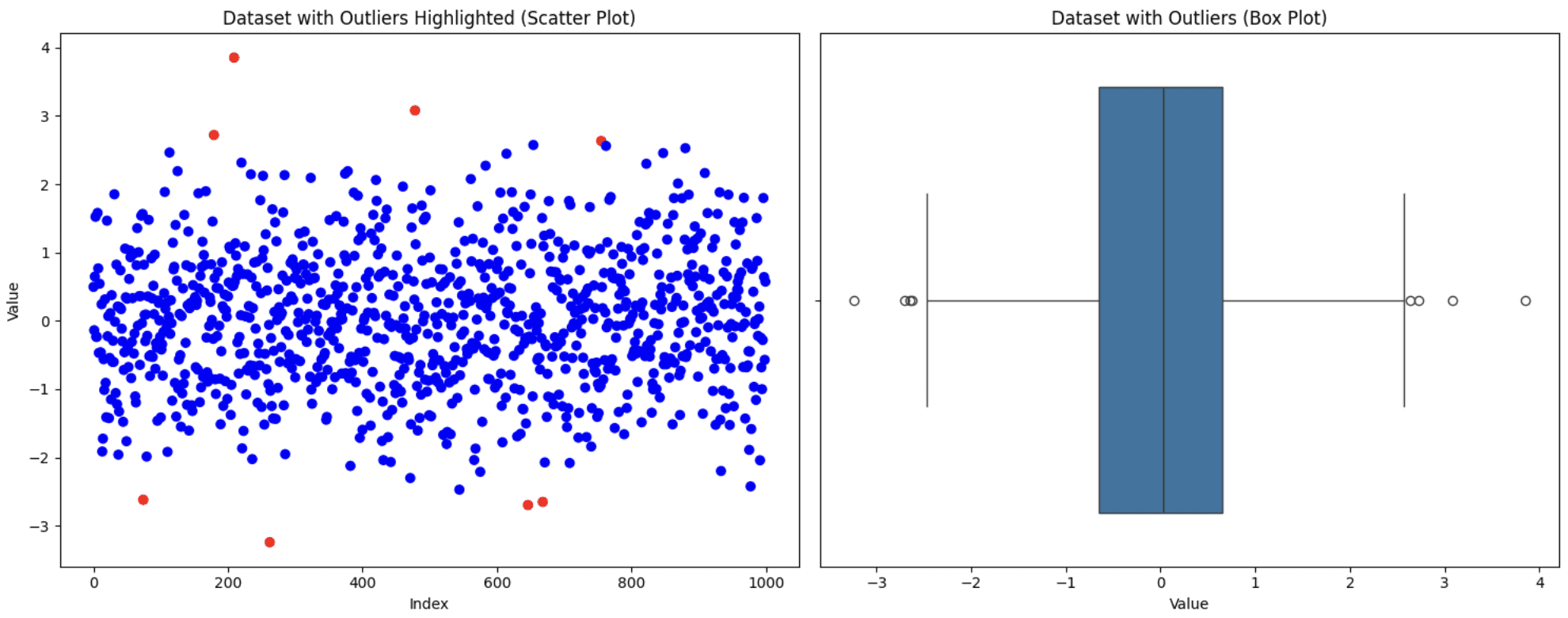

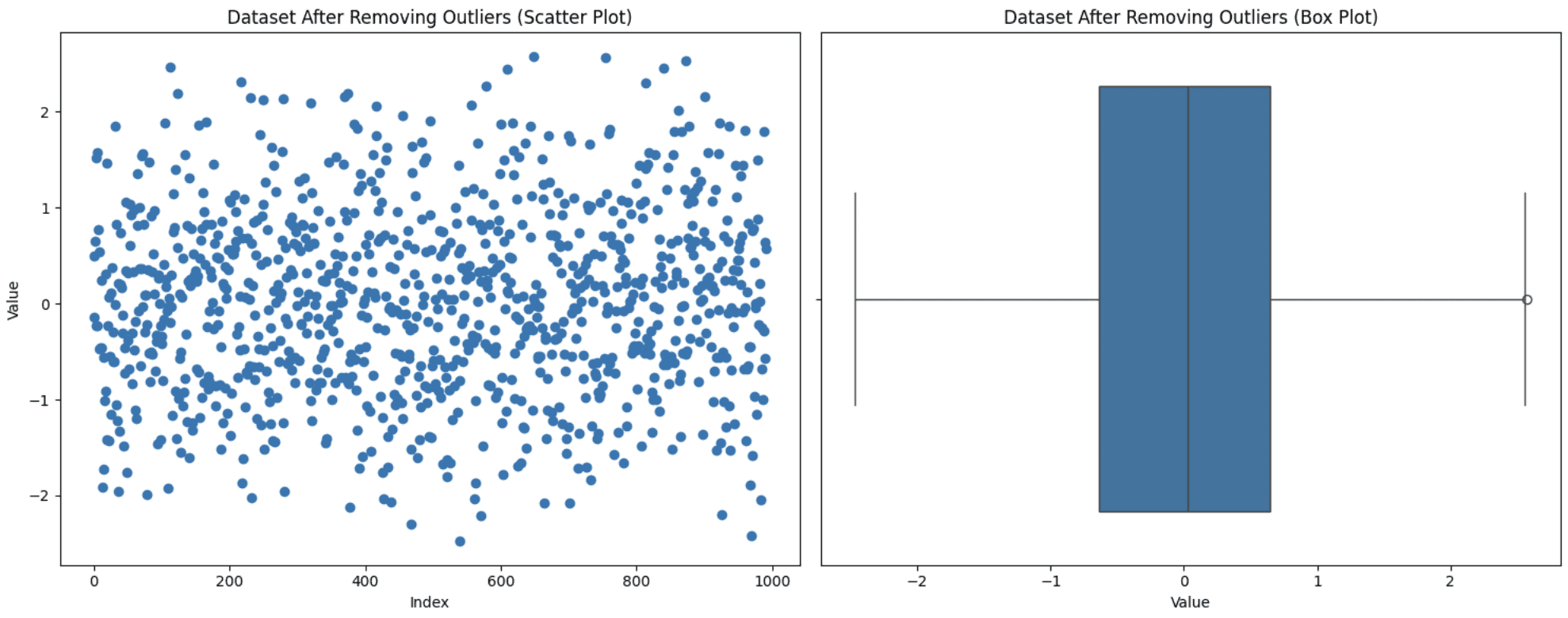

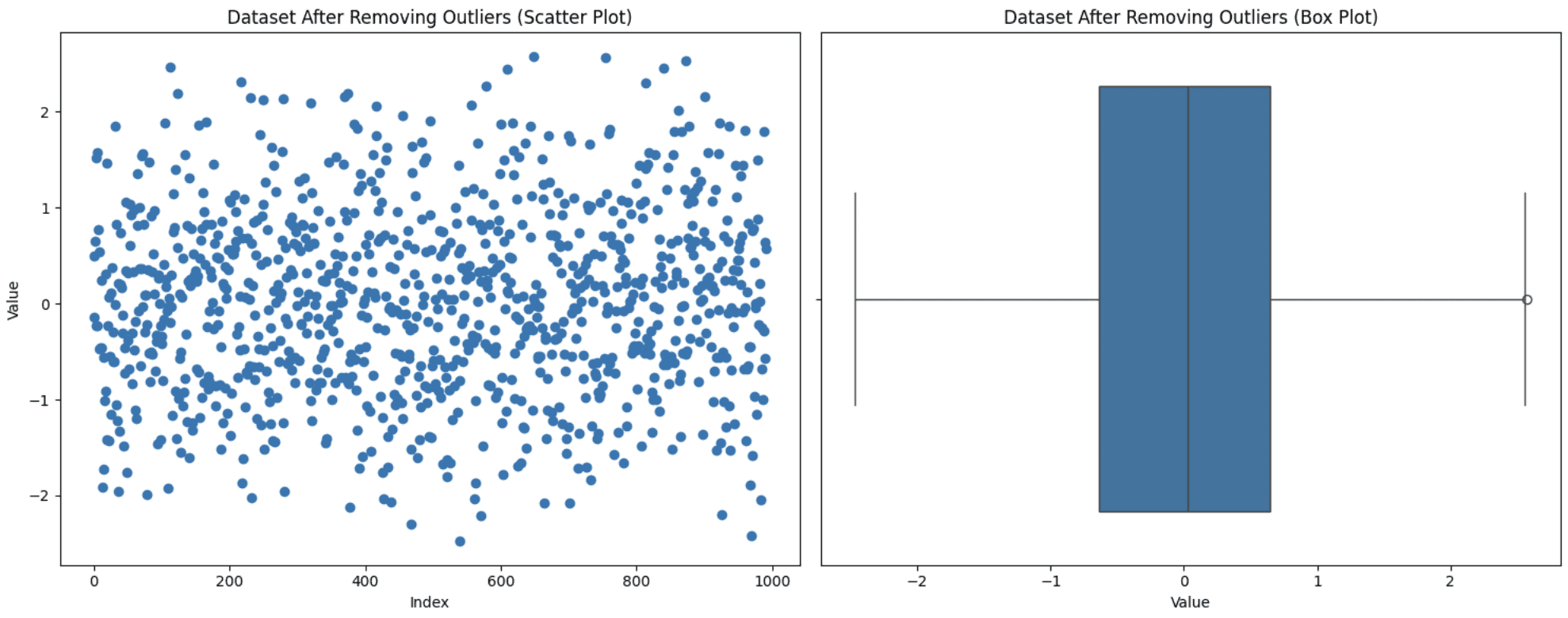

1. Eradicating Outliers

This is without doubt one of the easiest approaches however not all the time the best one. It’s good to think about sure elements. If eradicating these outliers considerably reduces your dataset measurement or in the event that they maintain helpful insights, then excluding them out of your evaluation not be probably the most favorable choice. Nevertheless, in the event that they’re attributable to measurement errors and few in quantity, then this strategy is appropriate. Let’s apply this method to the dataset generated above:

# Take away outliers

data_cleaned = knowledge[~outliers]

print(f"Authentic dataset measurement: {len(knowledge)}")

print(f"Cleaned dataset measurement: {len(data_cleaned)}")

fig, (ax1, ax2) = plt.subplots(1, 2, figsize=(15, 6))

# Scatter plot

ax1.scatter(vary(len(data_cleaned)), data_cleaned['value'])

ax1.set_title('Dataset After Eradicating Outliers (Scatter Plot)')

ax1.set_xlabel('Index')

ax1.set_ylabel('Worth')

# Field plot

sns.boxplot(x=data_cleaned['value'], ax=ax2)

ax2.set_title('Dataset After Eradicating Outliers (Field Plot)')

ax2.set_xlabel('Worth')

plt.tight_layout()

plt.present()

Eradicating Outliers

Discover that the distribution of the information can really be modified by eradicating outliers. For those who take away some preliminary outliers, the definition of what’s an outlier might very nicely change. Due to this fact, knowledge that may have been within the regular vary earlier than, could also be thought-about outliers below a brand new distribution. You may see a brand new outlier with the brand new field plot.

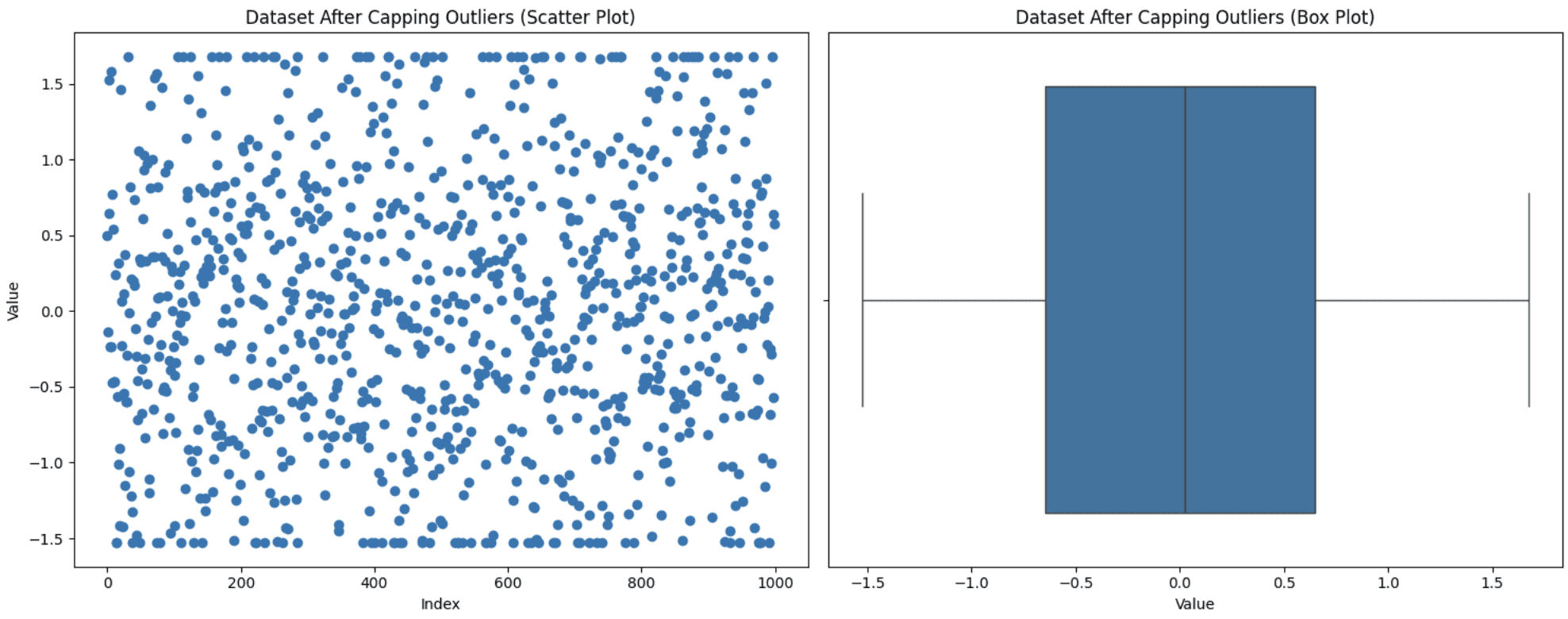

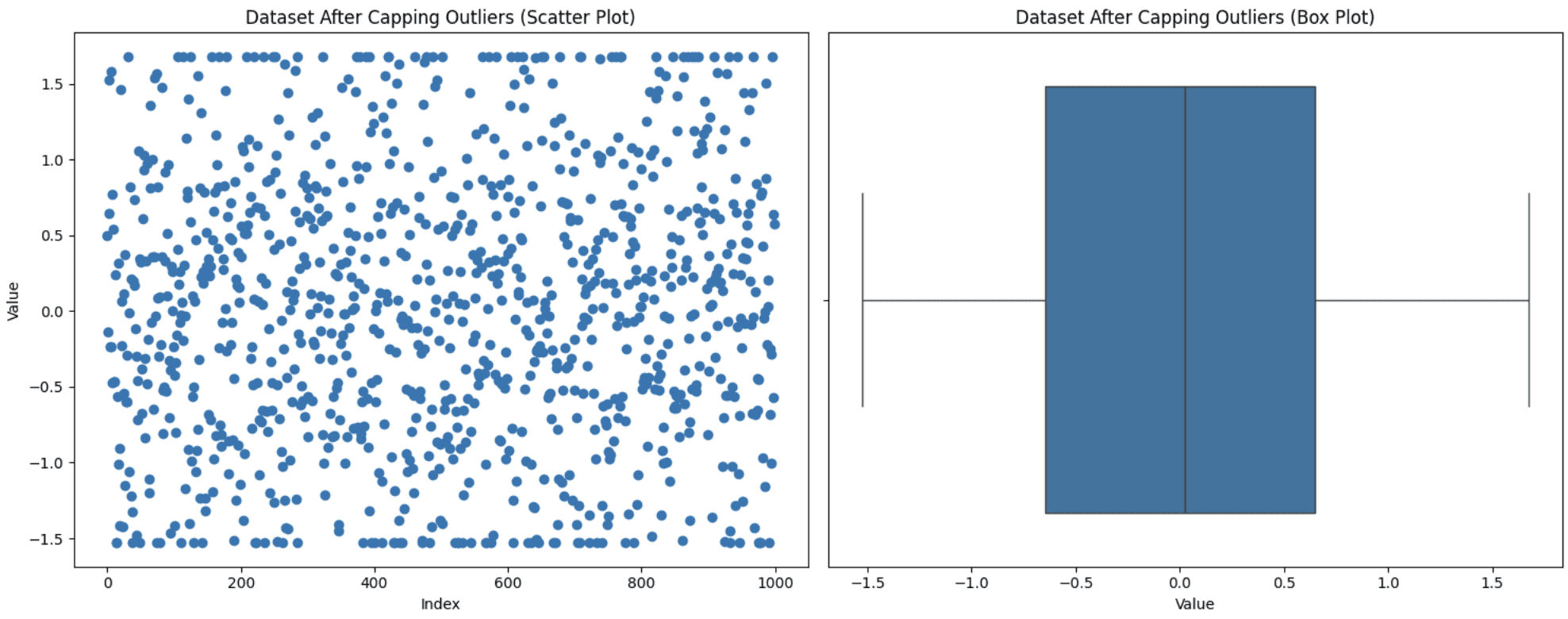

2. Capping Outliers

This system is used when you don’t want to discard your knowledge factors however conserving these excessive values may affect your evaluation. So, you set a threshold for the utmost and the minimal values after which carry the outliers inside this vary. You may apply this capping to outliers or to your dataset as a complete too. Let’s apply the capping technique to our full dataset to carry it throughout the vary of the Fifth-Ninety fifth percentile. Right here is how one can execute this:

def cap_outliers(knowledge, lower_percentile=5, upper_percentile=95):

lower_limit = np.percentile(knowledge, lower_percentile)

upper_limit = np.percentile(knowledge, upper_percentile)

return np.clip(knowledge, lower_limit, upper_limit)

knowledge['value_capped'] = cap_outliers(knowledge['value'])

fig, (ax1, ax2) = plt.subplots(1, 2, figsize=(15, 6))

# Scatter plot

ax1.scatter(vary(len(knowledge)), knowledge['value_capped'])

ax1.set_title('Dataset After Capping Outliers (Scatter Plot)')

ax1.set_xlabel('Index')

ax1.set_ylabel('Worth')

# Field plot

sns.boxplot(x=knowledge['value_capped'], ax=ax2)

ax2.set_title('Dataset After Capping Outliers (Field Plot)')

ax2.set_xlabel('Worth')

plt.tight_layout()

plt.present()

Capping Outliers

You may see from the graph that the higher and decrease factors within the scatter plot look like in a line attributable to capping.

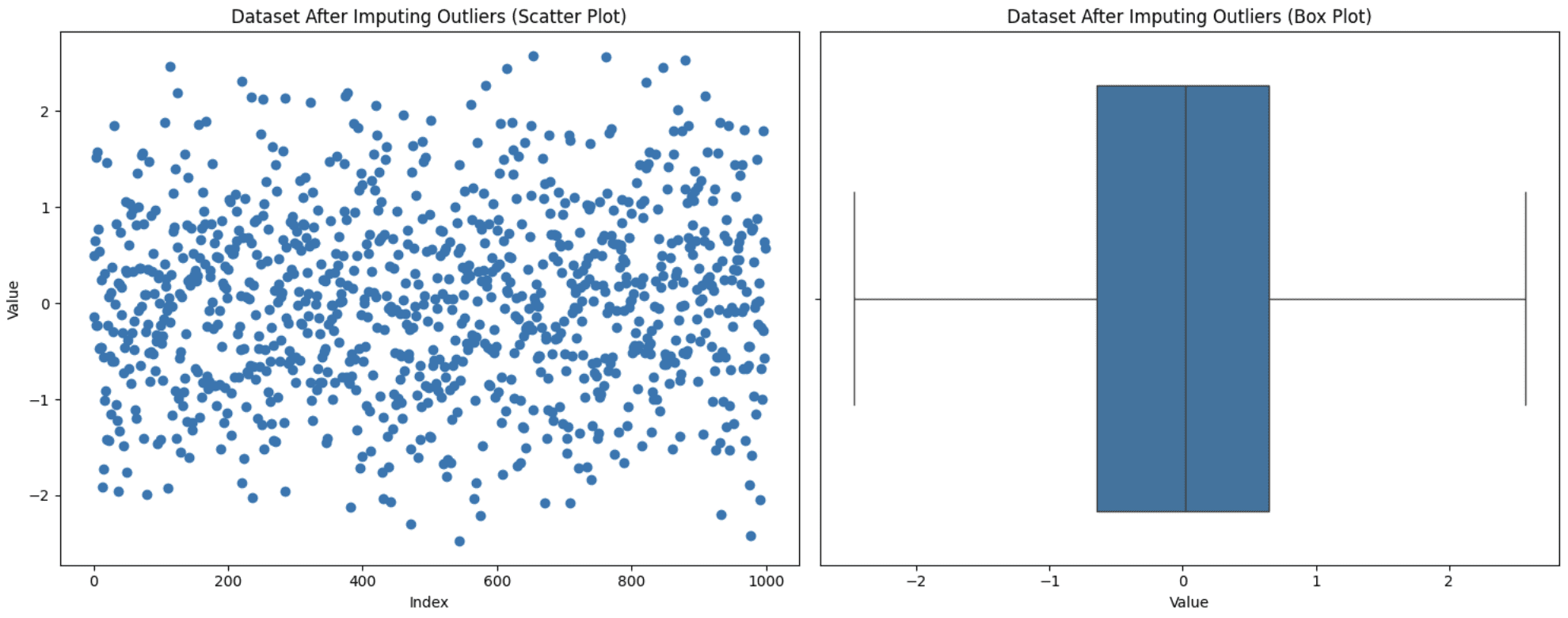

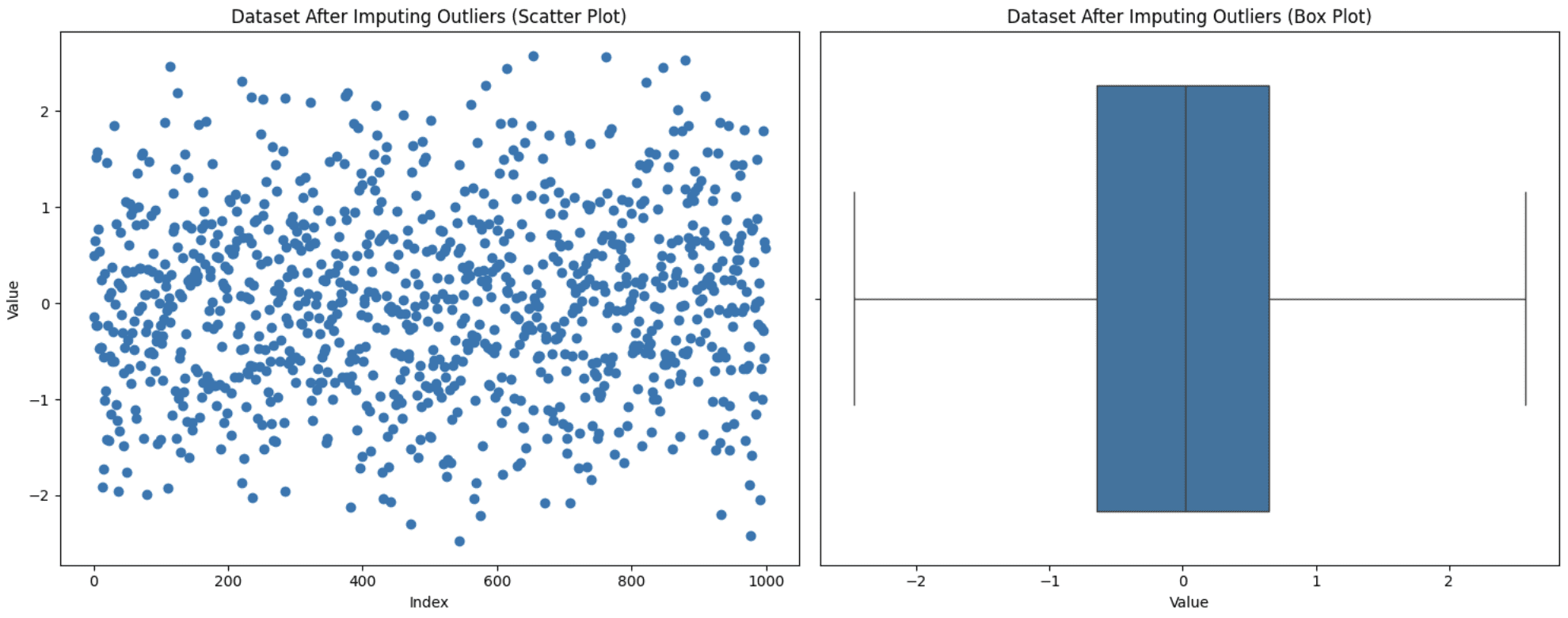

3. Imputing Outliers

Generally eradicating values from the evaluation is not an choice as it could result in info loss, and also you additionally don’t desire these values to be set to max or min like in capping. On this scenario, one other strategy is to substitute these values with extra significant choices like imply, median, or mode. The selection varies relying on the area of knowledge below commentary, however be aware of not introducing biases whereas utilizing this method. Let’s substitute our outliers with the mode (probably the most steadily occurring worth) worth and see how the graph seems:

knowledge['value_imputed'] = knowledge['value'].copy()

median_value = knowledge['value'].median()

knowledge.loc[outliers, 'value_imputed'] = median_value

fig, (ax1, ax2) = plt.subplots(1, 2, figsize=(15, 6))

# Scatter plot

ax1.scatter(vary(len(knowledge)), knowledge['value_imputed'])

ax1.set_title('Dataset After Imputing Outliers (Scatter Plot)')

ax1.set_xlabel('Index')

ax1.set_ylabel('Worth')

# Field plot

sns.boxplot(x=knowledge['value_imputed'], ax=ax2)

ax2.set_title('Dataset After Imputing Outliers (Field Plot)')

ax2.set_xlabel('Worth')

plt.tight_layout()

plt.present()

Imputing Outliers

Discover that now we haven’t any outliers, however this does not assure that outliers will probably be eliminated since after the imputation, the IQR additionally modifications. It’s good to experiment to see what suits greatest in your case.

4. Making use of a Transformation

Transformation is utilized to your full dataset as a substitute of particular outliers. You principally change the best way your knowledge is represented to cut back the affect of the outliers. There are a number of transformation methods like log transformation, sq. root transformation, box-cox transformation, Z-scaling, Yeo-Johnson transformation, min-max scaling, and many others. Choosing the proper transformation in your case is determined by the character of the information and your finish aim of the evaluation. Listed below are a couple of suggestions that will help you choose the best transformation method:

- For right-skewed knowledge: Use log, sq. root, or Field-Cox transformation. Log is even higher once you need to compress small quantity values which might be unfold over a big scale. Sq. root is healthier when, aside from proper skew, you need a much less excessive transformation and likewise need to deal with zero values, whereas Field-Cox additionally normalizes your knowledge, which the opposite two do not.

- For left-skewed knowledge: Mirror the information first after which apply the methods talked about for right-skewed knowledge.

- To stabilize variance: Use Field-Cox or Yeo-Johnson (just like Field-Cox however handles zero and destructive values as nicely).

- For mean-centering and scaling: Use z-score standardization (commonplace deviation = 1).

- For range-bound scaling (mounted vary i.e., [2,5]): Use min-max scaling.

Let’s generate a right-skewed dataset and apply the log transformation to the whole knowledge to see how this works:

import pandas as pd

import numpy as np

import matplotlib.pyplot as plt

import seaborn as sns

# Generate right-skewed knowledge

np.random.seed(42)

knowledge = np.random.exponential(scale=2, measurement=1000)

df = pd.DataFrame(knowledge, columns=['value'])

# Apply Log Transformation (shifted to keep away from log(0))

df['log_value'] = np.log1p(df['value'])

fig, axes = plt.subplots(2, 2, figsize=(15, 10))

# Authentic Knowledge - Scatter Plot

axes[0, 0].scatter(vary(len(df)), df['value'], alpha=0.5)

axes[0, 0].set_title('Authentic Knowledge (Scatter Plot)')

axes[0, 0].set_xlabel('Index')

axes[0, 0].set_ylabel('Worth')

# Authentic Knowledge - Field Plot

sns.boxplot(x=df['value'], ax=axes[0, 1])

axes[0, 1].set_title('Authentic Knowledge (Field Plot)')

axes[0, 1].set_xlabel('Worth')

# Log Reworked Knowledge - Scatter Plot

axes[1, 0].scatter(vary(len(df)), df['log_value'], alpha=0.5)

axes[1, 0].set_title('Log Reworked Knowledge (Scatter Plot)')

axes[1, 0].set_xlabel('Index')

axes[1, 0].set_ylabel('Log(Worth)')

# Log Reworked Knowledge - Field Plot

sns.boxplot(x=df['log_value'], ax=axes[1, 1])

axes[1, 1].set_title('Log Reworked Knowledge (Field Plot)')

axes[1, 1].set_xlabel('Log(Worth)')

plt.tight_layout()

plt.present()

Making use of Log Transformation

You may see {that a} easy transformation has dealt with many of the outliers itself and lowered them to only one. This reveals the ability of transformation in dealing with outliers. On this case, it’s essential to be cautious and know your knowledge nicely sufficient to decide on applicable transformation as a result of failing to take action might trigger issues for you.

Wrapping Up

This brings us to the tip of our dialogue about outliers, other ways to detect them, and deal with them. This text is a part of the pandas collection, and you may verify different articles on my writer web page. As talked about above, listed here are some further sources so that you can research extra about outliers:

- Outlier detection strategies in Machine Studying

- Completely different transformations in Machine Studying

- Varieties Of Transformations For Higher Regular Distribution

Kanwal Mehreen Kanwal is a machine studying engineer and a technical author with a profound ardour for knowledge science and the intersection of AI with medication. She co-authored the e-book “Maximizing Productiveness with ChatGPT”. As a Google Era Scholar 2022 for APAC, she champions range and educational excellence. She’s additionally acknowledged as a Teradata Range in Tech Scholar, Mitacs Globalink Analysis Scholar, and Harvard WeCode Scholar. Kanwal is an ardent advocate for change, having based FEMCodes to empower girls in STEM fields.