Finetuning Embedding Models for Better Retrieval and RAG

TL;DR: Finetuning an embedding model on in-domain data can significantly improve vector search and retrieval-augmented generation (RAG) accuracy. With Databricks, it’s easy to finetune, deploy, and evaluate embedding models to optimize retrieval for your specific use case, leveraging synthetic data without manual labeling.

Why It Matters: If your vector search or RAG system isn’t retrieving the best results, finetuning an embedding model is a simple yet powerful way to boost performance. Whether you’re dealing with financial documents, knowledge bases, or internal code documentation, finetuning can give you more relevant search results and better downstream LLM responses.

What We Found: We finetuned and tested two embedding models on three enterprise datasets and saw major improvements in retrieval metrics (Recall@10) and downstream RAG performance. This means finetuning can be a game-changer for accuracy without requiring manual labeling, leveraging only your existing data.

Want to try embedding finetuning? We provide a reference solution to help you get started. Databricks makes vector search, RAG, reranking, and embedding finetuning easy. Reach out to your Databricks Account Executive or Solutions Architect for more information.

Why Finetune Embeddings?

Embedding models power modern vector search and RAG systems. An embedding model transforms text into vectors, making it possible to find relevant content based on meaning rather than just keywords. However, off-the-shelf models aren’t always optimized for your specific domain; that’s where finetuning comes in.
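
To make "finding content based on meaning" concrete, here is a minimal sketch of vector search using cosine similarity. The three-dimensional vectors and document ids are made up for illustration; a real embedding model would produce the vectors, and a vector database would handle the search at scale.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def search(query_vec, doc_vecs, k=2):
    """Return the ids of the k documents most similar to the query."""
    ranked = sorted(
        doc_vecs,
        key=lambda doc_id: cosine_similarity(query_vec, doc_vecs[doc_id]),
        reverse=True,
    )
    return ranked[:k]

# Toy "embeddings" (hypothetical values); real models use hundreds or
# thousands of dimensions.
doc_vecs = {
    "10k_revenue": [0.9, 0.1, 0.0],
    "api_reference": [0.0, 0.2, 0.9],
    "10k_risk_factors": [0.7, 0.6, 0.1],
}
query_vec = [1.0, 0.2, 0.0]
top = search(query_vec, doc_vecs, k=2)
print(top)
```

Finetuning changes the vectors the model produces, so that queries from your domain land closer to the documents that answer them; the search step itself stays the same.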

Finetuning an embedding model on domain-specific data helps in several ways:

- Improve retrieval accuracy: Custom embeddings improve search results by aligning with your data.

- Enhance RAG performance: Better retrieval reduces hallucinations and enables more grounded generative AI responses.

- Improve cost and latency: A smaller finetuned model can sometimes outperform larger, more expensive alternatives.

In this blog post, we show that finetuning an embedding model is an effective way to improve retrieval and RAG performance for task-specific, enterprise use cases.

Results: Finetuning Works

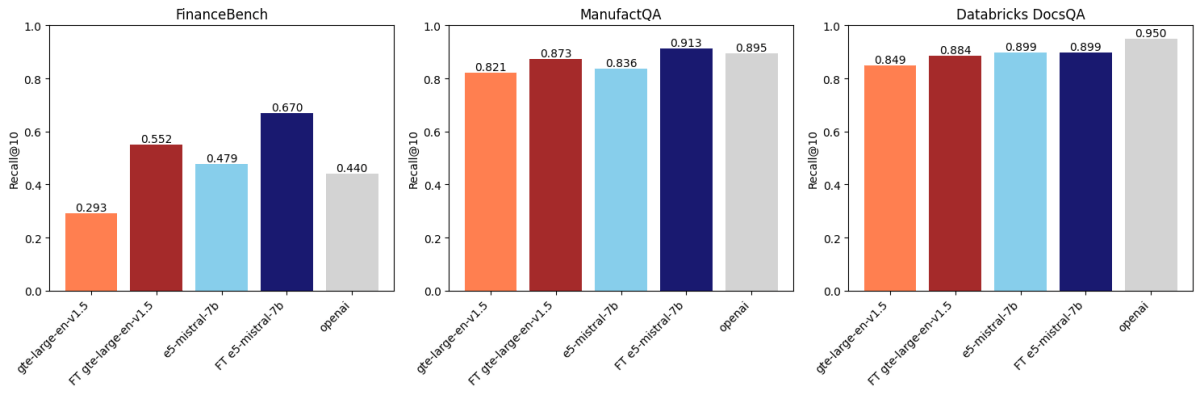

We finetuned two embedding models (gte-large-en-v1.5 and e5-mistral-7b-instruct) on synthetic data and evaluated them on three datasets from our Domain Intelligence Benchmark Suite (DIBS): FinanceBench, ManufactQA, and Databricks DocsQA. We then compared them against OpenAI’s text-embedding-3-large.

Key Takeaways:

- Finetuning improved retrieval accuracy across datasets, often significantly outperforming baseline models.

- Finetuned embeddings performed as well as or better than reranking in many cases, showing they can be a strong standalone solution.

- Better retrieval led to better RAG performance on FinanceBench, demonstrating end-to-end benefits.

Retrieval Performance

After comparing across three datasets, we found that embedding finetuning improves accuracy on two of these datasets. Figure 1 shows that for FinanceBench and ManufactQA, finetuned embeddings outperformed their base versions, sometimes even beating OpenAI’s API model (light grey). For Databricks DocsQA, however, OpenAI text-embedding-3-large accuracy surpasses all finetuned models. It’s possible that this is because the model has been trained on public Databricks documentation. This shows that while finetuning can be effective, it strongly depends on the training dataset and the evaluation task.

Finetuning vs. Reranking

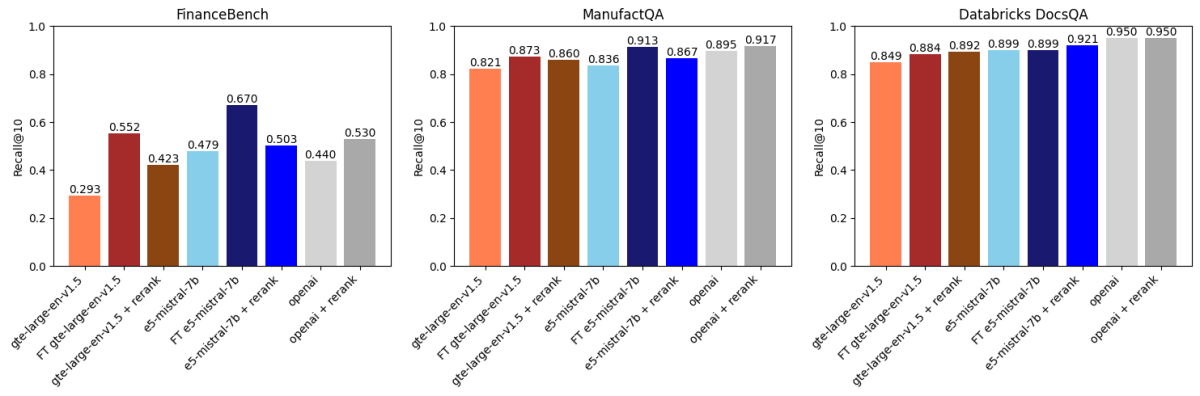

We then compared the above results with API-based reranking using voyageai/rerank-1 (Figure 2). A reranker typically takes the top k results retrieved by an embedding model, reranks these results by relevance to the search query, and then returns the reranked top k (in our case k=30 followed by k=10). This works because rerankers are usually larger, more powerful models than embedding models and also model the interaction between the query and the document in a more expressive way.
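
The two-stage flow described above can be sketched as follows. This is an illustration under stated assumptions, not the evaluation code: `rerank_score` is a hypothetical callable standing in for a reranker model or API call, and the toy scores are invented.

```python
def retrieve_then_rerank(query, embed_scores, rerank_score, first_k=30, final_k=10):
    """Two-stage retrieval: cheap embedding scores first, costlier reranker second.

    embed_scores: dict mapping doc id -> similarity score from the embedding model.
    rerank_score: hypothetical callable (query, doc_id) -> relevance score.
    """
    # Stage 1: keep the first_k candidates by embedding similarity.
    candidates = sorted(embed_scores, key=embed_scores.get, reverse=True)[:first_k]
    # Stage 2: rescore only those candidates with the reranker, keep final_k.
    reranked = sorted(candidates, key=lambda d: rerank_score(query, d), reverse=True)
    return reranked[:final_k]

# Toy data: 100 documents whose embedding score decreases with their index.
embed_scores = {f"doc{i}": 1.0 - i * 0.01 for i in range(100)}

def toy_reranker(query, doc_id):
    """Invented scorer that prefers documents with an index near 20."""
    return -abs(int(doc_id[3:]) - 20.4)

results = retrieve_then_rerank("example query", embed_scores, toy_reranker)
print(results)
```

Note that the reranker only ever sees the first-stage candidates, so a relevant document that the embedding model ranks below position 30 cannot be recovered in the second stage.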

What we found was:

- Finetuning gte-large-en-v1.5 outperformed reranking on FinanceBench and ManufactQA.

- OpenAI’s text-embedding-3-large benefited from reranking, but the improvements were marginal on some datasets.

- For Databricks DocsQA, reranking had a smaller impact, but finetuning still brought improvements, showing the dataset-dependent nature of these techniques.

Rerankers usually incur additional per-query inference latency and cost relative to embedding models. However, they can be used with existing vector databases and can in some cases be more cost-effective than re-embedding data with a newer embedding model. Whether to use a reranker depends on your domain and your latency and cost requirements.

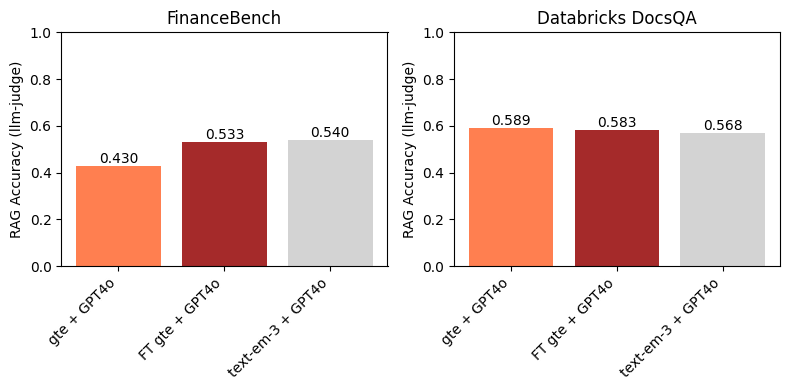

Finetuning Helps RAG Performance

For FinanceBench, better retrieval translated directly to better RAG accuracy when combined with GPT-4o (see Appendix). However, in domains where retrieval was already strong, such as Databricks DocsQA, finetuning didn’t add much, highlighting that finetuning works best when retrieval is a clear bottleneck.

How We Finetuned and Evaluated Embedding Models

Here are some of the more technical details of our synthetic data generation, finetuning, and evaluation.

Embedding Models

We finetuned two open-source embedding models:

- gte-large-en-v1.5 is a popular embedding model based on BERT Large (434M parameters, 1.75 GB). We chose to run experiments on this model because of its modest size and open licensing. This embedding model is also currently supported on the Databricks Foundation Model API.

- e5-mistral-7b-instruct belongs to a newer class of embedding models built on top of strong LLMs (in this case Mistral-7b-instruct-v0.1). Although e5-mistral-7b-instruct is better on standard embedding benchmarks such as MTEB and is able to handle longer and more nuanced prompts, it is much larger than gte-large-en-v1.5 (it has 7 billion parameters) and is slightly slower and more expensive to serve.

We then compared them against OpenAI’s text-embedding-3-large.

Evaluation Datasets

We evaluated all models on the following datasets from our Domain Intelligence Benchmark Suite (DIBS): FinanceBench, ManufactQA, and Databricks DocsQA.

| Dataset | Description | # Queries | Corpus Size |
|---|---|---|---|
| FinanceBench | Questions about SEC 10-K documents generated by human experts. Retrieval is done over individual pages from a superset of 360 SEC 10-K filings. | 150 | 53,399 |
| ManufactQA | Questions and answers sampled from public forums of an electronic devices manufacturer. | 6,787 | 6,787 |
| Databricks DocsQA | Questions based on publicly available Databricks documentation generated by Databricks experts. | 139 | 7,561 |

We report recall@10 as our main retrieval metric; this measures whether the correct document is in the top 10 retrieved documents.
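
As a minimal sketch of how this metric can be computed, assuming each query has exactly one correct document (the function name and data layout are illustrative, not taken from the benchmark code):

```python
def recall_at_k(retrieved, relevant, k=10):
    """Fraction of queries whose correct document appears in the top k results.

    retrieved: dict mapping query id -> ranked list of doc ids (best first).
    relevant:  dict mapping query id -> the single correct doc id.
    """
    hits = sum(
        1 for query_id, gold in relevant.items()
        if gold in retrieved.get(query_id, [])[:k]
    )
    return hits / len(relevant)

# Toy example with three queries (made-up ids).
retrieved = {"q1": ["d3", "d7", "d1"], "q2": ["d9", "d2"], "q3": ["d5"]}
relevant = {"q1": "d7", "q2": "d4", "q3": "d5"}

print(recall_at_k(retrieved, relevant, k=10))
print(recall_at_k(retrieved, relevant, k=1))
```

With k=10 two of the three queries count as hits; with k=1 only the query whose correct document is ranked first does.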

The gold standard for embedding model quality is the MTEB benchmark, which incorporates retrieval tasks such as BEIR as well as many other non-retrieval tasks. While models such as gte-large-en-v1.5 and e5-mistral-7b-instruct do well on MTEB, we were curious to see how they performed on our internal enterprise tasks.

Training Data

We trained separate models on synthetic data tailored for each of the benchmarks above:

| Training Set | Description | # Unique Samples |
|---|---|---|
| Synthetic FinanceBench | Queries generated from 2,400 SEC 10-K documents | ~6,000 |
| Synthetic Databricks DocsQA | Queries generated from public Databricks documentation | 8,727 |
| ManufactQA | Queries generated from electronics manufacturing PDFs | 14,220 |

To generate the training set for each domain, we took existing documents and generated sample queries grounded in the content of each document using LLMs such as Llama 3 405B. The synthetic queries were then filtered for quality by an LLM-as-a-judge (GPT-4o). The filtered queries and their associated documents were then used as contrastive pairs for finetuning. We used in-batch negatives for contrastive training, but adding hard negatives could further improve performance (see Appendix).
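
For readers unfamiliar with in-batch negatives, the following is a pure-Python sketch of an InfoNCE-style contrastive loss. It is not the training code used here (that ran on the MosaicML libraries), and the temperature value is an arbitrary illustration rather than a setting from our sweeps. For query i, document i is the positive and every other document in the batch acts as a negative.

```python
import math

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def normalize(v):
    norm = math.sqrt(dot(v, v))
    return [x / norm for x in v]

def in_batch_contrastive_loss(query_vecs, doc_vecs, temperature=0.05):
    """InfoNCE-style loss with in-batch negatives.

    Row i of doc_vecs is the positive for query i; all other rows
    in the batch serve as negatives for that query.
    """
    queries = [normalize(q) for q in query_vecs]
    docs = [normalize(d) for d in doc_vecs]
    total = 0.0
    for i, q in enumerate(queries):
        # Cosine similarities scaled by the softmax temperature.
        logits = [dot(q, d) / temperature for d in docs]
        # Numerically stable log of the softmax denominator.
        m = max(logits)
        log_denominator = m + math.log(sum(math.exp(l - m) for l in logits))
        # Negative log-probability assigned to the positive document.
        total += -(logits[i] - log_denominator)
    return total / len(queries)

# Toy batch: queries aligned with their documents vs. deliberately swapped.
queries = [[1.0, 0.0], [0.0, 1.0]]
aligned = in_batch_contrastive_loss(queries, [[1.0, 0.0], [0.0, 1.0]])
swapped = in_batch_contrastive_loss(queries, [[0.0, 1.0], [1.0, 0.0]])
print(aligned, swapped)
```

The loss is near zero when each query is closest to its own document and large when another document in the batch is closer, which is the signal that pulls matching pairs together during finetuning.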

Hyperparameter Tuning

We ran sweeps across:

- Learning rate, batch size, softmax temperature

- Epoch count (1-3 epochs tested)

- Query prompt variations (e.g., “Query:” vs. instruction-based prompts)

- Pooling strategy (mean pooling vs. last token pooling)
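
As an illustration of the last item, the two pooling strategies differ in how per-token vectors are collapsed into a single embedding. The sketch below uses plain Python lists and assumes right-side padding; the function names are illustrative and do not come from any particular library.

```python
def mean_pool(token_vecs, attention_mask):
    """Average the vectors of real (non-padding) tokens."""
    kept = [v for v, m in zip(token_vecs, attention_mask) if m == 1]
    dim = len(token_vecs[0])
    return [sum(v[j] for v in kept) / len(kept) for j in range(dim)]

def last_token_pool(token_vecs, attention_mask):
    """Use the vector of the final real token (assumes right-side padding)."""
    last_index = sum(attention_mask) - 1
    return token_vecs[last_index]

# Three real tokens followed by one padding position (toy values).
token_vecs = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0], [0.0, 0.0]]
attention_mask = [1, 1, 1, 0]

mean_embedding = mean_pool(token_vecs, attention_mask)
last_embedding = last_token_pool(token_vecs, attention_mask)
print(mean_embedding, last_embedding)
```

Which strategy works better tends to depend on the model architecture, which is why it is worth including in a sweep rather than fixing in advance.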

All finetuning was done using the open source mosaicml/composer, mosaicml/llm-foundry, and mosaicml/streaming libraries on the Databricks platform.

How to Improve Vector Search and RAG on Databricks

Finetuning is only one approach for improving vector search and RAG performance; we list a few more approaches below.

For Better Retrieval:

- Use a better embedding model: Many users unknowingly work with outdated embeddings. Simply swapping in a higher-performing model can yield immediate gains. Check the MTEB leaderboard for top models.

- Try hybrid search: Combine dense embeddings with keyword-based search for improved accuracy. Databricks Vector Search makes this easy with a one-click solution.

- Use a reranker: A reranker can refine results by reordering them based on relevance. Databricks provides this as a built-in feature (currently in Private Preview). Reach out to your Account Executive to try it.
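
To give a feel for what hybrid search does, here is a sketch of reciprocal rank fusion (RRF), one common way to merge a dense ranking with a keyword ranking. Using RRF here is an assumption made for illustration; it is not a description of how Databricks Vector Search combines results internally.

```python
def reciprocal_rank_fusion(rankings, k=60):
    """Fuse several ranked lists of doc ids into one ranked list.

    Each document scores sum(1 / (k + rank)) over the lists it appears in,
    with rank starting at 1. k=60 is a commonly used default.
    """
    scores = {}
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] = scores.get(doc_id, 0.0) + 1.0 / (k + rank)
    return sorted(scores, key=scores.get, reverse=True)

# Toy rankings from a dense retriever and a keyword retriever (made-up ids).
dense_ranking = ["d1", "d2", "d3"]
keyword_ranking = ["d3", "d1", "d4"]

fused = reciprocal_rank_fusion([dense_ranking, keyword_ranking])
print(fused)
```

Documents that appear near the top of both lists rise to the top of the fused list, while documents found by only one retriever are still kept as candidates.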

For Better RAG:

Get Started with Finetuning on Databricks

Finetuning embeddings can be an easy win for improving retrieval and RAG in your AI systems. On Databricks, you can:

- Finetune and serve embedding models on scalable infrastructure.

- Use built-in tools for vector search, reranking, and RAG.

- Quickly test different models to find what works best for your use case.

Ready to try it? We’ve built a reference solution to make finetuning easier. Reach out to your Databricks Account Executive or Solutions Architect to get access.

Appendix

Table 1: Comparison of gte-large-en-v1.5, e5-mistral-7b-instruct and text-embedding-3-large. Same data as Figure 1.

Generating Synthetic Training Data

For all datasets, the queries in the training set were not the same as the queries in the test set. However, in the case of Databricks DocsQA (but not FinanceBench or ManufactQA), the documents used to generate synthetic queries were the same documents used in the evaluation set. The focus of our study is to improve retrieval on particular tasks and domains (as opposed to a zero-shot, generalizable embedding model); we therefore see this as a valid approach for certain production use cases. For FinanceBench and ManufactQA, the documents used to generate synthetic data did not overlap with the corpus used for evaluation.

There are many ways to select negative passages for contrastive training. They can either be selected randomly, or they can be pre-defined. In the first case, the negative passages are selected from within the training batch; these are often referred to as “in-batch negatives” or “soft negatives”. In the second case, the user preselects text examples that are semantically difficult, i.e. they are potentially related to the query but slightly incorrect or irrelevant. These are often called “hard negatives”. In this work, we simply used in-batch negatives; the literature indicates that using hard negatives would likely lead to even better results.

Finetuning Details

For all finetuning experiments, the maximum sequence length was set to 2048. We then evaluated all checkpoints. For all benchmarking, corpus documents were truncated to 2048 tokens (not chunked), which was a reasonable constraint for our particular datasets. We chose the strongest baselines on each benchmark after sweeping over query prompts and pooling strategy.

Improving RAG Performance

A RAG system consists of both a retriever and a generative model. The retriever selects a set of documents relevant to a particular query, and then feeds them to the generative model. We selected the best finetuned gte-large-en-v1.5 models and used them for the first retrieval stage of a simple RAG system (following the general approach described in Long Context RAG Performance of LLMs and The Long Context RAG Capabilities of OpenAI o1 and Google Gemini). Specifically, we retrieved k=10 documents, each with a maximum length of 512 tokens, and used GPT-4o as the generative LLM. Final accuracy was evaluated using an LLM-as-a-judge (GPT-4o).
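
The retrieval-to-generation handoff can be sketched roughly as follows. This is a simplified illustration, not the pipeline we ran: whitespace splitting stands in for the real tokenizer, the prompt wording is invented, and the call to the generative LLM is left out.

```python
def truncate(text, max_tokens=512):
    """Keep the first max_tokens whitespace-separated tokens.

    Whitespace splitting is a stand-in for the real model tokenizer.
    """
    return " ".join(text.split()[:max_tokens])

def build_rag_prompt(question, ranked_docs, k=10, max_tokens=512):
    """Assemble a prompt from the top-k retrieved documents (best first)."""
    context = [truncate(doc, max_tokens) for doc in ranked_docs[:k]]
    numbered = "\n\n".join(f"[{i}] {c}" for i, c in enumerate(context, start=1))
    # Hypothetical prompt template, for illustration only.
    return (
        "Answer the question using only the context below.\n\n"
        f"{numbered}\n\nQuestion: {question}"
    )

# Twelve long toy documents; only the first ten, truncated, reach the prompt.
docs = [("word " * 600).strip() for _ in range(12)]
prompt = build_rag_prompt("What was revenue?", docs)
print(prompt[:80])
```

Because the generator only sees what the retriever passes along, any relevant document missing from the top k is invisible to it, which is why retrieval quality bounds downstream accuracy.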

On FinanceBench, Figure 3 shows that using a finetuned embedding model leads to an improvement in downstream RAG accuracy. Additionally, it is competitive with text-embedding-3-large. This is expected, since finetuning gte led to a large improvement in Recall@10 over baseline gte (Figure 1). This example highlights the efficacy of embedding model finetuning on particular domains and datasets.

On the Databricks DocsQA dataset, we do not find any improvements when using the finetuned gte model over baseline gte. This is somewhat expected, since the margins between the baseline and finetuned models in Figures 1 and 2 are small. Interestingly, even though text-embedding-3-large has (slightly) higher Recall@10 than any of the gte models, it does not lead to higher downstream RAG accuracy. As shown in Figure 1, all the embedding models had relatively high Recall@10 on the Databricks DocsQA dataset; this suggests that retrieval is likely not the bottleneck for RAG, and that finetuning an embedding model on this dataset is not necessarily the most fruitful approach.

We would like to thank Quinn Leng and Matei Zaharia for feedback on this blog post.