Data teams spend far too much time troubleshooting issues, applying patches, and restarting failed workloads. It isn’t unusual for engineers to spend an entire day investigating and debugging their workloads.

We have now made it easier for data engineers to monitor and diagnose issues with their jobs. With these capabilities you know when a job run fails or takes an unusually long time, understand the reason for the failure, and quickly remediate the root cause of the problem.

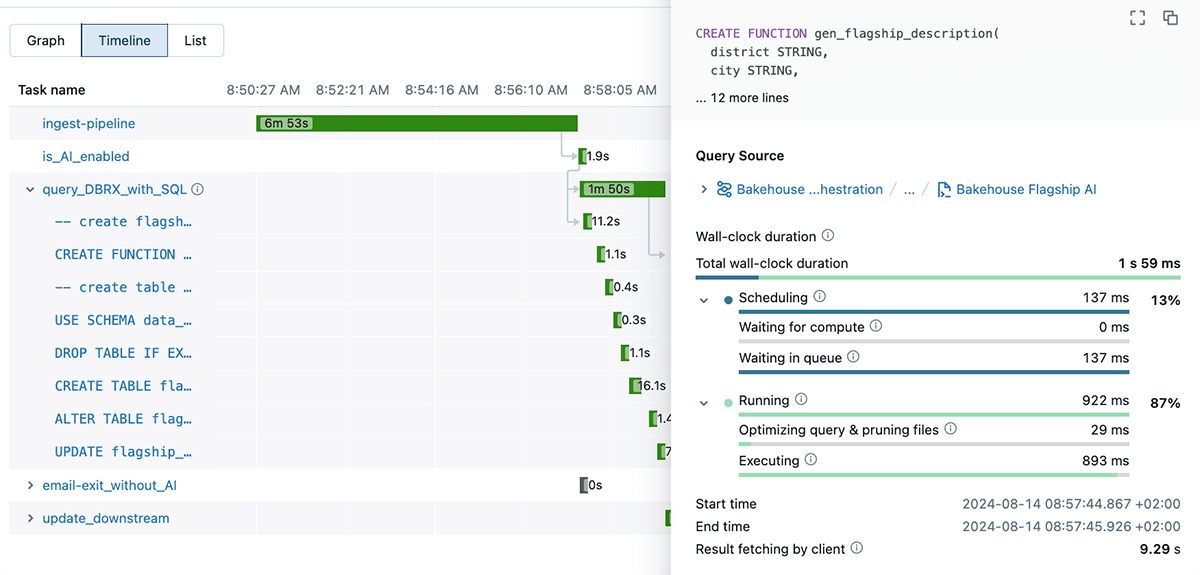

Visualize job runs in a Timeline view

As a data engineer, the first step in optimizing a workload is understanding where time is spent. In a complex data workflow, that can feel like searching for a needle in a haystack. The new Timeline view displays job runs as horizontal bars on a timeline, showing task dependencies, durations, and statuses. It lets you quickly pinpoint bottlenecks and the areas where your DAG runs spend the most time. By providing a comprehensive overview of how tasks overlap and where delays occur, the Timeline view helps you streamline your processes and improve efficiency.

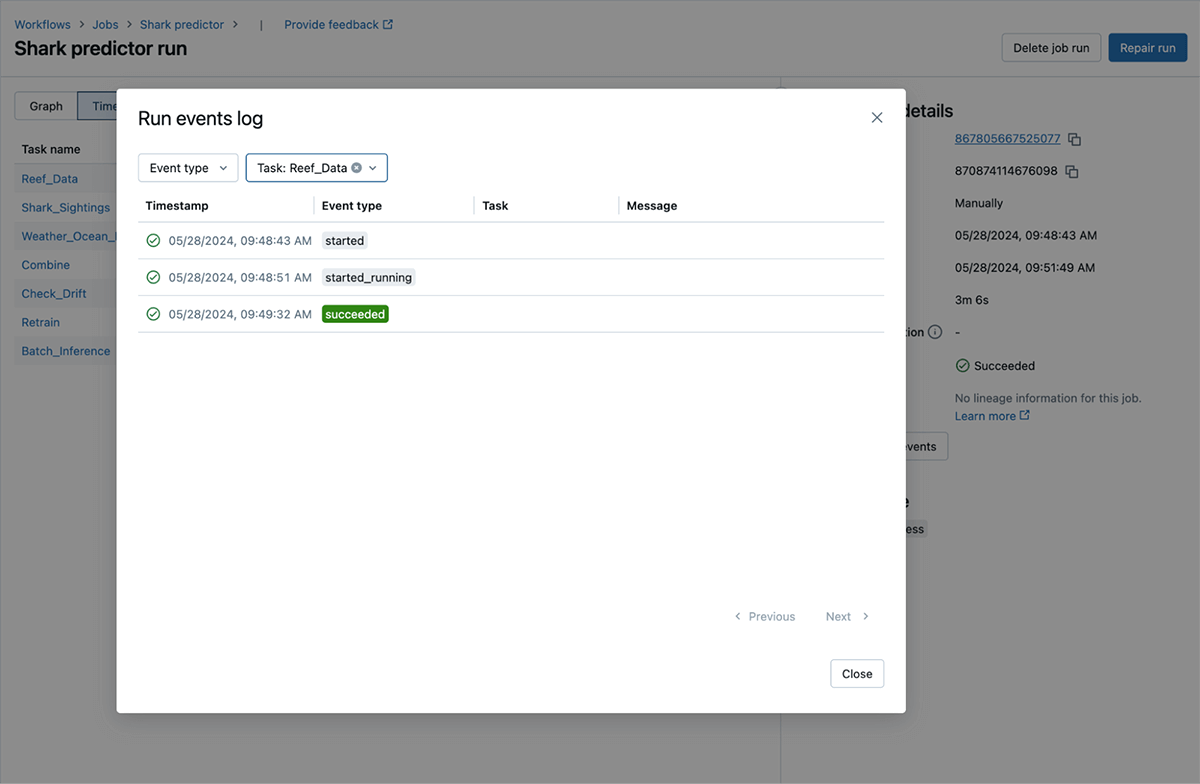
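To make the idea of a bottleneck concrete, here is a minimal Python sketch of the reasoning the Timeline view supports visually: given task durations and dependencies, find the longest dependency chain (the critical path) and the slowest task on it. The task names, durations, and the `critical_path` helper are all hypothetical illustrations, not part of any Databricks API.

```python
from functools import lru_cache

# Hypothetical task data: name -> (duration in seconds, upstream dependencies).
tasks = {
    "ingest": (120, []),
    "clean": (300, ["ingest"]),
    "features": (900, ["clean"]),
    "report": (60, ["clean"]),
    "train": (600, ["features"]),
}


def critical_path(tasks):
    """Return (total_seconds, path) for the longest dependency chain."""

    @lru_cache(maxsize=None)
    def finish(name):
        duration, deps = tasks[name]
        # A task can start only after its slowest upstream chain has finished.
        best = max((finish(dep) for dep in deps), default=(0, ()))
        return best[0] + duration, best[1] + (name,)

    return max(finish(name) for name in tasks)


total, path = critical_path(tasks)
# The slowest single task on the critical path is the first place to look.
slowest = max(path, key=lambda name: tasks[name][0])
print(f"critical path: {' -> '.join(path)} ({total}s), slowest task: {slowest}")
```

With this sample data the longest chain is ingest, clean, features, train at 1920 seconds, and `features` is the task most worth optimizing. Shortening `report` would not change the overall run time, because it is not on the critical path.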

Run Events: See important information about job progress

Tracking the progress of workflow runs can often be opaque and cumbersome, requiring you to review detailed logs to gather essential troubleshooting information. We have built run events to visualize run progress directly within the product. With this feature, important and relevant events (such as compute startup and shutdown, users starting a run, retries, status changes, and notifications) are easy to find.

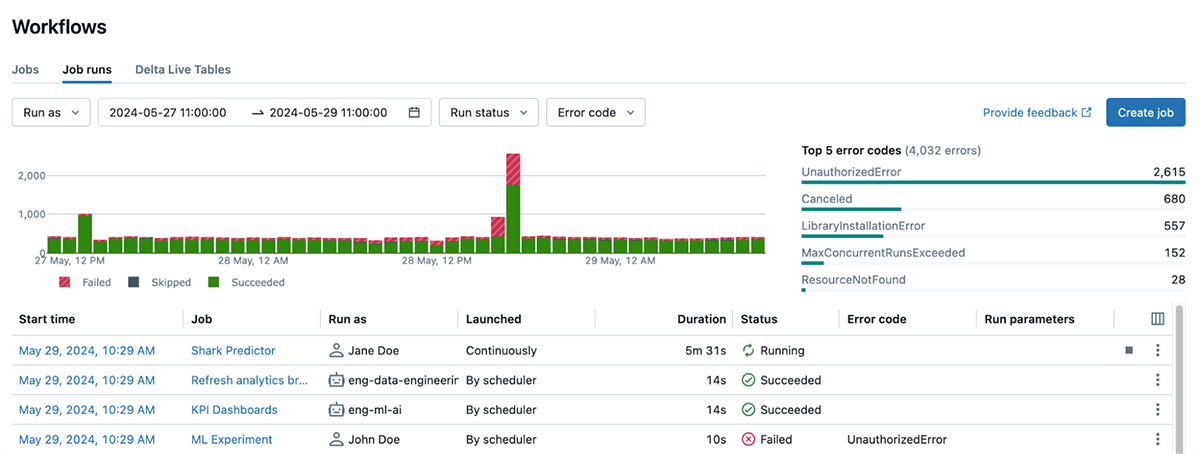

Better, simpler, and actionable errors

Navigating error messages can often be daunting, confusing, and time-consuming, especially when those messages are inconsistent and overly technical. We have simplified error codes and made them much more actionable. This helps you monitor unusual errors across jobs, filter runs by error code, and resolve run failures much faster. The error descriptions make it easy to quickly understand what went wrong without sifting through complex logs and re-reading your entire code. For example, an UnauthorizedError on a run tells you that there is a permission issue accessing a resource the job run needs.
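As a rough illustration of why consistent error codes help, the Python sketch below counts failures per code and filters runs by one code. The run records, their field names, and every code other than `UnauthorizedError` are made up for this example; this is not the Workflows API or its data model.

```python
from collections import Counter

# Hypothetical run records. Only UnauthorizedError is named in this post;
# the other codes and all field names are placeholders for illustration.
runs = [
    {"run_id": 1, "job": "daily_etl", "error_code": "UnauthorizedError"},
    {"run_id": 2, "job": "daily_etl", "error_code": None},  # succeeded
    {"run_id": 3, "job": "weekly_report", "error_code": "ExampleErrorA"},
    {"run_id": 4, "job": "weekly_report", "error_code": "UnauthorizedError"},
    {"run_id": 5, "job": "model_refresh", "error_code": "ExampleErrorB"},
]


def count_by_error(runs):
    """Count failed runs per error code, ignoring successful runs."""
    return Counter(r["error_code"] for r in runs if r["error_code"])


def filter_runs(runs, error_code):
    """Return only the runs that failed with the given error code."""
    return [r for r in runs if r["error_code"] == error_code]


counts = count_by_error(runs)
unauthorized = filter_runs(runs, "UnauthorizedError")
print(counts.most_common())
print([r["run_id"] for r in unauthorized])
```

Because the same code appears on every run that fails for the same reason, a simple count shows at a glance whether a failure is a one-off or a pattern across jobs.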

Databricks Assistant now integrated with Workflows

Databricks Assistant, our AI-powered Data Intelligence Engine, now diagnoses job failures and offers steps to fix and test the solution. You get context-aware help within Databricks Workflows, when and where you need it most. This feature is currently supported for notebook tasks only, but support for other task types will be added soon.

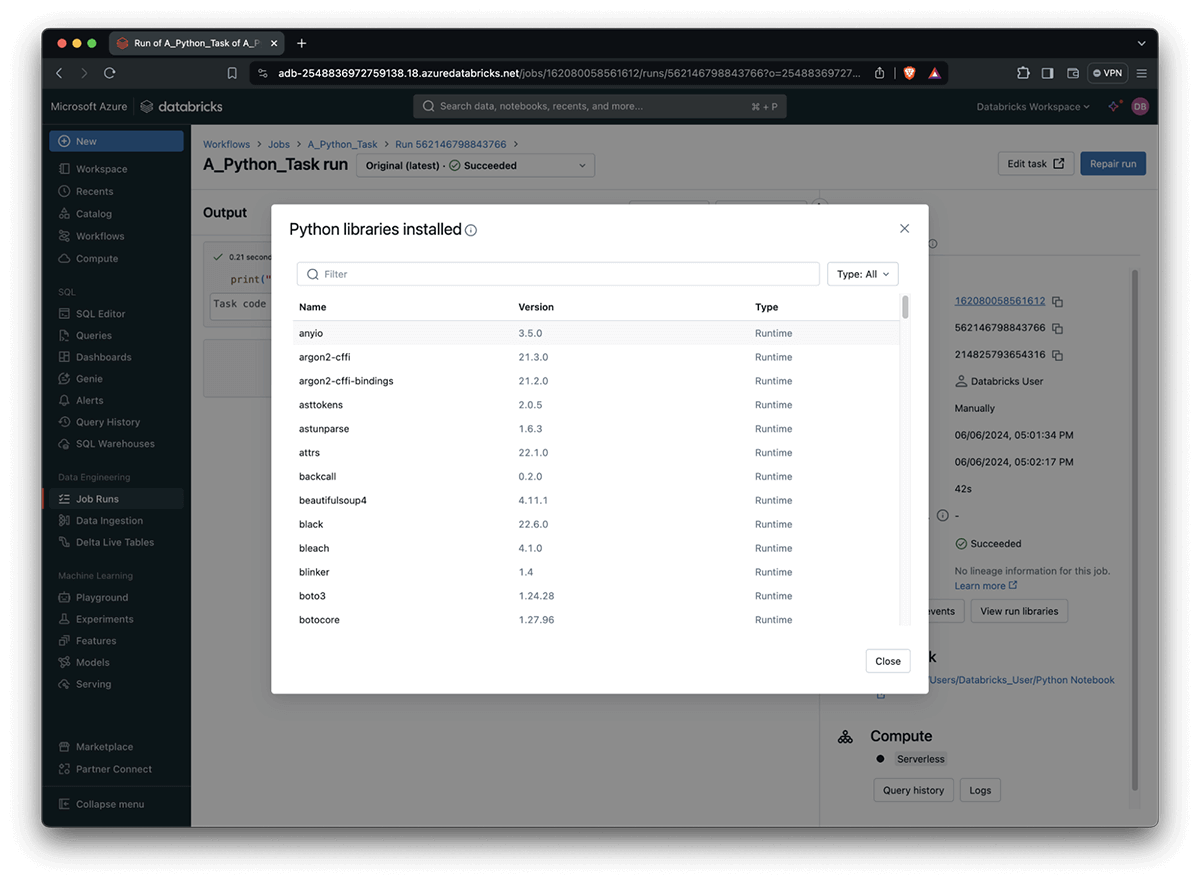

List the Python libraries used by your jobs

Conflicting versions, broken packages, and cryptic errors make debugging library issues a frustrating and time-consuming challenge. You can now list the Python libraries used by your task run, along with the version number of each. This is especially helpful because Python packages might already be pre-installed as part of your DBR image or installed during bootstrap actions on your compute cluster. The feature also highlights which of these sources resulted in the package version that was used.
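If you want a comparable check from inside your own code, the sketch below uses Python's standard `importlib.metadata` module to list the packages visible to the current interpreter. It is not how the Workflows feature is implemented, and it cannot tell you where a package came from; the `installed_packages` helper is a hypothetical name used only for this example.

```python
from importlib import metadata


def installed_packages():
    """Map each installed distribution name (lowercased) to its version."""
    packages = {}
    for dist in metadata.distributions():
        name = dist.metadata["Name"]
        # Skip distributions with missing or broken metadata.
        if name:
            packages[name.lower()] = dist.version
    # Sort by name so the output is stable and easy to compare between runs.
    return dict(sorted(packages.items()))


pkgs = installed_packages()
for name, version in pkgs.items():
    print(f"{name}=={version}")
```

Saving this output from a passing run and a failing run and comparing the two is a quick way to spot a version that changed between them.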

How to get started?

To get started with Databricks Workflows, see the quickstart guide. You can try these capabilities on Azure, AWS, and GCP today by simply clicking the Workflows tab.

What’s Next

We will continue to improve our monitoring, alerting, and management capabilities. We’re working on new ways to find the jobs you care about by improving search and tagging. We would also love to hear from you about your experience and any other features you’d like to see.