Within the dynamic world of synthetic intelligence and tremendous development of Generative AI, builders are always in search of revolutionary methods to extract significant perception from textual content. This weblog submit walks you thru an thrilling challenge that harnesses the facility of Google’s Gemini AI to create an clever English Educator Software that analyzes textual content paperwork and supplies tough phrases, medium phrases, their synonyms, antonyms, use-cases and in addition supplies the necessary questions with a solution from the textual content. I imagine training is the sector that advantages most from the developments of Generative AIs or LLMs and it’s GREAT!

Studying Aims

- Integrating Google Gemini AI fashions into Python-based APIs.

- Perceive the best way to combine and make the most of the English Educator App API to boost language studying functions with real-time information and interactive options.

- Learn to leverage the English Educator App API to construct personalized instructional instruments, bettering consumer engagement and optimizing language instruction.

- Implementation of clever textual content evaluation utilizing superior AI prompting.

- Managing complicated AI interplay error-free with error-handling strategies.

This text was printed as part of the Information Science Blogathon.

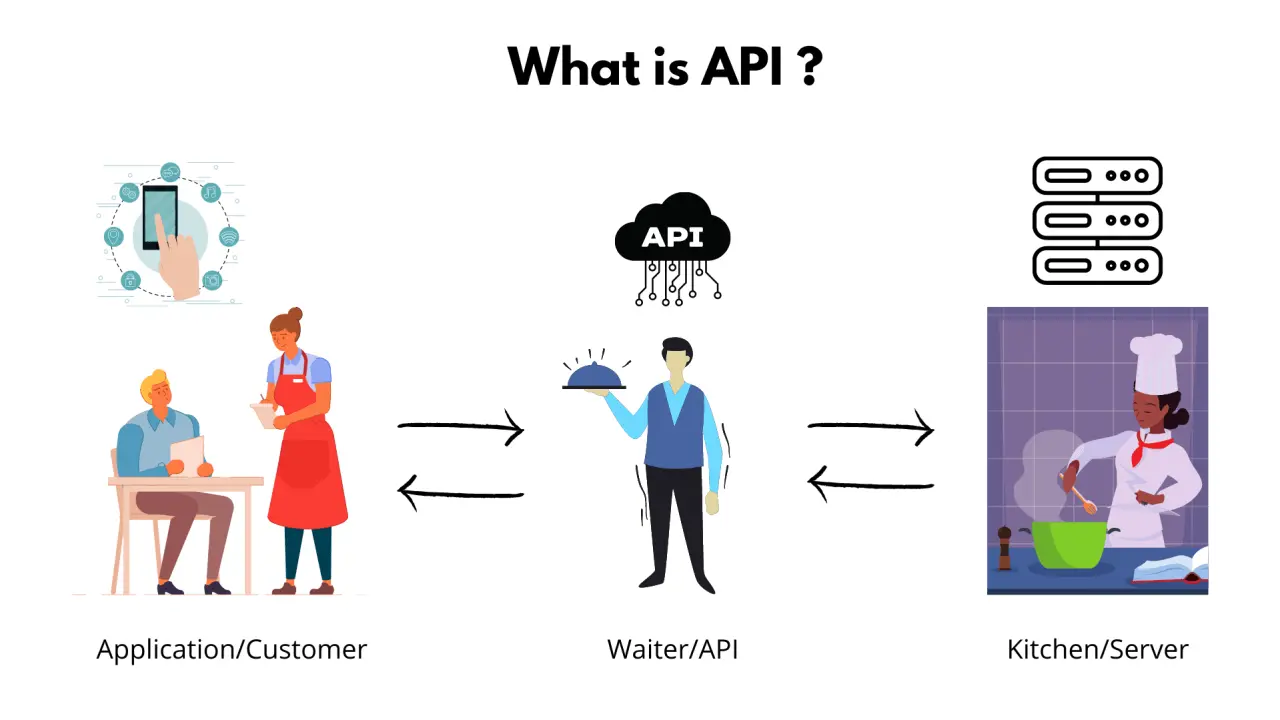

What are APIs?

API (Software Programming Interfaces) function a digital bridge between totally different software program functions. They’re outlined as a set of protocols and guidelines that allow seamless communication, permitting builders to entry particular functionalities with out diving into complicated underlying implementation.

What’s REST API?

REST (Representational State Switch) is an architectural model for designing networked functions. It makes use of normal HTTP strategies to carry out operations on sources.

Vital REST strategies are:

- GET: Retrieve information from a server.

- POST: Create new sources.

- PUT: Replace current sources fully.

- PATCH: Partially replace current sources.

- DELETE: Take away sources.

Key traits embrace:

- Stateless communication

- Uniform interface

- Consumer-Serve structure

- Cacheable sources

- Layered system design

REST APIs use URLs to determine sources and usually return information in JSON codecs. They supply a standardized, scalable method for various functions to speak over the web, making them elementary in trendy internet and cell improvement.

Pydantic and FastAPI: A Excellent Pair

Pydantic revolutionizes information validation in Python by permitting builders to create sturdy information fashions with kind hints and validation guidelines. It ensures information integrity and supplies crystal-clear interface definitions, catching potential errors earlier than they propagate via the system.

FastAPI enhances Pydantic superbly, providing a contemporary, high-performance asynchronous internet framework for constructing APIs.

Its key benefit of FastAPI:

- Computerized interactive API documentation

- Excessive-speed efficiency

- Constructed-in assist for Asynchronous Server Gateway Interface

- Intuitive information validation

- Clear and easy syntax

A Temporary on Google Gemini

Google Gemini represents a breakthrough in multimodal AI fashions, able to processing complicated data throughout textual content, code, audio, and picture. For this challenge, I leverage the ‘gemini-1.5-flash’ mannequin, which supplies:

- Fast and clever textual content processing utilizing prompts.

- Superior pure language understanding.

- Versatile system directions for personalized outputs utilizing prompts.

- Means to generate a nuanced, context-aware response.

Mission Setup and Atmosphere Configuration

Establishing the event atmosphere is essential for a easy implementation. We use Conda to create an remoted, reproducible atmosphere

# Create a brand new conda atmosphere

conda create -n educator-api-env python=3.11

# Activate the atmosphere

conda activate educator-api-env

# Set up required packages

pip set up "fastapi[standard]" google-generativeai python-dotenvMission Architectural Elements

Our API is structured into three main parts:

- fashions.py : Outline information constructions and validation

- providers.py : Implements AI-powered textual content extractor providers

- foremost.py : Create API endpoints and handles request routing

Constructing the API: Code Implementation

Getting Google Gemini API Key and Safety setup for the challenge.

Create a .env file within the challenge root, Seize your Gemini API Key from right here, and put your key within the .env file

GOOGLE_API_KEY="ABCDEFGH-67xGHtsf"This file will probably be securely accessed by the service module utilizing os.getenv(“

Pydantic Fashions: Making certain Information Integrity

We outline structured fashions that assure information consistency for the Gemini response. We are going to implement two information fashions for every information extraction service from the textual content.

Vocabulary information extraction mannequin:

- WordDetails: It can construction and validate the extracted phrase from the AI

from pydantic import BaseModel, Subject

from typing import Listing, Non-compulsory

class WordDetails(BaseModel):

phrase: str = Subject(..., description="Extracted vocabulary phrase")

synonyms: Listing[str] = Subject(

default_factory=record, description="Synonyms of the phrase"

)

antonyms: Listing[str] = Subject(

default_factory=record, description="Antonyms of the phrase"

)

usecase: Non-compulsory[str] = Subject(None, description="Use case of the phrase")

instance: Non-compulsory[str] = Subject(None, description="Instance sentence")- VocabularyResponse: It can construction and validate the extracted phrases into two classes very tough phrases and medium tough phrases.

class VocabularyResponse(BaseModel):

difficult_words: Listing[WordDetails] = Subject(

..., description="Listing of inauspicious vocabulary phrases"

)

medium_words: Listing[WordDetails] = Subject(

..., description="Listing of medium vocabulary phrases"

)Query and Reply extraction mannequin

- QuestionAnswerModel: It can construction and validate the extracted questions and solutions.

class QuestionAnswerModel(BaseModel):

query: str = Subject(..., description="Query")

reply: str = Subject(..., description="Reply")- QuestionAnswerResponse: It can construction and validate the extracted responses from the AI.

class QuestionAnswerResponse(BaseModel):

questions_and_answers: Listing[QuestionAnswerModel] = Subject(

..., description="Listing of questions and solutions"

)These fashions present automated validation, kind checking, and clear interface definitions, stopping potential runtime errors.

Service Module: Clever Textual content Processing

The service module has two providers:

This service GeminiVocabularyService amenities:

- Makes use of Gemini to determine difficult phrases.

- Generates complete phrase insights.

- Implement sturdy JSON parsing.

- Manages potential error eventualities.

First, now we have to import all the mandatory libraries and arrange the logging and atmosphere variables.

import os

import json

import logging

from fastapi import HTTPException

import google.generativeai as genai

from dotenv import load_dotenv

# Configure logging

logging.basicConfig(degree=logging.INFO)

logger = logging.getLogger(__name__)

# Load atmosphere variables

load_dotenv()This GeminiVocabularyService class has three methodology.

The __init__ Methodology has necessary Gemini configuration, Google API Key, Setting generative mannequin, and immediate for the vocabulary extraction.

Immediate:

"""You're an skilled vocabulary extractor.

For the given textual content:

1. Establish 3-5 difficult vocabulary phrases

2. Present the next for EACH phrase in a STRICT JSON format:

- phrase: The precise phrase

- synonyms: Listing of 2-3 synonyms

- antonyms: Listing of 2-3 antonyms

- usecase: A quick clarification of the phrase's utilization

- instance: An instance sentence utilizing the phrase

IMPORTANT: Return ONLY a sound JSON that matches this construction:

{

"difficult_words": [

{

"word": "string",

"synonyms": ["string1", "string2"],

"antonyms": ["string1", "string2"],

"usecase": "string",

"instance": "string"

}

],

"medium_words": [

{

"word": "string",

"synonyms": ["string1", "string2"],

"antonyms": ["string1", "string2"],

"usecase": "string",

"instance": "string"

}

],

}

""" Code Implementation

class GeminiVocabularyService:

def __init__(self):

_google_api_key = os.getenv("GOOGLE_API_KEY")

# Retrieve API Key

self.api_key = _google_api_key

if not self.api_key:

increase ValueError(

"Google API Secret is lacking. Please set GOOGLE_API_KEY in .env file."

)

# Configure Gemini API

genai.configure(api_key=self.api_key)

# Era Configuration

self.generation_config = {

"temperature": 0.7,

"top_p": 0.95,

"max_output_tokens": 8192,

}

# Create Generative Mannequin

self.vocab_model = genai.GenerativeModel(

model_name="gemini-1.5-flash",

generation_config=self.generation_config, # kind: ignore

system_instruction="""

You're an skilled vocabulary extractor.

For the given textual content:

1. Establish 3-5 difficult vocabulary phrases

2. Present the next for EACH phrase in a STRICT JSON format:

- phrase: The precise phrase

- synonyms: Listing of 2-3 synonyms

- antonyms: Listing of 2-3 antonyms

- usecase: A quick clarification of the phrase's utilization

- instance: An instance sentence utilizing the phrase

IMPORTANT: Return ONLY a sound JSON that matches this construction:

{

"difficult_words": [

{

"word": "string",

"synonyms": ["string1", "string2"],

"antonyms": ["string1", "string2"],

"usecase": "string",

"instance": "string"

}

],

"medium_words": [

{

"word": "string",

"synonyms": ["string1", "string2"],

"antonyms": ["string1", "string2"],

"usecase": "string",

"instance": "string"

}

],

}

""",

)The extracted_vocabulary methodology has the chat course of, and response from the Gemini by sending textual content enter utilizing the sending_message_async() operate. This methodology has one non-public utility operate _parse_response(). This non-public utility operate will validate the response from the Gemini, test the mandatory parameters then parse the information to the extracted vocabulary operate. It can additionally log the errors comparable to JSONDecodeError, and ValueError for higher error administration.

Code Implementation

The extracted_vocabulary methodology:

async def extract_vocabulary(self, textual content: str) -> dict:

attempt:

# Create a brand new chat session

chat_session = self.vocab_model.start_chat(historical past=[])

# Ship message and await response

response = await chat_session.send_message_async(textual content)

# Extract and clear the textual content response

response_text = response.textual content.strip()

# Try and extract JSON

return self._parse_response(response_text)

besides Exception as e:

logger.error(f"Vocabulary extraction error: {str(e)}")

logger.error(f"Full response: {response_text}")

increase HTTPException(

status_code=500, element=f"Vocabulary extraction failed: {str(e)}"

)The _parsed_response methodology:

def _parse_response(self, response_text: str) -> dict:

# Take away markdown code blocks if current

response_text = response_text.exchange("```json", "").exchange("```", "").strip()

attempt:

# Try and parse JSON

parsed_data = json.masses(response_text)

# Validate the construction

if (

not isinstance(parsed_data, dict)

or "difficult_words" not in parsed_data

):

increase ValueError("Invalid JSON construction")

return parsed_data

besides json.JSONDecodeError as json_err:

logger.error(f"JSON Decode Error: {json_err}")

logger.error(f"Problematic response: {response_text}")

increase HTTPException(

status_code=400, element="Invalid JSON response from Gemini"

)

besides ValueError as val_err:

logger.error(f"Validation Error: {val_err}")

increase HTTPException(

status_code=400, element="Invalid vocabulary extraction response"

)The entire CODE of the GeminiVocabularyService module.

class GeminiVocabularyService:

def __init__(self):

_google_api_key = os.getenv("GOOGLE_API_KEY")

# Retrieve API Key

self.api_key = _google_api_key

if not self.api_key:

increase ValueError(

"Google API Secret is lacking. Please set GOOGLE_API_KEY in .env file."

)

# Configure Gemini API

genai.configure(api_key=self.api_key)

# Era Configuration

self.generation_config = {

"temperature": 0.7,

"top_p": 0.95,

"max_output_tokens": 8192,

}

# Create Generative Mannequin

self.vocab_model = genai.GenerativeModel(

model_name="gemini-1.5-flash",

generation_config=self.generation_config, # kind: ignore

system_instruction="""

You're an skilled vocabulary extractor.

For the given textual content:

1. Establish 3-5 difficult vocabulary phrases

2. Present the next for EACH phrase in a STRICT JSON format:

- phrase: The precise phrase

- synonyms: Listing of 2-3 synonyms

- antonyms: Listing of 2-3 antonyms

- usecase: A quick clarification of the phrase's utilization

- instance: An instance sentence utilizing the phrase

IMPORTANT: Return ONLY a sound JSON that matches this construction:

{

"difficult_words": [

{

"word": "string",

"synonyms": ["string1", "string2"],

"antonyms": ["string1", "string2"],

"usecase": "string",

"instance": "string"

}

],

"medium_words": [

{

"word": "string",

"synonyms": ["string1", "string2"],

"antonyms": ["string1", "string2"],

"usecase": "string",

"instance": "string"

}

],

}

""",

)

async def extract_vocabulary(self, textual content: str) -> dict:

attempt:

# Create a brand new chat session

chat_session = self.vocab_model.start_chat(historical past=[])

# Ship message and await response

response = await chat_session.send_message_async(textual content)

# Extract and clear the textual content response

response_text = response.textual content.strip()

# Try and extract JSON

return self._parse_response(response_text)

besides Exception as e:

logger.error(f"Vocabulary extraction error: {str(e)}")

logger.error(f"Full response: {response_text}")

increase HTTPException(

status_code=500, element=f"Vocabulary extraction failed: {str(e)}"

)

def _parse_response(self, response_text: str) -> dict:

# Take away markdown code blocks if current

response_text = response_text.exchange("```json", "").exchange("```", "").strip()

attempt:

# Try and parse JSON

parsed_data = json.masses(response_text)

# Validate the construction

if (

not isinstance(parsed_data, dict)

or "difficult_words" not in parsed_data

):

increase ValueError("Invalid JSON construction")

return parsed_data

besides json.JSONDecodeError as json_err:

logger.error(f"JSON Decode Error: {json_err}")

logger.error(f"Problematic response: {response_text}")

increase HTTPException(

status_code=400, element="Invalid JSON response from Gemini"

)

besides ValueError as val_err:

logger.error(f"Validation Error: {val_err}")

increase HTTPException(

status_code=400, element="Invalid vocabulary extraction response"

)

Query-Reply Era Service

This Query Reply Service amenities:

- Creates contextually wealthy comprehension questions.

- Generates exact, informative solutions.

- Handles complicated textual content evaluation requirement.

- JSON and Worth error dealing with.

This QuestionAnswerService has three strategies:

__init__ methodology

The __init__ methodology is generally the identical because the Vocabulary service class apart from the immediate.

Immediate:

"""

You're an skilled at creating complete comprehension questions and solutions.

For the given textual content:

1. Generate 8-10 numerous questions masking:

- Vocabulary that means

- Literary units

- Grammatical evaluation

- Thematic insights

- Contextual understanding

IMPORTANT: Return ONLY a sound JSON on this EXACT format:

{

"questions_and_answers": [

{

"question": "string",

"answer": "string"

}

]

}

Tips:

- Questions must be clear and particular

- Solutions must be concise and correct

- Cowl totally different ranges of comprehension

- Keep away from sure/no questions

""" Code Implementation:

The __init__ methodology of QuestionAnswerService class

def __init__(self):

_google_api_key = os.getenv("GOOGLE_API_KEY")

# Retrieve API Key

self.api_key = _google_api_key

if not self.api_key:

increase ValueError(

"Google API Secret is lacking. Please set GOOGLE_API_KEY in .env file."

)

# Configure Gemini API

genai.configure(api_key=self.api_key)

# Era Configuration

self.generation_config = {

"temperature": 0.7,

"top_p": 0.95,

"max_output_tokens": 8192,

}

self.qa_model = genai.GenerativeModel(

model_name="gemini-1.5-flash",

generation_config=self.generation_config, # kind: ignore

system_instruction="""

You're an skilled at creating complete comprehension questions and solutions.

For the given textual content:

1. Generate 8-10 numerous questions masking:

- Vocabulary that means

- Literary units

- Grammatical evaluation

- Thematic insights

- Contextual understanding

IMPORTANT: Return ONLY a sound JSON on this EXACT format:

{

"questions_and_answers": [

{

"question": "string",

"answer": "string"

}

]

}

Tips:

- Questions must be clear and particular

- Solutions must be concise and correct

- Cowl totally different ranges of comprehension

- Keep away from sure/no questions

""",

)The Query and Reply Extraction

The extract_questions_and_answers methodology has a chat session with Gemini, a full immediate for higher extraction of questions and solutions from the enter textual content, an asynchronous message despatched to the Gemini API utilizing send_message_async(full_prompt), after which stripping response information for clear information. This methodology additionally has a personal utility operate identical to the earlier one.

Code Implementation:

extract_questions_and_answers

async def extract_questions_and_answers(self, textual content: str) -> dict:

"""

Extracts questions and solutions from the given textual content utilizing the supplied mannequin.

"""

attempt:

# Create a brand new chat session

chat_session = self.qa_model.start_chat(historical past=[])

full_prompt = f"""

Analyze the next textual content and generate complete comprehension questions and solutions:

{textual content}

Make sure the questions and solutions present deep insights into the textual content's that means, model, and context.

"""

# Ship message and await response

response = await chat_session.send_message_async(full_prompt)

# Extract and clear the textual content response

response_text = response.textual content.strip()

# Try and parse and validate the response

return self._parse_response(response_text)

besides Exception as e:

logger.error(f"Query and reply extraction error: {str(e)}")

logger.error(f"Full response: {response_text}")

increase HTTPException(

status_code=500, element=f"Query-answer extraction failed: {str(e)}"

)_parse_response

def _parse_response(self, response_text: str) -> dict:

"""

Parses and validates the JSON response from the mannequin.

"""

# Take away markdown code blocks if current

response_text = response_text.exchange("```json", "").exchange("```", "").strip()

attempt:

# Try and parse JSON

parsed_data = json.masses(response_text)

# Validate the construction

if (

not isinstance(parsed_data, dict)

or "questions_and_answers" not in parsed_data

):

increase ValueError("Response should be a listing of questions and solutions.")

return parsed_data

besides json.JSONDecodeError as json_err:

logger.error(f"JSON Decode Error: {json_err}")

logger.error(f"Problematic response: {response_text}")

increase HTTPException(

status_code=400, element="Invalid JSON response from the mannequin"

)

besides ValueError as val_err:

logger.error(f"Validation Error: {val_err}")

increase HTTPException(

status_code=400, element="Invalid question-answer extraction response"

)API Endpoints: Connecting Customers to AI

The primary file defines two main POST endpoint:

It’s a submit methodology that can primarily eat enter information from the purchasers and ship it to the AI APIs via the vocabulary Extraction Service. It can additionally test the enter textual content for minimal phrase necessities and in spite of everything, it is going to validate the response information utilizing the Pydantic mannequin for consistency and retailer it within the storage.

@app.submit("/extract-vocabulary/", response_model=VocabularyResponse)

async def extract_vocabulary(textual content: str):

# Validate enter

if not textual content or len(textual content.strip()) < 10:

increase HTTPException(status_code=400, element="Enter textual content is just too quick")

# Extract vocabulary

consequence = await vocab_service.extract_vocabulary(textual content)

# Retailer vocabulary in reminiscence

key = hash(textual content)

vocabulary_storage[key] = VocabularyResponse(**consequence)

return vocabulary_storage[key]This submit methodology, could have principally the identical because the earlier POST methodology besides it is going to use Query Reply Extraction Service.

@app.submit("/extract-question-answer/", response_model=QuestionAnswerResponse)

async def extract_question_answer(textual content: str):

# Validate enter

if not textual content or len(textual content.strip()) < 10:

increase HTTPException(status_code=400, element="Enter textual content is just too quick")

# Extract vocabulary

consequence = await qa_service.extract_questions_and_answers(textual content)

# Retailer consequence for retrieval (utilizing hash of textual content as key for simplicity)

key = hash(textual content)

qa_storage[key] = QuestionAnswerResponse(**consequence)

return qa_storage[key]There are two main GET methodology:

First, the get-vocabulary methodology will test the hash key with the purchasers’ textual content information, if the textual content information is current within the storage the vocabulary will probably be offered as JSON information. This methodology is used to indicate the information on the CLIENT SIDE UI on the internet web page.

@app.get("/get-vocabulary/", response_model=Non-compulsory[VocabularyResponse])

async def get_vocabulary(textual content: str):

"""

Retrieve the vocabulary response for a beforehand processed textual content.

"""

key = hash(textual content)

if key in vocabulary_storage:

return vocabulary_storage[key]

else:

increase HTTPException(

status_code=404, element="Vocabulary consequence not discovered for the supplied textual content"

)Second, the get-question-answer methodology can even test the hash key with the purchasers’ textual content information identical to the earlier methodology, and can produce the JSON response saved within the storage to the CLIENT SIDE UI.

@app.get("/get-question-answer/", response_model=Non-compulsory[QuestionAnswerResponse])

async def get_question_answer(textual content: str):

"""

Retrieve the question-answer response for a beforehand processed textual content.

"""

key = hash(textual content)

if key in qa_storage:

return qa_storage[key]

else:

increase HTTPException(

status_code=404,

element="Query-answer consequence not discovered for the supplied textual content",

)Key Implementation Function

To run the applying, now we have to import the libraries and instantiate a FastAPI service.

Import Libraries

from fastapi import FastAPI, HTTPException

from fastapi.middleware.cors import CORSMiddleware

from typing import Non-compulsory

from .fashions import VocabularyResponse, QuestionAnswerResponse

from .providers import GeminiVocabularyService, QuestionAnswerServiceInstantiate FastAPI Software

# FastAPI Software

app = FastAPI(title="English Educator API")Cross-origin Useful resource Sharing (CORS) Assist

Cross-origin useful resource sharing (CORS) is an HTTP-header-based mechanism that enables a server to point any origins comparable to area, scheme, or port apart from its personal from which a browser ought to allow loading sources. For safety causes, the browser restricts CORS HTTP requests initiated from scripts.

# FastAPI Software

app = FastAPI(title="English Educator API")

# Add CORS middleware

app.add_middleware(

CORSMiddleware,

allow_origins=["*"],

allow_credentials=True,

allow_methods=["*"],

allow_headers=["*"],

)In-memory validation mechanism: Easy Key Phrase Storage

We use easy key-value-based storage for the challenge however you need to use MongoDB.

# Easy key phrase storage

vocabulary_storage = {}

qa_storage = {}Enter Validation mechanisms and Complete error dealing with.

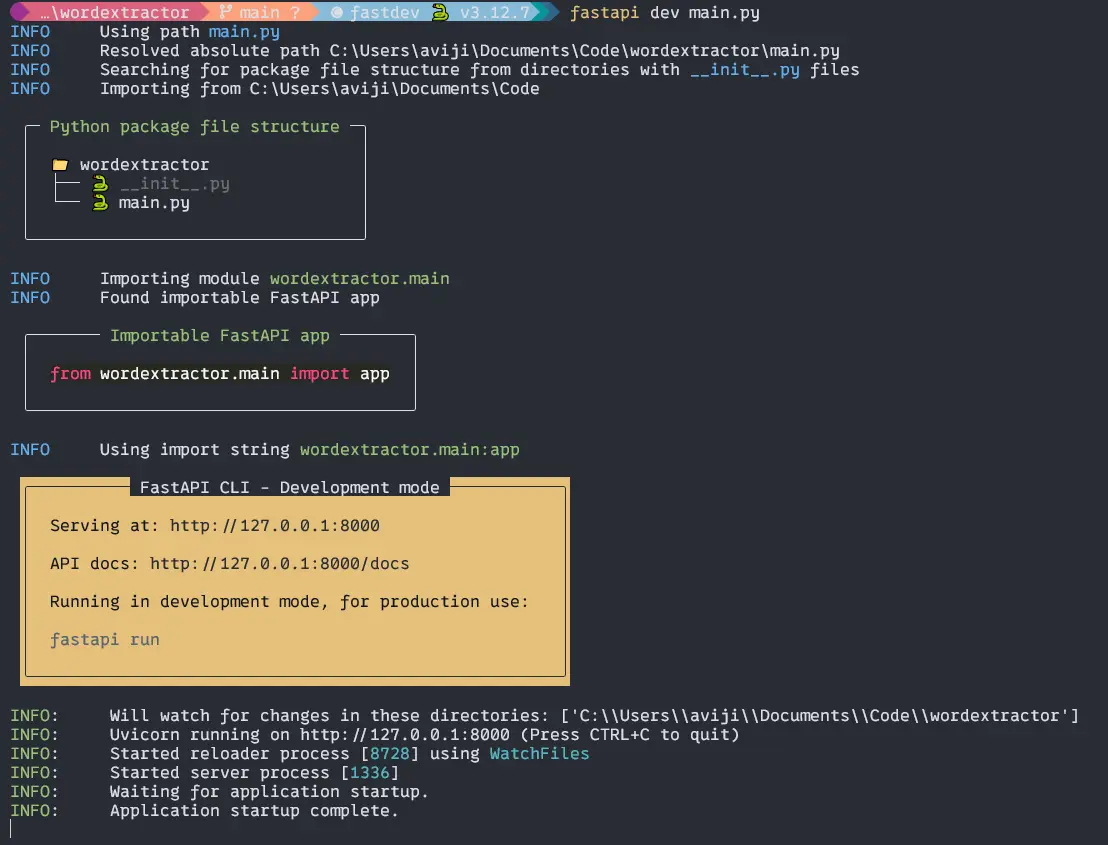

Now’s the time to run the applying.

To run the applying in improvement mode, now we have to make use of FasyAPI CLI which can put in with the FastAPI.

Sort the code to your terminal within the software root.

$ fastapi dev foremost.pyOutput:

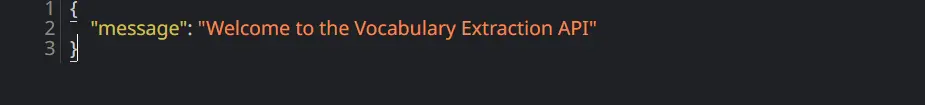

Then in case you CTRL + Proper Click on on the hyperlink http://127.0.0.1:8000 you’re going to get a welcome display on the internet browser.

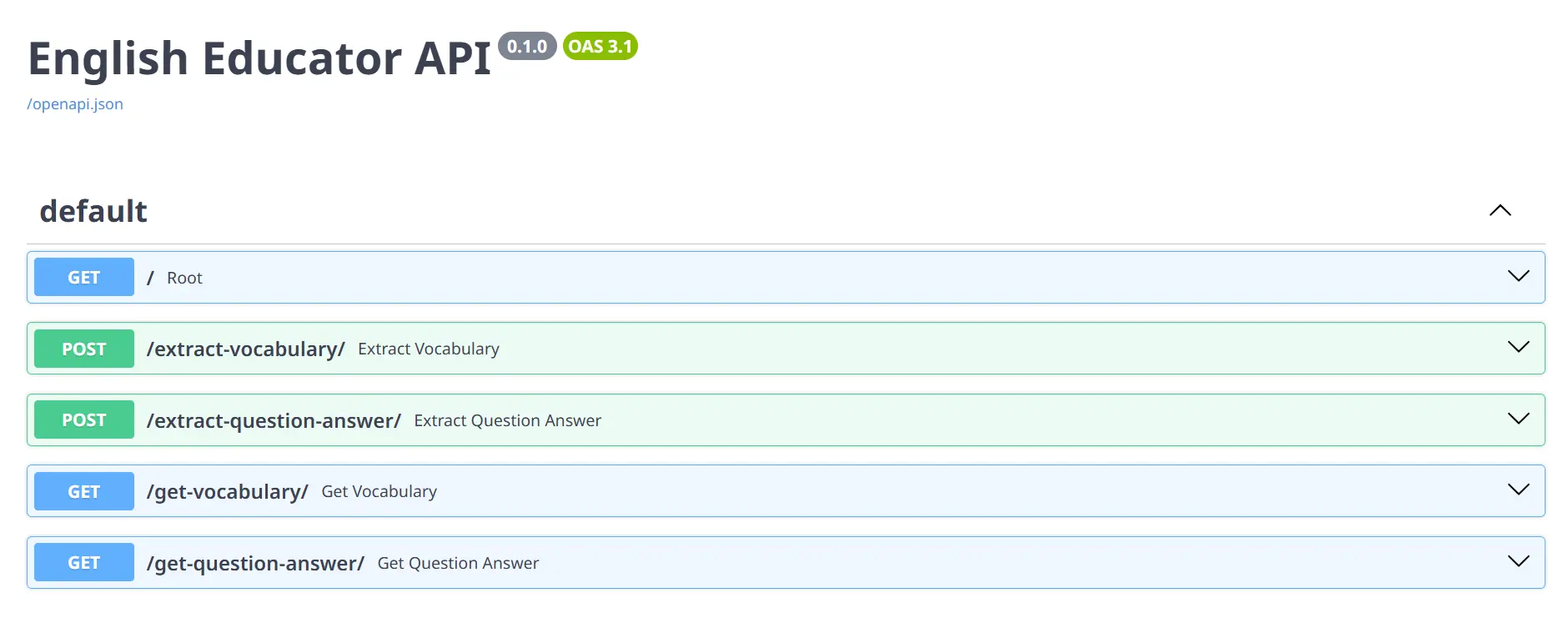

To go to the docs web page of FastAPI simply click on on the following URL or kind http://127.0.0.1:8000/docs in your browser, and you will notice all of the HTTP strategies on the web page for testing.

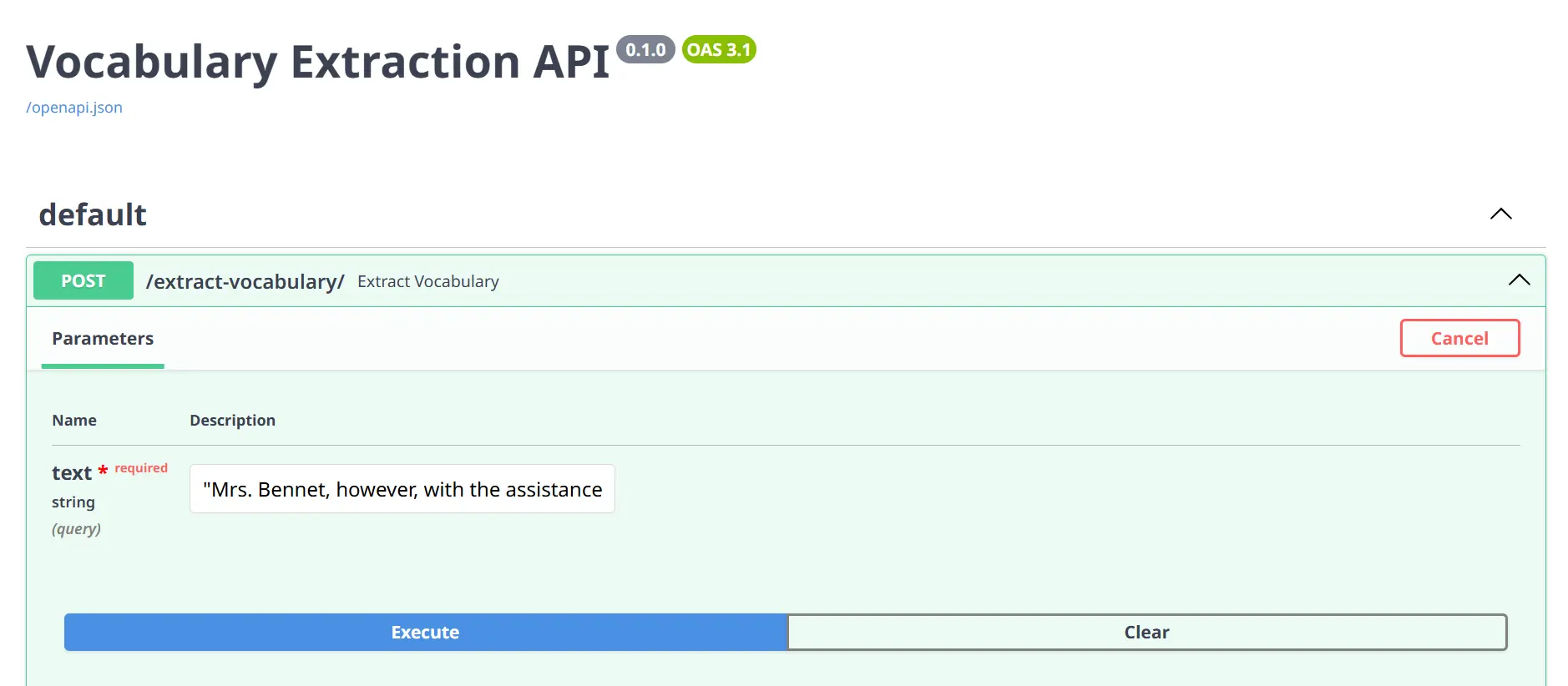

Now to check the API, Click on on any of the POST strategies and TRY IT OUT, put any textual content you need to within the enter area, and execute. You’ll get the response in response to the providers comparable to vocabulary, and query reply.

Execute:

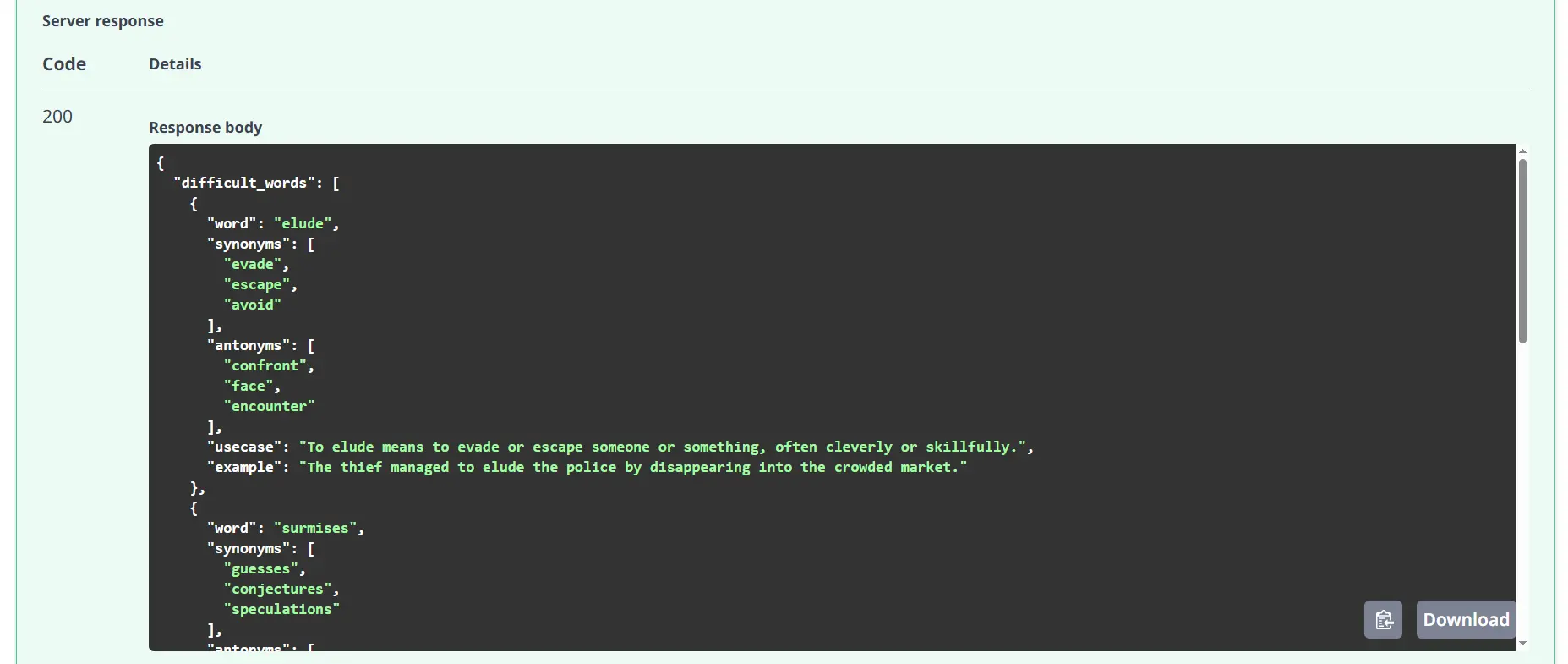

Response:

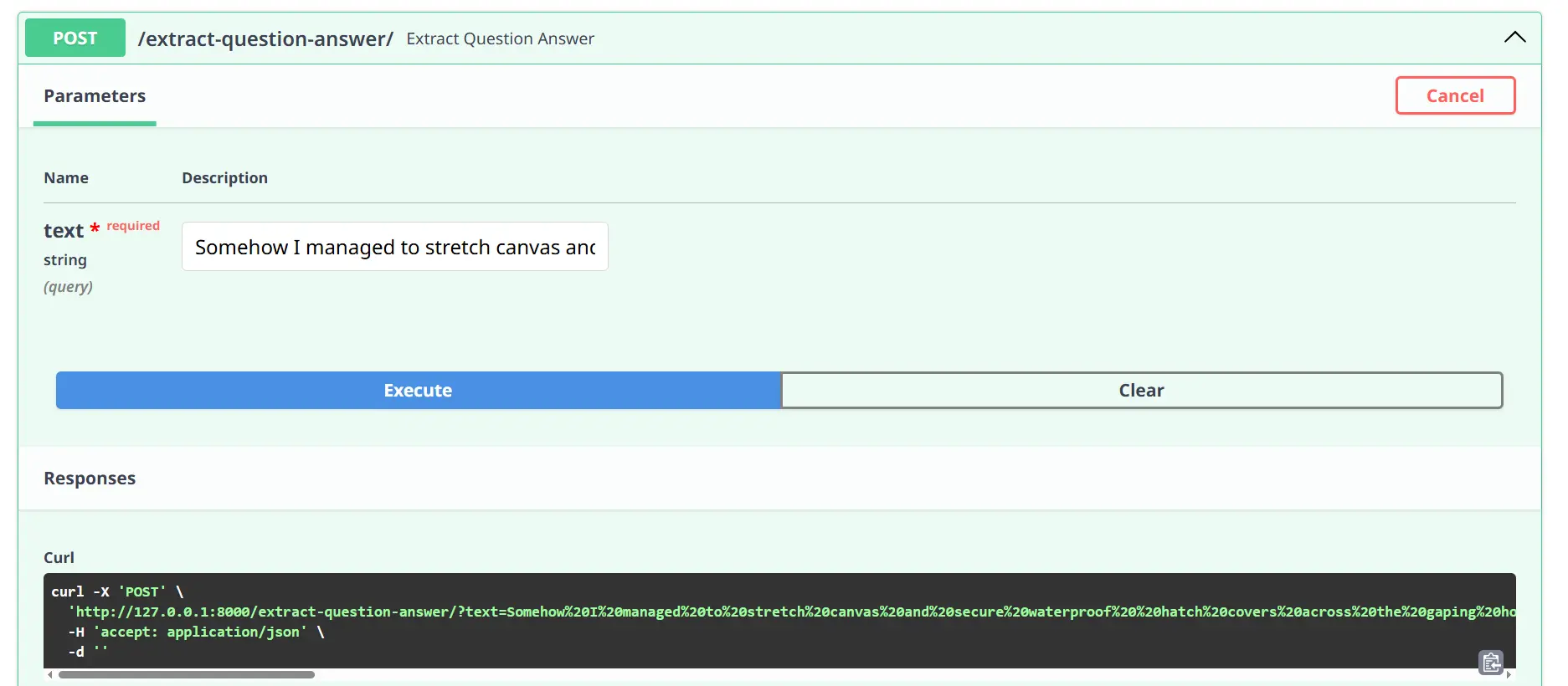

Execute:

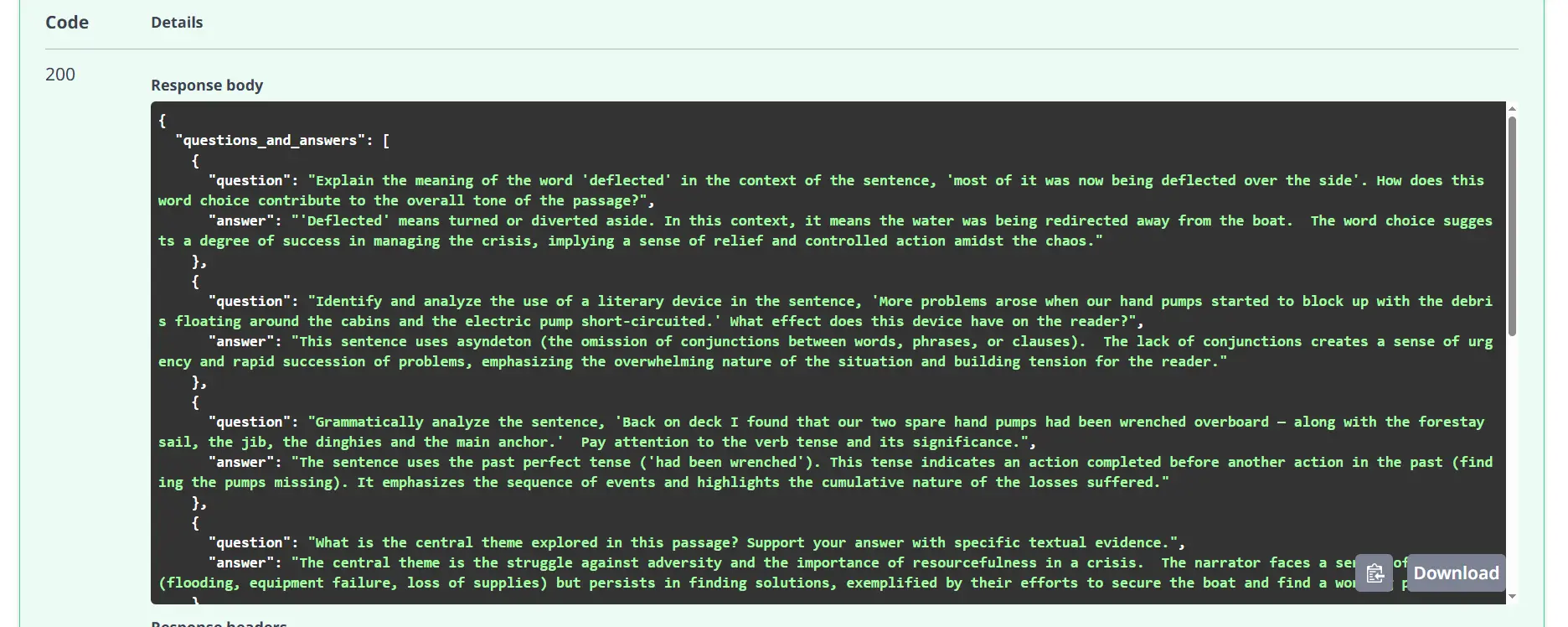

Response:

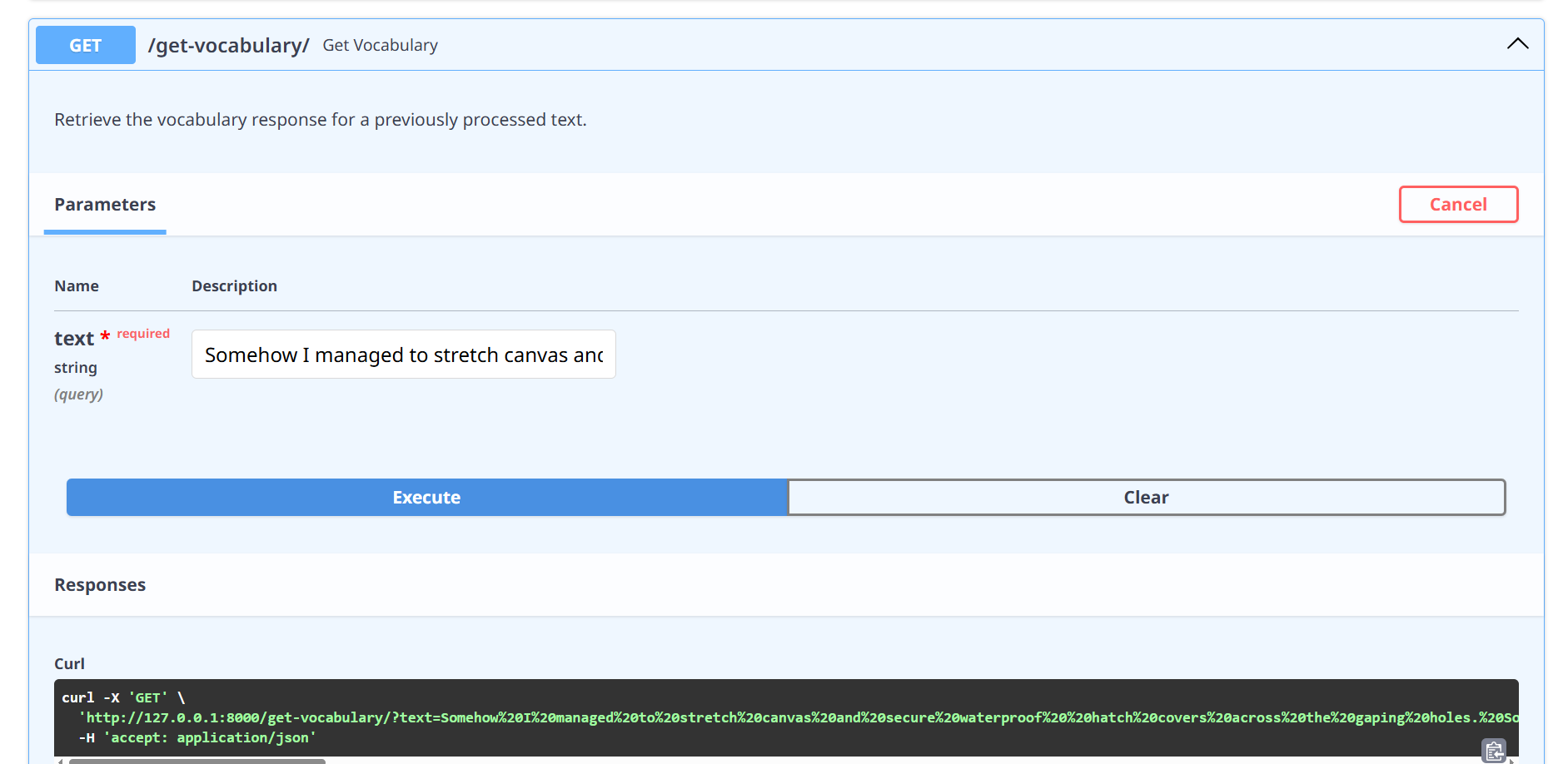

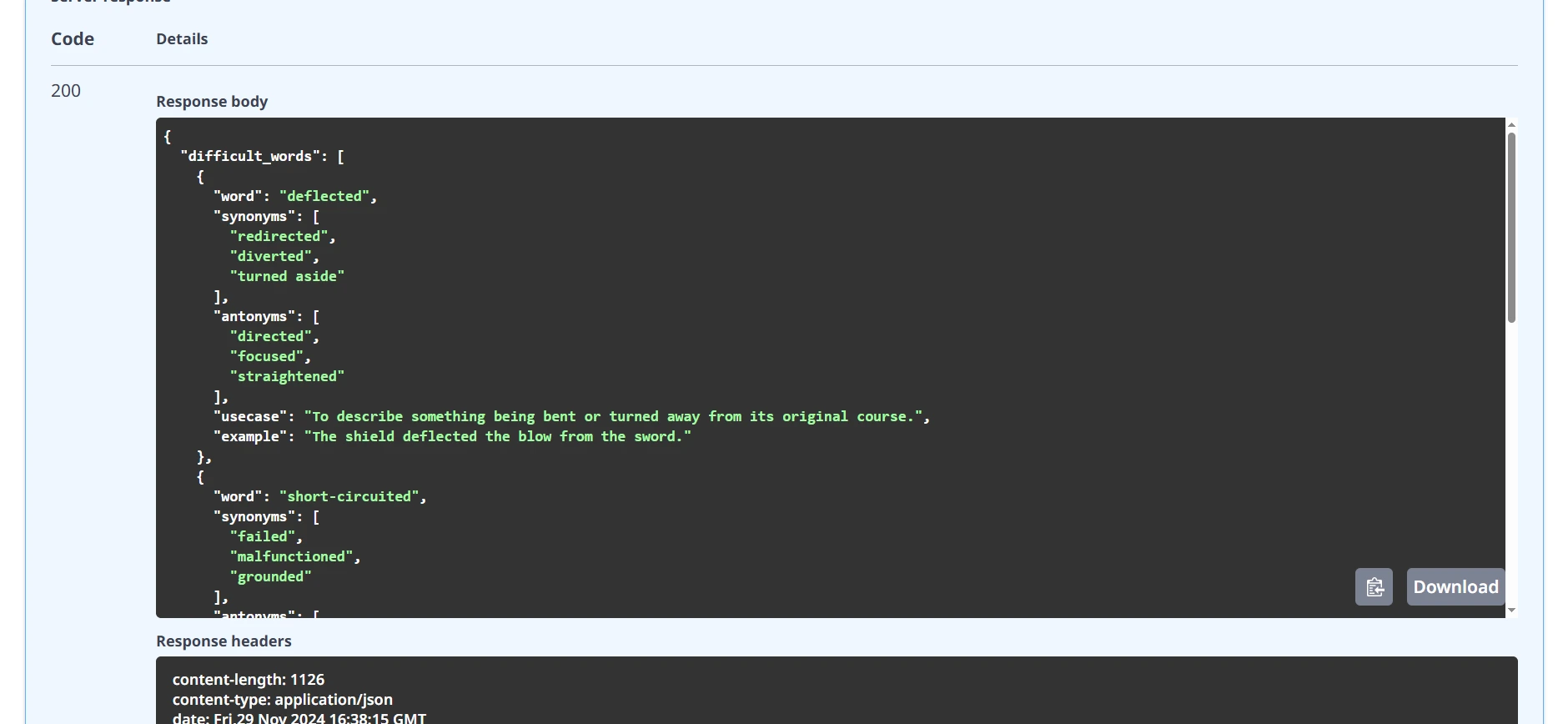

Testing Get Strategies

Get vocabulary from the storage.

Execute:

Put the identical textual content you placed on the POST methodology on the enter area.

Response:

You’ll get the under output from the storage.

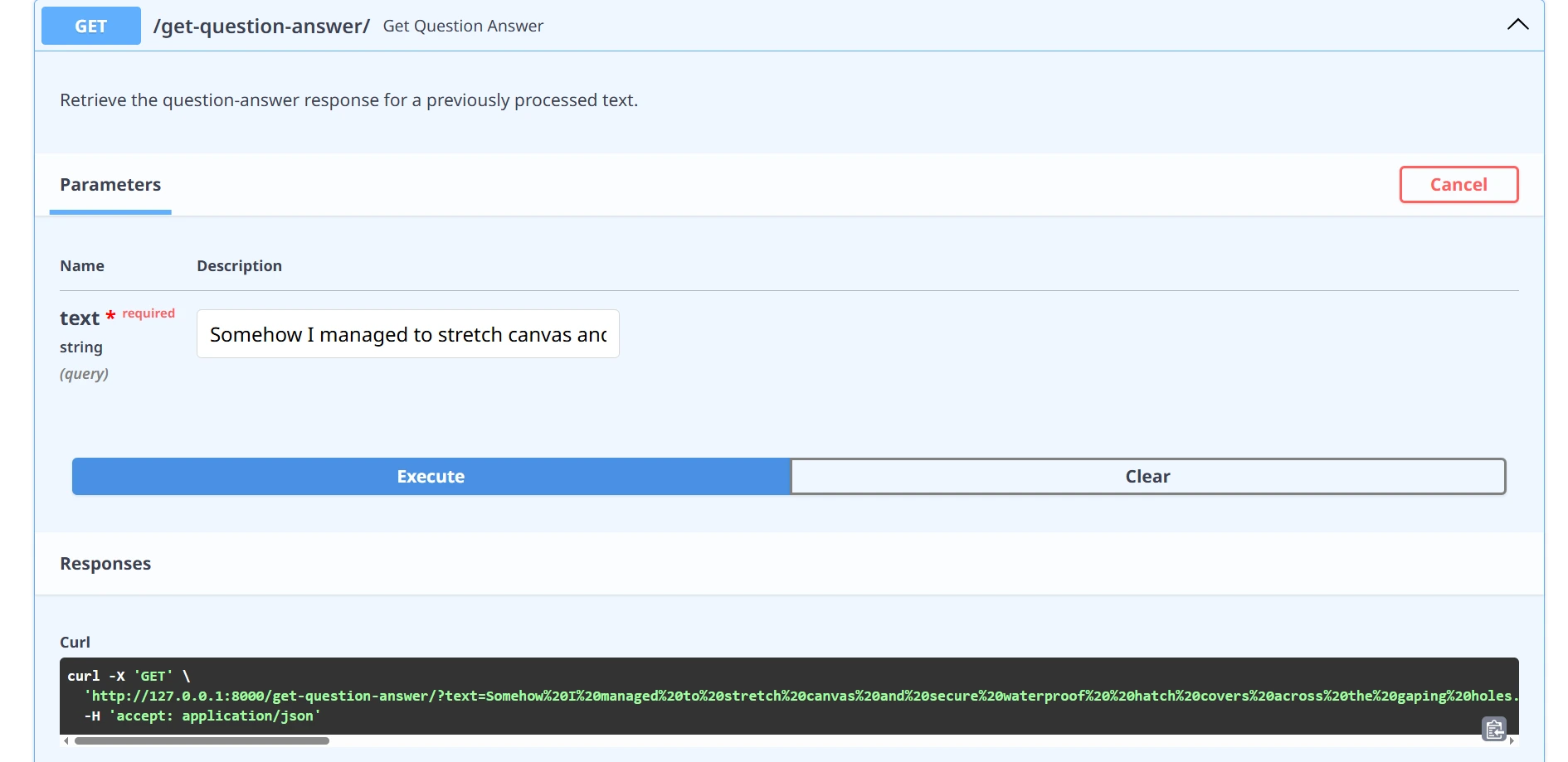

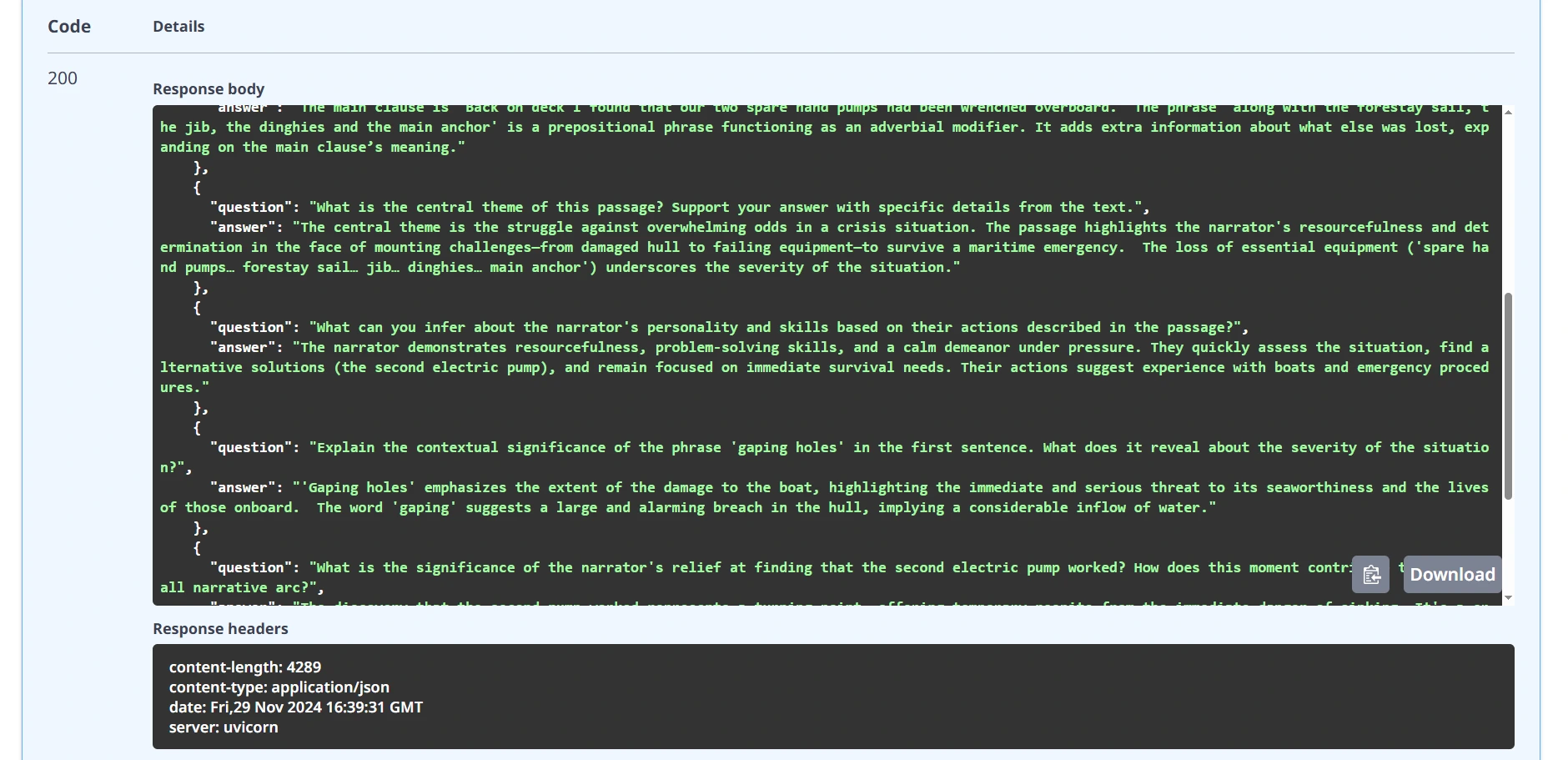

and in addition for question-and-answer

Execute:

Response:

That will probably be totally working internet server API for English educators utilizing Google Gemini AI.

Additional Improvement Alternative

The present implementation opens doorways to thrilling future enhancements:

- Discover persistent storage options to retain information successfully throughout classes.

- Combine sturdy authentication mechanisms for enhanced safety.

- Advance textual content evaluation capabilities with extra subtle options.

- Design and construct an intuitive front-end interface for higher consumer interplay.

- Implement environment friendly fee limiting and caching methods to optimize efficiency.

Sensible Concerns and Limitations

Whereas our API demonstrates highly effective capabilities, you need to take into account:

- Think about API utilization prices and fee limits when planning utilization to keep away from sudden expenses and guarantee scalability.

- Be conscious of processing time for complicated texts, as longer or intricate inputs might lead to slower response occasions.

- Put together for steady mannequin updates from Google, which can affect the API’s efficiency or capabilities over time.

- Perceive that AI-generated responses can differ, so it’s necessary to account for potential inconsistencies in output high quality.

Conclusion

Now we have created a versatile, clever API that transforms textual content evaluation via the synergy of Google Gemini, FastAPI, and Pydantic. This resolution demonstrates how trendy AI applied sciences may be leveraged to extract deep, significant insights from textual information.

You may get all of the code of the challenge within the CODE REPO.

Key Takeaways

- AI-powered APIs can present clever, context-aware textual content evaluation.

- FastAPI simplifies complicated API improvement with automated documentation.

- The English Educator App API empowers builders to create interactive and customized language studying experiences.

- Integrating the English Educator App API can streamline content material supply, bettering each instructional outcomes and consumer engagement.

Incessantly Requested Query

A. The present model makes use of environment-based API key administration and contains elementary enter validation. For manufacturing, further safety layers are advisable.

A. At all times evaluation Google Gemini’s present phrases of service and licensing for business implementations.

A. Efficiency will depend on Gemini API response occasions, enter complexity, and your particular processing necessities.

A. The English Educator App API supplies instruments for educators to create customized language studying experiences, providing options like vocabulary extraction, pronunciation suggestions, and superior textual content evaluation.

The media proven on this article is just not owned by Analytics Vidhya and is used on the Writer’s discretion.