In the fast-evolving world of AI, large language models are pushing boundaries in speed, accuracy, and cost-efficiency. The recent release of Deepseek R1, an open-source model rivaling OpenAI's o1, is a hot topic in the AI space, especially given its 27x lower cost and advanced reasoning capabilities. Pair this with Qdrant's binary quantization for efficient and fast vector searches, and we can index documents of over 1,000 pages. In this article, we'll create a Bhagavad Gita AI Assistant capable of indexing 1,000+ pages, answering complex queries in seconds using Groq, and delivering insights with domain-specific precision.

Learning Objectives

- Implement binary quantization in Qdrant for memory-efficient vector indexing.

- Understand how to build a Bhagavad Gita AI Assistant using Deepseek R1, Qdrant, and LlamaIndex for efficient text retrieval.

- Learn to optimize the Bhagavad Gita AI Assistant with Groq for fast, domain-specific query responses and large-scale document indexing.

- Build a RAG pipeline using LlamaIndex and FastEmbed local embeddings to process 1,000+ pages of the Bhagavad Gita.

- Integrate Deepseek R1 through Groq's inference for real-time, low-latency responses.

- Develop a Streamlit UI to showcase AI-powered insights with thinking transparency.

This article was published as a part of the Data Science Blogathon.

Deepseek R1 vs OpenAI o1

Deepseek R1 challenges OpenAI's dominance with 27x lower API costs and near-par performance on reasoning benchmarks. Unlike OpenAI's closed, subscription-based o1 ($200/month), Deepseek R1 is free, open-source, and ideal for budget-conscious projects and experimentation.

Reasoning (ARC-AGI benchmark) [Source: ARC-AGI Deepseek]:

- Deepseek: 20.5% accuracy (public), 15.8% (semi-private).

- OpenAI: 21% accuracy (public), 18% (semi-private).

From my experience so far, Deepseek does a great job with math reasoning, coding-related use cases, and context-aware prompts. However, OpenAI retains an edge in general knowledge breadth, making it preferable for fact-diverse applications.

What is Binary Quantization in Vector Databases?

Binary quantization (BQ) is Qdrant's indexing compression technique for optimizing high-dimensional vector storage and retrieval. By converting 32-bit floating-point vectors into 1-bit binary values, it cuts the memory needed for the vectors by 32x and accelerates search dramatically.

How It Works

- Binarization: Vectors are simplified to 0s and 1s based on a threshold (e.g., values >0 become 1).

- Efficient Indexing: Qdrant's HNSW algorithm uses these binary vectors for fast approximate nearest neighbor (ANN) searches.

- Oversampling: To balance speed and accuracy, BQ retrieves extra candidates (e.g., 200 for a limit of 100) and re-ranks them using the original vectors.
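To make the three steps concrete, here is a NumPy sketch of the idea, not Qdrant's internal implementation: components greater than 0 become 1, every document is ranked by Hamming distance on the packed bits, and only the oversampled shortlist is rescored with cosine similarity on the original float vectors. The function names, the random data, and the oversampling factor of 2 are illustrative assumptions.

```python
import numpy as np


def binarize(vectors: np.ndarray) -> np.ndarray:
    """Map each component to 1 if it is > 0, else 0, packing 8 bits per byte."""
    return np.packbits(vectors > 0, axis=-1)


def hamming_distances(query_bits: np.ndarray, doc_bits: np.ndarray) -> np.ndarray:
    """Count differing bits between the packed query and each packed document."""
    xor = np.bitwise_xor(doc_bits, query_bits)
    return np.unpackbits(xor, axis=-1).sum(axis=-1)


def search_with_oversampling(query, docs, limit=3, oversampling=2.0):
    """Coarse search on 1-bit codes, then rescore the shortlist with original floats."""
    n_candidates = min(len(docs), int(limit * oversampling))
    # 1) Cheap pass: rank all documents by Hamming distance on the binary codes.
    coarse = hamming_distances(binarize(query), binarize(docs))
    candidates = np.argsort(coarse, kind="stable")[:n_candidates]
    # 2) Rescore: cosine similarity on the original float vectors, candidates only.
    cand = docs[candidates]
    scores = cand @ query / (np.linalg.norm(cand, axis=1) * np.linalg.norm(query))
    order = np.argsort(-scores, kind="stable")[:limit]
    return candidates[order], scores[order]


# Usage: the query is a lightly perturbed copy of document 42.
rng = np.random.default_rng(0)
docs = rng.normal(size=(1000, 1024)).astype(np.float32)
query = docs[42] + 0.05 * rng.normal(size=1024).astype(np.float32)
ids, scores = search_with_oversampling(query, docs, limit=5, oversampling=2.0)
print(ids[0], round(float(scores[0]), 3))
```

The design point is that the expensive float comparison only runs on `limit * oversampling` candidates, while the rescore step recovers much of the precision lost to binarization.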

Why It Matters

- Storage: A 1536-dimension OpenAI vector shrinks from 6KB to 0.1875 KB.

- Speed: Boolean operations on 1-bit vectors execute faster, reducing latency.

- Scalability: Ideal for large datasets (1M+ vectors) with minimal recall tradeoffs.
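The storage figure follows from simple arithmetic on raw vector bytes. This sketch ignores the HNSW graph, payloads, and other per-point overhead, which the figures in the list also leave out; `vector_bytes` is a hypothetical helper.

```python
def vector_bytes(dim: int, bits_per_component: int) -> float:
    """Raw storage for one vector, ignoring index and payload overhead."""
    return dim * bits_per_component / 8


full = vector_bytes(1536, 32)   # float32 components
binary = vector_bytes(1536, 1)  # 1 bit per component after binarization
print(f"float32: {full / 1024} KB, binary: {binary / 1024} KB, ratio: {full / binary:.0f}x")
# prints: float32: 6.0 KB, binary: 0.1875 KB, ratio: 32x
```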

Avoid binary quantization for low-dimension vectors (<1024), where information loss significantly impacts accuracy. Traditional scalar quantization (e.g., uint8) may suit smaller embeddings better.
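For comparison, here is a minimal NumPy sketch of 8-bit scalar quantization, assuming a simple global min/max mapping onto 0..255. Qdrant's built-in scalar quantization is configured through its own options and may differ in detail. Each component keeps 256 levels instead of 2, at the cost of 4x compression instead of 32x.

```python
import numpy as np


def scalar_quantize(vectors: np.ndarray):
    """Linearly map float values onto 0..255 using the global min/max range."""
    lo, hi = float(vectors.min()), float(vectors.max())
    scale = (hi - lo) / 255 or 1.0  # avoid division by zero for constant input
    codes = np.round((vectors - lo) / scale).astype(np.uint8)
    return codes, lo, scale


def dequantize(codes: np.ndarray, lo: float, scale: float) -> np.ndarray:
    """Approximate reconstruction of the original floats from uint8 codes."""
    return codes.astype(np.float32) * scale + lo


# Usage on small (384-dimensional) random embeddings.
rng = np.random.default_rng(1)
vecs = rng.normal(size=(100, 384)).astype(np.float32)
codes, lo, scale = scalar_quantize(vecs)
max_err = float(np.abs(dequantize(codes, lo, scale) - vecs).max())
print(codes.dtype, codes.nbytes / vecs.nbytes, max_err <= scale / 2 + 1e-4)
```

Rounding to the nearest level bounds the per-component reconstruction error by half a quantization step, which is why smaller embeddings tend to tolerate uint8 better than 1-bit codes.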

Building the Bhagavad Gita Assistant

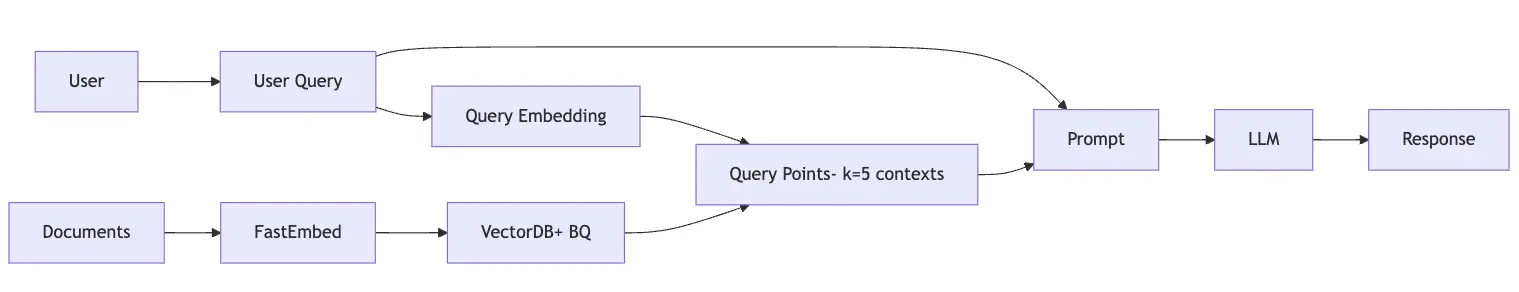

The flow chart above shows how we will build the Bhagavad Gita Assistant.

Architecture Overview

- Data Ingestion: 900-page Bhagavad Gita PDF split into text chunks.

- Embedding: Qdrant FastEmbed's text-to-vector embedding model.

- Vector DB: Qdrant with BQ stores embeddings, enabling millisecond searches.

- LLM Inference: Deepseek R1 via Groq LPUs generates context-aware responses.

- UI: Streamlit app with an expandable "thinking process" view.

Step-by-Step Implementation

Let us now follow the steps one by one:

Step 1: Installation and Initial Setup

Let's set up the foundation of our RAG pipeline using LlamaIndex. We need to install essential packages, including the core LlamaIndex library, the Qdrant vector store integration, FastEmbed for embeddings, and Groq for LLM access.

Note:

- For document indexing, we will use a GPU from Colab to store the data. This is a one-time process.

- Once the data is stored, we can use the collection name to run inference anywhere, whether in VS Code, Streamlit, or other platforms.

```
!pip install llama-index
!pip install llama-index-vector-stores-qdrant llama-index-embeddings-fastembed
!pip install llama-index-readers-file
!pip install llama-index-llms-groq
```

Once the installation is done, let's import the required modules.

```python
import logging
import sys
import os

import qdrant_client
from qdrant_client import models

from llama_index.core import SimpleDirectoryReader
from llama_index.embeddings.fastembed import FastEmbedEmbedding
from llama_index.llms.groq import Groq  # Deepseek R1 inference served by Groq
```

Step 2: Document Processing and Embedding

Here, we handle the crucial task of converting raw text into vector representations. The SimpleDirectoryReader loads documents from a specified folder.

Create a folder, i.e., a data directory, and add all your documents inside it. In our case, we downloaded the Bhagavad Gita document and saved it in the data folder.

You can download the ~900-page Bhagavad Gita document here: iskconmangaluru

```python
data = SimpleDirectoryReader("data").load_data()
texts = [doc.text for doc in data]

embeddings = []
BATCH_SIZE = 50
```

Qdrant's FastEmbed is a lightweight, fast Python library designed for efficient embedding generation. It supports popular text models and uses quantized model weights along with the ONNX Runtime for inference, ensuring high performance without heavy dependencies.

To convert the text chunks into embeddings, we will use Qdrant's FastEmbed. We process them in batches of 50 documents to manage memory efficiently.
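The embedding loop relies on plain list slicing. Isolated, the batching pattern looks like the sketch below; `batched` is a hypothetical helper name, not part of LlamaIndex or FastEmbed (Python 3.12's `itertools.batched` offers a similar generator that yields tuples).

```python
from typing import Iterator, List, Sequence, TypeVar

T = TypeVar("T")


def batched(items: Sequence[T], batch_size: int) -> Iterator[List[T]]:
    """Yield consecutive slices of at most batch_size items; the last may be shorter."""
    if batch_size < 1:
        raise ValueError("batch_size must be >= 1")
    for start in range(0, len(items), batch_size):
        yield list(items[start:start + batch_size])


# 120 pages in batches of 50 -> two full batches and one partial batch.
print([len(batch) for batch in batched(list(range(120)), 50)])  # [50, 50, 20]
```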

```python
embed_model = FastEmbedEmbedding(model_name="thenlper/gte-large")

for page in range(0, len(texts), BATCH_SIZE):
    page_content = texts[page:page + BATCH_SIZE]
    response = embed_model.get_text_embedding_batch(page_content)
    embeddings.extend(response)
```

Step 3: Qdrant Setup with Binary Quantization

Time to configure the Qdrant client, our vector database, with optimized settings for performance. We create a collection named "bhagavad-gita" with specific vector parameters and enable binary quantization for efficient storage and retrieval.

There are three ways to use the Qdrant client:

- In-Memory Mode: Using location=":memory:", which creates a temporary instance whose data is lost when the session ends.

- Localhost: Using location="localhost", which requires running a Docker instance. You can follow the setup guide here: Qdrant Quickstart.

- Cloud Storage: Storing collections in the cloud. To do this, create a new cluster, provide a cluster name, and generate an API key. Copy the key and retrieve the URL from the curl command.

Note that the collection name must be unique; after every data change, it should be changed as well.

```python
collection_name = "bhagavad-gita"

client = qdrant_client.QdrantClient(
    # location=":memory:",
    url="QDRANT_URL",  # replace QDRANT_URL with your endpoint
    api_key="QDRANT_API_KEY",  # replace QDRANT_API_KEY with your API key
    prefer_grpc=True,
)
```

We first check whether a collection with the specified collection_name exists in Qdrant. Only if it does not do we create a new collection configured to store 1,024-dimensional vectors and use cosine similarity for distance measurement.

We enable on-disk storage for the original vectors and apply binary quantization, which compresses the vectors to reduce memory usage and improve search speed. The always_ram parameter ensures that the quantized vectors are kept in RAM for faster access.

```python
if not client.collection_exists(collection_name=collection_name):
    client.create_collection(
        collection_name=collection_name,
        vectors_config=models.VectorParams(
            size=1024,  # gte-large produces 1024-dimensional embeddings
            distance=models.Distance.COSINE,
            on_disk=True,  # original float vectors live on disk
        ),
        quantization_config=models.BinaryQuantization(
            binary=models.BinaryQuantizationConfig(
                always_ram=True,  # keep the 1-bit vectors in RAM
            ),
        ),
    )
else:
    print("Collection already exists")
```

Step 4: Index the Documents

The indexing process uploads our processed documents and their embeddings to Qdrant in batches. Each document is stored alongside its vector representation, creating a searchable knowledge base.

The GPU will be used at this stage, and depending on the data size, this step may take a few minutes.

```python
for idx in range(0, len(texts), BATCH_SIZE):
    docs = texts[idx:idx + BATCH_SIZE]
    embeds = embeddings[idx:idx + BATCH_SIZE]

    client.upload_collection(
        collection_name=collection_name,
        vectors=embeds,
        payload=[{"context": context} for context in docs],
    )

client.update_collection(
    collection_name=collection_name,
    optimizer_config=models.OptimizersConfigDiff(indexing_threshold=20000),
)
```

Step 5: RAG Pipeline with Deepseek R1

Process 1: R - Retrieve relevant documents

The search function takes a user query, converts it to an embedding, and retrieves the most relevant documents from Qdrant based on cosine similarity. We demonstrate this with a sample query about the Bhagavad-gītā, showing how to access and print the retrieved context.
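Conceptually, query_points ranks stored vectors by cosine similarity to the query embedding. Qdrant does this approximately through its HNSW index (and, with binary quantization, using the quantized vectors); an exact brute-force version in NumPy is a useful mental model and a handy sanity check on small data. `cosine_top_k` is a hypothetical helper, not part of the Qdrant client.

```python
import numpy as np


def cosine_top_k(query_vec: np.ndarray, doc_vecs: np.ndarray, k: int = 5):
    """Return indices and cosine scores of the k most similar documents, best first."""
    doc_norms = np.linalg.norm(doc_vecs, axis=1)
    scores = doc_vecs @ query_vec / (doc_norms * np.linalg.norm(query_vec))
    top = np.argsort(-scores, kind="stable")[:k]
    return top, scores[top]


docs = np.array([[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]])
query = np.array([1.0, 0.1])
top, scores = cosine_top_k(query, docs, k=3)
print(top)  # [0 2 1]
```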

```python
def search(query, k=5):
    # query = user prompt
    query_embedding = embed_model.get_query_embedding(query)
    result = client.query_points(
        collection_name=collection_name,
        query=query_embedding,
        limit=k,
    )
    return result


relevant_docs = search("In Bhagavad-gītā who is the person devoted to?")
print(relevant_docs.points[4].payload['context'])
```

Process 2: A - Augmenting the prompt

For RAG, it's important to define the system's interaction template using ChatPromptTemplate. The template creates a specialized assistant knowledgeable in the Bhagavad-gita, capable of understanding multiple languages (English, Hindi, Sanskrit).

It includes structured formatting for context injection and query handling, with clear instructions for handling out-of-context questions.
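When format is called, ChatPromptTemplate substitutes the retrieved context and the user query into the message templates. The augmentation step is essentially string substitution; the standard-library sketch below mirrors that idea without reproducing LlamaIndex's internals (`USER_TEMPLATE` and `augment` are hypothetical names).

```python
USER_TEMPLATE = (
    "We have provided context information below.\n"
    "{context_str}\n"
    "---------------------\n"
    "Given this information, please answer the question: {query}\n"
)


def augment(query: str, retrieved_chunks: list[str]) -> str:
    """Join the retrieved chunks and substitute them, plus the query, into the template."""
    context_str = "\n".join(retrieved_chunks)
    return USER_TEMPLATE.format(context_str=context_str, query=query)


print(augment("Who is Arjuna?", ["chunk A", "chunk B"]))
```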

```python
from llama_index.core import ChatPromptTemplate
from llama_index.core.llms import ChatMessage, MessageRole

message_templates = [
    ChatMessage(
        content="""
        You are an expert ancient assistant who is well versed in Bhagavad-gita.
        You are Multilingual, you understand English, Hindi and Sanskrit.

        Always structure your response in this format:
        <think>
        [Your step-by-step thinking process here]
        </think>

        [Your final answer here]
        """,
        role=MessageRole.SYSTEM,
    ),
    ChatMessage(
        content="""
        We have provided context information below.
        {context_str}
        ---------------------
        Given this information, please answer the question: {query}
        ---------------------
        If the question is not from the provided context, say `I don't know. Not enough information received.`
        """,
        role=MessageRole.USER,
    ),
]
```

Process 3: G - Generating the response

The final pipeline brings everything together in a cohesive RAG system. It follows the Retrieve-Augment-Generate pattern: retrieving relevant documents, augmenting them with our specialized prompt template, and generating responses using the LLM. For the LLM, we will use Deepseek R1 Distill Llama 70B hosted on Groq; get your keys here: Groq Console.
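Because each stage is just a function call, the Retrieve-Augment-Generate flow can be exercised offline with stubs before wiring in Qdrant and Groq. A minimal sketch, where `rag_pipeline` and the stub functions are hypothetical stand-ins for `search`, the prompt template, and `llm.complete`:

```python
from typing import Callable, List


def rag_pipeline(query: str,
                 retrieve: Callable[[str], List[str]],
                 build_prompt: Callable[[str, str], str],
                 generate: Callable[[str], str]) -> str:
    """Retrieve -> Augment -> Generate, with each stage injected as a function."""
    chunks = retrieve(query)                         # R: fetch relevant passages
    prompt = build_prompt("\n".join(chunks), query)  # A: insert them into the prompt
    return generate(prompt)                          # G: call the (stub) LLM


def fake_retrieve(query: str) -> List[str]:
    return ["Chapter 1 text", "Chapter 2 text"]


def fake_prompt(context: str, query: str) -> str:
    return f"Context:\n{context}\nQuestion: {query}"


def fake_llm(prompt: str) -> str:
    return f"<think>read {prompt.count('Chapter')} chunks</think> answer"


result = rag_pipeline("Who is Arjuna?", fake_retrieve, fake_prompt, fake_llm)
print(result)  # <think>read 2 chunks</think> answer
```

Injecting the stages keeps the orchestration testable; swapping the stubs for the real search function and Groq client does not change the flow.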

```python
os.environ['GROQ_API_KEY'] = "GROQ_API_KEY"  # replace with your key

llm = Groq(model="deepseek-r1-distill-llama-70b")


def pipeline(query):
    # R - Retriever
    relevant_documents = search(query)
    context = [doc.payload['context'] for doc in relevant_documents.points]
    context = "\n".join(context)

    # A - Augment
    chat_template = ChatPromptTemplate(message_templates=message_templates)

    # G - Generate
    response = llm.complete(
        chat_template.format(
            context_str=context,
            query=query)
    )
    return response
```

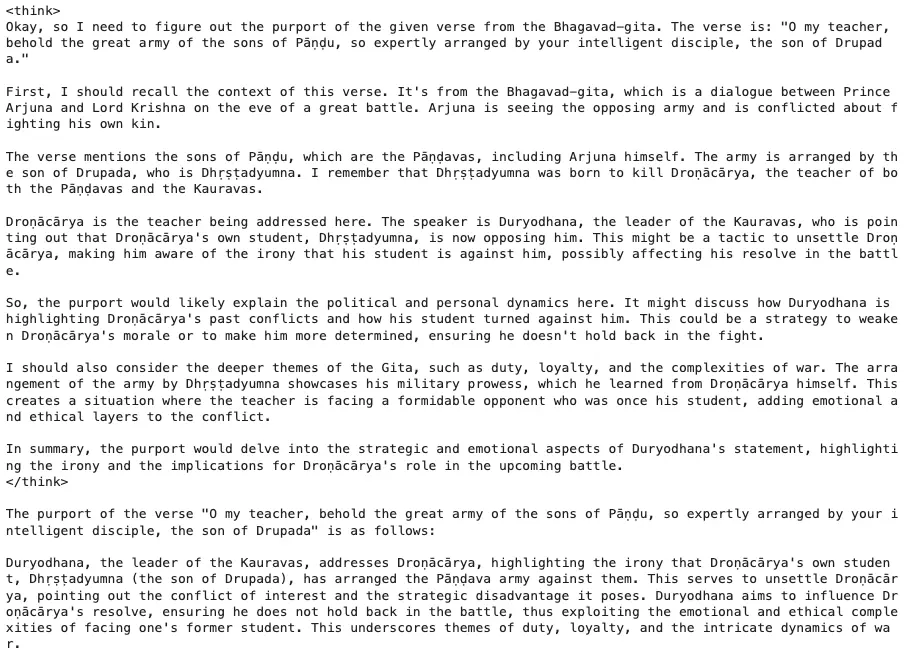

```python
print(pipeline("""what is the PURPORT of O my teacher, behold the great army of the sons of Pāṇḍu, so
expertly arranged by your intelligent disciple, the son of Drupada."""))
```

Output (format: `<think> reasoning </think> response`):

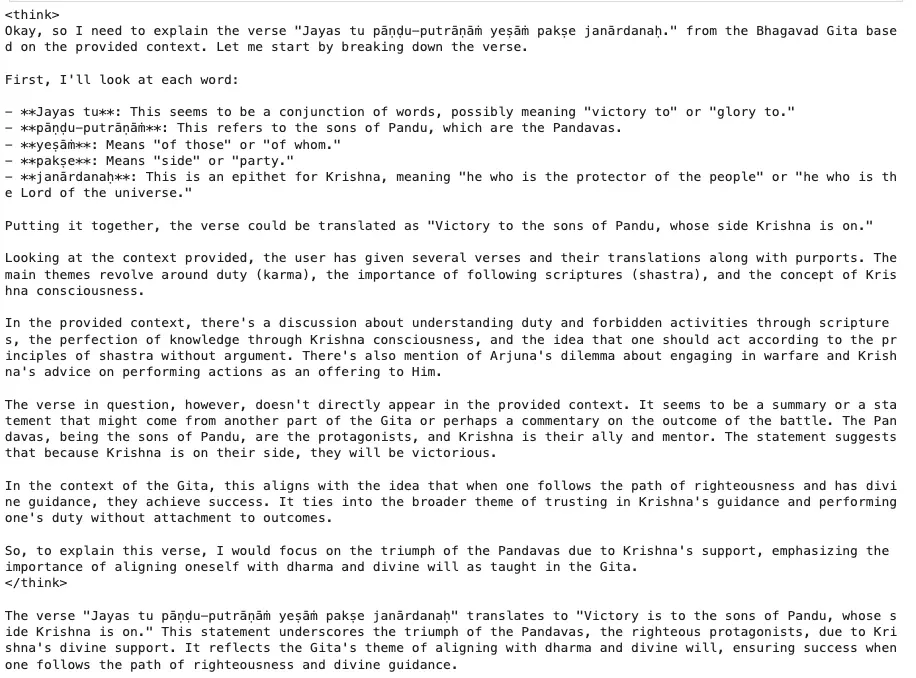

```python
print(pipeline("""
Jayas tu pāṇḍu-putrāṇāṁ yeṣāṁ pakṣe janārdanaḥ.
explain this gita from translation
"""))
```

Now, what if you need to use this application again? Do we have to go through all the steps again?

The answer is no.

Step 6: Stored Index Inference

There is not much difference from what you've already written. We will reuse the same search and pipeline functions, along with the collection name needed to run query_points.

```python
client = qdrant_client.QdrantClient(
    url="QDRANT_URL",
    api_key="QDRANT_API_KEY",
    prefer_grpc=True,
)


# Same logic as before; the client and models are now passed in explicitly.
def search(query, client, embed_model, k=5):
    collection_name = "bhagavad-gita"
    query_embedding = embed_model.get_query_embedding(query)
    result = client.query_points(
        collection_name=collection_name,
        query=query_embedding,
        limit=k,
    )
    return result


def pipeline(query, embed_model, llm, client):
    # R - Retriever
    relevant_documents = search(query, client, embed_model)
    context = [doc.payload['context'] for doc in relevant_documents.points]
    context = "\n".join(context)

    # A - Augment
    chat_template = ChatPromptTemplate(message_templates=message_templates)

    # G - Generate
    response = llm.complete(
        chat_template.format(
            context_str=context,
            query=query)
    )
    return response
```

We will use the same two functions above, along with message_templates, in the Streamlit app.py.

Step 7: Streamlit UI

In Streamlit, the whole script reruns after every user question. To avoid re-initializing everything on each rerun, we define the initialization steps under Streamlit's cache_resource.

Keep in mind that when the user enters a question, FastEmbed will download the model weights only once; the same goes for the Groq and Qdrant instantiation.
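The effect of st.cache_resource can be approximated with `functools.cache` from the standard library: the decorated initializer runs once, and later calls return the same object. This is only an analogy; Streamlit's cache is also shared across user sessions and has its own invalidation options. `initialize_models_stub` is a hypothetical stand-in for initialize_models so the example runs without downloading models.

```python
import functools

load_count = 0


@functools.cache
def initialize_models_stub():
    """Stand-in for an expensive initializer (model download, client setup)."""
    global load_count
    load_count += 1
    return {"embed_model": "loaded", "llm": "loaded", "client": "connected"}


for _ in range(3):  # simulate three Streamlit reruns
    resources = initialize_models_stub()

print(load_count)  # 1
```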

```python
import os
from time import sleep

import streamlit as st
import qdrant_client
from qdrant_client import models
from llama_index.core import ChatPromptTemplate
from llama_index.core.llms import ChatMessage, MessageRole
from llama_index.embeddings.fastembed import FastEmbedEmbedding
from llama_index.llms.groq import Groq
from dotenv import load_dotenv

load_dotenv()  # reads QDRANT_URL, QDRANT_API_KEY and GROQ_API_KEY from .env


@st.cache_resource
def initialize_models():
    embed_model = FastEmbedEmbedding(model_name="thenlper/gte-large")
    llm = Groq(model="deepseek-r1-distill-llama-70b")
    client = qdrant_client.QdrantClient(
        url=os.getenv("QDRANT_URL"),
        api_key=os.getenv("QDRANT_API_KEY"),
        prefer_grpc=True,
    )
    return embed_model, llm, client


st.title("🕉️ Bhagavad Gita Assistant")

# This runs only once; the result is stored in the cache
embed_model, llm, client = initialize_models()
```

If you noticed the response output, the format is `<think> reasoning </think> response`.

In the UI, I want to keep the reasoning inside a Streamlit expander. To retrieve the reasoning part, let's use string indexing to extract the reasoning and the actual response.
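Index arithmetic works when both tags are present, but `str.find` returns -1 rather than raising when a tag is missing. A regex is an alternative that handles that case explicitly. This sketch assumes the `<think>...</think>` tags that Deepseek R1 models place around their reasoning; `split_think` is a hypothetical name.

```python
import re

THINK_RE = re.compile(r"<think>(.*?)</think>(.*)", re.DOTALL)


def split_think(response_text: str) -> tuple[str, str]:
    """Return (thinking, answer); if no <think> block is present, thinking is empty."""
    match = THINK_RE.search(response_text)
    if match is None:
        return "", response_text.strip()
    return match.group(1).strip(), match.group(2).strip()


print(split_think("<think>\nstep 1\n</think>\n\nFinal answer."))  # ('step 1', 'Final answer.')
```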

```python
def extract_thinking_and_answer(response_text):
    """Extract thinking process and final answer from response"""
    start = response_text.find("<think>")
    end = response_text.find("</think>")
    # str.find returns -1 instead of raising, so check for missing tags explicitly
    if start == -1 or end == -1:
        return "", response_text.strip()
    thinking = response_text[start + len("<think>"):end].strip()
    answer = response_text[end + len("</think>"):].strip()
    return thinking, answer
```

Chatbot Component

The main function initializes a message history in Streamlit's session state. A "Clear Chat" button in the sidebar lets users reset this history.

It then iterates through the stored messages and displays them in a chat-like interface. For assistant responses, it separates the thinking process (shown in an expandable section) from the actual answer using the extract_thinking_and_answer function.

The remaining code follows the standard pattern for a chatbot component in Streamlit, i.e., input handling that creates an input field for user questions. When a question is submitted, it is displayed and added to the message history. The question is then processed through the RAG pipeline while a loading spinner is shown, and the response is split into the thinking process and the answer.
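The typing effect in the assistant reply is simulated rather than true token streaming: the full answer is already available and is revealed one word at a time. Factored out of Streamlit, the frame generation looks like the sketch below (`typewriter_frames` is a hypothetical name); in the app, each frame would be passed to message_placeholder.markdown with a short sleep between frames.

```python
from typing import Iterator


def typewriter_frames(answer: str, cursor: str = "▌") -> Iterator[str]:
    """Yield progressively longer prefixes of answer, word by word, followed by a cursor."""
    shown = ""
    for word in answer.split():
        shown += word + " "
        yield shown + cursor


for frame in typewriter_frames("Karma yoga explained"):
    print(frame)
```

Because `split()` collapses newlines, intermediate frames lose markdown line breaks, which is why the final render uses the untouched answer text.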

```python
def main():
    if "messages" not in st.session_state:
        st.session_state.messages = []

    with st.sidebar:
        if st.button("Clear Chat"):
            st.session_state.messages = []
            st.rerun()

    # Display chat messages
    for message in st.session_state.messages:
        with st.chat_message(message["role"]):
            if message["role"] == "assistant":
                thinking, answer = extract_thinking_and_answer(message["content"])
                with st.expander("Show thinking process"):
                    st.markdown(thinking)
                st.markdown(answer)
            else:
                st.markdown(message["content"])

    # Chat input
    if prompt := st.chat_input("Ask your question about the Bhagavad Gita..."):
        # Display user message
        st.chat_message("user").markdown(prompt)
        st.session_state.messages.append({"role": "user", "content": prompt})

        # Generate and display response
        with st.chat_message("assistant"):
            message_placeholder = st.empty()
            with st.spinner("Thinking..."):
                full_response = pipeline(prompt, embed_model, llm, client)
                thinking, answer = extract_thinking_and_answer(full_response.text)

            with st.expander("Show thinking process"):
                st.markdown(thinking)

            # Reveal the answer word by word for a typing effect
            response = ""
            for chunk in answer.split():
                response += chunk + " "
                message_placeholder.markdown(response + "▌")
                sleep(0.05)
            message_placeholder.markdown(answer)

        # Add assistant response to history
        st.session_state.messages.append({"role": "assistant", "content": full_response.text})


if __name__ == "__main__":
    main()
```

Important Links

- You can find the full code

- Alternative Bhagavad Gita PDF: Download

- Replace the placeholder values in the code with your keys.

Conclusion

By combining Deepseek R1's reasoning, Qdrant's binary quantization, and LlamaIndex's RAG pipeline, we've built an AI assistant that delivers sub-2-second responses on 1,000+ pages. This project underscores how domain-specific LLMs and optimized vector databases can democratize access to ancient texts while maintaining cost efficiency. As open-source models continue to evolve, the possibilities for niche AI applications are limitless.

Key Takeaways

- Deepseek R1 rivals OpenAI o1 in reasoning at 1/27th the cost, making it ideal for domain-specific tasks like scripture analysis, while OpenAI suits broader knowledge needs.

- Understanding RAG pipeline implementation, with demonstrated code examples for document processing, embedding generation, and vector storage using LlamaIndex and Qdrant.

- Efficient vector storage optimization through binary quantization in Qdrant, enabling processing of large document collections while maintaining performance and accuracy.

- Structured prompt engineering with clear templates for handling multilingual queries (English, Hindi, Sanskrit) and managing out-of-context questions effectively.

- An interactive Streamlit UI to run inference on the application once the data is stored in the vector database.

Frequently Asked Questions

A. Minimal impact on recall! Qdrant's oversampling re-ranks top candidates using the original vectors, maintaining accuracy while boosting speed by up to 40x and slashing memory usage by 97%.

A. Yes! The RAG pipeline uses FastEmbed's embeddings and Deepseek R1's language flexibility. Custom prompts guide responses in English, Hindi, or Sanskrit. You can also use an embedding model that understands Hindi tokens; in our case, the model used understands English and Hindi text.

A. Deepseek R1 offers 27x lower API costs, comparable reasoning accuracy (20.5% vs o1's 21%), and superior coding and domain-specific performance. It's ideal for specialized tasks like scripture analysis, where cost and focused expertise matter.

The media shown in this article is not owned by Analytics Vidhya and is used at the Author's discretion.