In this blog, we walk through how to build a real-time dashboard for operational monitoring and analytics on streaming event data from Kafka, which often requires complex SQL, including filtering, aggregations, and joins with other data sets.

Apache Kafka is a widely used distributed data log built to handle streams of unstructured and semi-structured event data at massive scales. Organizations often use Kafka to track live application events ranging from sensor data to user activity, and the ability to visualize and dig deeper into this data can be essential to understanding business performance.

Tableau, also widely popular, is a tool for building interactive dashboards and visualizations.

In this post, we’ll create an example real-time Tableau dashboard on streaming data in Kafka in a series of easy steps, with no upfront schema definition or ETL involved. We’ll use Rockset as a data sink that ingests, indexes, and makes the Kafka data queryable using SQL, and JDBC to connect Tableau and Rockset.

Streaming Data from Reddit

For this example, let’s look at real-time Reddit activity over the course of a week. Rather than posts, let’s look at comments, which are perhaps a better proxy for engagement. We’ll use the Kafka Connect Reddit source connector to pipe new Reddit comments into our Kafka cluster. Each individual comment looks like this:

```json
{
  "payload": {
    "controversiality": 0,
    "name": "t1_ez72epm",
    "body": "I like that they loved it too! Thanks!",
    "stickied": false,
    "replies": {
      "data": {
        "children": []
      },
      "kind": "Listing"
    },
    "saved": false,
    "archived": false,
    "can_gild": true,
    "gilded": 0,
    "score": 1,
    "author": "natsnowchuk",
    "link_title": "Our 4 month old loves “airplane” rides. Hoping he enjoys the actual airplane ride this much in December.",
    "parent_id": "t1_ez6v8xa",
    "created_utc": 1567718035,
    "subreddit_type": "public",
    "id": "ez72epm",
    "subreddit_id": "t5_2s3i3",
    "link_id": "t3_d0225y",
    "link_author": "natsnowchuk",
    "subreddit": "Mommit",
    "link_url": "https://v.redd.it/pd5q8b4ujsk31",
    "score_hidden": false
  }
}
```
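Each comment arrives wrapped in a `payload` envelope. As a rough illustration of what a consumer sees, here is a minimal Python sketch that assumes the Kafka message value is a UTF-8 JSON string shaped like the sample comment. The `Comment` dataclass and `parse_comment` helper are hypothetical names, not part of the connector. The sketch pulls out the fields used later in this post and converts `created_utc` (epoch seconds) to a timezone-aware timestamp:

```python
import json
from dataclasses import dataclass
from datetime import datetime, timezone


@dataclass
class Comment:
    comment_id: str
    subreddit: str
    author: str
    body: str
    created: datetime  # converted from the epoch-seconds created_utc field


def parse_comment(raw: str) -> Comment:
    """Parse one Kafka message value into a Comment (hypothetical helper)."""
    payload = json.loads(raw)["payload"]  # the comment itself sits under "payload"
    return Comment(
        comment_id=payload["id"],
        subreddit=payload["subreddit"],
        author=payload["author"],
        body=payload["body"],
        created=datetime.fromtimestamp(payload["created_utc"], tz=timezone.utc),
    )


# A trimmed-down version of the sample comment.
raw = json.dumps({"payload": {
    "id": "ez72epm", "subreddit": "Mommit", "author": "natsnowchuk",
    "body": "I like that they loved it too! Thanks!", "created_utc": 1567718035,
}})
c = parse_comment(raw)
print(c.subreddit, c.created.isoformat())  # Mommit 2019-09-05T21:13:55+00:00
```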

Connecting Kafka to Rockset

For this demo, I’ll assume we have already set up our Kafka topic, installed the Confluent Reddit Connector, and followed the accompanying instructions to set up a comments topic that processes all new comments from Reddit in real time.

To get this data into Rockset, we’ll first need to create a new Kafka integration in Rockset. All we need for this step is the name of the Kafka topic that we’d like to use as a data source, and the type of that data (JSON / Avro).

Once we’ve created the integration, we can see a list of attributes that we need to use to set up our Kafka Connect connector. For the purposes of this demo, we’ll use the Confluent Platform to manage our cluster, but for self-hosted Kafka clusters these attributes can be copied into the relevant .properties file as specified here. In our case, as long as we have the Rockset Kafka Connector installed, we can add these manually in the Kafka UI:

Now that we have the Rockset Kafka Sink set up, we can create a Rockset collection and start ingesting data!

We now have data streaming live from Reddit directly into Rockset via Kafka, without having to worry about schemas or ETL at all.

Connecting Rockset to Tableau

Let’s see this data in Tableau!

I’ll assume we already have a Tableau Desktop account.

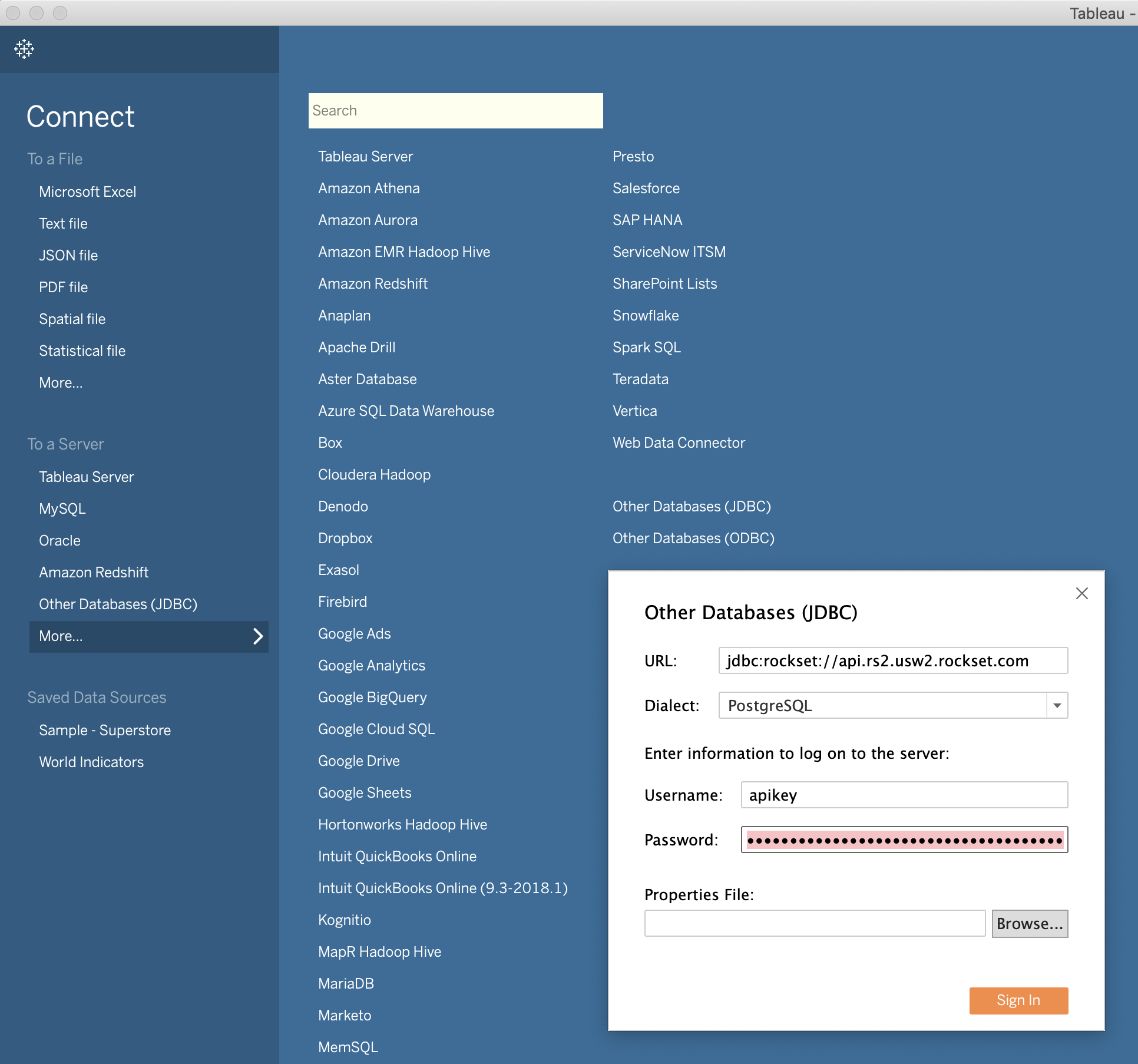

To connect Tableau with Rockset, we first need to download the Rockset JDBC driver from Maven and place it in ~/Library/Tableau/Drivers for Mac or C:\Program Files\Tableau\Drivers for Windows.
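If you script workstation setup, the driver placement step can be sketched in Python. This is a minimal sketch under stated assumptions: the helper names are hypothetical, the jar filename depends on the driver version you download from Maven, and writing under C:\Program Files normally needs administrator rights:

```python
import platform
import shutil
from pathlib import Path
from typing import Optional


def tableau_driver_dir(system: Optional[str] = None) -> Path:
    """Return the Tableau Desktop JDBC driver folder for Mac or Windows (hypothetical helper)."""
    system = system or platform.system()
    if system == "Darwin":  # macOS
        return Path.home() / "Library" / "Tableau" / "Drivers"
    if system == "Windows":
        return Path(r"C:\Program Files\Tableau\Drivers")
    raise ValueError(f"unsupported platform for Tableau Desktop: {system}")


def install_driver(jar: Path) -> Path:
    """Copy a downloaded driver jar into the driver folder and return its new path."""
    dest = tableau_driver_dir()
    dest.mkdir(parents=True, exist_ok=True)  # create the folder if it is missing
    return Path(shutil.copy2(jar, dest))
```

For example, `install_driver(Path("rockset-jdbc.jar"))` would copy a jar with that (hypothetical) name into the folder for the current OS. Tableau Desktop typically needs a restart before it picks up a new driver.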

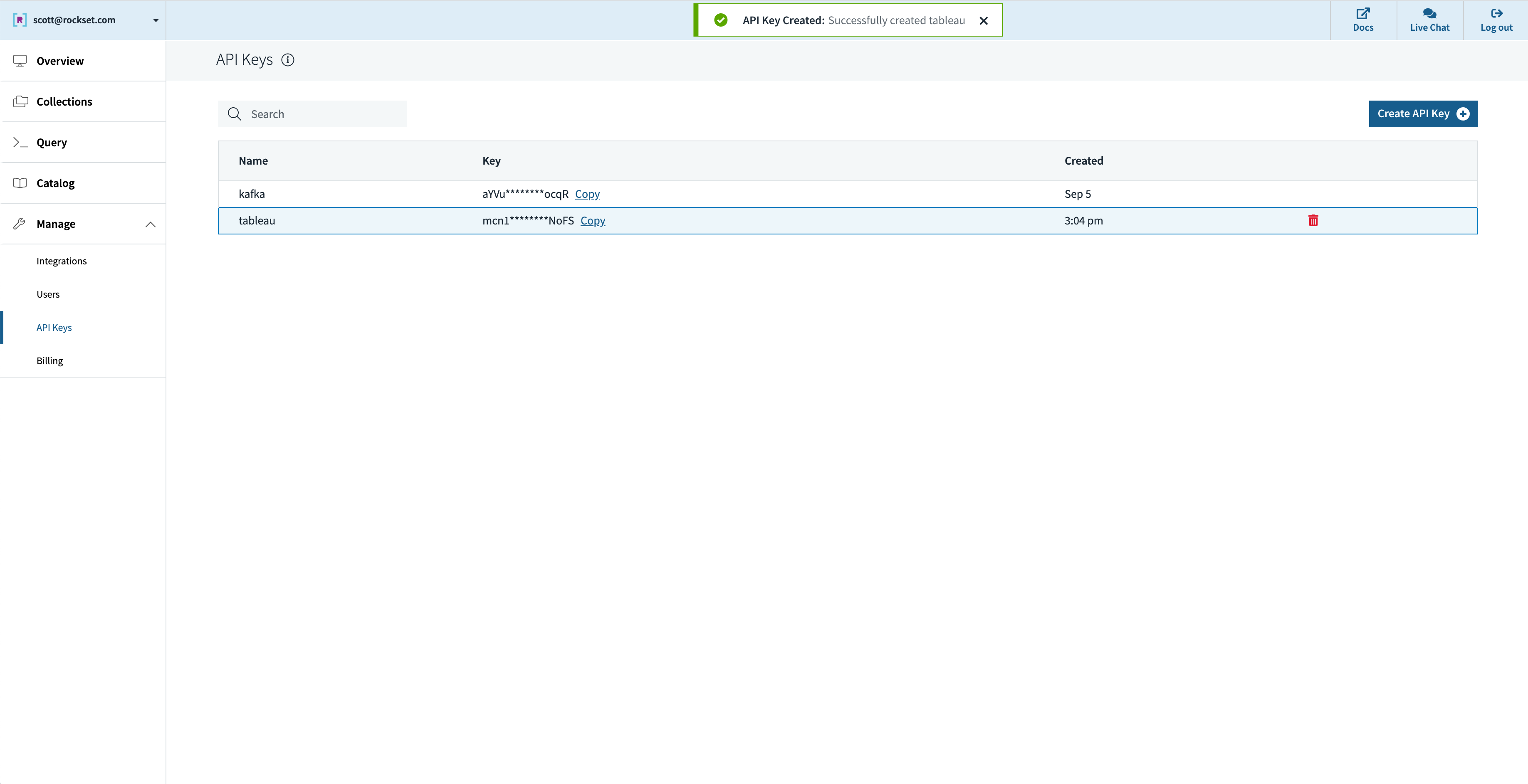

Next, let’s create an API key in Rockset that Tableau will use to authenticate requests:

In Tableau, we connect to Rockset by choosing “Other Databases (JDBC)” and filling in the fields, with our API key as the password:

That’s all it takes!

Creating real-time dashboards

Now that we have data streaming into Rockset, we can start asking questions. Given the nature of the data, we’ll first write the queries we need in Rockset, and then use them to power our live Tableau dashboards with the ‘Custom SQL’ feature.

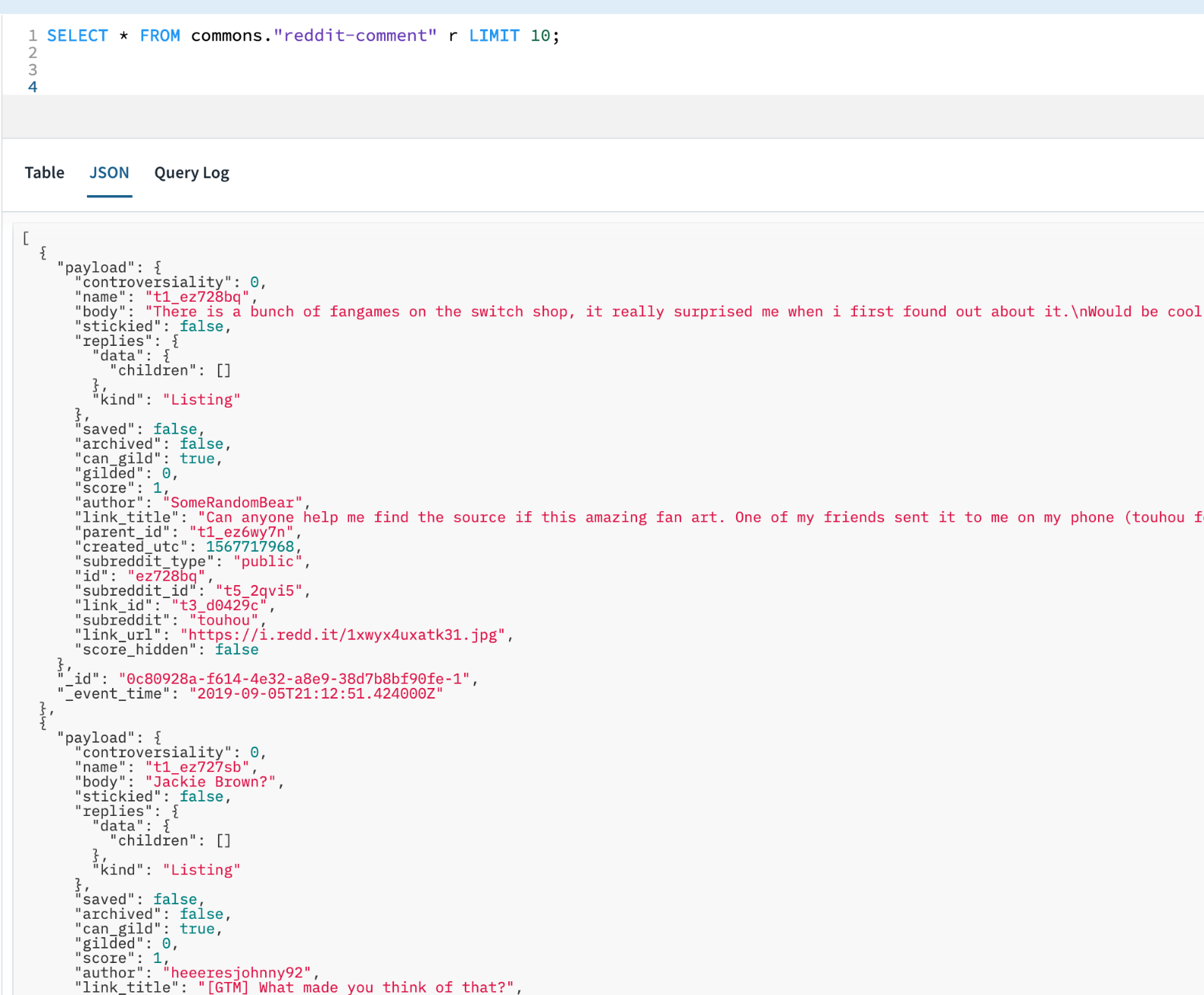

Let’s first look at the shape of the data in Rockset:

Because most of the primary fields are nested, we won’t be able to use Tableau to access them directly. Instead, we’ll write the SQL ourselves in Rockset and use the ‘Custom SQL’ option to bring it into Tableau.
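To see why nesting gets in the way, compare the nested comment document with the flat, one-column-per-field rows a Tableau data source expects. The small Python sketch below turns a nested document into dotted column names, which is roughly the projection a Custom SQL query has to perform. The `flatten` helper is hypothetical and is not part of Rockset or Tableau:

```python
from typing import Any, Dict


def flatten(doc: Dict[str, Any], prefix: str = "") -> Dict[str, Any]:
    """Recursively flatten nested dicts into dotted keys, e.g. payload.subreddit."""
    flat: Dict[str, Any] = {}
    for key, value in doc.items():
        name = f"{prefix}.{key}" if prefix else key
        if isinstance(value, dict):
            flat.update(flatten(value, name))  # descend into nested objects
        else:
            flat[name] = value  # scalars and lists become leaf "columns"
    return flat


doc = {"payload": {"subreddit": "Mommit",
                   "replies": {"data": {"children": []}, "kind": "Listing"}}}
print(flatten(doc))
# {'payload.subreddit': 'Mommit', 'payload.replies.data.children': [], 'payload.replies.kind': 'Listing'}
```

Lists such as `children` are kept as single values here. Expanding them into rows is a separate "unnest" step, which comes up again in the r/nfl analysis.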

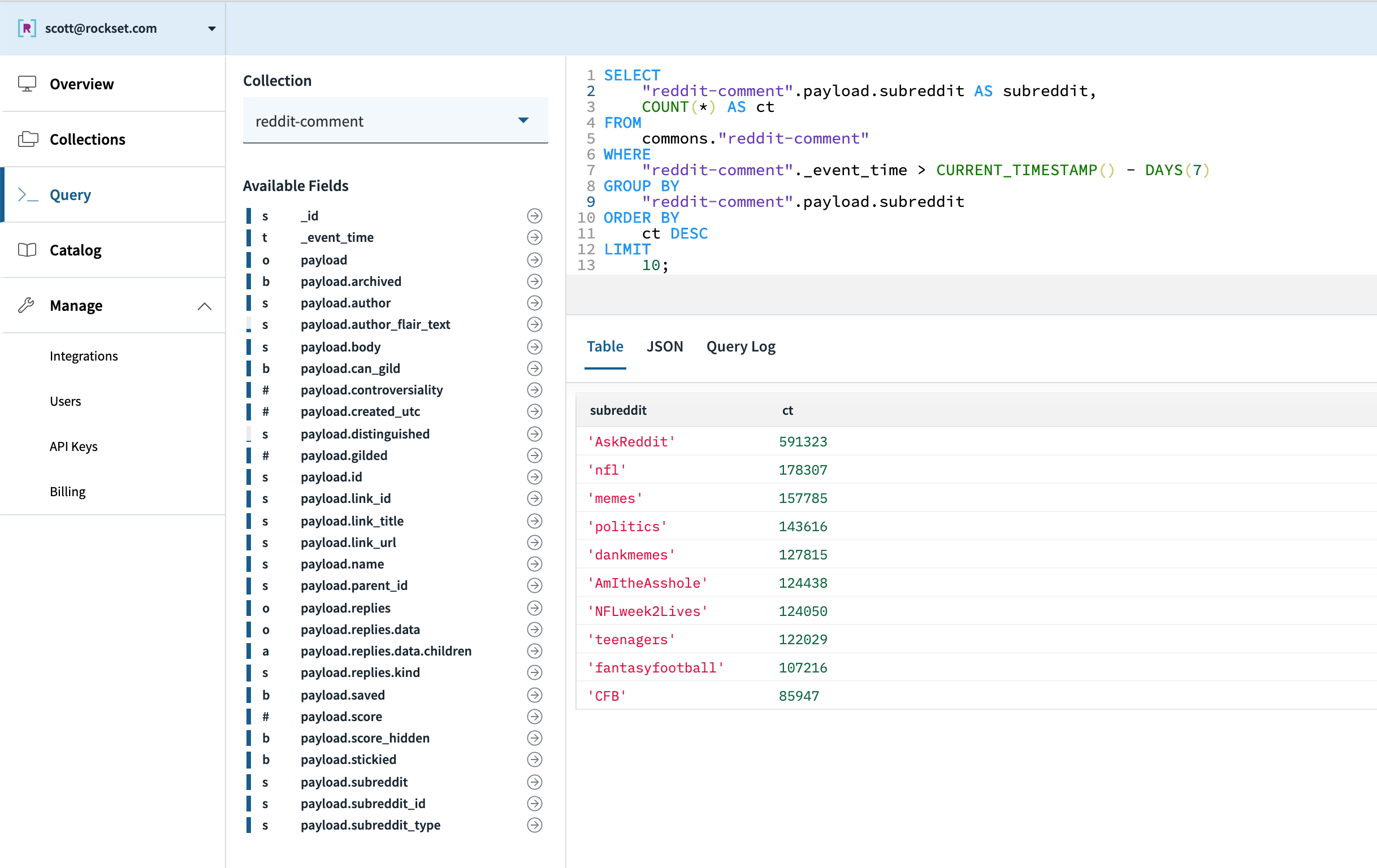

To start with, let’s explore general Reddit trends over the past week. If comments reflect engagement, which subreddits have the most engaged users? We can write a basic query to find the subreddits with the highest activity over the last week:
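The logic of that query is a filter, a group-by, and a top-k. Here is a plain Python equivalent, offered only to illustrate the aggregation and not as the Rockset SQL used for the chart. It assumes each comment is a dict carrying `subreddit` and `created_utc` fields as in the sample payload, and `top_subreddits` is a hypothetical helper:

```python
from collections import Counter
from datetime import datetime, timedelta, timezone


def top_subreddits(comments, now, k=10, window=timedelta(days=7)):
    """Count comments per subreddit inside the time window and return the k busiest.

    Roughly: WHERE created >= now - window GROUP BY subreddit
    ORDER BY COUNT(*) DESC LIMIT k.
    """
    cutoff = now - window
    counts = Counter(
        c["subreddit"]
        for c in comments
        if datetime.fromtimestamp(c["created_utc"], tz=timezone.utc) >= cutoff
    )
    return counts.most_common(k)


# Toy data, not real results.
now = datetime(2019, 9, 17, tzinfo=timezone.utc)
comments = [
    {"subreddit": "nfl", "created_utc": 1568592000},       # 2019-09-16 00:00 UTC
    {"subreddit": "nfl", "created_utc": 1568592000},
    {"subreddit": "CFB", "created_utc": 1568592000},
    {"subreddit": "politics", "created_utc": 1567296000},  # 2019-09-01, outside the window
]
print(top_subreddits(comments, now))  # [('nfl', 2), ('CFB', 1)]
```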

We can easily create a Custom SQL data source to represent this query and view the results in Tableau:

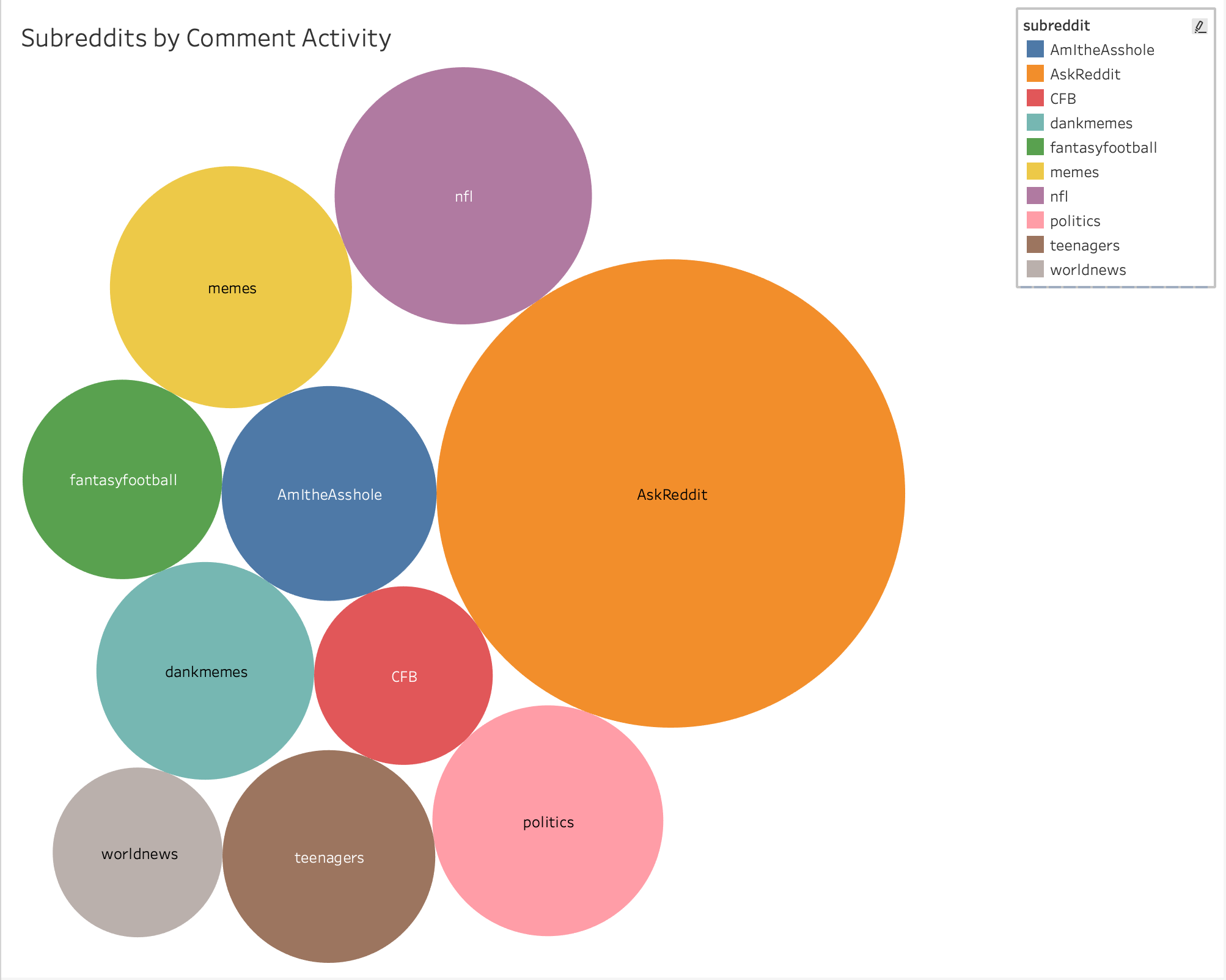

Here’s the final chart after collecting a week of data:

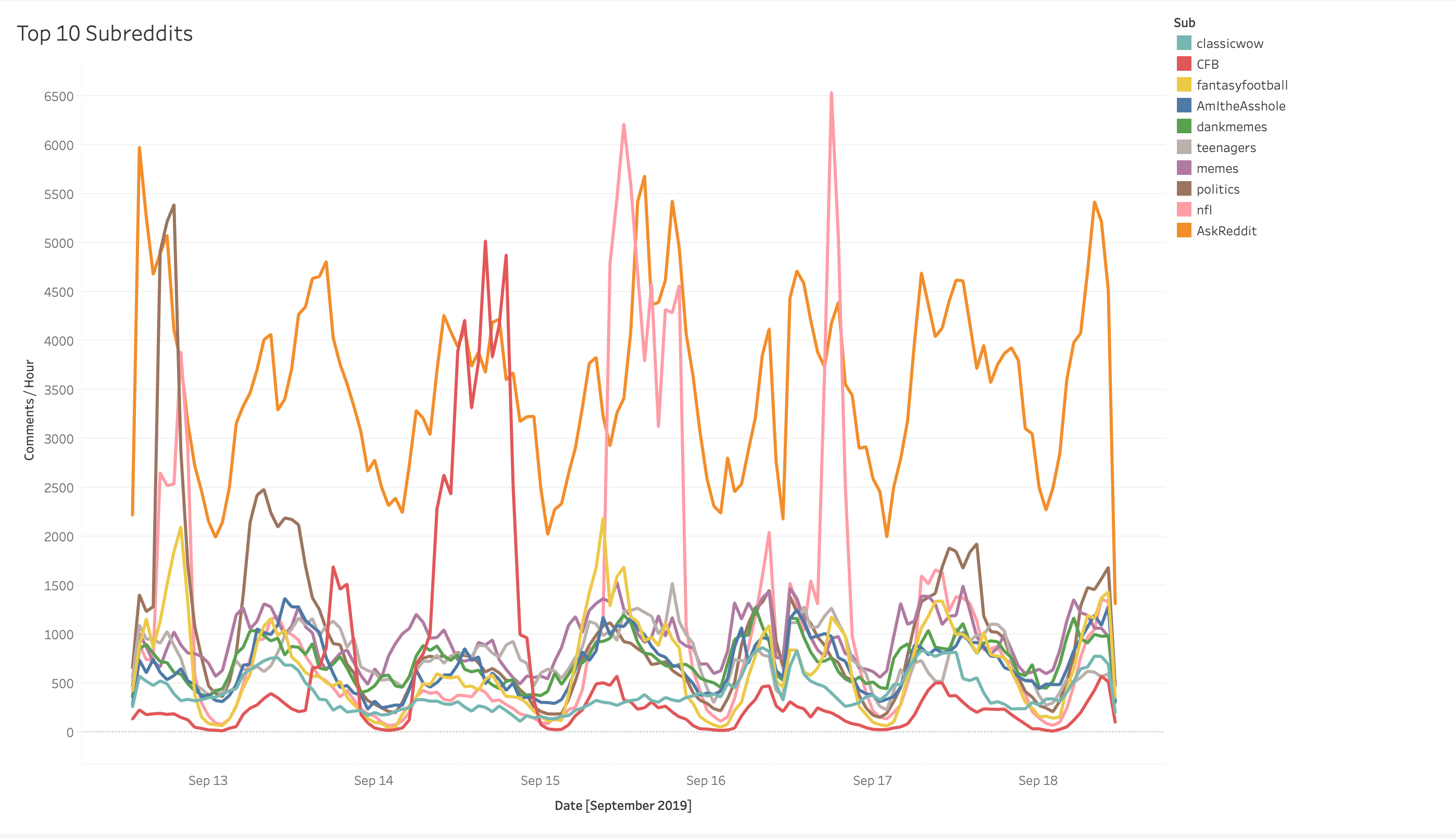

Interestingly, Reddit seems to love football: we see 3 football-related subreddits in the top 10 (r/nfl, r/fantasyfootball, and r/CFB). Or at the very least, the Redditors who love football are highly active at the start of the season. Let’s dig into this a bit more: are there any patterns we can observe in day-to-day subreddit activity? One might hypothesize that NFL-related subreddits spike on Sundays, while NCAA-related ones spike on Saturdays instead.

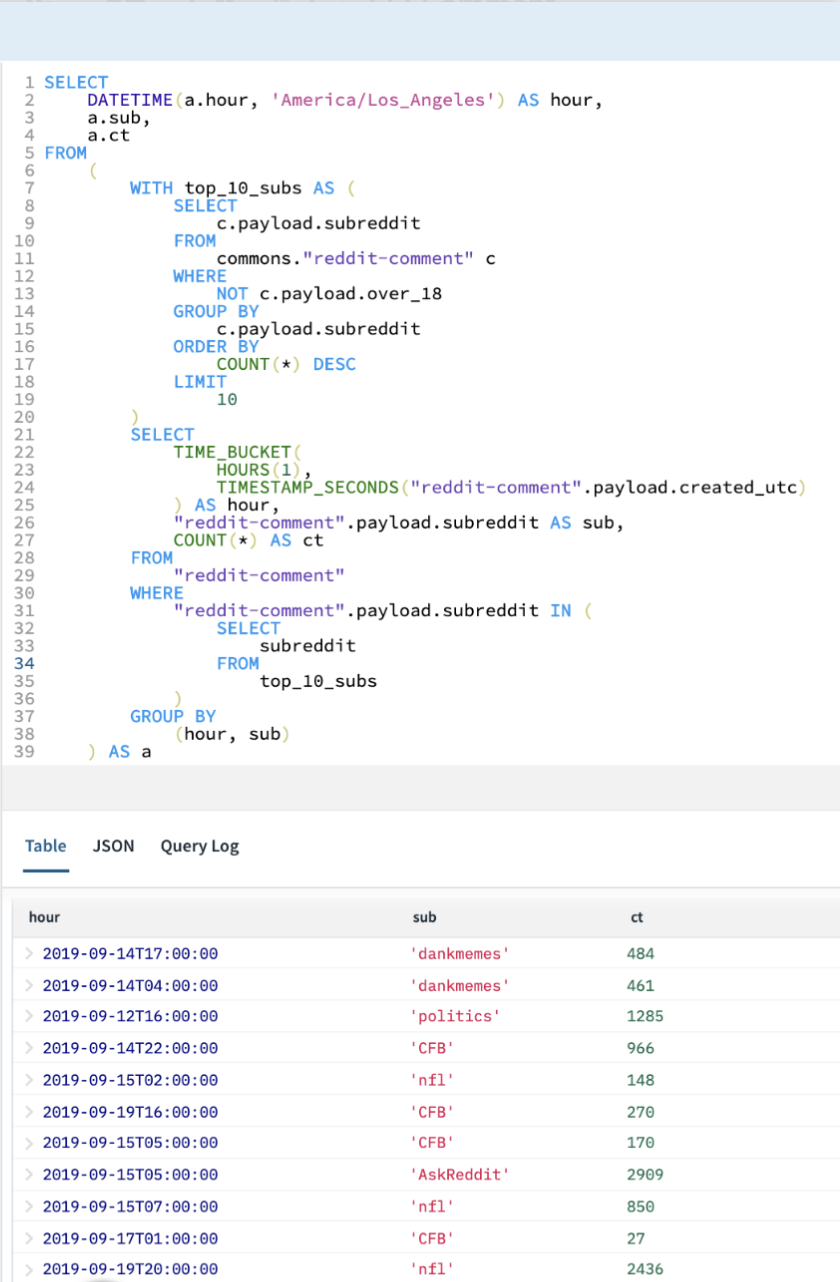

To answer this question, let’s write a query that buckets comments per subreddit per hour and plot the results. We’ll need some subqueries to find the top overall subreddits:
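The shape of that query can be illustrated in Python. An inner step picks the busiest subreddits overall, and an outer step counts comments per (subreddit, hour) for only those subreddits. This is a sketch of the logic, not the Rockset SQL behind the chart. `hourly_activity` is a hypothetical helper, and it buckets in UTC because the post doesn't say which time zone the chart uses:

```python
from collections import Counter
from datetime import datetime, timezone


def hourly_activity(comments, top_n=10):
    """Comment counts per (subreddit, UTC hour) for the top_n busiest subreddits."""
    # "Subquery": find the overall busiest subreddits first.
    top = {s for s, _ in Counter(c["subreddit"] for c in comments).most_common(top_n)}
    buckets = Counter()
    for c in comments:
        if c["subreddit"] not in top:
            continue  # skip subreddits outside the top_n
        ts = datetime.fromtimestamp(c["created_utc"], tz=timezone.utc)
        hour = ts.replace(minute=0, second=0, microsecond=0)  # truncate to the hour
        buckets[(c["subreddit"], hour)] += 1
    return dict(buckets)


base = 1568592000  # 2019-09-16 00:00 UTC (toy data)
comments = [
    {"subreddit": "nfl", "created_utc": base},
    {"subreddit": "nfl", "created_utc": base + 1800},  # same hour bucket
    {"subreddit": "nfl", "created_utc": base + 3600},  # next hour
    {"subreddit": "aww", "created_utc": base},         # not in the top 1
]
result = hourly_activity(comments, top_n=1)
```

To test the Saturday/Sunday hypothesis, you can label each bucket with `hour.strftime("%A")`. Evening US game-time activity falls on the following calendar day in UTC, so converting to a US time zone before bucketing may give a truer picture.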

Unsurprisingly, we see large spikes for r/CFB on Saturday and an even larger spike for r/nfl on Sunday (although, somewhat surprisingly, the most active single hour of the week on r/nfl came during Monday Night Football, as Baker Mayfield led the Browns to a convincing victory over the injury-plagued Jets). Also of interest, peak game-day activity in r/nfl surpassed the highs of every other subreddit in any other 1-hour interval, including r/politics during the Democratic Primary Debate the previous Monday.

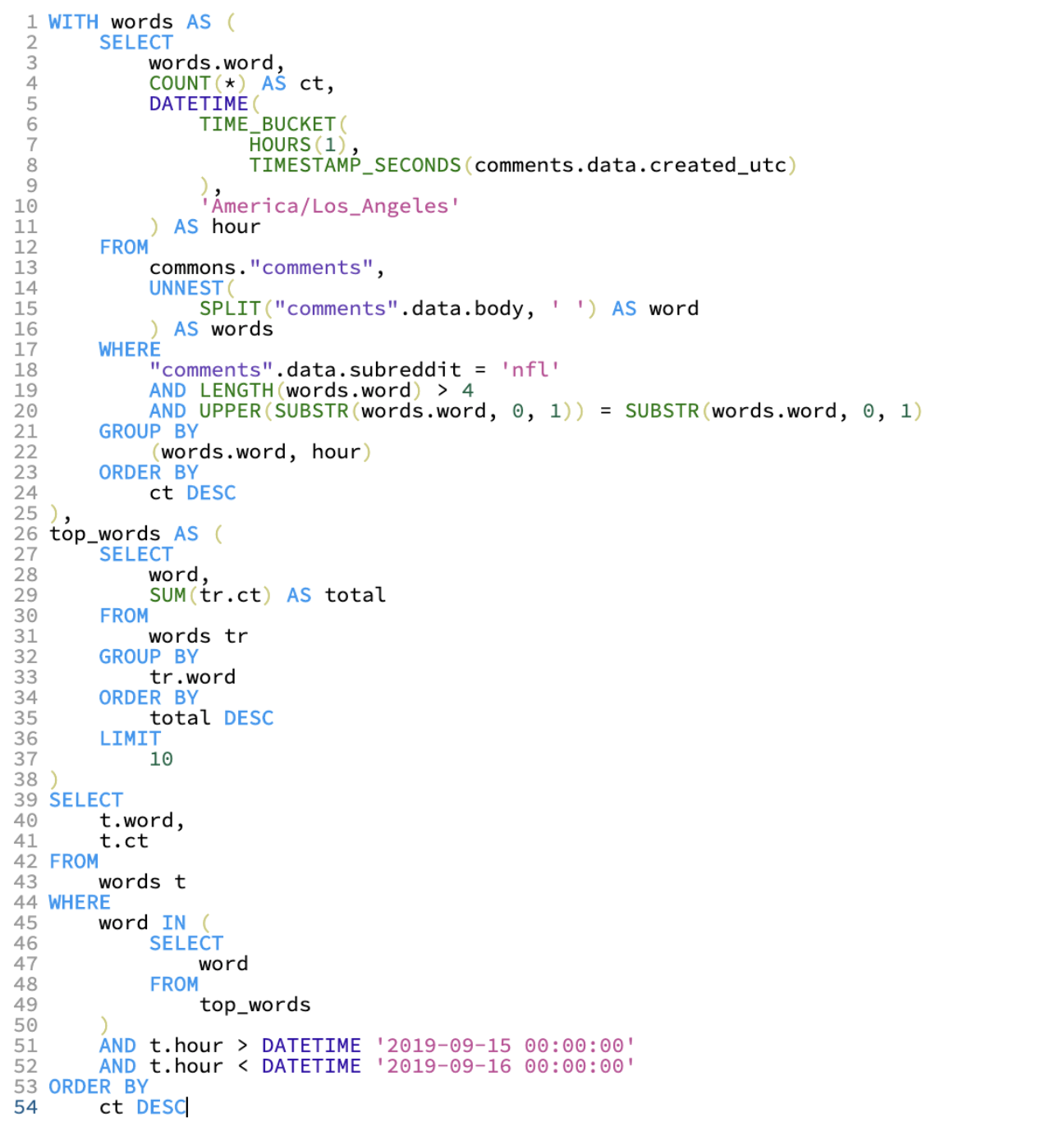

Finally, let’s dig a bit deeper into what exactly had the folks at r/nfl so fired up. We can write a query to find the 10 most frequently occurring player / team names and plot them over time as well. Let’s dig into Sunday in particular:

Note that to get this data, we had to split each comment into words and join the resulting unnested array back against the original collection. Not a trivial query!
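The split-and-unnest idea can be illustrated in a few lines of Python. Each comment body is broken into words, giving one logical row per (comment, word) pair. Those rows are matched against a set of names and then counted. The post doesn't say how player and team names were identified, so the `names` watchlist is a hypothetical stand-in, and only single-word names are handled:

```python
import re
from collections import Counter


def top_mentions(comments, names, k=10):
    """Split each comment body into words (the 'unnest' step) and count
    how often each watched name appears, returning the k most frequent."""
    watch = {n.lower() for n in names}
    counts = Counter()
    for c in comments:
        # One (comment, word) pair per match, analogous to unnesting the split array.
        for word in re.findall(r"[a-z']+", c["body"].lower()):
            if word in watch:
                counts[word] += 1
    return counts.most_common(k)


# Toy data and a hypothetical watchlist.
comments = [{"body": "Wentz was great, Wentz!"}, {"body": "Mayfield and the Browns"}]
names = ["Wentz", "Mayfield", "Browns", "Jets"]
print(top_mentions(comments, names))  # [('wentz', 2), ('mayfield', 1), ('browns', 1)]
```

Grouping the same counts by hour as well, as in the previous sketch, gives the "names over time" view.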

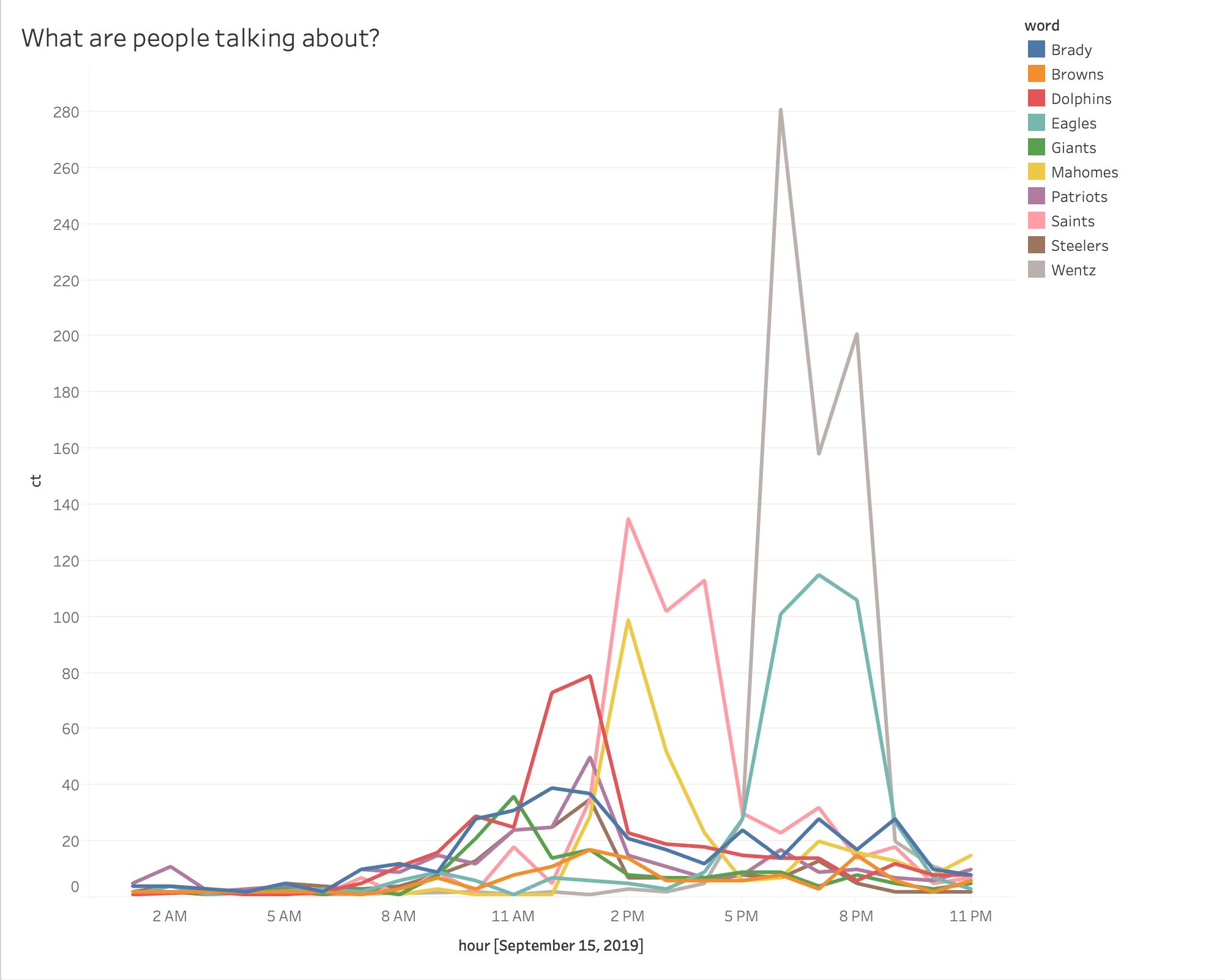

Again using the Tableau Custom SQL feature, we see that Carson Wentz seems to have the most buzz in Week 2!

Summary

In this blog post, we walked through creating an interactive, live dashboard in Tableau to analyze streaming data from Kafka. We used Rockset as a data sink for Kafka event data to provide low-latency SQL for serving real-time Tableau dashboards. The steps we followed were:

- Start with data in a Kafka topic.

- Create a collection in Rockset, using the Kafka topic as a source.

- Write one or more SQL queries that return the data needed in Tableau.

- Create a data source in Tableau using Custom SQL.

- Use the Tableau interface to create charts and real-time dashboards.

Visit our Kafka solutions page for more information on building real-time dashboards and APIs on Kafka event streams.