Introduction

As security professionals, we're constantly looking for ways to reduce risk and improve our workflow's efficiency. We've made great strides in using AI to identify malicious content, block threats, and discover and fix vulnerabilities. We also published the Secure AI Framework (SAIF), a conceptual framework for secure AI systems to ensure we're deploying AI in a responsible manner.

Today we're highlighting another way we use generative AI to help defenders gain the advantage: leveraging LLMs (Large Language Models) to speed up our security and privacy incident workflows.

Incident management is a team sport. We have to summarize security and privacy incidents for different audiences, including executives, leads, and partner teams. This can be a tedious and time-consuming process that depends heavily on the target group and the complexity of the incident. We estimate that writing a thorough summary can take nearly an hour, and more complex communications can take several hours. But we hypothesized that we could use generative AI to digest information much faster, freeing up our incident responders to focus on other, more critical tasks, and it proved true. Using generative AI, we could write summaries 51% faster while also improving their quality.

Our incident response approach

When we suspect a potential data incident, for example, we follow a rigorous process to manage it, from the identification of the problem, through the coordination of experts and tools, to its resolution and then closure. At Google, when an incident is reported, our Detection & Response teams work to restore normal service as quickly as possible, while meeting both regulatory and contractual compliance requirements. They do this by following the five main steps in the Google incident response program:

- Identification: Monitoring security events to detect and report on potential data incidents, using advanced detection tools, signals, and alert mechanisms to provide early indication of potential incidents.
- Coordination: Triaging the reports by gathering facts and assessing the severity of the incident based on factors such as potential harm to customers, nature of the incident, type of data that might be affected, and the impact of the incident on customers. A communication plan with appropriate leads is then determined.
- Resolution: Gathering key facts about the incident, such as root cause and impact, and integrating additional resources as needed to implement necessary fixes as part of remediation.
- Closure: After the remediation efforts conclude and the data incident is resolved, reviewing the incident and response to identify key areas for improvement.
- Continuous improvement: Crucial for the development and maintenance of incident response programs. Teams work to improve the program based on lessons learned, ensuring that necessary teams, training, processes, resources, and tools are maintained.

Google's Incident Response Process diagram flow

Leveraging generative AI

Our detection and response processes are critical in protecting our billions of global users from the growing threat landscape, which is why we're continuously looking for ways to improve them with the latest technologies and techniques. The growth of generative AI has brought with it incredible potential in this area, and we were eager to explore how it could help us improve parts of the incident response process. We started by leveraging LLMs not only to pioneer modern approaches to incident response, but also to ensure that our processes are efficient and effective at scale.

Managing incidents can be a complex process, and an additional factor is effective internal communication to leads, executives, and stakeholders on the threats and status of incidents. Effective communication is critical because it properly informs executives so that they can take any necessary actions, and because it helps meet regulatory requirements. Leveraging LLMs for this type of communication can save significant time for the incident commanders while improving quality at the same time.

Humans vs. LLMs

Given that LLMs have summarization capabilities, we wanted to explore whether they can generate summaries on par with, or as well as, humans. We ran an experiment that took 50 human-written summaries from native and non-native English speakers, and 50 LLM-written ones produced with our best (and final) prompt, and presented them to security teams without revealing the author.

We found that the LLM-written summaries covered all of the key points, were rated 10% higher than their human-written equivalents, and cut the time necessary to draft a summary in half.

Comparison of human vs LLM content completeness

Comparison of human vs LLM writing styles

Managing risks and protecting privacy

Leveraging generative AI is not without risks. To mitigate the risks around potential hallucinations and errors, any LLM-generated draft must be reviewed by a human. But not all risks come from the LLM: human misinterpretation of a fact or statement generated by the LLM can also happen. That is why it's important to ensure there is human accountability, as well as to monitor quality and feedback over time.

Given that our incidents can contain a mixture of confidential, sensitive, and privileged data, we had to ensure we built an infrastructure that does not store any data. Every component of this pipeline, from the user interface to the LLM to output processing, has logging turned off. The LLM itself does not use any input or output for retraining. Instead, we use metrics and indicators to ensure it's working properly.

Input processing

The type of data we process during incidents can be messy and often unstructured: free-form text, logs, images, links, impact stats, timelines, and code snippets. We needed to structure all of that data so the LLM “knew” which part of the information serves what purpose. For that, we first replaced long and noisy sections of code and logs with self-closing tags ( and

During prompt engineering, we refined this approach and added additional tags such as

Sample {incident} input
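The replacement of long code and log sections with self-closing tags can be sketched roughly as follows. This is a minimal illustration, not the tooling described here: the tag names `<OmittedCode/>` and `<OmittedLogs/>`, the `Section` type, the section labels, and the 500-character cutoff are all hypothetical, and the sketch assumes the incident has already been split into labeled sections.

```python
from dataclasses import dataclass

# Hypothetical placeholder tags; the actual tag names are not given in the text.
PLACEHOLDER_TAGS = {"code": "<OmittedCode/>", "log": "<OmittedLogs/>"}


@dataclass
class Section:
    kind: str  # assumed labels: "text", "code", "log"
    text: str


def compact_sections(sections, max_chars=500):
    """Replace long code/log sections with a self-closing placeholder tag."""
    parts = []
    for section in sections:
        tag = PLACEHOLDER_TAGS.get(section.kind)
        if tag is not None and len(section.text) > max_chars:
            # Keep the section's position in the input, drop its noisy body.
            parts.append(tag)
        else:
            parts.append(section.text)
    return "\n".join(parts)
```

Short snippets pass through unchanged in this sketch, so only the sections that would dominate the input are collapsed.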

Prompt engineering

Once we added structure to the input, it was time to engineer the prompt. We started simple by exploring how LLMs can view and summarize all of the current incident facts with a short task:

First prompt version

Limits of this prompt:

- The summary was too long, especially for executives trying to understand the risk and impact of the incident
- Some important facts weren't covered, such as the incident's impact and its mitigation
- The writing was inconsistent and did not follow our best practices, such as “passive voice”, “tense”, “terminology” or “format”
- Some irrelevant incident data was being integrated into the summary from email threads
- The model struggled to understand what the most relevant and up-to-date information was

For version 2, we tried a more elaborate prompt that would address the problems above: we told the model to be concise, and we explained what a well-written summary should be about: the main incident response steps (coordination and resolution).

Second prompt version

Limits of this prompt:

- The summaries still did not always succinctly and accurately address the incident in the format we were expecting
- At times, the model lost sight of the task or did not take all the guidelines into account
- The model still struggled to stick to the latest updates
- We noticed a tendency to draw conclusions on hypotheses with some minor hallucinations

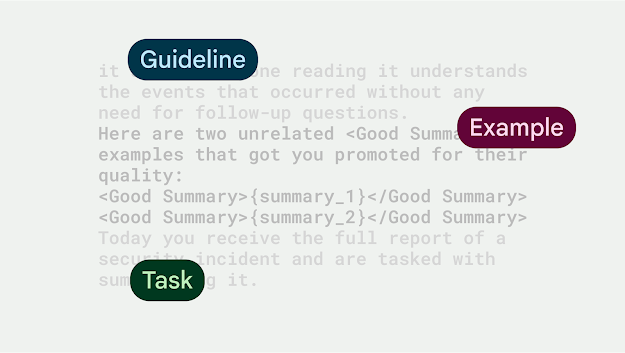

For the final prompt, we inserted 2 human-crafted summary examples and introduced a

Final prompt

This produced outstanding summaries, in the structure we wanted, with all key points covered, and almost without any hallucinations.
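To make the idea of adding human-written examples to a prompt concrete, here is a minimal sketch of a few-shot prompt builder. The wording, layout, and the `build_prompt` function are hypothetical and are not the prompt used in this work; the sketch only shows how guidelines, example summaries, and the structured incident input might be combined into one string.

```python
def build_prompt(guidelines, examples, incident_input):
    """Assemble a few-shot summarization prompt (hypothetical layout)."""
    lines = ["Summarize the incident below.", "", "Guidelines:"]
    lines += [f"- {guideline}" for guideline in guidelines]
    # Each human-written example is shown to the model before the real input.
    for index, example in enumerate(examples, start=1):
        lines += ["", f"Example summary {index}:", example]
    # Ending on "Summary:" nudges the model to start with the summary itself.
    lines += ["", "Incident:", incident_input, "", "Summary:"]
    return "\n".join(lines)
```

Keeping the examples in a list makes it easy to swap in different human-written summaries when evaluating prompt variants.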

Workflow integration

In integrating the prompt into our workflow, we wanted to ensure it was complementing the work of our teams, rather than solely writing communications. We designed the tooling so that the UI had a ‘Generate Summary’ button, which would pre-populate a text field with the summary that the LLM proposed. A human user can then either accept the summary and have it added to the incident, make manual changes to the summary and accept it, or discard the draft and start again.
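The three outcomes available to the human reviewer can be sketched as a small decision function. The names `Decision` and `resolve_draft` are hypothetical and only illustrate the accept, edit, and discard paths; they are not taken from the actual tooling.

```python
from enum import Enum


class Decision(Enum):
    ACCEPT = "accept"
    EDIT = "edit"
    DISCARD = "discard"


def resolve_draft(draft, decision, edited_text=None):
    """Return the summary to attach to the incident, or None if discarded."""
    if decision is Decision.ACCEPT:
        return draft
    if decision is Decision.EDIT:
        if not edited_text:
            raise ValueError("an edited summary is required for EDIT")
        return edited_text
    # DISCARD: nothing is attached and the user starts again.
    return None
```

In every path a person makes the final call, which matches the requirement that any LLM-generated draft be reviewed by a human.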

UI showing the ‘generate draft’ button and LLM proposed summary around a fake incident

Quantitative wins

Our newly built tool produced well-written and accurate summaries, resulting in 51% time saved per incident summary drafted by an LLM versus a human.

Time savings using LLM-generated summaries (sample size: 300)

The only edge cases we have seen were around hallucinations when the input size was small relative to the prompt size. In these cases, the LLM made up most of the summary and key points were incorrect. We fixed this programmatically: if the input size is smaller than 200 tokens, we won't call the LLM for a summary and let the humans write it.
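A guard of this kind might look like the sketch below. The 200-token threshold comes from the text; everything else is an assumption, in particular `count_tokens`, which approximates tokens by splitting on whitespace and would be replaced by the model's real tokenizer in practice.

```python
MIN_INPUT_TOKENS = 200  # threshold stated in the text


def count_tokens(text):
    """Crude stand-in for a real tokenizer: whitespace-separated words."""
    return len(text.split())


def should_call_llm(incident_input, min_tokens=MIN_INPUT_TOKENS):
    """Return True only when the input has at least `min_tokens` tokens."""
    return count_tokens(incident_input) >= min_tokens
```

When `should_call_llm` returns `False`, the tool would skip the LLM call and leave the summary to a human writer.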

Evolving to more complex use cases: Executive updates

Given these results, we explored other ways to apply and build upon the summarization success and extend it to more complex communications. We improved upon the initial summary prompt and ran an experiment to draft executive communications on behalf of the Incident Commander (IC). The goal of this experiment was to ensure executives and stakeholders quickly understand the incident facts, as well as to allow ICs to relay important information around incidents. These communications are complex because they go beyond just a summary: they include different sections (such as summary, root cause, impact, and mitigation), follow a specific structure and format, and adhere to writing best practices (such as neutral tone, active voice instead of passive voice, and minimal acronyms).

This experiment showed that generative AI can evolve beyond high-level summarization and help draft complex communications. Moreover, LLM-generated drafts reduced the time ICs spent writing executive summaries by 53%, while delivering at least on-par content quality in terms of factual accuracy and adherence to writing best practices.

What's next

We are constantly exploring new ways to use generative AI to protect our users more efficiently and look forward to tapping into its potential as cyber defenders. For example, we are exploring using generative AI as an enabler of ambitious memory safety projects like teaching an LLM to rewrite C++ code to memory-safe Rust, as well as more incremental improvements to everyday security workflows, such as getting generative AI to read design documents and issue security recommendations based on their content.
