Large Language Models (LLMs) have the potential to automate and reduce many kinds of workloads, including those of cybersecurity analysts and incident responders. But generic LLMs lack the domain-specific knowledge to handle these tasks well. While they may have been built with training data that included some cybersecurity-related sources, that is often insufficient for taking on more specialized tasks that require more up-to-date and, in some cases, proprietary knowledge to perform well: knowledge that was not available to the LLMs when they were trained.

There are several existing solutions for tuning "stock" (unmodified) LLMs for specific types of tasks. Unfortunately, these solutions were insufficient for the types of LLM applications that Sophos X-Ops is trying to implement. For that reason, SophosAI has assembled a framework that uses DeepSpeed, a library developed by Microsoft that can be used to train and tune the inference of a model with (in theory) trillions of parameters by scaling up the compute power and number of graphics processing units (GPUs) used during training. The framework is open-source licensed and can be found in our GitHub repository.

While many of the components of the framework are not novel and leverage existing open-source libraries, SophosAI has synthesized several of the key elements for ease of use. And we continue to work on improving the framework's performance.

The (inadequate) alternatives

There are several existing approaches to adapting stock LLMs to domain-specific knowledge. Each of them has its own advantages and limitations.

| Approach | Techniques applied | Limitations |
|---|---|---|
| Retrieval Augmented Generation | | |
| Continued Training | | |
| Parameter Efficient Fine-tuning | | |

To be fully effective, a domain-expert LLM requires pre-training of all its parameters to learn a company's proprietary knowledge. That undertaking can be resource-intensive and time-consuming, which is why we turned to DeepSpeed for our training framework, which we implemented in Python. The version of the framework that we are releasing as open source can be run in the Amazon Web Services SageMaker machine learning service, but it could be adapted to other environments.

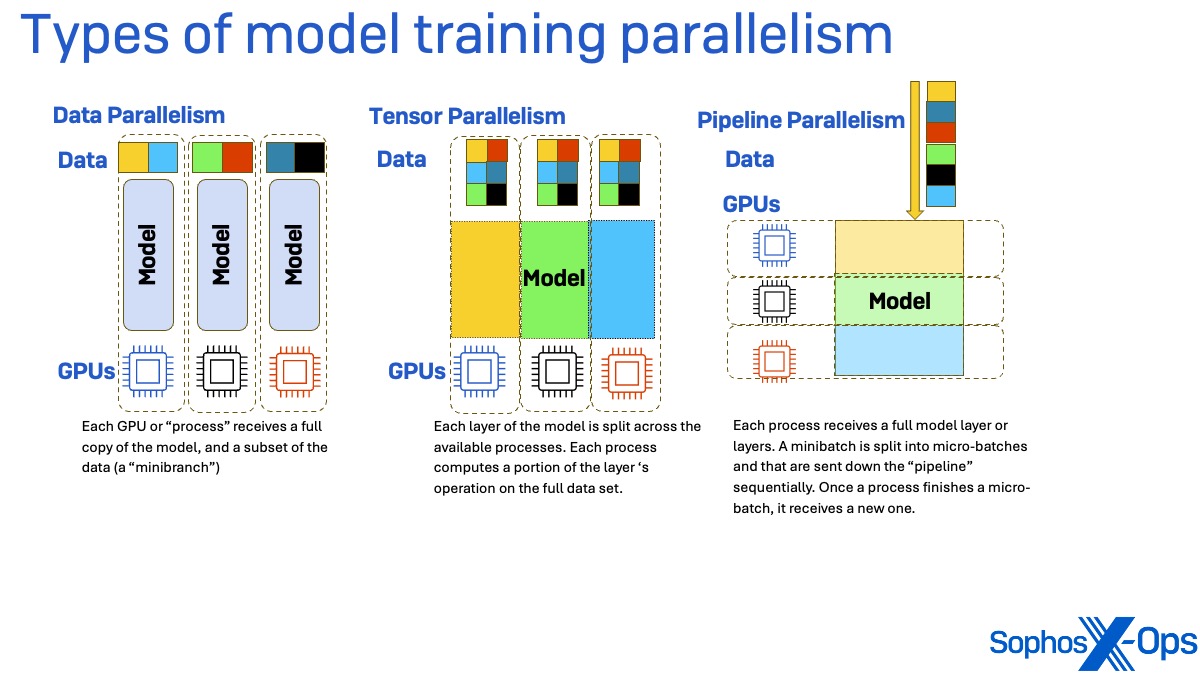

Training frameworks (including DeepSpeed) allow you to scale up large model training tasks through parallelism. There are three main types of parallelism: data, tensor, and pipeline.

In data parallelism, each process working on the training task (essentially each graphics processing unit, or GPU) receives a copy of the full model's weights but only a subset of the data, called a minibatch. After the forward pass through the data (to calculate the loss, a measure of how far the model's predictions are from the expected outputs) and the backward pass (to calculate the gradient of the loss) are completed, the resulting gradients are synchronized (typically averaged) across processes so that every copy of the model applies the same update.
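The synchronization step can be illustrated with a toy simulation. This is a minimal sketch, not DeepSpeed code: it assumes a one-parameter linear model `y_hat = w * x` with mean-squared-error loss and equal-sized minibatches, and the helper names `local_gradient` and `data_parallel_gradient` are hypothetical. Averaging the per-worker gradients reproduces the gradient of the full batch.

```python
# Toy data-parallel training step for a 1-D linear model y_hat = w * x with
# mean-squared-error loss. Each simulated worker holds a full copy of w but
# sees only its own minibatch; gradients are averaged afterwards (all-reduce).

def local_gradient(w, xs, ys):
    """Gradient of mean((w*x - y)^2) with respect to w over one minibatch."""
    n = len(xs)
    return sum(2 * (w * x - y) * x for x, y in zip(xs, ys)) / n

def data_parallel_gradient(w, xs, ys, num_workers):
    """Split the batch evenly across workers and average their gradients."""
    size = len(xs) // num_workers  # assumption: the batch divides evenly
    grads = []
    for rank in range(num_workers):
        lo, hi = rank * size, (rank + 1) * size
        # Every worker uses the same weight w: a full replica of the model.
        grads.append(local_gradient(w, xs[lo:hi], ys[lo:hi]))
    # Synchronization step: average the per-worker gradients.
    return sum(grads) / num_workers

xs = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0, 7.0, 8.0]
ys = [2.1, 3.9, 6.2, 8.1, 9.8, 12.2, 13.9, 16.1]
w = 0.5
full = local_gradient(w, xs, ys)
parallel = data_parallel_gradient(w, xs, ys, num_workers=4)
print(abs(full - parallel) < 1e-9)  # True
```

Because every minibatch is the same size, the mean of the minibatch means equals the full-batch mean. This is why averaging is the natural way to combine gradients in this setting.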

In tensor parallelism, each layer of the model being trained is split across the available processes. Each process computes a portion of the layer's operation using the same full batch of input data. The partial outputs from each process are then synchronized across processes to create a single output matrix.
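The following sketch illustrates one common way to split a layer: dividing a linear layer's weight matrix column-wise. It is plain Python rather than DeepSpeed or Megatron code, and the function names are hypothetical. Each simulated process multiplies the full input by its own column block. The "synchronization" step is a gather that concatenates the blocks, and the result matches the unsplit layer. (A row-wise split instead produces partial sums that would be added together.)

```python
def matmul(a, b):
    """Plain-Python matrix multiply: a is (n x k), b is (k x m)."""
    return [[sum(a[i][t] * b[t][j] for t in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]

def split_columns(w, num_procs):
    """Give each process a contiguous block of the weight matrix's columns."""
    cols = len(w[0]) // num_procs  # assumption: columns divide evenly
    return [[row[p * cols:(p + 1) * cols] for row in w] for p in range(num_procs)]

def tensor_parallel_layer(x, w, num_procs):
    shards = split_columns(w, num_procs)
    # Each process sees the full input x but holds only its slice of the layer.
    partial_outputs = [matmul(x, shard) for shard in shards]
    # Synchronization (a gather): stitch the column blocks back together row by row.
    return [sum((part[i] for part in partial_outputs), []) for i in range(len(x))]

x = [[1, 2], [3, 4]]              # 2 inputs, 2 features
w = [[1, 0, 2, 1], [0, 1, 1, 3]]  # 2 features -> 4 outputs
print(tensor_parallel_layer(x, w, num_procs=2))  # [[1, 2, 4, 7], [3, 4, 10, 15]]
print(matmul(x, w))                              # [[1, 2, 4, 7], [3, 4, 10, 15]]
```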

Pipeline parallelism splits up the model differently. Instead of parallelizing by splitting layers of the model, each layer (or group of consecutive layers) of the model receives its own process. The minibatches of data are divided into micro-batches that are sent down the "pipeline" sequentially. Once a process finishes a micro-batch, it receives a new one. This method may experience "bubbles" where a process sits idle, waiting for the output of processes hosting earlier model layers.
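The bubbles can be made concrete with a small scheduling sketch. It assumes a simple fill-and-drain, forward-only schedule (in the style of GPipe) in which every stage takes one time step per micro-batch. DeepSpeed's actual pipeline schedule also interleaves backward passes and may differ, and the helper names are hypothetical. With S stages and M micro-batches, the idle fraction works out to (S - 1) / (S + M - 1). This is why splitting a minibatch into more micro-batches shrinks the bubble.

```python
def pipeline_schedule(num_stages, num_microbatches):
    """Return grid[t][s] = micro-batch that stage s runs at step t, or None if idle.

    Fill-and-drain forward schedule: stage s can start micro-batch m only after
    stage s-1 has finished it, so it runs at step s + m."""
    total_steps = num_stages + num_microbatches - 1
    grid = [[None] * num_stages for _ in range(total_steps)]
    for s in range(num_stages):
        for m in range(num_microbatches):
            grid[s + m][s] = m
    return grid

def bubble_fraction(grid):
    """Fraction of (step, stage) slots in which a stage is idle."""
    slots = sum(len(row) for row in grid)
    idle = sum(cell is None for row in grid for cell in row)
    return idle / slots

grid = pipeline_schedule(num_stages=4, num_microbatches=8)
for t, row in enumerate(grid):
    print(t, " ".join("--" if m is None else f"m{m}" for m in row))
print(bubble_fraction(grid))  # 12 idle slots out of 44, i.e. 3/11
```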

These three parallelism techniques can be combined in several ways, and the DeepSpeed training library does combine them.
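When all three techniques are combined, each GPU gets a coordinate along each axis, and the total GPU count is the product of the three degrees. The sketch below maps a global rank to (pipeline, data, tensor) coordinates. The ordering (tensor ranks varying fastest, then data, then pipeline) is an assumption chosen for illustration and is not necessarily the layout DeepSpeed uses internally. The function names are hypothetical.

```python
def rank_to_coords(rank, dp, tp, pp):
    """Map a global rank to (pipeline, data, tensor) coordinates.

    Assumption: tensor-parallel ranks vary fastest, then data, then pipeline."""
    if not 0 <= rank < dp * tp * pp:
        raise ValueError("rank out of range")
    tp_rank = rank % tp
    dp_rank = (rank // tp) % dp
    pp_rank = rank // (tp * dp)
    return pp_rank, dp_rank, tp_rank

def data_parallel_group(rank, dp, tp, pp):
    """Ranks holding the same model slice (same pipeline stage and tensor shard).

    In this sketch, these are the ranks that would average gradients together."""
    pp_rank, _, tp_rank = rank_to_coords(rank, dp, tp, pp)
    return [pp_rank * dp * tp + d * tp + tp_rank for d in range(dp)]

# 8 GPUs = 2-way data x 2-way tensor x 2-way pipeline parallelism.
for r in range(8):
    print(r, rank_to_coords(r, dp=2, tp=2, pp=2))
print(data_parallel_group(5, dp=2, tp=2, pp=2))  # [5, 7]
```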

Doing it with DeepSpeed

DeepSpeed performs sharded data parallelism. Every model layer is split so that each process gets a slice, and each process is given a separate minibatch as input. During the forward pass, each process shares its slice of the layer with the other processes. At the end of this communication, each process has a copy of the full model layer.

Each process computes the layer output for its minibatch. After the process finishes the computation for the given layer and its minibatch, it discards the parts of the layer it was not originally holding.

The backward pass through the training data is done in a similar way. As with data parallelism, the gradients are gathered at the end of the backward pass and synchronized across processes.
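The forward pass described above (shard, gather, compute, discard) can be sketched for a single layer. This is a plain-Python simulation, not DeepSpeed's implementation. The "layer" is just a weight vector applied by dot product, each loop iteration stands in for one process, and the helper names are hypothetical. The backward pass, which the sketch omits, would gather the layer again in the same way.

```python
def shard(weights, num_procs):
    """Split a layer's weights so each process keeps one contiguous slice."""
    size = len(weights) // num_procs  # assumption: even split
    return [weights[p * size:(p + 1) * size] for p in range(num_procs)]

def all_gather(shards):
    """Every process receives every shard and concatenates them into the full layer."""
    full = []
    for s in shards:
        full.extend(s)
    return full

def sharded_forward(weight_shards, minibatches):
    """One layer's forward pass under sharded data parallelism.

    weight_shards[p] is the only slice process p keeps between layers;
    minibatches[p] is the list of input vectors given to process p."""
    outputs = []
    for batch in minibatches:  # each iteration stands in for one process
        full_layer = all_gather(weight_shards)  # temporarily materialize the layer
        outputs.append([sum(w * x for w, x in zip(full_layer, vec)) for vec in batch])
        del full_layer  # drop the parts this process did not originally own
    return outputs

weights = [0.5, -1.0, 2.0, 1.5]
shards = shard(weights, 2)  # process 0 keeps [0.5, -1.0], process 1 keeps [2.0, 1.5]
minibatches = [[[1, 2, 3, 4]], [[0, 1, 0, 1], [2, 2, 2, 2]]]
print(sharded_forward(shards, minibatches))  # [[10.5], [0.5, 6.0]]
```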

Training performance is constrained more by memory than by processing power. Adding more GPUs to supply extra memory for a batch that is too large for a single GPU's memory can carry a significant performance cost, because of the communication speed between GPUs, as well as the cost of using more processors than would otherwise be required to run the process. One of the key elements of the DeepSpeed library is its Zero Redundancy Optimizer (ZeRO), a set of memory-utilization techniques that can efficiently parallelize very large language model training. ZeRO can reduce the memory consumption of each GPU by partitioning the model states (optimizer states, gradients, and parameters) across data-parallel processes instead of duplicating them in every process.

The trick is finding the right combination of training approaches and optimizations for your computational budget. There are three selectable levels of partitioning in ZeRO:

- ZeRO Stage 1 shards the optimizer states across processes.

- Stage 2 shards the optimizer states + the gradients.

- Stage 3 shards the optimizer states + the gradients + the model weights.
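The memory effect of each stage can be estimated with the model-state accounting from the ZeRO paper (Rajbhandari et al.). The sketch below assumes mixed-precision Adam: 2 bytes per parameter for fp16 weights, 2 bytes for fp16 gradients, and 12 bytes for optimizer state (fp32 weights, momentum, and variance). It counts only model states, not activations or temporary buffers, so it is a lower bound rather than a prediction of real usage. `zero_memory_gb` is a hypothetical helper, and stage 0 stands for plain data parallelism.

```python
def zero_memory_gb(params_billions, num_gpus, stage, k=12):
    """Approximate per-GPU memory (GB) for model states under ZeRO.

    Assumes mixed-precision Adam: 2 bytes/param fp16 weights, 2 bytes/param fp16
    gradients, k bytes/param optimizer state. Activations are not included."""
    psi, n = params_billions, num_gpus
    weights, grads, optim = 2 * psi, 2 * psi, k * psi
    if stage == 0:  # plain data parallelism: everything replicated
        return weights + grads + optim
    if stage == 1:  # shard optimizer states
        return weights + grads + optim / n
    if stage == 2:  # shard optimizer states + gradients
        return weights + (grads + optim) / n
    if stage == 3:  # shard optimizer states + gradients + weights
        return (weights + grads + optim) / n
    raise ValueError("stage must be 0, 1, 2, or 3")

# A 7.5-billion-parameter model on 64 GPUs.
for stage in range(4):
    print(stage, round(zero_memory_gb(7.5, 64, stage), 1))
# 0 120.0
# 1 31.4
# 2 16.6
# 3 1.9
```

Under these assumptions, Stage 1 still replicates the weights and gradients on every GPU, which is why it needs more memory than Stages 2 and 3.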

Each stage has its own relative benefits. ZeRO Stage 1 will generally be faster, for example, but requires more memory than Stage 2 or 3. There are two separate inference approaches within the DeepSpeed toolkit:

- DeepSpeed Inference: an inference engine with optimizations such as kernel injection; this has lower latency but requires more memory.

- ZeRO Inference: allows parameters to be offloaded into CPU or NVMe memory during inference; this has higher latency but consumes less GPU memory.
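To make the ZeRO Inference option concrete, the sketch below builds a DeepSpeed configuration dictionary that enables ZeRO Stage 3 with parameter offload to CPU or NVMe. The keys (`zero_optimization`, `stage`, `offload_param`, `device`, `nvme_path`, `pin_memory`, `fp16`, `train_micro_batch_size_per_gpu`) are DeepSpeed configuration keys. The chosen values, the `/local_nvme` path, and the helper name `zero_inference_config` are illustrative assumptions, not the settings used in the Sophos repository. The lower-latency DeepSpeed Inference path is configured differently, through `deepspeed.init_inference` (for example with `replace_with_kernel_inject=True` for kernel injection), and is not shown here.

```python
import json

def zero_inference_config(offload_device="cpu", nvme_path=None, micro_batch_size=1):
    """Build a DeepSpeed config dict for ZeRO Stage 3 with parameter offload.

    Values are illustrative assumptions; tune them for real hardware."""
    if offload_device not in ("cpu", "nvme"):
        raise ValueError("offload_device must be 'cpu' or 'nvme'")
    offload = {"device": offload_device, "pin_memory": True}
    if offload_device == "nvme":
        if nvme_path is None:
            raise ValueError("nvme_path is required when offloading to NVMe")
        offload["nvme_path"] = nvme_path  # hypothetical mount point in the usage below
    return {
        "train_micro_batch_size_per_gpu": micro_batch_size,  # batch-size entry for the engine
        "fp16": {"enabled": True},
        "zero_optimization": {
            "stage": 3,  # parameters must be partitioned before they can be offloaded
            "offload_param": offload,
        },
    }

print(json.dumps(zero_inference_config("nvme", nvme_path="/local_nvme"), indent=2))
```

Offloading to NVMe trades even more latency for GPU and CPU memory savings compared with CPU offload, matching the trade-off described for ZeRO Inference.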

Our Contributions

The Sophos AI team has put together a toolkit based on DeepSpeed that helps take some of the pain out of using it. While the components of the toolkit itself are not novel, what is new is the convenience of having several key elements synthesized for ease of use.

At the time of its creation, this tool repository was the first to combine training and both DeepSpeed inference types (DeepSpeed Inference and ZeRO Inference) into one configurable script. It was also the first repository to create a custom container for running the latest DeepSpeed version on Amazon Web Services' SageMaker. And it was the first repository to perform distributed, script-based DeepSpeed inference that was not run as an endpoint on SageMaker. The training methods currently supported include continued pre-training, supervised fine-tuning, and preference optimization.

The repository and its documentation can be found here on Sophos' GitHub.