The era of having to copy-paste code from an AI chat tab into your code editor came to an end some time ago. AI-powered coding assistants have become increasingly sophisticated, and tools like Aider show how powerful command-line AI integration can be for development workflows. The downside is that these tools often require you to learn specific commands and syntax to communicate effectively with the AI.

Claude Code builds on this foundation with a more intuitive approach. Instead of memorizing commands, you can describe what you want to do using natural language.

Getting Started

Download the project materials via the Download Materials link at the top and bottom of this page. Next, unzip the project somewhere for later use.

To follow along with this tutorial, you'll need to have the following installed:

With that taken care of, it's time to take a closer look at what you can do with Claude Code and how to install it.

What’s Claude Code?

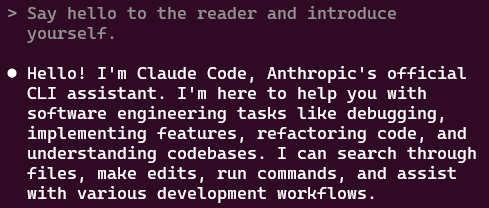

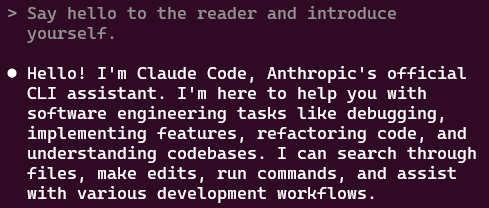

Claude Code is an agentic command-line tool. That's a fancy term for a CLI program that can understand what you want to accomplish and then figure out and execute the steps to do it, rather than just running one specific command at a time. Instead of having to switch back and forth between your code editor and an AI chat tab, you can delegate coding tasks directly to Claude from your command line.

Think of it as a smart assistant that can help you with a wide range of development tasks, with access to many tools and resources. It's designed to streamline your development process by bringing Claude's coding capabilities right where you're already working.

Setting Up Claude Code

Before diving into the installation, you need to know that using Claude Code isn't free.

Claude Code needs either a Claude subscription or an Anthropic API key to function. If you can swing it, I'd strongly recommend getting an annual Claude Pro subscription. It's much more cost-effective than paying per API call, since Claude Code can burn through tokens quickly.

Not sure if Claude Code is worth it? Grab an API key and load it with $10 in credit. That'll get you through this tutorial with some tokens left over to experiment.

Whichever option you go with, the next step is to install Claude Code!

Installation

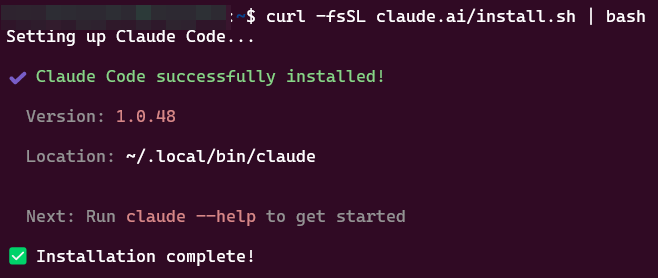

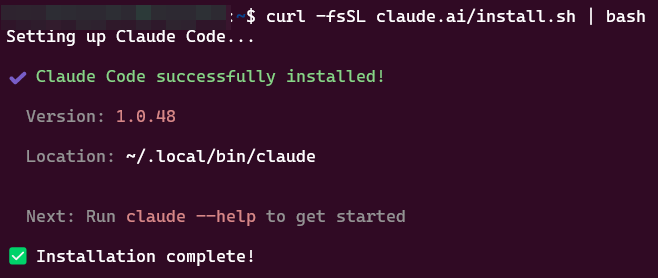

Open a new terminal window and run the command below to install Claude Code:

npm install -g @anthropic-ai/claude-code

You should see the following message after the installation is complete:

Configuring Claude Code

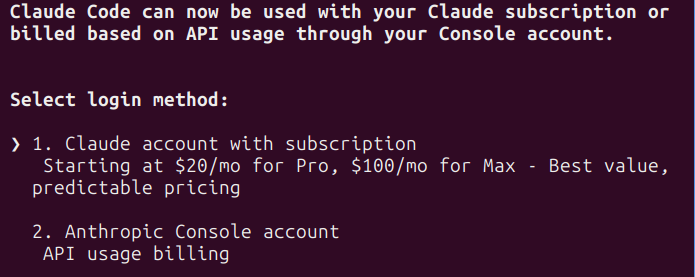

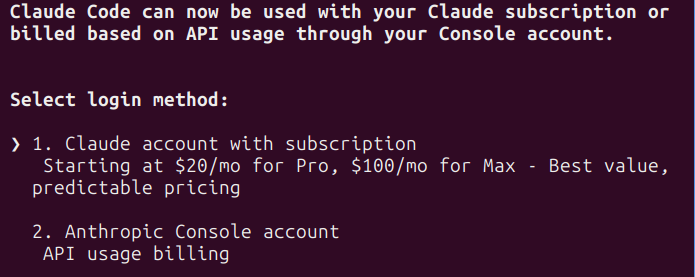

When you run Claude Code for the first time, it will ask you to choose a color mode; pick whatever looks best in your terminal. After that, you'll be asked for your login method:

If you have a Claude Pro or Max account, choose option 1 here and it will try to open a web browser so you can sign in with your account. If you prefer to use your Anthropic Console account, choose option 2 and enter your API key when asked.

Note: If the browser window doesn't open automatically, you can copy the URL from the terminal and paste it into your browser manually to get the code. Copy that code and paste it back into the terminal when prompted.

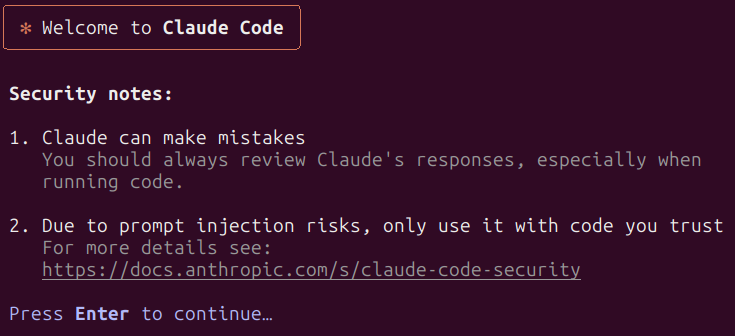

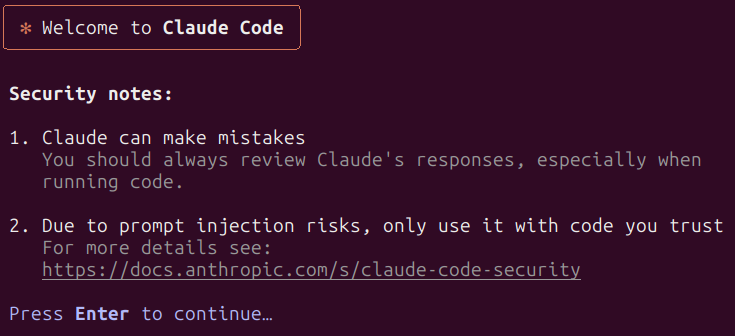

Once you're logged in, you'll get a final disclaimer. Press Enter to dismiss it and you'll be good to go.

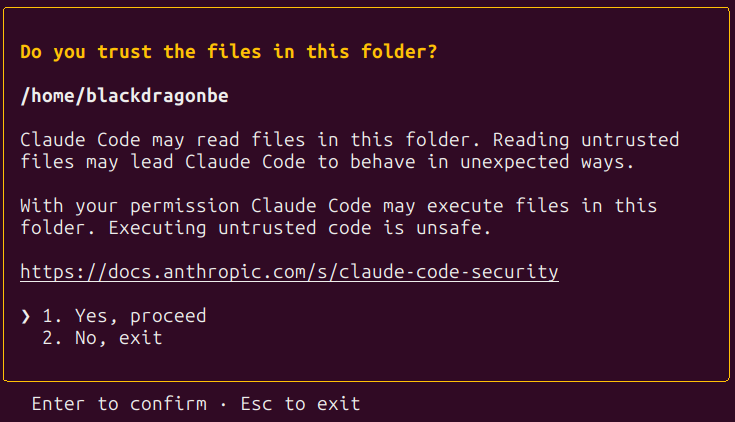

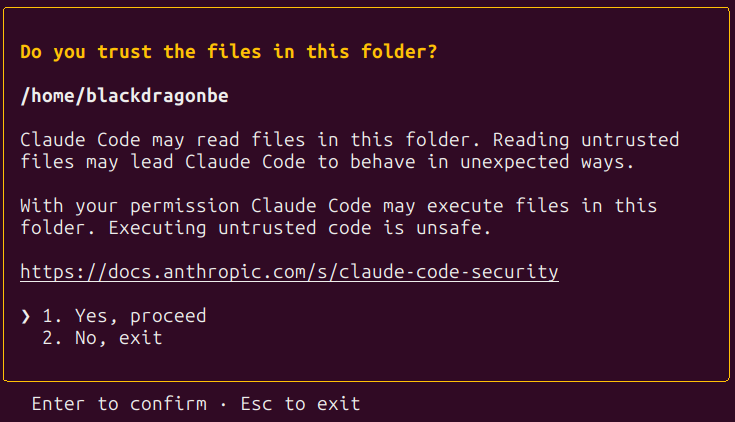

If all went well, you should see a box with a message asking whether you trust the files in the folder, like the one below.

Choose no for now and get ready to learn how to use Claude Code.

Creating a Project From Scratch

To get your feet wet, start by creating a fresh Python project using Claude Code.

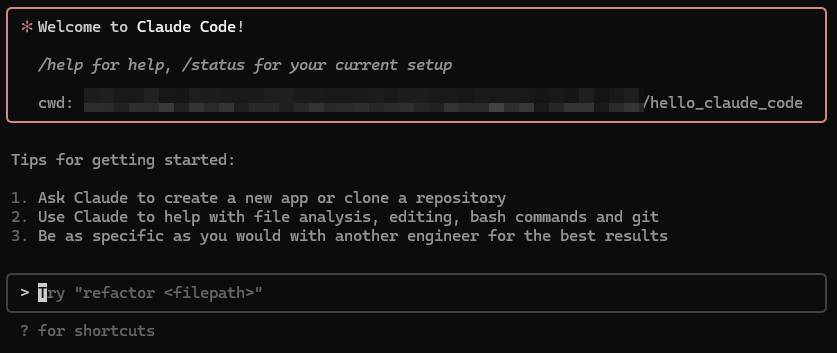

Create a new folder for your project and name it “hello_claude_code”. Open a new terminal in that folder and run the following command:

claude

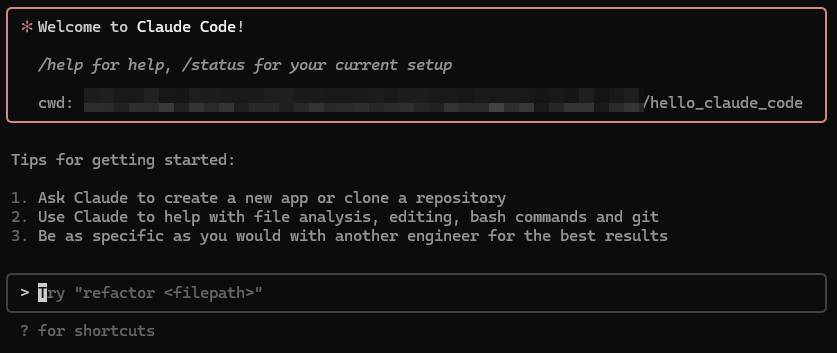

If it asks whether you trust the files in the folder, choose yes. You should now see the welcome message and a prompt input.

Talking with Claude

Now you can start talking with Claude Code. Type your prompt and press Enter to send it to Claude. For a first prompt, try saying “Hello”.

Claude will “think” for a little while before responding.

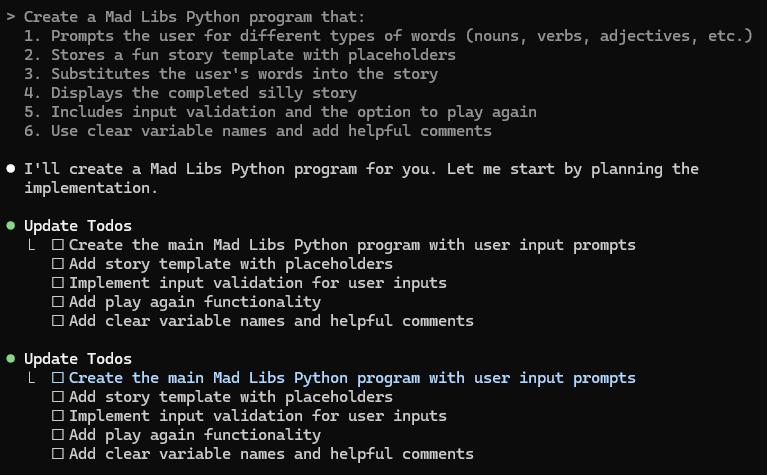

To get Claude to create a project for you, copy and paste the following prompt into the terminal and press Enter:

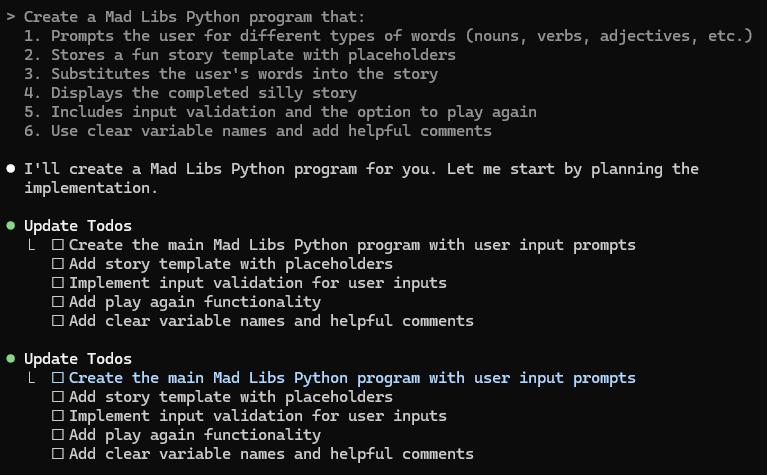

Create a Mad Libs Python program that:
1. Prompts the user for different types of words (nouns, verbs, adjectives, etc.)
2. Stores a fun story template with placeholders
3. Substitutes the user's words into the story
4. Displays the completed silly story
5. Includes input validation and the option to play again
6. Use clear variable names and add helpful comments
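To give a rough idea of what a program meeting this prompt might contain, here is a minimal sketch of the core logic. It is not the code Claude generated, and your output will differ. The names `STORY_TEMPLATE`, `placeholders`, `is_valid_word`, `fill_story`, and `play_round`, the `{adjective}`-style placeholders, and the validation rule (letters, spaces, hyphens, and apostrophes only) are all hypothetical choices made for illustration.

```python
import string

# Hypothetical story template; each {placeholder} names the kind of word needed.
STORY_TEMPLATE = (
    "Once upon a time, a {adjective} {noun} decided to {verb} "
    "all the way to the {place}."
)


def placeholders(template: str) -> list[str]:
    """Return the placeholder names in the order they first appear."""
    names = []
    for _, field_name, _, _ in string.Formatter().parse(template):
        if field_name and field_name not in names:
            names.append(field_name)
    return names


def is_valid_word(word: str) -> bool:
    """Assumed rule: non-empty input made of letters, spaces, hyphens, or apostrophes."""
    cleaned = word.strip()
    return bool(cleaned) and all(ch.isalpha() or ch in " -'" for ch in cleaned)


def fill_story(template: str, words: dict[str, str]) -> str:
    """Substitute the user's words into the template."""
    return template.format_map(words)


def play_round(template: str = STORY_TEMPLATE) -> str:
    """Ask for each word type until the input is valid, then build the story."""
    words = {}
    for name in placeholders(template):
        word = input(f"{name.capitalize()}: ")
        while not is_valid_word(word):
            word = input(f"Please enter a valid {name}: ")
        words[name] = word.strip()
    return fill_story(template, words)


def main() -> None:
    """Play rounds until the user answers anything other than yes."""
    while True:
        print(play_round())
        again = input("Play again? (y/n): ").strip().lower()
        if not again.startswith("y"):
            break
```

Keeping input handling (`play_round`, `main`) separate from the pure substitution and validation functions makes the latter easy to test without typing anything. To play, call `main()` from a script or the Python REPL.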

Note: Always be specific in your prompts; don't expect Claude to read your mind. For the best results, add clear details and context. Short, vague prompts will lead to less-than-ideal results.

After a while, the agentic side of Claude Code will kick in. It won't write code in the terminal just yet; instead, it will come up with a plan of action.

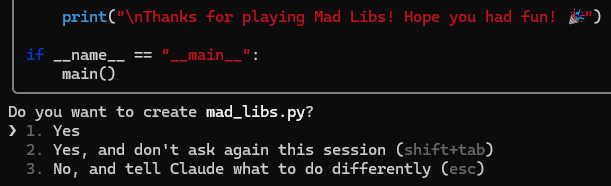

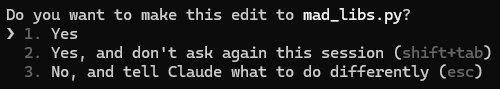

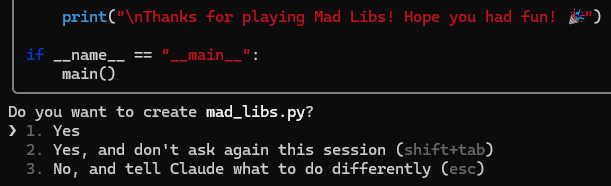

If all goes well, Claude will want to write a file. It won't do this without asking for your permission, so you'll see the code it wants to write, followed by a question like the one below:

You have three options here:

- Yes: This allows Claude to write this particular file.
- Yes, and don't ask again this session: Claude will write this file and won't ask you again for permission to write files.
- No: Claude won't write the file and will wait for a new prompt.
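The three choices behave like a small per-session permission check. The sketch below is only a conceptual model of the behavior described above, written in Python to match the project; it is not how Claude Code is implemented, and `PermissionChoice`, `Session`, and `may_write` are hypothetical names.

```python
from enum import Enum


class PermissionChoice(Enum):
    YES = 1                 # allow this one file write
    YES_DONT_ASK_AGAIN = 2  # allow, and skip the question for the rest of the session
    NO = 3                  # refuse and wait for a new prompt


class Session:
    """Toy model: remembers whether file writes were approved for the whole session."""

    def __init__(self) -> None:
        self.always_allow_writes = False

    def may_write(self, ask) -> bool:
        """Return True if a write may proceed; `ask` is called only when needed."""
        if self.always_allow_writes:
            return True
        choice = ask()
        if choice is PermissionChoice.YES_DONT_ASK_AGAIN:
            self.always_allow_writes = True
        return choice is not PermissionChoice.NO
```

In this model, picking the second option once means later writes in the same session skip the question, while a fresh `Session` starts out asking again.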

Check whether the code looks good, then press Enter to continue with the default option for now, which is “Yes”.

At this point, you can check whether the file actually exists in the project folder.

For such a simple project, there's a good chance Claude will use a single file. If it does ask to write more, answer yes.

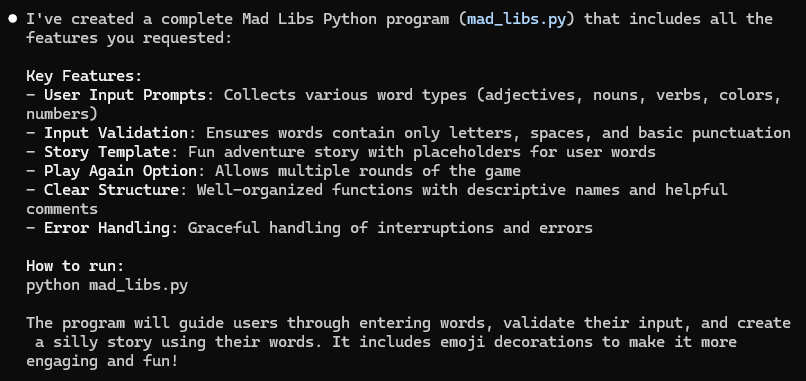

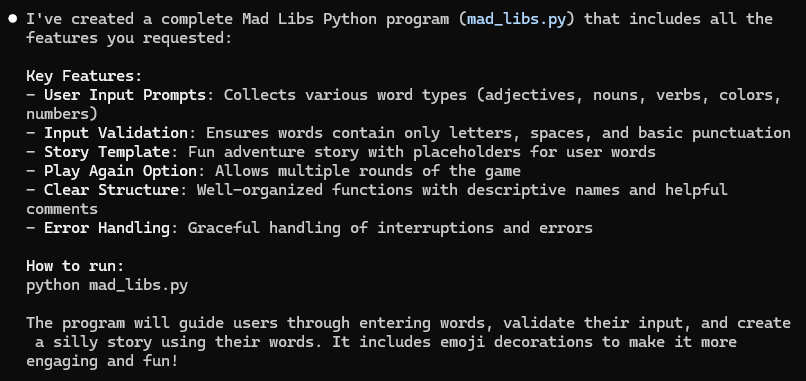

Once Claude is done, it will write a summary of what it has done, along with instructions for you to follow up on. I got the following message:

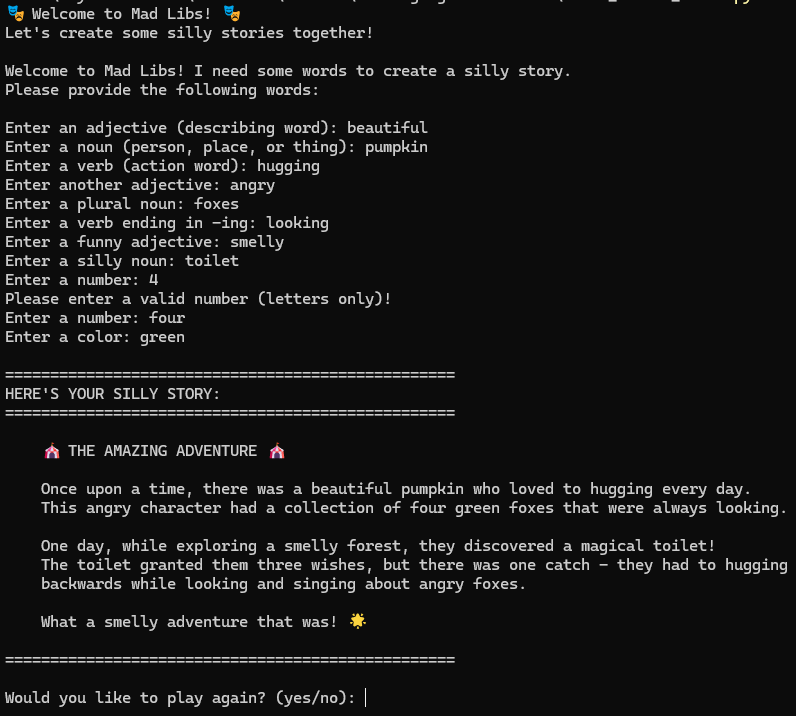

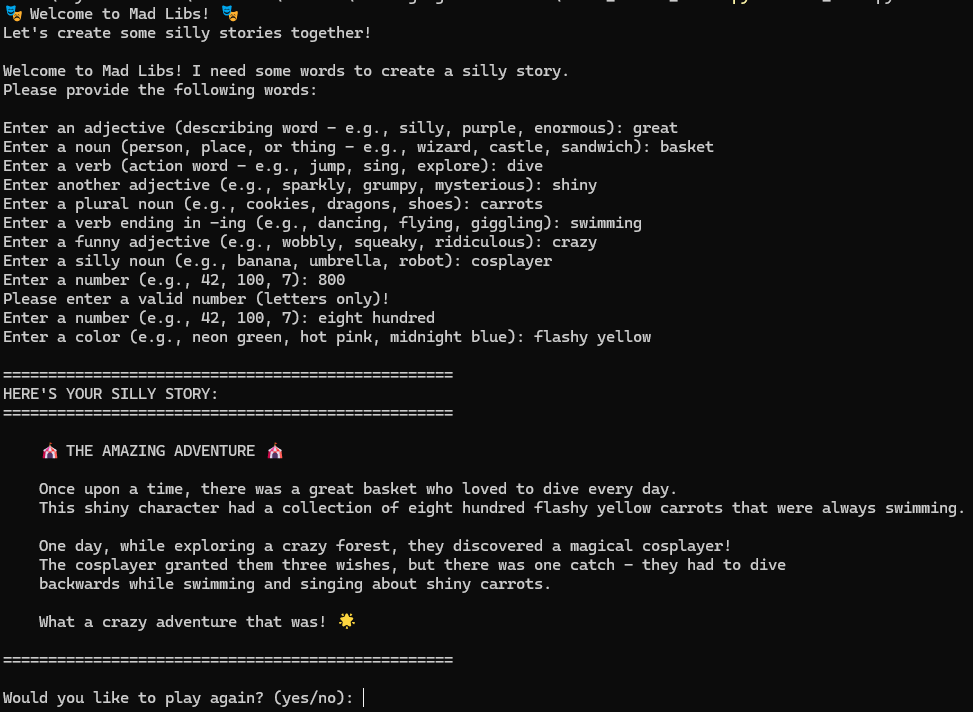

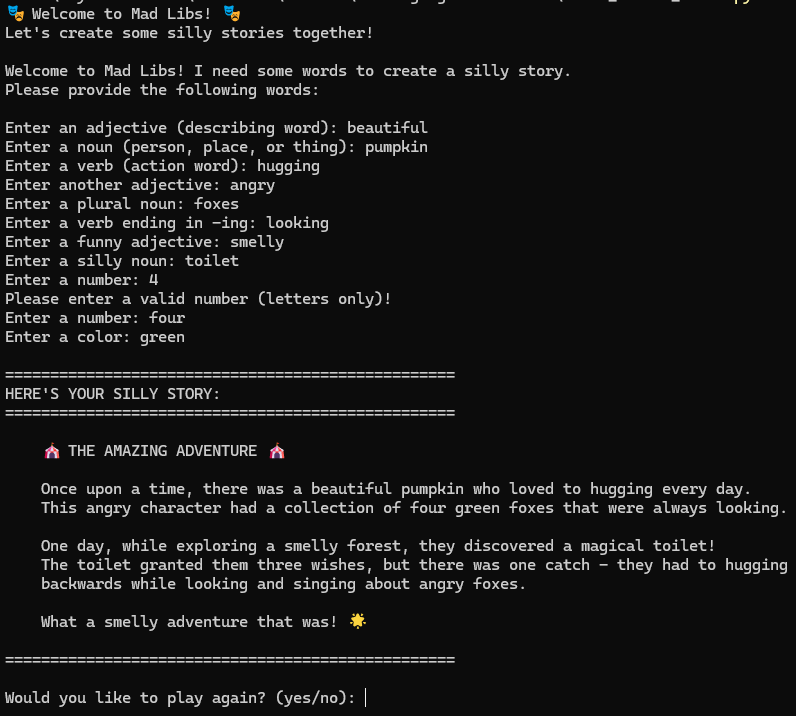

Try running the project as instructed and check whether everything works as expected. For me, it worked, and I got the following output:

That's a good start, but you can refine it to make it better. For example, if you felt it wasn't clear what kind of words it wanted, ask it to add examples to each word prompt:

Please add examples to each word prompt. It wasn't always clear what was expected of me.
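A change like this usually comes down to pairing each word type with a sample word. The snippet below is a hypothetical sketch of one way to do it, not the edit Claude will actually make; `WORD_EXAMPLES` and `build_prompt` are made-up names, and the example words are arbitrary.

```python
# Hypothetical mapping from each word type to a short, concrete example.
WORD_EXAMPLES = {
    "adjective": "fluffy",
    "noun": "banana",
    "verb": "dance",
    "place": "library",
}


def build_prompt(word_type: str) -> str:
    """Build an input prompt, adding an example when one is known."""
    example = WORD_EXAMPLES.get(word_type)
    if example is None:
        # Fall back to a plain prompt for word types without an example.
        return f"{word_type.capitalize()}: "
    return f"{word_type.capitalize()} (e.g., {example}): "
```

For instance, `build_prompt("noun")` produces `Noun (e.g., banana): `, which you would pass to `input()` when asking for that word.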

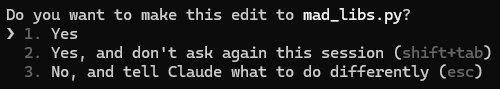

This time, Claude will ask whether it's okay to edit a file:

Choose option 2 here so future edits can be applied without asking you each time.

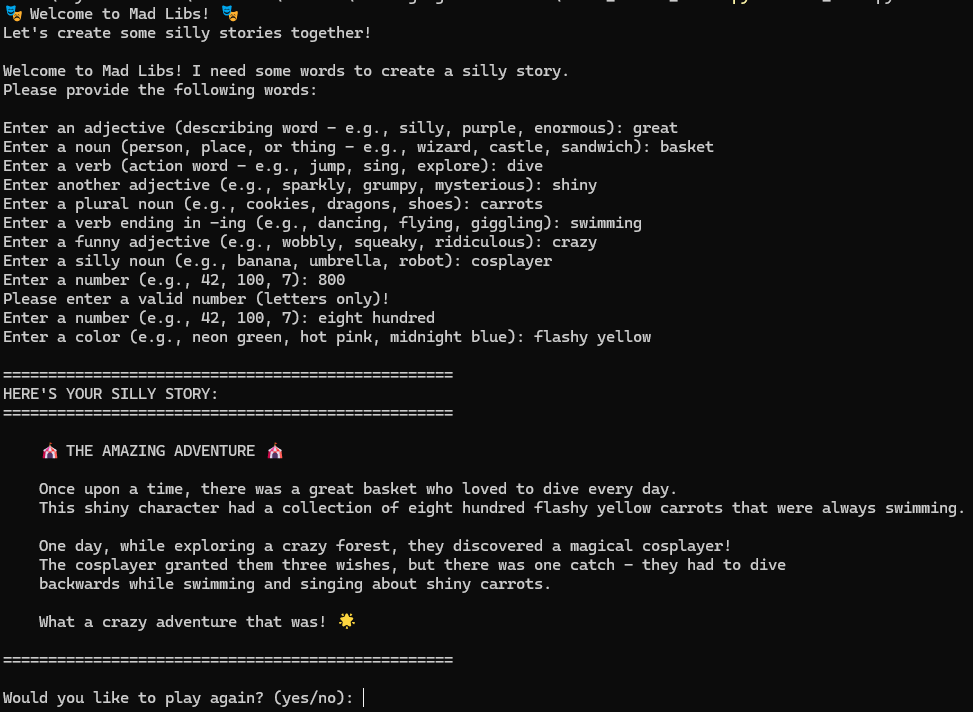

Now try running the project again with the improvements in place.

This back-and-forth is key to working with Claude Code. Making gradual changes and iterating on the results is a great way to refine your code.

Close the terminal window and get ready to dive deeper. In the next section, you'll learn how to work with existing projects and how to get the most out of Claude Code.