Posted by Sandhya Mohan – Product Manager, and Jose Alcérreca – Developer Relations Engineer

Software development is undergoing a significant evolution, moving beyond reactive assistants to intelligent agents. These agents don’t just offer suggestions; they can create execution plans, use external tools, and make complex, multi-file changes. The result is a more capable AI that can iteratively solve challenging problems, fundamentally changing how developers work.

At Google I/O 2025, we offered a glimpse into our work on agentic AI in Android Studio, the integrated development environment (IDE) focused on Android development. We showed that by combining agentic AI with the built-in portfolio of tools inside Android Studio, the IDE can assist you in developing Android apps in ways that were never possible before. We are now incredibly excited to announce the next frontier in Android development with the availability of ‘Agent Mode’ for Gemini in Android Studio.

These features are available in the latest Android Studio Narwhal Feature Drop Canary release, and will be rolled out to enterprise tier subscribers in the coming days. As with all new Android Studio features, we invite developers to provide feedback to direct our development efforts and ensure we’re creating the tools you need to build better apps, faster.

Agent Mode

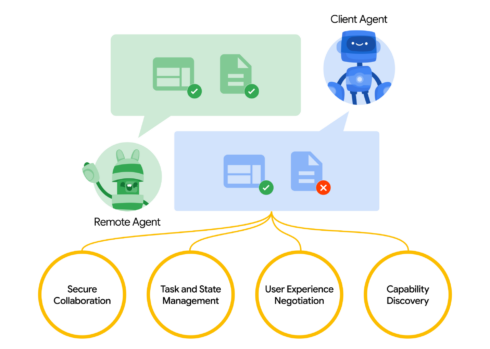

Gemini in Android Studio’s Agent Mode is a new experimental capability designed to handle complex development tasks that go beyond what you can experience by just chatting with Gemini.

With Agent Mode, you can describe a complex goal in natural language, from generating unit tests to complex refactors, and the agent formulates an execution plan that can span multiple files in your project and executes it under your direction. Agent Mode uses a range of IDE tools for reading and modifying code, building the project, searching the codebase and more to help Gemini complete complex tasks from start to finish with minimal oversight from you.

To use Agent Mode, click Gemini in the sidebar, then select the Agent tab, and describe a task you’d like the agent to perform. Some examples of tasks you can try in Agent Mode include:

- Build my project and fix any errors

- Extract any hardcoded strings used across my project and migrate to strings.xml

- Add support for dark mode to my application

- Given an attached screenshot, implement a new screen in my application using Material 3

The agent then suggests edits and iteratively fixes bugs to complete tasks. You can review, accept, or reject the proposed changes along the way, and ask the agent to iterate on your feedback.

Gemini breaks tasks down into a plan with simple steps. It also shows the list of IDE tools it needs to complete each step.

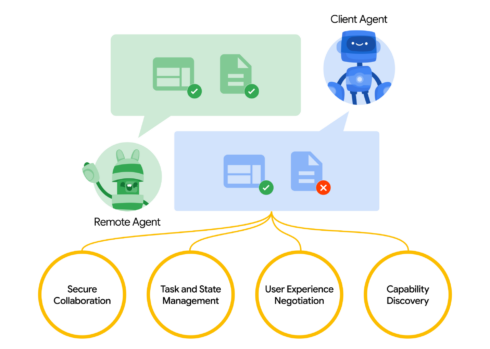

Although the agent is powerful, you remain firmly in control, with the ability to review, refine and guide the agent’s output at every step. When the agent proposes code changes, you can choose to accept or reject them.

The Agent waits for the developer to approve or reject a change.

Additionally, you can enable “Auto-approve” if you are feeling lucky 😎, which is especially useful when you want to iterate on ideas as rapidly as possible.

You can delegate routine, time-consuming work to the agent, freeing up your time for more creative, high-value work. Try out Agent Mode in the latest preview version of Android Studio; we look forward to seeing what you build! We’re investing in building more agentic experiences for Gemini in Android Studio to make your development even more intuitive, so you can expect to see more agentic functionality over the next several releases.

Gemini is capable of understanding the context of your app

Supercharge Agent Mode with your Gemini API key

The default Gemini model has a generous no-cost daily quota with a limited context window. However, you can now add your own Gemini API key to expand Agent Mode’s context window to a massive 1 million tokens with Gemini 2.5 Pro.

A larger context window lets you send more instructions, code and attachments to Gemini, leading to even higher quality responses. This is especially useful when working with agents, as the larger context gives Gemini 2.5 Pro the ability to reason about complex or long-running tasks.

Add your API key in the Gemini settings

To enable this feature, get a Gemini API key by navigating to Google AI Studio. Sign in and get a key by clicking on the “Get API key” button. Then, back in Android Studio, navigate to the settings by going to File (Android Studio on macOS) > Settings > Tools > Gemini to enter your Gemini API key. Relaunch Gemini in Android Studio and get even better responses from Agent Mode.

Be sure to safeguard your Gemini API key, as additional charges apply for Gemini API usage associated with a personal API key. You can monitor your Gemini API key usage by navigating to AI Studio and selecting Get API key > Usage & Billing.

Note that enterprise tier subscribers already get access to Gemini 2.5 Pro and the expanded context window automatically with their Gemini Code Assist license, so these developers will not see an API key option.

Model Context Protocol (MCP)

Gemini in Android Studio’s Agent Mode can now interact with external tools via the Model Context Protocol (MCP). This feature provides a standardized way for Agent Mode to use tools and extend its knowledge and capabilities with the external environment.

There are many tools you can connect to the MCP Host in Android Studio. For example, you could integrate with the GitHub MCP Server to create pull requests directly from Android Studio. Here are some additional use cases to consider.

In this initial release of MCP support in the IDE, you configure your MCP servers through an mcp.json file placed in the configuration directory of Studio, using the following format:

    {
      "mcpServers": {
        "memory": {
          "command": "npx",
          "args": [
            "-y",
            "@modelcontextprotocol/server-memory"
          ]
        },
        "sequential-thinking": {
          "command": "npx",
          "args": [
            "-y",
            "@modelcontextprotocol/server-sequential-thinking"
          ]
        },
        "github": {
          "command": "docker",
          "args": [
            "run",
            "-i",
            "--rm",
            "-e",
            "GITHUB_PERSONAL_ACCESS_TOKEN",
            "ghcr.io/github/github-mcp-server"
          ],
          "env": {
            "GITHUB_PERSONAL_ACCESS_TOKEN": ""
          }
        }
      }
    }

Example configuration with three MCP servers
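Before relaunching the IDE, it can help to check that a hand-edited mcp.json still has the shape shown above. The script below is a minimal sketch, not part of Android Studio. The helper name `validate_mcp_config` is hypothetical, and the rules it checks (a top-level `mcpServers` object whose entries each have a string `command`, an optional list of string `args`, and an optional `env` object) are inferred from the example configuration only, not taken from the official schema.

```python
import json


def validate_mcp_config(text):
    """Return a list of problems found in an mcp.json document (empty if none).

    The checks mirror the example configuration only; they are an assumption,
    not the official schema.
    """
    try:
        config = json.loads(text)
    except json.JSONDecodeError as exc:
        return [f"invalid JSON: {exc.msg}"]

    if not isinstance(config, dict):
        return ["top level must be an object"]
    servers = config.get("mcpServers")
    if not isinstance(servers, dict):
        return ["missing top-level 'mcpServers' object"]

    problems = []
    for name, entry in servers.items():
        if not isinstance(entry, dict):
            problems.append(f"{name}: entry must be an object")
            continue
        # Every server in the example names the executable to launch.
        command = entry.get("command")
        if not isinstance(command, str) or not command:
            problems.append(f"{name}: 'command' must be a non-empty string")
        # Arguments and environment are treated as optional here (assumption).
        args = entry.get("args", [])
        if not isinstance(args, list) or not all(isinstance(a, str) for a in args):
            problems.append(f"{name}: 'args' must be a list of strings")
        env = entry.get("env", {})
        if not isinstance(env, dict):
            problems.append(f"{name}: 'env' must be an object")
    return problems


EXAMPLE = """
{
  "mcpServers": {
    "memory": {
      "command": "npx",
      "args": ["-y", "@modelcontextprotocol/server-memory"]
    }
  }
}
"""

if __name__ == "__main__":
    print(validate_mcp_config(EXAMPLE) or "no problems found")
```

Returning a list of problems rather than raising on the first one means a single run reports every malformed server entry at once.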

For this initial release, we support interacting with external tools via the stdio transport as defined in the MCP specification. We plan to support the full suite of MCP features in upcoming Android Studio releases, including the Streamable HTTP transport, external context resources, and prompt templates.
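To give a feel for what the stdio transport means in practice: as we understand the MCP specification, the host launches the server as a subprocess and the two exchange JSON-RPC 2.0 messages over stdin and stdout, one message per line. The sketch below only illustrates that framing. The helper names `encode_message` and `decode_messages` are hypothetical, the request is a hand-written example, and none of this reflects how Android Studio implements its MCP Host.

```python
import json


def encode_message(message):
    """Serialize one JSON-RPC message as a single newline-terminated line."""
    # json.dumps escapes newlines inside string values, so the encoded line
    # never contains an embedded newline.
    return (json.dumps(message, separators=(",", ":")) + "\n").encode("utf-8")


def decode_messages(data):
    """Split a received byte stream into the JSON-RPC messages it contains."""
    lines = data.decode("utf-8").split("\n")
    return [json.loads(line) for line in lines if line.strip()]


# Hypothetical request a host might write to the server's stdin.
list_tools_request = {"jsonrpc": "2.0", "id": 1, "method": "tools/list"}

if __name__ == "__main__":
    wire = encode_message(list_tools_request)
    print(wire)
    print(decode_messages(wire))
```

Because each message occupies exactly one line, a reader can split the stream on newlines without any length-prefix bookkeeping.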

For more information on how to use MCP in Studio, including the mcp.json configuration file format, please refer to the Android Studio MCP Host documentation.

By delegating routine tasks to Gemini through Agent Mode, you’ll be able to focus on more innovative and enjoyable aspects of app development. Download the latest preview version of Android Studio on the canary release channel today to try it out, and let us know how much faster app development is for you!

As always, your feedback is important to us: check known issues, report bugs, suggest improvements, and be part of our vibrant community on LinkedIn, Medium, YouTube, or X. Let’s build the future of Android apps together!