Today at its user conference MongoDB.local NYC, the popular database company announced that the Search and Vector Search capabilities that have been available in the Atlas cloud platform are now available in preview in the Community Edition and Enterprise Server.

Previously, customers using self-managed versions of MongoDB would have needed to use a third-party service for vector databases, leading to a fragmented search stack that adds unnecessary complexity and risk, according to MongoDB.

Ben Flast, director of product management at MongoDB, explained that the team had been working on bringing these capabilities to the Community Edition and Enterprise Server for a while and has finally reached the point where they are ready to be added.

“We brought Search and Vector Search to market in Atlas only six or seven years ago, and the intention there was really, where did we think we could build a new service and evolve it very quickly, and we felt like a managed software would be a better place to get that product started and get it to a more mature place. And now that we’re there, we’re really excited to bring it to the community, because so much of the way MongoDB is used is in the Community Edition,” he said.

According to Flast, one of the biggest concerns was making sure that Search and Vector Search could be as scalable and performant in self-managed versions as they are in Atlas.

“What we released today is the binary that sits underneath the search capability. By having it as a standalone binary, you can put it on separate hardware, you can scale it up independently, or you can run it locally and have a single instance,” he said.

Search and Vector Search unlock capabilities such as autocomplete and fuzzy search, search faceting, internal search tools, AI-powered semantic search, retrieval-augmented generation (RAG), agents, hybrid search, and text analysis.
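To make a few of these capabilities concrete, the sketch below builds aggregation stages as plain Python dicts: a `$search` stage using the `text` operator with a `fuzzy` option, a `$search` stage using the `autocomplete` operator, and a `$vectorSearch` stage for semantic search. It then merges a text ranking and a vector ranking with reciprocal rank fusion (RRF), a common generic way to build hybrid search. This is a minimal sketch under stated assumptions: the index names (`default`, `vector_index`), field names, and document IDs are hypothetical, and the client-side RRF function is a swapped-in generic technique, not necessarily how MongoDB implements hybrid search.

```python
"""Sketch: MongoDB Search / Vector Search stages plus client-side hybrid fusion.

Hypothetical, for illustration only: the index names "default" and
"vector_index", the field names passed in, and the document IDs in the test.
"""
from typing import Dict, Hashable, List, Sequence


def fuzzy_text_stage(query: str, path: str, index: str = "default") -> Dict:
    """$search with the text operator; fuzzy matching tolerates one-character typos."""
    return {
        "$search": {
            "index": index,
            "text": {"query": query, "path": path, "fuzzy": {"maxEdits": 1}},
        }
    }


def autocomplete_stage(prefix: str, path: str, index: str = "default") -> Dict:
    """$search with the autocomplete operator (field must be indexed for autocomplete)."""
    return {"$search": {"index": index, "autocomplete": {"query": prefix, "path": path}}}


def vector_stage(query_vector: Sequence[float], path: str,
                 index: str = "vector_index", limit: int = 10) -> Dict:
    """$vectorSearch for approximate nearest-neighbor (semantic) search."""
    return {
        "$vectorSearch": {
            "index": index,
            "path": path,
            "queryVector": list(query_vector),
            # Consider more candidates than we return, to improve recall
            "numCandidates": limit * 10,
            "limit": limit,
        }
    }


def reciprocal_rank_fusion(rankings: List[List[Hashable]], k: int = 60) -> List[Hashable]:
    """Merge ranked ID lists: score(d) = sum of 1 / (k + rank), rank starting at 1."""
    scores: Dict[Hashable, float] = {}
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] = scores.get(doc_id, 0.0) + 1.0 / (k + rank)
    # Highest fused score first; sorted() is stable, so ties keep first-seen order
    return sorted(scores, key=lambda d: scores[d], reverse=True)
```

In practice each stage would be passed to a driver as the first element of an aggregation pipeline (for example, PyMongo's `Collection.aggregate(pipeline)`), and the IDs returned by the text and vector queries would be fed to `reciprocal_rank_fusion`. RRF is used here because it needs only ranks, not comparable scores, which is convenient when lexical and vector relevance scores live on different scales.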

According to MongoDB, several of its partners helped test and validate these search capabilities in the Community Edition, including Volcano Engine Cloud, LangChain, and LlamaIndex.

Updates to Queryable Encryption

MongoDB also announced the latest release of its platform, MongoDB 8.2. Compared to MongoDB 8.0, the new version delivers 49% faster performance for unindexed queries, 10% faster in-memory reads, 20% faster array traversal, and almost triple the throughput for time-series bulk insertions, according to the company.

MongoDB 8.2 also adds partial-match support to its Queryable Encryption technology. According to MongoDB, this allows text searches to be performed on encrypted data without revealing the underlying information.

Queryable Encryption allows data to be protected at rest, in transit, and in use. According to the company, encryption at rest and in transit is standard, but encrypting data while it is in use has been harder to achieve. This is because encryption makes data unreadable, and queries can’t be performed on data in that state.

“For instance, a healthcare provider might need to find all patients with diagnoses that include the word ‘diabetes.’ However, without decrypting the medical records, the database can’t search for that term,” the company wrote in a blog post. To work around this, organizations often leave sensitive fields unencrypted or build separate search indexes.

With Queryable Encryption, queries can be run on encrypted sensitive data without that data ever being exposed to the database server.
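MongoDB does not describe the mechanics of its partial-match scheme here, so the sketch below is a deliberately simplified, hypothetical stand-in that only illustrates the general idea of a server matching text it cannot read. It is NOT MongoDB’s Queryable Encryption algorithm. Assumptions: the client holds a secret key, derives a keyed HMAC-SHA256 token for every lowercase trigram of a field, and uploads only those tokens (the encrypted field itself is omitted). The server returns records whose token set contains every token of the query; the class and function names are invented for illustration.

```python
"""Toy illustration of substring search over keyed tokens.

NOT MongoDB's Queryable Encryption scheme: a hypothetical, simplified design.
Deterministic tokens leak repetition patterns; real schemes add protections.
"""
import hashlib
import hmac
import secrets
from typing import Dict, List, Set

N = 3  # hypothetical n-gram length


def ngram_tokens(key: bytes, text: str, n: int = N) -> Set[str]:
    """Client side: keyed HMAC tokens for every lowercase n-gram of `text`."""
    text = text.lower()
    grams = {text[i:i + n] for i in range(len(text) - n + 1)}
    return {hmac.new(key, g.encode(), hashlib.sha256).hexdigest() for g in grams}


class ToyEncryptedIndex:
    """Server side: stores opaque tokens per record ID, never plaintext."""

    def __init__(self) -> None:
        self.tokens: Dict[str, Set[str]] = {}

    def insert(self, record_id: str, field_tokens: Set[str]) -> None:
        self.tokens[record_id] = field_tokens

    def search(self, query_tokens: Set[str]) -> List[str]:
        # Queries shorter than N produce no tokens; refuse rather than match everything
        if not query_tokens:
            return []
        # Candidates contain every query token; the client decrypts them and
        # confirms the match, since scattered n-grams can yield false positives
        return sorted(rid for rid, toks in self.tokens.items() if query_tokens <= toks)


key = secrets.token_bytes(32)  # held only by the client
index = ToyEncryptedIndex()
index.insert("patient-1", ngram_tokens(key, "Type 2 diabetes mellitus"))
index.insert("patient-2", ngram_tokens(key, "Seasonal allergies"))
matches = index.search(ngram_tokens(key, "diabetes"))  # server sees only tokens
```

The server in this toy never receives the word “diabetes,” only 64-character hex digests, yet it can still narrow the result set. The trade-off is that identical trigrams always produce identical tokens, which is exactly the kind of leakage production schemes are designed to reduce.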

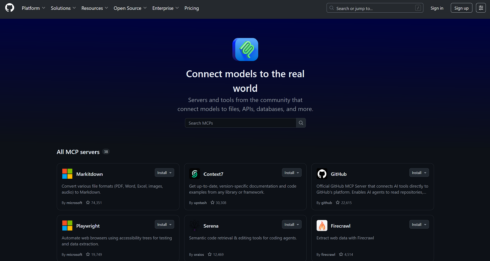

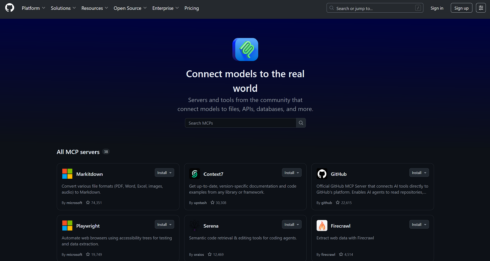

MongoDB MCP Server

After a successful public preview, MongoDB announced that its MCP Server is now generally available.

As part of today’s release, enterprise-grade authentication with OIDC, LDAP, and Kerberos has been added, along with proxy connectivity. There is also now support for self-hosted remote deployments, so that teams can share deployments and maintain a centralized configuration.

The MongoDB MCP Server is also available bundled with the MongoDB for VS Code extension.

MongoDB AMP

Additionally, the company yesterday announced MongoDB AMP, a platform that applies AI to the application modernization process. MongoDB AMP combines an AI-powered software platform, a delivery framework, and expert engineers who guide the technical implementation.

Shilpa Kolhar, SVP of product and engineering at MongoDB, explained that the AI agents will handle tasks such as adding missing documentation or functional tests, and experts can then take over when situations arise that the tooling can’t handle on its own.

“When you are converting from your legacy Java stack to Java Spring Boot, it’s a standard framework. The tools handle most of it, and the customers see a huge reduction in time for code transformation. But it’s not just about code transformation, right? We want to have the code transformation in place and follow all the best practices that are needed in application development. And companies might have specific needs for their security and compliance, and so on, and that’s where our experts come in,” she said.

She explained that customers often come in saying they have one database, but once the transformation begins, they discover they have many different ones. “That’s where we need to bring multiple tools together, and that’s another area where our experts come in and tie various things together,” she said.

According to Kolhar, this potential for widely varied infrastructure is one of the things that makes legacy systems such a problem. Once an organization goes through the modernization process, however, its infrastructure will ideally be standardized in a way that makes future changes much simpler.

She also explained that for a while, companies have gone back and forth on modernization, putting it off because they can’t guarantee a return on investment. Now, however, legacy databases and application platforms have reached a point where they can’t keep up with the pace at which AI is changing things.

She added that thanks to automation, modernization can happen much faster and requires fewer people dedicated to the process.

“We’re ready to help you with the tooling we have built over the past few years and the experience of the last 15 years,” she said.