Apple appears unable to stop the inflow of so-called “dual use” apps that look harmless on the surface but help users create deepfake porn, for a steep price.

Apple takes pride in regulating the App Store, and part of that control is keeping pornographic apps out altogether. However, there are limits to this control, given that some apps offer features that users can easily abuse, seemingly without Apple being aware.

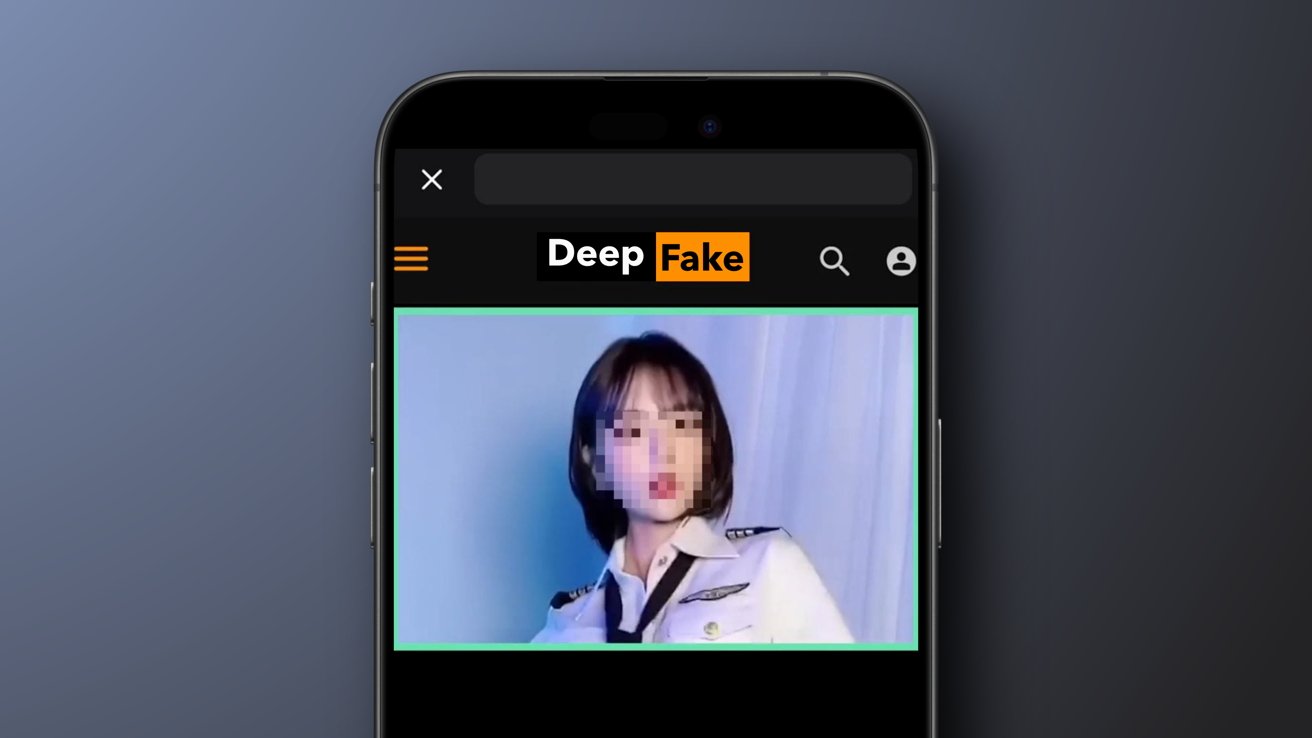

According to a report from 404 Media, Apple is struggling with a “dual use” problem found in apps that offer features like face swapping. While the feature seems innocent enough at first glance, users are swapping faces onto pornography, sometimes using minors’ faces.

The issue became apparent when a reporter came across a paid ad on Reddit for a face swap app. Face swapping tools tend to be easy to find and often free, so such an app would need a business model that supports paid ad placement.

What they found was an app offering users the ability to swap any face onto video from their “favourite website,” with an image suggesting Porn Hub as an option. Apple doesn’t allow porn-related apps on the App Store, but some apps that deal with user content often feature such images and videos as a kind of loophole.

When Apple was alerted to the dual-use nature of the advertised app, it pulled the app. However, Apple appeared to be entirely unaware of the issue, and the link to the app had to be shared with the company.

This isn’t the first time innocent-looking apps have made it through App Review while offering a service that violates Apple’s guidelines. While it isn’t as blatant a violation as turning a children’s app into a casino, the ability to generate nonconsensual intimate imagery (NCII) was clearly not on Apple’s radar.

Artificial intelligence features in apps can create highly realistic deepfakes, and it’s essential for companies like Apple to get ahead of these problems. While Apple won’t be able to stop such use cases from existing, it can at least implement a policy that App Review can enforce: clear guidelines and rules around pornographic image generation. It has already stopped deepfake AI websites from using Sign in with Apple.

For example, no app should be able to source video from Porn Hub. Apple could put specific rules in place for potential dual-use apps, such as zero-tolerance bans for apps caught trying to create such content.

Apple has taken great care to ensure Apple Intelligence won’t make nude images, but that shouldn’t be the end of its oversight. Given that Apple argues it is the best arbiter of the App Store, it needs to take charge of problems like NCII generation being promoted in ads.

Face-swapping apps aren’t the only ones with a problem. Even apps that blatantly advertise infidelity, intimate video chat, adult chat, or other euphemisms get through App Review.

Reports have long suggested that App Review is broken, and regulators are tired of platitudes. Apple needs to get a handle on the App Store or risk losing control of it.