Introduction

In today's fast-paced business world, the ability to extract relevant and accurate data from diverse sources is crucial for informed decision-making, process optimization, and strategic planning. Whether it's analyzing customer feedback, extracting key information from legal documents, or parsing web content, efficient data extraction can provide valuable insights and streamline operations.

Enter large language models (LLMs) and their APIs: powerful tools that use advanced natural language processing (NLP) to understand and generate human-like text. However, it's important to note that LLM APIs

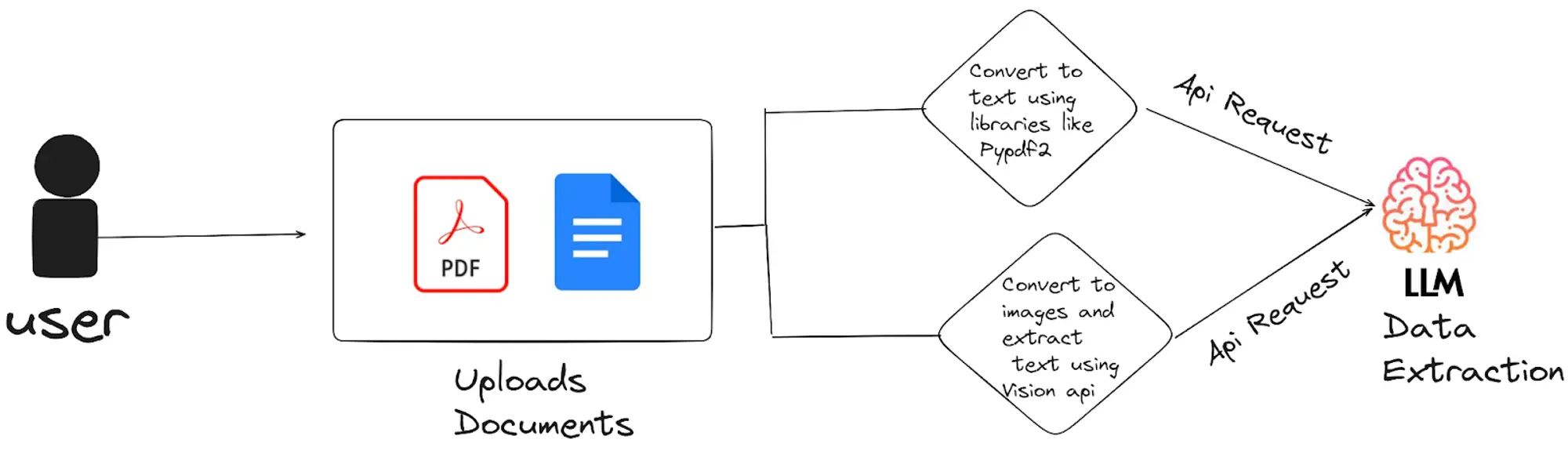

For document analysis, the typical workflow involves:

- Document Conversion to Images: While some LLM APIs process PDFs directly, converting them to images often enhances OCR accuracy, making it easier to extract text from non-searchable or poorly scanned documents.

- Text Extraction Methods:

  - Using Vision APIs: Vision APIs excel at extracting text from images, even in challenging scenarios involving complex layouts, varying fonts, or low-quality scans. This approach ensures reliable text extraction from documents that are difficult to process otherwise.

  - Direct Extraction from Machine-Readable PDFs: For simple, machine-readable PDFs, libraries like PyPDF2 can extract text directly without converting the document to images. This method is faster and more efficient for documents where the text is already selectable and searchable.

  - Enhancing Extraction with LLM APIs: Today, text can be extracted and analyzed directly from an image in a single step using LLMs. This integrated approach simplifies the process by combining extraction, content processing, key data point identification, summary generation, and insight provision into one seamless operation. To explore how LLMs can be applied to different data extraction scenarios, including the integration of retrieval-augmented generation techniques, see this overview of building RAG apps.
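The choice between direct extraction and the image route can be sketched as a simple per-page decision. This is a minimal illustration, assuming the text of each page has already been pulled with a direct extractor such as PyPDF2 (not shown here). The function name and the `min_chars` threshold are hypothetical and would need tuning on real documents.

```python
def choose_extraction_route(page_texts, min_chars=50):
    """Pick an extraction route for each page of a PDF.

    page_texts: one string per page, as returned by a direct PDF text
    extractor (empty or near-empty for scanned, non-searchable pages).
    min_chars: hypothetical threshold; pages with less text than this are
    treated as scans and routed to image conversion plus OCR/vision.
    """
    routes = []
    for text in page_texts:
        if len((text or "").strip()) >= min_chars:
            routes.append("direct_text")
        else:
            routes.append("image_ocr")
    return routes


# Illustrative input: one searchable page and two pages with no usable text.
sample_pages = ["This page has selectable text. " * 3, "", "  \n"]
print(choose_extraction_route(sample_pages))
```

Routing page by page keeps the faster direct path for searchable pages while still covering scanned ones in the same document.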

In this blog, we'll explore a few LLM APIs designed for data extraction directly from files and compare their features.

Table of Contents:

- Understanding LLM APIs

- Selection Criteria for Top LLM APIs

- LLM APIs We Selected For Data Extraction

- Comparative Analysis of LLM APIs for Data Extraction

- Experiment Analysis

- API Features and Pricing Analysis

- Other Literature on the Internet Analysis

- Conclusion

Understanding LLM APIs

What Are LLM APIs?

Large language models are artificial intelligence systems that have been trained on vast amounts of text data, enabling them to understand and generate human-like language. LLM APIs, or application programming interfaces, give developers and businesses access to these powerful language models, allowing them to integrate these capabilities into their own applications and workflows.

At their core, LLM APIs use sophisticated natural language processing algorithms to comprehend the context and meaning of text, going beyond simple pattern matching or keyword recognition. This depth of understanding is what makes LLMs so valuable for a wide range of language-based tasks, including data extraction. For a deeper dive into how these models operate, refer to this detailed guide on what large language models are.

While traditional LLM APIs primarily focus on processing and analyzing extracted text, multimodal models like ChatGPT and Gemini can also interact with images and other media types. These models don't perform traditional data extraction (like OCR) but play a crucial role in processing, analyzing, and contextualizing both text and images, transforming data extraction and analysis across various industries and use cases.

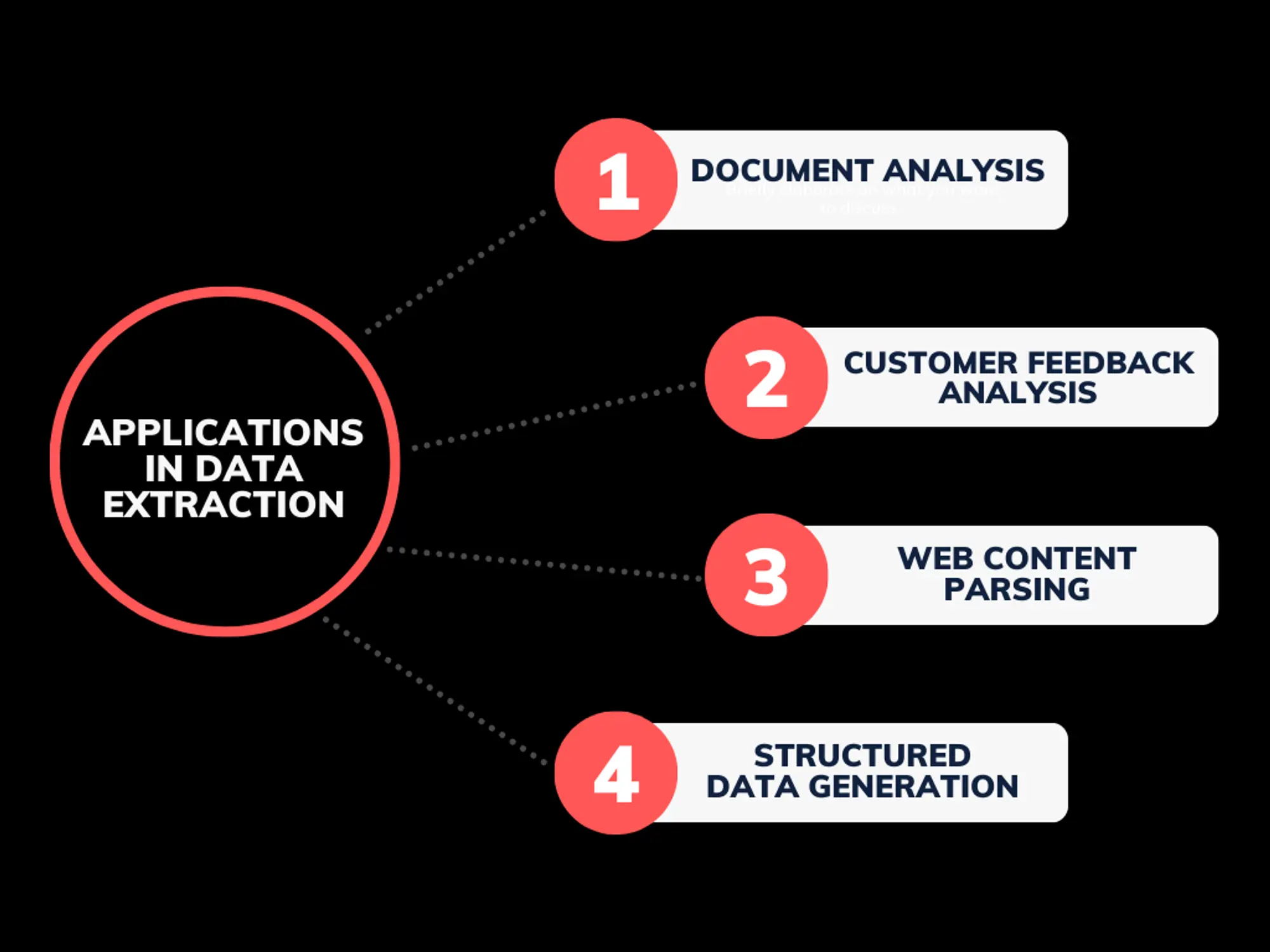

- Document Analysis: LLM APIs extract text from document images, which is then parsed to identify key information in complex documents like legal contracts, financial reports, and regulatory filings.

- Customer Feedback Analysis: After text extraction, LLM-powered sentiment analysis and natural language understanding help businesses quickly extract insights from customer reviews, surveys, and support conversations.

- Web Content Parsing: LLM APIs can be used to process and structure data extracted from web pages, enabling the automation of tasks like price comparison, lead generation, and market research.

- Structured Data Generation: LLM APIs can generate structured data, such as tables or databases, from unstructured text sources extracted from reports or articles.

As you explore the world of LLM APIs for your data extraction needs, it's important to consider the following key features that can make or break the success of your implementation:

Accuracy and Precision

Accurate data extraction is the foundation for informed decision-making and effective process automation. LLM APIs should demonstrate a high level of precision in understanding the context and extracting the relevant information from various sources, minimizing errors and inconsistencies.

Scalability

Your data extraction needs may grow over time, requiring a solution that can handle increasing volumes of data and requests without compromising performance. Look for LLM APIs that offer scalable infrastructure and efficient processing capabilities.

Integration Capabilities

Seamless integration with your existing systems and workflows is crucial for a successful data extraction strategy. Evaluate how easily LLM APIs integrate with your business applications, databases, and other data sources.

Customization Options

While off-the-shelf LLM APIs can provide excellent performance, the ability to fine-tune or customize the models for your specific industry or use case can further improve the accuracy and relevance of the extracted data.

Security and Compliance

When dealing with sensitive or confidential information, it's essential to ensure that the LLM API you choose adheres to strict security standards and regulatory requirements, such as data encryption, user authentication, and access control.

Context Lengths

The ability to process and understand longer input sequences, known as context length, can significantly improve the accuracy and coherence of the extracted data. Longer context lengths allow the LLM to better grasp the overall context and nuances of the information, leading to more precise and relevant outputs.
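When a document is longer than a model's context length, one common workaround is to split it into overlapping chunks. The sketch below illustrates that idea only; the four-characters-per-token ratio is a rough heuristic rather than a real tokenizer, and the function name and defaults are hypothetical.

```python
def chunk_text(text, max_tokens=1000, overlap_tokens=100, chars_per_token=4):
    """Split text into overlapping chunks that fit a token budget.

    chars_per_token is a rough heuristic (an assumption), so the real
    token count of each chunk will differ by model and language.
    """
    if overlap_tokens >= max_tokens:
        raise ValueError("overlap_tokens must be smaller than max_tokens")
    max_chars = max_tokens * chars_per_token
    step = (max_tokens - overlap_tokens) * chars_per_token
    chunks = []
    start = 0
    while start < len(text):
        chunks.append(text[start:start + max_chars])
        if start + max_chars >= len(text):
            break  # the last chunk already reaches the end of the text
        start += step
    return chunks


document = "word " * 2500  # 12,500 characters of filler text
print([len(chunk) for chunk in chunk_text(document)])
```

The overlap repeats a little text between neighboring chunks so that a field split across a boundary still appears whole in at least one chunk.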

Prompting Techniques

Advanced prompting techniques, such as few-shot learning and prompt engineering, enable LLM APIs to better understand and respond to specific data extraction tasks. By carefully crafting prompts that guide the model's reasoning and output, users can optimize the quality and relevance of the extracted data.
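As an illustration of few-shot prompting, the sketch below assembles a prompt from an instruction, a handful of worked examples, and the document to process. The layout of the prompt and the function name are assumptions made for this example; no provider prescribes this exact format.

```python
def build_few_shot_prompt(instruction, examples, document_text):
    """Assemble a few-shot prompt as plain text.

    examples: list of (input_text, expected_output) pairs shown to the
    model before the real document. The section labels are arbitrary.
    """
    parts = [instruction.strip(), ""]
    for i, (example_input, example_output) in enumerate(examples, start=1):
        parts.append(f"Example {i} input:\n{example_input.strip()}")
        parts.append(f"Example {i} output:\n{example_output.strip()}")
        parts.append("")
    parts.append(f"Document:\n{document_text.strip()}")
    parts.append("Output:")
    return "\n".join(parts)


prompt = build_few_shot_prompt(
    "Extract the invoice date and return it as JSON.",
    [("Invoice Date: 01/02/20", '{"invoice_date": "01/02/20"}')],
    "Invoice Date: 12/24/18",
)
print(prompt)
```

The resulting string can be sent as the user message of whichever chat-style API is being evaluated.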

Structured Outputs

LLM APIs that can deliver structured, machine-readable outputs, such as JSON or CSV, are particularly valuable for data extraction use cases. These structured outputs facilitate seamless integration with downstream systems and automation workflows, streamlining the entire data extraction process.
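Structured output is only useful downstream if it is checked before use. The following sketch, using only the standard library, pulls a JSON object out of a model reply and reports which expected fields are absent. The function name and the field names in the usage example are hypothetical.

```python
import json


def parse_structured_output(raw_reply, required_keys):
    """Parse a model reply that is expected to contain one JSON object.

    Returns (data, missing), where missing lists the required keys that
    the reply did not include. Raises ValueError if no JSON object can be
    found or decoded (json.JSONDecodeError is a ValueError subclass).
    """
    start = raw_reply.find("{")
    end = raw_reply.rfind("}")
    if start == -1 or end <= start:
        raise ValueError("no JSON object found in reply")
    data = json.loads(raw_reply[start:end + 1])
    missing = [key for key in required_keys if key not in data]
    return data, missing


reply = 'Here is the result: {"invoice_date": "12/24/18"}'
data, missing = parse_structured_output(reply, ["invoice_date", "po_number"])
print(data, missing)
```

Slicing from the first `{` to the last `}` tolerates replies that wrap the JSON in extra prose, at the cost of failing on replies that contain more than one object.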

Selection Criteria for Top LLM APIs

With these key features in mind, the next step is to identify the top LLM APIs that meet these criteria. The APIs discussed below were selected based on their performance in real-world applications, alignment with industry-specific needs, and feedback from developers and businesses alike.

Factors Considered:

- Performance Metrics: Including accuracy, speed, and precision in data extraction.

- Complex Document Handling: The ability to handle different types of documents.

- User Experience: Ease of integration, customization options, and the availability of comprehensive documentation.

Now that we've explored the key features to consider, let's take a closer look at the top LLM APIs we've selected for data extraction:

OpenAI GPT-3/GPT-4 API

The OpenAI API is known for its advanced GPT-4 model, which excels in language understanding and generation. Its contextual extraction capability allows it to maintain context across lengthy documents for precise information retrieval. The API supports customizable querying, letting users focus on specific details, and provides structured outputs like JSON or CSV for easy data integration. With its multimodal capabilities, it can handle both text and images, making it versatile for various document types. This combination of features makes the OpenAI API a robust choice for efficient data extraction across different domains.

Google Gemini API

Google Gemini API is Google's latest LLM offering, designed to integrate advanced AI models into business processes. It excels in understanding and generating text in multiple languages and formats, making it suitable for data extraction tasks. Gemini is noted for its seamless integration with Google Cloud services, which benefits enterprises already using Google's ecosystem. It features document classification and entity recognition, enhancing its ability to handle complex documents and extract structured data effectively.

Claude 3.5 Sonnet API

Claude 3.5 Sonnet API by Anthropic focuses on safety and interpretability, which makes it a unique option for handling sensitive and complex documents. Its advanced contextual understanding allows for precise data extraction in nuanced scenarios, such as legal and medical documents. Claude 3.5 Sonnet's emphasis on aligning AI behavior with human intentions helps minimize errors and improve accuracy in critical data extraction tasks.

Nanonets API

Nanonets is not a traditional LLM API but is highly specialized for data extraction. It offers endpoints specifically designed to extract structured data from unstructured documents, such as invoices, receipts, and contracts. A standout feature is its no-code model retraining process: users can refine models simply by annotating documents on the dashboard. Nanonets also integrates seamlessly with various apps and ERPs, enhancing its versatility for enterprises. G2 reviews highlight its user-friendly interface and exceptional customer support, especially for handling complex document types efficiently.

In this section, we'll conduct a thorough comparative analysis of the selected LLM APIs (Nanonets, OpenAI, Google Gemini, and Claude 3.5 Sonnet), focusing on their performance and features for data extraction.

Experiment Analysis: We'll detail the experiments conducted to evaluate each API's effectiveness. This includes an overview of the experimentation setup, such as the types of documents tested (e.g., multipage textual documents, invoices, medical records, and handwritten text), and the criteria used to measure performance. We'll analyze how each API handles these different scenarios and highlight any notable strengths or weaknesses.

API Features and Pricing Analysis: This section will provide a comparative look at the key features and pricing structures of each API. We'll explore aspects such as token lengths, rate limits, ease of integration, customization options, and more. Pricing models will be reviewed to assess the cost-effectiveness of each API based on its features and performance.

Other Literature on the Internet Analysis: We'll incorporate insights from existing literature, user reviews, and industry reports to provide additional context and perspectives on each API. This analysis will help round out our understanding of each API's reputation and real-world performance, offering a broader view of their strengths and limitations.

This comparative analysis will help you make an informed decision by presenting a detailed evaluation of how these APIs perform in practice and how they stack up against each other for data extraction.

Experiment Evaluation

Experimentation Setup

We examined the next LLM APIs:

- Nanonets OCR (Full Text) and Custom Model

- ChatGPT-4o-latest

- Gemini 1.5 Pro

- Claude 3.5 Sonnet

Document Types Tested:

- Multipage Textual Document: Evaluates how well APIs retain context and accuracy across multiple pages of text.

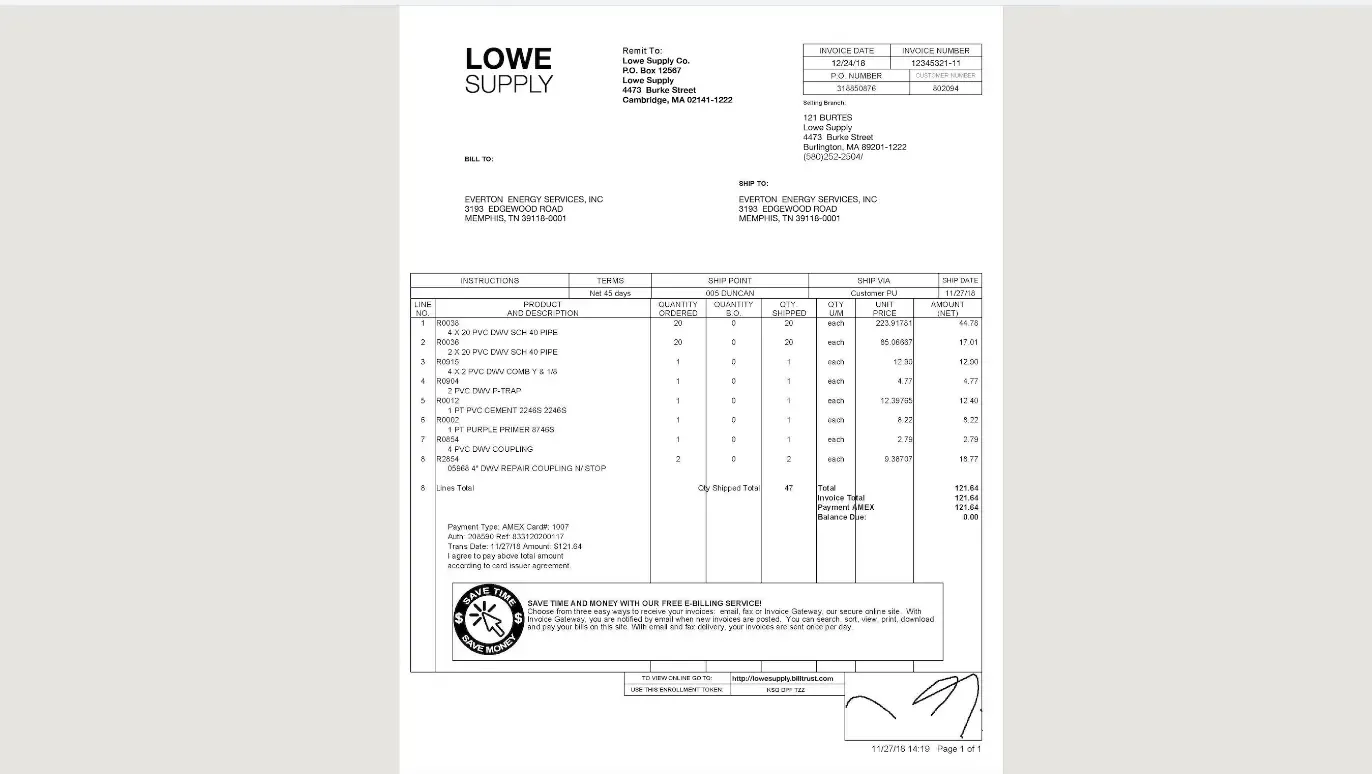

- Invoice/Receipt with Text and Tables: Assesses the ability to extract and interpret both structured (tables) and unstructured (text) data.

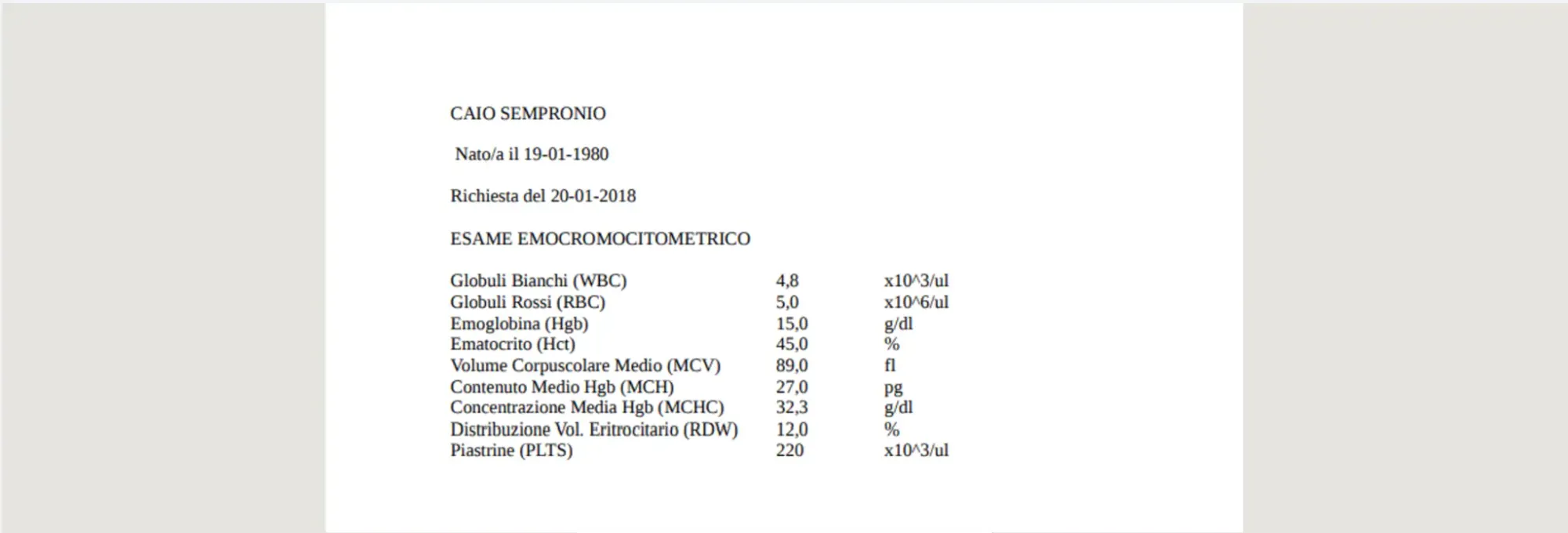

- Medical Report: Challenges APIs with complex terminology, alphanumeric codes, and varied text formats.

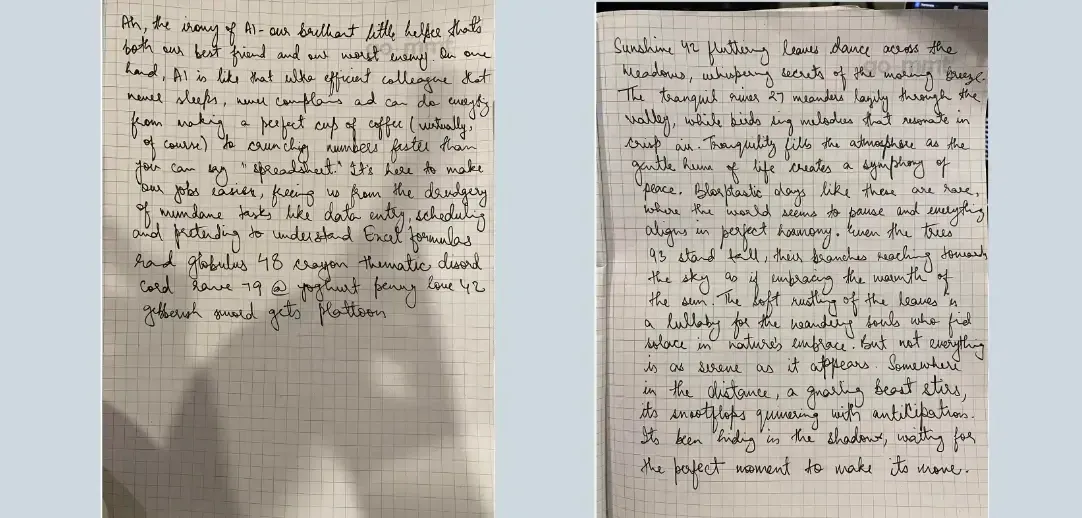

- Handwritten Document: Tests the ability to recognize and extract inconsistent handwriting.

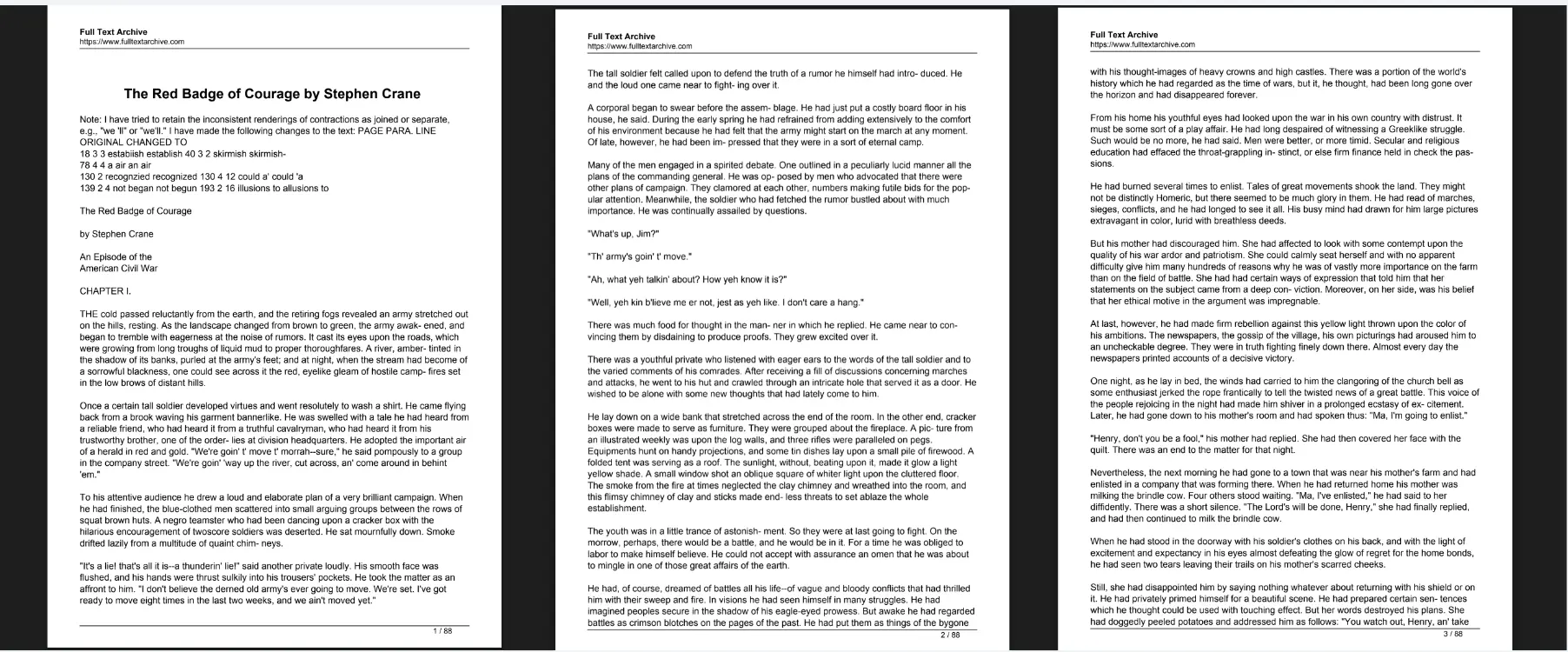

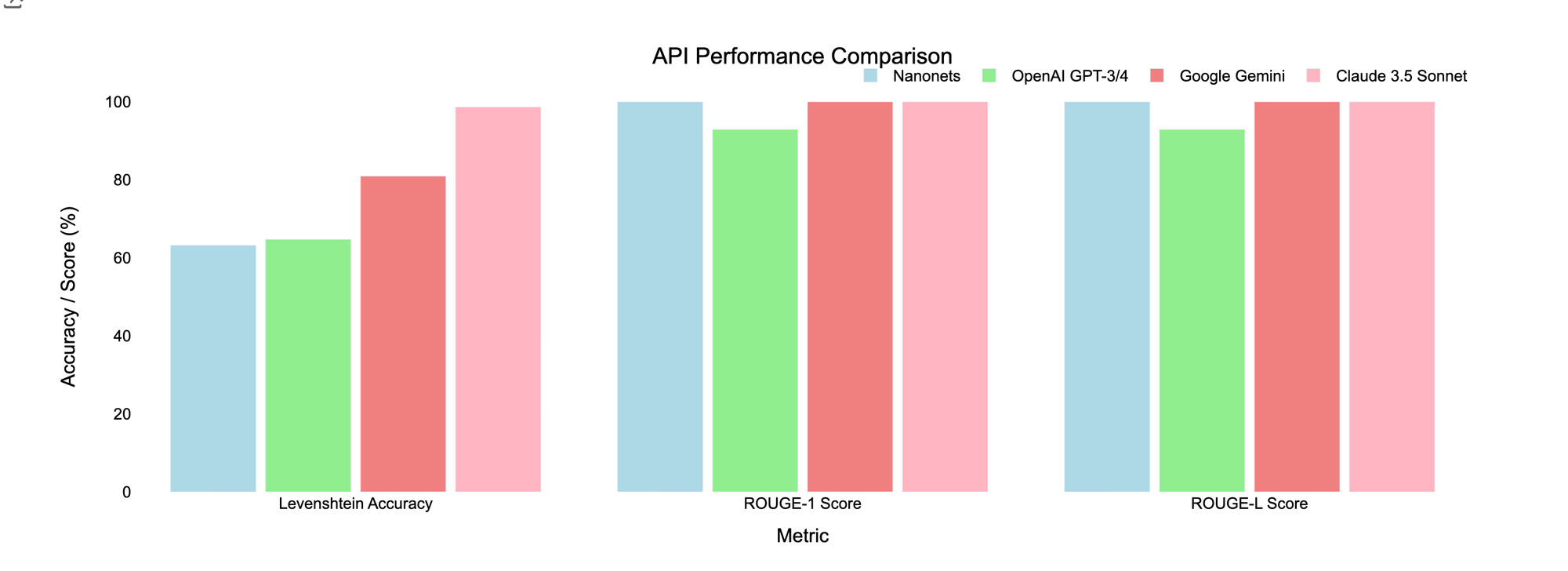

Multipage Textual Doc

Objective: Assess OCR precision and content retention. We want to extract the raw text from the documents below.

Metrics Used:

- Levenshtein Accuracy: Measures the number of edits required to match the extracted text with the original, indicating OCR precision.

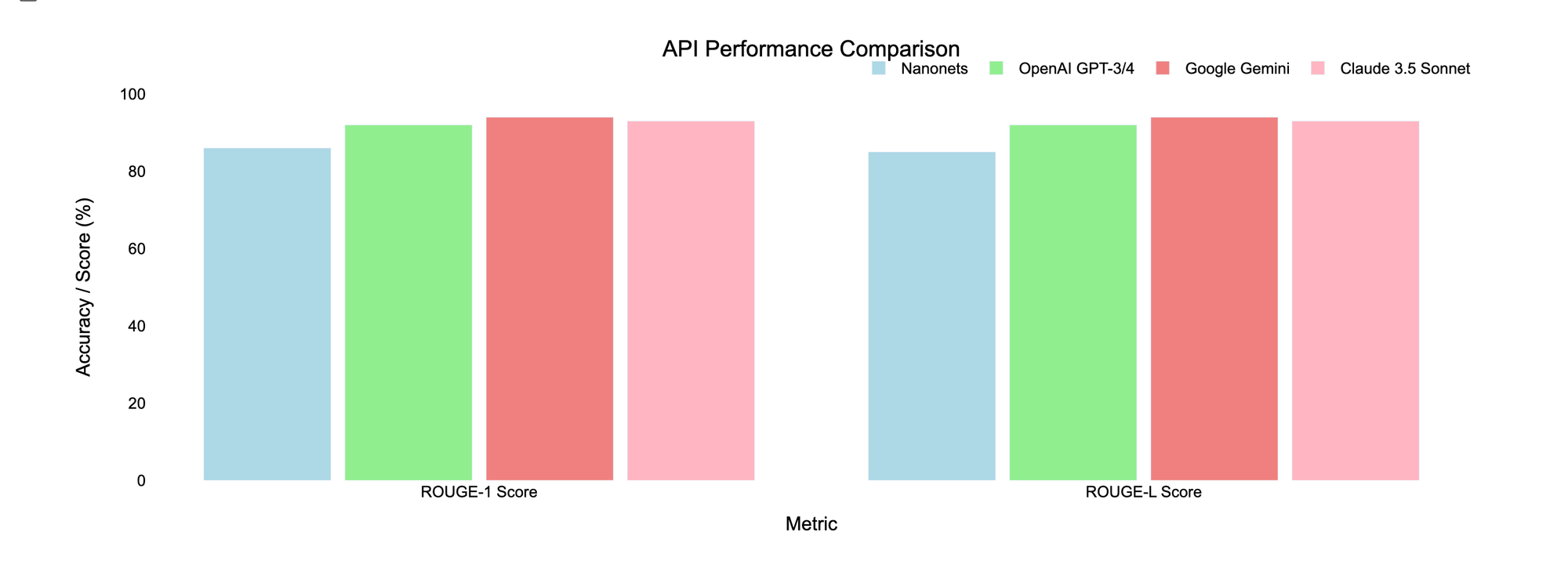

- ROUGE-1 Score: Evaluates how well individual words from the original text are captured in the extracted output.

- ROUGE-L Score: Checks how well the sequence of words and the structure are preserved.
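To make the Levenshtein Accuracy metric concrete, here is a minimal character-level sketch. The normalization used, one minus the edit distance divided by the length of the longer string, is an assumption for illustration; it may differ from the exact formula used in these experiments.

```python
def levenshtein_distance(a, b):
    """Minimum number of single-character insertions, deletions, or
    substitutions needed to turn string a into string b."""
    if len(a) < len(b):
        a, b = b, a  # keep the inner row as short as possible
    previous = list(range(len(b) + 1))
    for i, char_a in enumerate(a, start=1):
        current = [i]
        for j, char_b in enumerate(b, start=1):
            cost = 0 if char_a == char_b else 1
            current.append(min(
                previous[j] + 1,         # deletion
                current[j - 1] + 1,      # insertion
                previous[j - 1] + cost,  # substitution or match
            ))
        previous = current
    return previous[-1]


def levenshtein_accuracy(reference, extracted):
    """Assumed normalization: 1 - distance / length of the longer string."""
    longest = max(len(reference), len(extracted))
    if longest == 0:
        return 1.0
    return 1.0 - levenshtein_distance(reference, extracted) / longest


print(levenshtein_distance("kitten", "sitting"))
print(levenshtein_accuracy("PO Number 318850876", "PO Number 31.8850876"))
```

Because the comparison is character by character, extra whitespace or reordered blocks lower this score even when every word is recognized correctly.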

Documents Tested:

- Red badge of courage.pdf (10 pages): A novel to test content filtering and OCR accuracy.

- Self Generated PDF (1 page): A single-page document created to avoid copyright issues.

Results

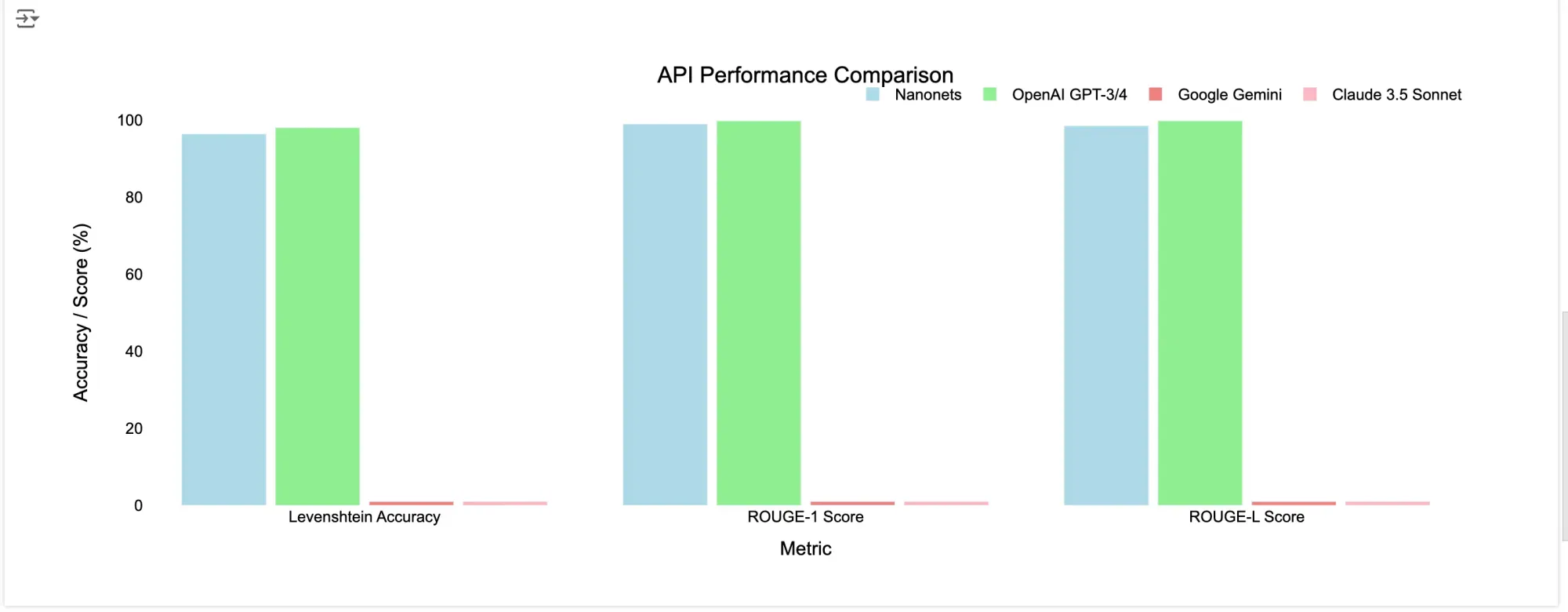

Red Badge of Courage.pdf

| API | Outcome | Levenshtein Accuracy | ROUGE-1 Score | ROUGE-L Score |
|---|---|---|---|---|
| Nanonets OCR | Success | 96.37% | 98.94% | 98.46% |
| ChatGPT-4o-latest | Success | 98% | 99.76% | 99.76% |
| Gemini 1.5 Pro | Error: Recitation | x | x | x |
| Claude 3.5 Sonnet | Error: Output blocked by content filtering policy | x | x | x |

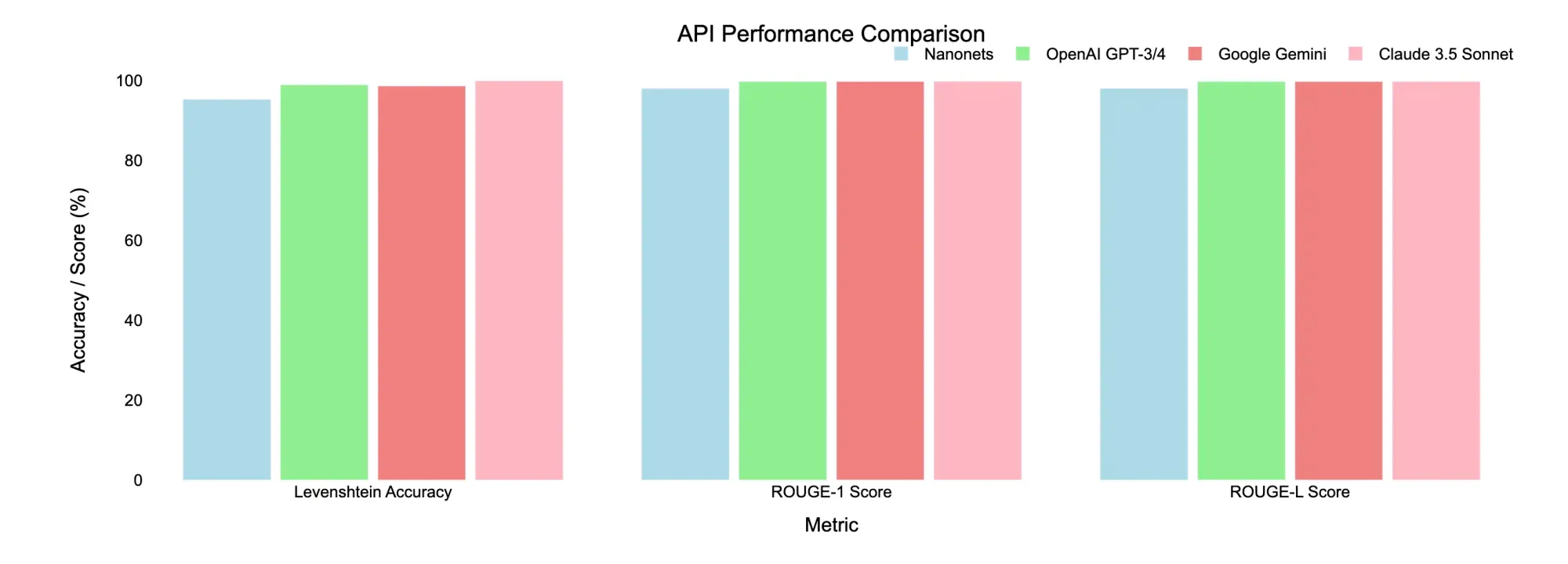

Self Generated PDF

| API | Outcome | Levenshtein Accuracy | ROUGE-1 Score | ROUGE-L Score |
|---|---|---|---|---|
| Nanonets OCR | Success | 95.24% | 97.98% | 97.98% |
| ChatGPT-4o-latest | Success | 98.92% | 99.73% | 99.73% |
| Gemini 1.5 Pro | Success | 98.62% | 99.73% | 99.73% |
| Claude 3.5 Sonnet | Success | 99.91% | 99.73% | 99.73% |

Key Takeaways

- Nanonets OCR and ChatGPT-4o-latest consistently performed well across both documents, with high accuracy and fast processing times.

- Claude 3.5 Sonnet encountered issues with content filtering, making it less reliable for documents that might trigger such policies. However, in terms of retaining the structure of the original document, it stood out as the best.

- Gemini 1.5 Pro struggled with "Recitation" errors, likely due to its content policies or non-conversational output text patterns.

Conclusion: For documents that might have copyright issues, Gemini and Claude might not be ideal due to potential content filtering restrictions. In such cases, Nanonets OCR or ChatGPT-4o-latest could be more reliable choices.

💡 Overall, while both Nanonets and ChatGPT-4o-latest performed well here, the drawback with GPT was that we needed to make 10 separate requests (one for each page) and convert the PDF to images before processing. In contrast, Nanonets handled everything in a single step.
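The page-by-page pattern described above can be sketched as a small loop. This is an illustration only: `extract_page` is a hypothetical callable standing in for whatever sends one page image to a model API and returns its text, and no real API is called here.

```python
def extract_document(page_images, extract_page):
    """Run one extraction call per page and join the results in page order.

    page_images: one image per page (bytes in this sketch).
    extract_page: hypothetical callable that takes a single page image
    and returns the extracted text for that page.
    """
    page_texts = []
    for page_number, image in enumerate(page_images, start=1):
        text = extract_page(image)
        page_texts.append(f"[Page {page_number}]\n{text}")
    return "\n\n".join(page_texts)


# Stand-in extractor so the sketch runs without network access.
def fake_extract_page(image):
    return image.decode("utf-8").upper()


print(extract_document([b"first page", b"second page"], fake_extract_page))
```

A 10-page document therefore costs 10 requests with this approach, which is also where per-minute rate limits start to matter.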

Objective: Evaluate the effectiveness of different LLM APIs in extracting structured data from invoices and receipts. This is different from plain OCR and includes assessing their ability to accurately identify and extract key-value pairs and tables.

Metrics Used:

- Precision: Measures the accuracy of extracting key-value pairs and table data. It's the ratio of correctly extracted data to the total number of data points extracted. High precision indicates that the API extracts relevant information accurately without including too many false positives.

- Cell Accuracy: Assesses how well the API extracts data from tables, focusing on the correctness of data within individual cells. This metric checks whether the values in the cells are correctly extracted and aligned with their respective headers.
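Both metrics reduce to simple ratios. The sketch below is one way to compute them, assuming exact string matching between extracted and expected values; the function names are hypothetical and the scoring used in the experiments may have been more lenient.

```python
def precision(extracted, expected):
    """Share of extracted key-value pairs whose value matches the expected one."""
    if not extracted:
        return 0.0
    correct = sum(
        1 for key, value in extracted.items() if expected.get(key) == value
    )
    return correct / len(extracted)


def cell_accuracy(extracted_rows, expected_rows):
    """Share of expected table cells whose extracted value matches exactly.

    Cells missing from the extraction count as incorrect.
    """
    total = sum(len(row) for row in expected_rows)
    if total == 0:
        return 0.0
    correct = 0
    for r, expected_row in enumerate(expected_rows):
        for c, expected_cell in enumerate(expected_row):
            if (
                r < len(extracted_rows)
                and c < len(extracted_rows[r])
                and extracted_rows[r][c] == expected_cell
            ):
                correct += 1
    return correct / total


expected_fields = {"invoice_date": "12/24/18", "po_number": "318850876"}
extracted_fields = {"invoice_date": "11/24/18", "po_number": "318850876"}
print(precision(extracted_fields, expected_fields))
```

For a table of 8 rows and 5 columns, a single wrong cell gives a cell accuracy of 39/40, or 97.5%.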

Documents Tested:

- Test Invoice: An invoice with 13 key-value pairs and a table with 8 rows and 5 columns, against which we judge accuracy.

Results

These results come from an experiment that used a generic prompt for ChatGPT, Gemini, and Claude, and a generic invoice template model for Nanonets.

Key-Value Pair Extraction

| API | Essential Key-Value Pairs Extracted | Essential Keys Missed | Key Values with Differences |
|---|---|---|---|
| Nanonets OCR | 13/13 | None | – |
| ChatGPT-4o-latest | 13/13 | None | Invoice Date: 11/24/18 (Expected: 12/24/18), PO Number: 31.8850876 (Expected: 318850876) |
| Gemini 1.5 Pro | 12/13 | Vendor Name | Invoice Date: 12/24/18, PO Number: 318850876 |
| Claude 3.5 Sonnet | 12/13 | Vendor Address | Invoice Date: 12/24/18, PO Number: 318850876 |

Table Extraction

| API | Important Columns Extracted | Rows Extracted | Incorrect Cell Values |
|---|---|---|---|
| Nanonets OCR | 5/5 | 8/8 | 0/40 |
| ChatGPT-4o-latest | 5/5 | 8/8 | 1/40 |
| Gemini 1.5 Pro | 5/5 | 8/8 | 2/40 |
| Claude 3.5 Sonnet | 5/5 | 8/8 | 0/40 |

Key Takeaways

- Nanonets OCR proved highly effective at extracting both key-value pairs and table data, with high precision and cell accuracy.

- ChatGPT-4o-latest and Claude 3.5 Sonnet performed well but had occasional issues with OCR accuracy, affecting the extraction of specific values.

- Gemini 1.5 Pro showed limitations in handling some key-value pairs and cell values accurately, particularly in the table extraction.

Conclusion: For financial documents, Nanonets would be a better choice for data extraction. While the other models can benefit from tailored prompting techniques to improve their extraction capabilities, OCR accuracy may require custom retraining, which the other three lack. We'll talk about this in more detail in a later section of the blog.

Medical Document

Objective: Evaluate the effectiveness of different LLM APIs in extracting structured data from a medical document, focusing on text with superscripts, subscripts, alphanumeric characters, and specialized terms.

Metrics Used:

- Levenshtein Accuracy: Measures the number of edits required to match the extracted text with the original, indicating OCR precision.

- ROUGE-1 Score: Evaluates how well individual words from the original text are captured in the extracted output.

- ROUGE-L Score: Checks how well the sequence of words and the structure are preserved.

Documents Tested:

- Italian Medical Report: A single-page document with complex text including superscripts, subscripts, and alphanumeric characters.

Results

| API | Levenshtein Accuracy | ROUGE-1 Score | ROUGE-L Score |
|---|---|---|---|
| Nanonets OCR | 63.21% | 100% | 100% |
| ChatGPT-4o-latest | 64.74% | 92.90% | 92.90% |
| Gemini 1.5 Pro | 80.94% | 100% | 100% |
| Claude 3.5 Sonnet | 98.66% | 100% | 100% |

Key Takeaways

- Gemini 1.5 Pro and Claude 3.5 Sonnet performed exceptionally well in preserving the document's structure and accurately extracting complex characters, with Claude 3.5 Sonnet leading in overall accuracy.

- Nanonets OCR provided decent extraction results but struggled with the complexity of the document, particularly with retaining its overall structure, resulting in lower Levenshtein Accuracy.

- ChatGPT-4o-latest preserved the document's structure slightly better than Nanonets OCR (64.74% vs. 63.21% Levenshtein Accuracy) but had the lowest ROUGE scores of the four (92.90%).

Conclusion: For medical documents with intricate formatting, Claude 3.5 Sonnet is the most reliable option for maintaining the original document's structure. However, if structural preservation is less critical, Nanonets OCR and Google Gemini also offer strong alternatives with high text accuracy.

Handwritten Document

Objective: Assess the performance of various LLM APIs in accurately extracting text from a handwritten document, focusing on their ability to handle irregular handwriting, varying text sizes, and non-standardized formatting.

Metrics Used:

- ROUGE-1 Score: Evaluates how well individual words from the original text are captured in the extracted output.

- ROUGE-L Score: Checks how well the sequence of words and the structure are preserved.
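The difference between the two ROUGE variants is easiest to see in code. The sketch below computes both as F1 scores over lowercased, whitespace-split tokens. Those choices (F1 rather than recall, and the simple tokenization) are assumptions for illustration and may not match the exact settings used for the numbers reported here.

```python
from collections import Counter


def _f1(matches, reference_len, candidate_len):
    """Harmonic mean of precision and recall for a given match count."""
    if matches == 0 or reference_len == 0 or candidate_len == 0:
        return 0.0
    recall = matches / reference_len
    prec = matches / candidate_len
    return 2 * prec * recall / (prec + recall)


def rouge_1(reference, candidate):
    """Unigram overlap: counts shared words and ignores their order."""
    ref_tokens = reference.lower().split()
    cand_tokens = candidate.lower().split()
    overlap = Counter(ref_tokens) & Counter(cand_tokens)
    return _f1(sum(overlap.values()), len(ref_tokens), len(cand_tokens))


def lcs_length(a, b):
    """Length of the longest common subsequence of two token lists."""
    previous = [0] * (len(b) + 1)
    for item_a in a:
        current = [0]
        for j, item_b in enumerate(b, start=1):
            if item_a == item_b:
                current.append(previous[j - 1] + 1)
            else:
                current.append(max(previous[j], current[j - 1]))
        previous = current
    return previous[-1]


def rouge_l(reference, candidate):
    """Longest-common-subsequence overlap: rewards words kept in order."""
    ref_tokens = reference.lower().split()
    cand_tokens = candidate.lower().split()
    matches = lcs_length(ref_tokens, cand_tokens)
    return _f1(matches, len(ref_tokens), len(cand_tokens))


reference = "the cat sat on the mat"
scrambled = "the mat sat on the cat"
print(rouge_1(reference, scrambled), rouge_l(reference, scrambled))
```

In the usage example every word is present, so ROUGE-1 is perfect, while ROUGE-L drops because two words swapped places. That is why the two scores are reported side by side.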

Documents Tested:

- Handwritten document 1: A single-page document with inconsistent handwriting, varying text sizes, and non-standard formatting.

- Handwritten document 2: A single-page document with inconsistent handwriting, varying text sizes, and non-standard formatting.

Results

| API | ROUGE-1 Score | ROUGE-L Score |
|---|---|---|
| Nanonets OCR | 86% | 85% |
| ChatGPT-4o-latest | 92% | 92% |
| Gemini 1.5 Pro | 94% | 94% |
| Claude 3.5 Sonnet | 93% | 93% |

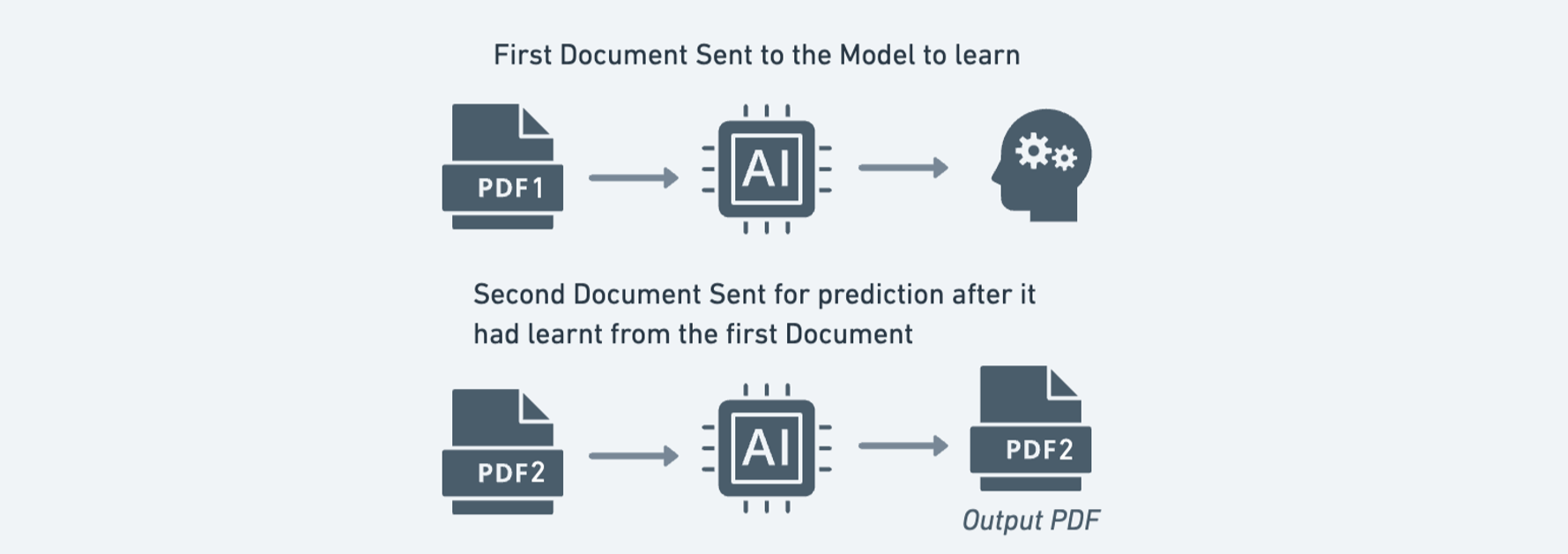

Impact of Training on Sonnet 3.5

To explore the potential for improvement, the second document was used to train Claude 3.5 Sonnet before extracting text from the first document. This resulted in a slight improvement, with both ROUGE-1 and ROUGE-L scores increasing from 93% to 94%.

Key Takeaways

- ChatGPT-4o-latest, Gemini 1.5 Pro, and Claude 3.5 Sonnet performed exceptionally well, with only minimal differences between them. After additional training, Claude 3.5 Sonnet reached 94%, on par with Gemini 1.5 Pro at the reported precision.

- Nanonets OCR struggled a little with irregular handwriting, but this can be addressed with the no-code training it offers, something we'll cover another time.

Conclusion: For handwritten documents with irregular formatting, all four options performed well overall, with the three LLM APIs ahead of Nanonets OCR. Retraining your model can definitely help improve accuracy here.

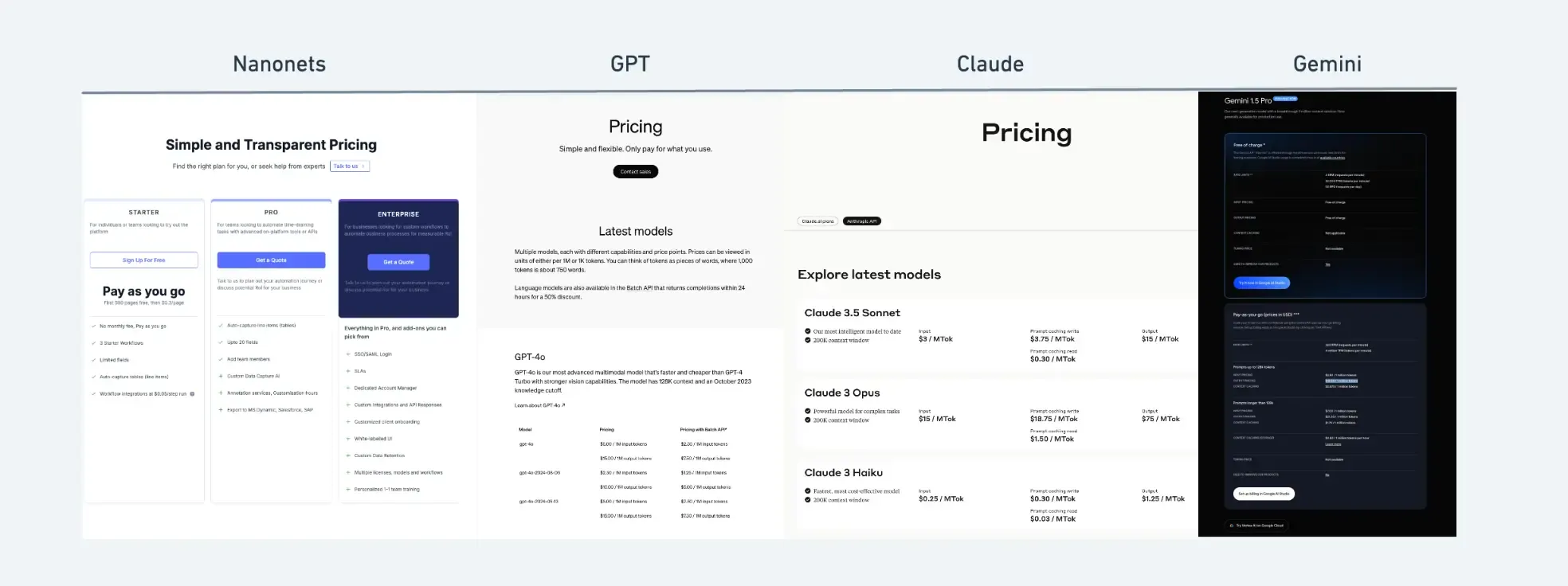

API Features and Pricing Analysis

When selecting a Large Language Model (LLM) API for data extraction, understanding rate limits, pricing, token lengths, and additional features can be crucial as well. These factors significantly affect how efficiently and effectively you can process and extract data from large documents or images. For instance, if your data extraction task involves text that exceeds an API's token limit, you may face truncation or incomplete data. If your request frequency surpasses the rate limits, you could experience delays or throttling, which can hinder the timely processing of large volumes of data.
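One way to stay under a requests-per-minute limit is to space calls out on the client side. The class below is a minimal sketch of that idea using only the standard library; the class name is hypothetical, and real providers may also enforce token-based limits that this does not cover.

```python
import time


class RequestThrottle:
    """Space out calls so they stay under a requests-per-minute limit."""

    def __init__(self, requests_per_minute, clock=time.monotonic, sleep=time.sleep):
        # clock and sleep are injectable so the class can be tested
        # without actually waiting.
        self.min_interval = 60.0 / requests_per_minute
        self._clock = clock
        self._sleep = sleep
        self._last_call = None

    def wait(self):
        """Block until the next request is allowed, then record its time."""
        now = self._clock()
        if self._last_call is not None:
            remaining = self.min_interval - (now - self._last_call)
            if remaining > 0:
                self._sleep(remaining)
                now = self._clock()
        self._last_call = now


throttle = RequestThrottle(requests_per_minute=2)
print(throttle.min_interval)
```

Calling `throttle.wait()` before each request would then pause as needed; at 2 RPM, a 10-page document processed one page per request takes at least four and a half minutes.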

| Feature | OpenAI GPT-4 | Google Gemini 1.5 Pro | Anthropic Claude 3.5 Sonnet | Nanonets OCR |
|---|---|---|---|---|
| Token Limit (Free) | N/A (No free tier) | 32,000 | 8,192 | N/A (OCR-specific) |
| Token Limit (Paid) | 32,768 (GPT-4 Turbo) | 4,000,000 | 200,000 | N/A (OCR-specific) |
| Rate Limits (Free) | N/A (No free tier) | 2 RPM | 5 RPM | 2 RPM |
| Rate Limits (Paid) | Varies by tier, up to 10,000 TPM* | 360 RPM | Varies by tier, up to 4,000 RPM | Custom plans available |
| Document Types Supported | Images | Images, videos | Images | Images and PDFs |
| Model Retraining | Not available | Not available | Not available | Available |
| Integrations with other Apps | Code-based API integration | Code-based API integration | Code-based API integration | Pre-built integrations with click-to-configure setup |
| Pricing Model | Pay-per-token, tiered plans | Pay as you go | Pay-per-token, tiered plans | Pay as you go, custom pricing based on volume |
| Starting Price | $0.03/1K tokens (prompt), $0.06/1K tokens (completion) for GPT-4 | $3.5/1M tokens (input), $10.5/1M tokens (output) | $0.25/1M tokens (input), $1.25/1M tokens (output) | Workflow-based, $0.05/step run |

*TPM = Tokens Per Minute, RPM = Requests Per Minute
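Per-token prices are easier to compare once they are turned into a cost per request. The sketch below does that arithmetic using the starting prices listed in the table; the token counts are made-up illustrative values, and the function name is hypothetical.

```python
def estimate_cost(input_tokens, output_tokens,
                  input_price_per_million, output_price_per_million):
    """Estimate the USD cost of one request under per-token pricing."""
    input_cost = (input_tokens / 1_000_000) * input_price_per_million
    output_cost = (output_tokens / 1_000_000) * output_price_per_million
    return input_cost + output_cost


# Illustrative request size (an assumption): 20,000 input, 2,000 output tokens.
# Gemini 1.5 Pro prices from the table are already per 1M tokens.
gemini_cost = estimate_cost(20_000, 2_000, 3.5, 10.5)

# GPT-4 prices from the table are per 1K tokens, so scale them to per 1M.
gpt4_cost = estimate_cost(20_000, 2_000, 0.03 * 1000, 0.06 * 1000)

print(f"Gemini 1.5 Pro: ${gemini_cost:.3f}  GPT-4: ${gpt4_cost:.2f}")
```

Note the unit mismatch in the table (per 1K versus per 1M tokens), which is easy to overlook when comparing prices by eye.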

Links for detailed pricing

Other Literature on the Internet Analysis

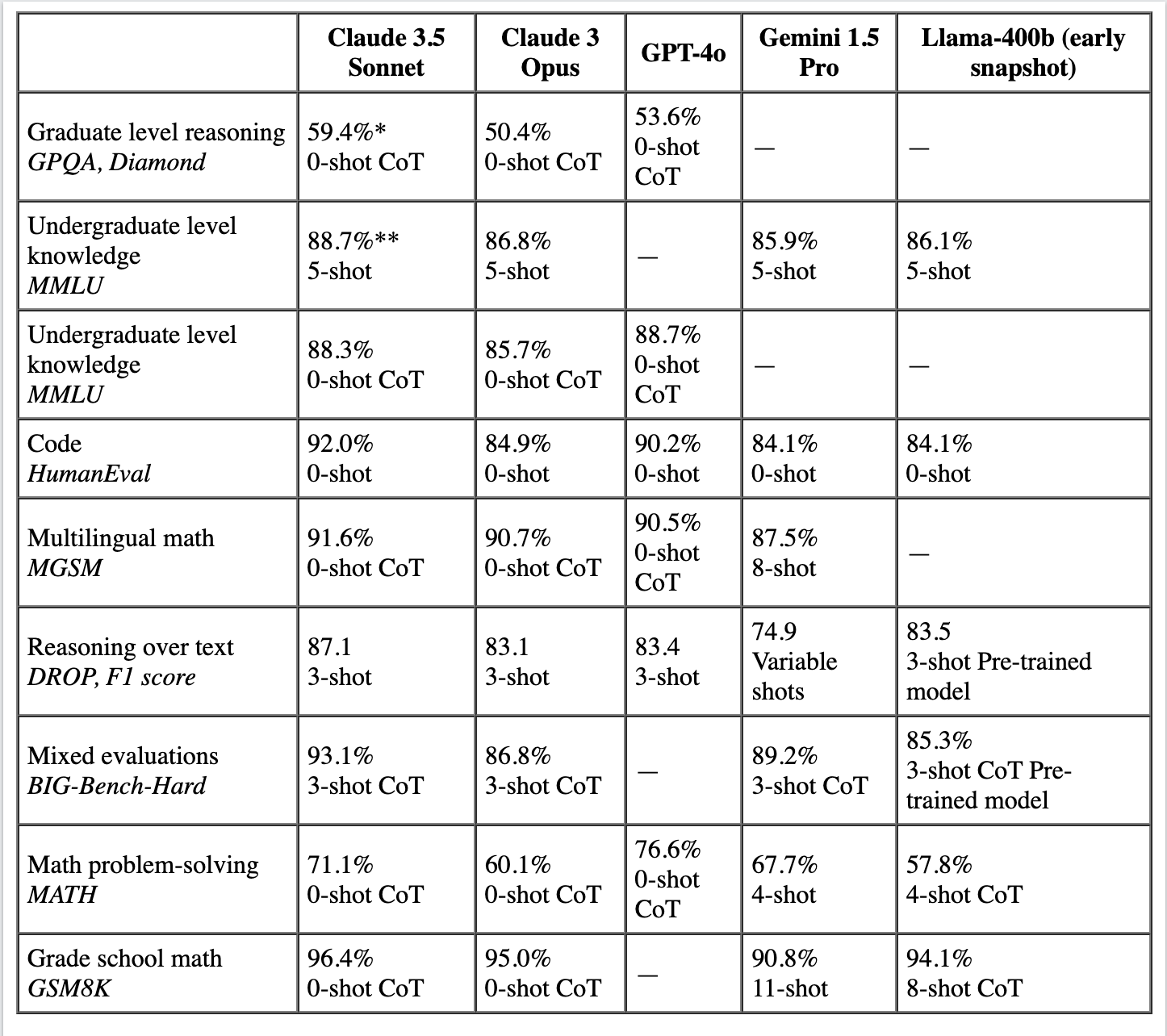

In addition to our hands-on testing, we've also considered analyses available from sources like Claude to provide a more comprehensive comparison of these leading LLMs. The table below presents a detailed comparative performance analysis of various AI models, including Claude 3.5 Sonnet, Claude 3 Opus, GPT-4o, Gemini 1.5 Pro, and an early snapshot of Llama-400b. This evaluation covers their abilities in tasks such as reasoning, knowledge retrieval, coding, and mathematical problem-solving. The models were tested under different conditions, like 0-shot, 3-shot, and 5-shot settings, which reflect the number of examples provided to the model before generating an output. These benchmarks offer insights into each model's strengths and capabilities across various domains.

References:

Link 1

Link 2

Key Takeaways

- For detailed pricing and options for each API, check out the links provided above. They'll help you compare and find the best fit for your needs.

- Additionally, while LLMs typically don't offer retraining, Nanonets provides it for its OCR solutions. This means you can tailor the OCR to your specific requirements, potentially improving its accuracy.

- Nanonets also stands out with its pre-built integrations that make it easy to connect with other apps, simplifying the setup process compared to the code-based integrations offered by other services.

Conclusion

Selecting the right LLM API for data extraction is essential, especially for diverse document types like invoices, medical records, and handwritten notes. Each API has unique strengths and limitations, and the best choice depends on your specific needs.

- Nanonets OCR excels in extracting structured data from financial documents with high precision, especially for key-value pairs and tables.

- ChatGPT-4 offers balanced performance across various document types but may need prompt fine-tuning for complex cases.

- Gemini 1.5 Pro and Claude 3.5 Sonnet are strong in handling complex text, with Claude 3.5 Sonnet particularly effective in maintaining document structure and accuracy.

For sensitive or complex documents, consider each API's ability to preserve the original structure and handle various formats. Nanonets is ideal for financial documents, while Claude 3.5 Sonnet is best for documents requiring high structural accuracy.

In summary, choosing the right API depends on understanding each option's strengths and how they align with your project's needs.

| Feature | Nanonets | OpenAI GPT-3/4 | Google Gemini | Anthropic Claude |
|---|---|---|---|---|
| Speed (Experiment) | Fastest | Fast | Slow | Fast |
| Strengths (Experiment) | High precision in key-value pair extraction and structured outputs | Versatile across various document types, fast processing | Excellent in handwritten text accuracy, handles complex formats well | Top performer in retaining document structure and complex text accuracy |
| Weaknesses (Experiment) | Struggles with handwritten OCR | Needs fine-tuning for high accuracy in complex cases | Occasional errors in structured data extraction, slower speed | Content filtering issues, especially with copyrighted content |
| Documents suitable for | Financial Documents | Dense Text Documents | Medical Documents, Handwritten Documents | Medical Documents, Handwritten Documents |
| Retraining Capabilities | No-code custom model retraining available | Fine-tuning available | Fine-tuning available | Fine-tuning available |
| Pricing Models | 3 (Pay-as-you-go, Pro, Enterprise) | 1 (Usage-based, per-token pricing) | 1 (Usage-based, per-token pricing) | 1 (Usage-based, per-token pricing) |
| Integration Capabilities | Easy integration with ERP systems and custom workflows | Integrates well with various platforms, APIs | Seamless integration with Google Cloud services | Strong integration with enterprise systems |
| Ease of Setup | Quick setup with an intuitive interface | Requires API knowledge for setup | Easy setup with Google Cloud integration | User-friendly setup with comprehensive guides |