Attackers are finding more and more ways to post malicious projects to Hugging Face and other repositories for open source artificial intelligence (AI) models, while dodging the sites’ security checks. The escalating problem underscores the need for companies pursuing internal AI projects to have robust mechanisms to detect security flaws and malicious code within their supply chains.

Hugging Face’s automated checks, for example, recently failed to detect malicious code in two AI models hosted on the repository, according to a Feb. 3 analysis published by software supply chain security firm ReversingLabs. The threat actor used a common vector (data files in the Pickle format) with a new technique, dubbed “NullifAI,” to evade detection.

While the attacks appeared to be proofs-of-concept, their success in being hosted with a “No problem” tag shows that companies should not rely on the safety checks of Hugging Face and other repositories for their own security, says Tomislav Pericin, chief software architect at ReversingLabs.

“You’ve got this public repository where any developer or machine learning expert can host their own stuff, and obviously malicious actors abuse that,” he says. “Depending on the ecosystem, the vector is going to be slightly different, but the idea is the same: Someone’s going to host a malicious version of a thing and hope for you to inadvertently install it.”

Companies are quickly adopting AI, and the majority are also establishing internal projects using open source AI models from repositories such as Hugging Face, TensorFlow Hub, and PyTorch Hub. Overall, 61% of companies are using models from the open source ecosystem to create their own AI tools, according to a Morning Consult survey of 2,400 IT decision-makers sponsored by IBM.

Yet many of these components can contain executable code, leading to a variety of security risks, such as code execution, backdoors, prompt injections, and alignment issues, the last being how well an AI model matches the intent of its developers and users.

In an Insecure Pickle

One significant issue is that a commonly used data format, known as a Pickle file, is not secure and can be used to execute arbitrary code. Despite vocal warnings from security researchers, the Pickle format continues to be used by many data scientists, says Tom Bonner, vice president of research at HiddenLayer, an AI-focused detection and response firm.

“I really hoped that we would make enough noise about it that Pickle would’ve gone by now, but it’s not,” he says. “I’ve seen organizations compromised by machine learning models, multiple [organizations] at this point. So yeah, while it’s not an everyday occurrence such as ransomware or phishing campaigns, it does happen.”
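To see why the format is risky, consider this minimal sketch using only Python’s standard `pickle` module. The `record` function and `Payload` class are hypothetical, harmless stand-ins for an attacker’s payload (which in practice might run a shell command); the point is that `pickle.loads` invokes whatever callable the serialized data names, even though the caller only asked to load data.

```python
import pickle

# Collects side effects so we can observe that loading ran code.
CALLS = []


def record(message):
    """Harmless stand-in for a real payload; it only appends to a list."""
    CALLS.append(message)
    return message


class Payload:
    # pickle calls __reduce__ while serializing; the (callable, args) pair it
    # returns is written into the data and invoked again by pickle.loads.
    def __reduce__(self):
        return (record, ("code ran during unpickling",))


blob = pickle.dumps(Payload())

# The "victim" only loads data, yet record() executes during the load.
result = pickle.loads(blob)
print(result)  # code ran during unpickling
print(CALLS)   # ['code ran during unpickling']
```

Nothing in the loading code asks for `record` to run; the serialized data alone decides what gets called, which is what makes Pickle-based model files a viable attack vector.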

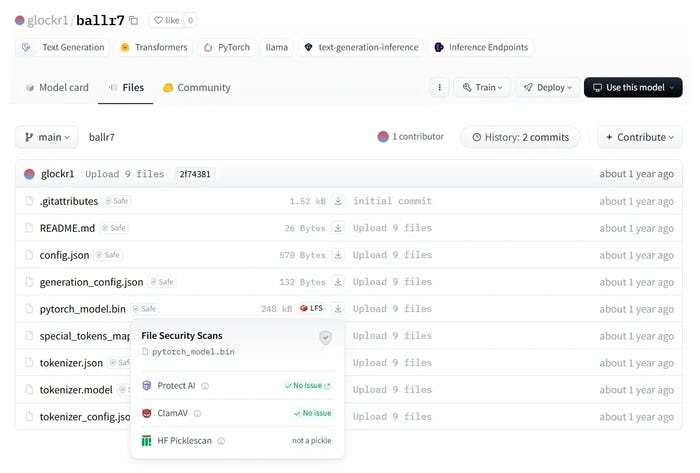

Whereas Hugging Face has express checks for Pickle information, the malicious code found by ReversingLabs sidestepped these checks through the use of a distinct file compression for the information. Different analysis by software safety agency Checkmarx discovered a number of methods to bypass the scanners, equivalent to PickleScan utilized by Hugging Face, to detect harmful Pickle information.

Regardless of having malicious options, this mannequin passes safety checks on Hugging Face. Supply: ReversingLabs

“PickleScan uses a blocklist which was successfully bypassed using both built-in Python dependencies,” Dor Tumarkin, director of application security research at Checkmarx, stated in the analysis. “It is plainly vulnerable, but by using third-party dependencies such as Pandas to bypass it, even if it were to consider all cases baked into Python, it would still be vulnerable with very popular imports in its scope.”
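The weakness Tumarkin describes can be illustrated with the standard library’s `pickletools` module, which lists a pickle’s opcodes without executing them. The sketch below is hypothetical and far simpler than PickleScan: it extracts the imports a pickle would perform, then compares a blocklist check with an allowlist check. The module `some_popular_lib` and its `helper` function are made up; they stand in for any importable function a blocklist did not anticipate.

```python
import pickle
import pickletools
from collections import OrderedDict


def imported_globals(data: bytes) -> list[tuple[str, str]]:
    """List the (module, name) pairs a pickle would import, without loading it.

    Simplification: assumes each STACK_GLOBAL is directly preceded by its module
    and name strings; a production scanner must also resolve memo references.
    """
    found = []
    strings = []  # string constants seen so far, used to resolve STACK_GLOBAL
    for opcode, arg, _pos in pickletools.genops(data):
        if opcode.name in ("GLOBAL", "INST"):
            module, name = arg.split(" ", 1)  # protocols 0-3 encode "module name"
            found.append((module, name))
        elif opcode.name == "STACK_GLOBAL":
            found.append((strings[-2], strings[-1]))  # protocol 4+
        elif isinstance(arg, str):
            strings.append(arg)
    return found


# Hypothetical blocklist: reject only imports known to be dangerous.
BLOCKLIST = {("os", "system"), ("posix", "system"),
             ("builtins", "eval"), ("builtins", "exec")}


def blocklist_scan(data: bytes) -> bool:
    """Return True ("safe") unless a blocklisted import is present."""
    return not any(g in BLOCKLIST for g in imported_globals(data))


# Hypothetical allowlist: accept only imports the team expects in model files.
ALLOWLIST = {("collections", "OrderedDict")}


def allowlist_scan(data: bytes) -> bool:
    """Return True only if every import is explicitly expected."""
    return all(g in ALLOWLIST for g in imported_globals(data))


# Hand-written protocol 0 pickles; they are only scanned here, never loaded.
obvious = b"cos\nsystem\n(S'echo hi'\ntR."
evasive = b"csome_popular_lib\nhelper\n(S'payload'\ntR."
benign = pickle.dumps(OrderedDict(), protocol=4)

print(blocklist_scan(obvious), blocklist_scan(evasive))  # False True
print(allowlist_scan(evasive), allowlist_scan(benign))   # False True
```

The blocklist passes the made-up gadget because it only knows what to reject, while the allowlist rejects it because it only knows what to accept. Real scanners also have to handle memo references, nested archives, and other details this sketch ignores.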

Rather than Pickle files, data science and AI teams should move to Safetensors, a library for a newer data format managed by Hugging Face, EleutherAI, and Stability AI that has been audited for security. The Safetensors format is considered much safer than the Pickle format.
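Part of what makes Safetensors safer is its deliberately simple layout: an 8-byte little-endian header length, a JSON header giving each tensor’s dtype, shape, and byte offsets, then the raw tensor bytes. Loading it means parsing JSON and copying numbers, with no step that imports or calls anything. The sketch below hand-rolls that layout for 1-D float32 tensors only, to illustrate the idea; it is not the `safetensors` library, and real projects should use the library itself.

```python
import json
import struct


def save_f32(tensors: dict[str, list[float]]) -> bytes:
    """Serialize 1-D float32 tensors in a simplified safetensors-style layout."""
    header, chunks, offset = {}, [], 0
    for name, values in tensors.items():
        raw = struct.pack(f"<{len(values)}f", *values)  # little-endian float32
        header[name] = {"dtype": "F32", "shape": [len(values)],
                        "data_offsets": [offset, offset + len(raw)]}
        chunks.append(raw)
        offset += len(raw)
    header_bytes = json.dumps(header).encode("utf-8")
    # 8-byte header length, then the JSON header, then the raw tensor bytes.
    return struct.pack("<Q", len(header_bytes)) + header_bytes + b"".join(chunks)


def load_f32(blob: bytes) -> dict[str, list[float]]:
    """Parse the layout: only JSON and raw numbers, so no code can run."""
    (header_len,) = struct.unpack_from("<Q", blob, 0)
    header = json.loads(blob[8:8 + header_len])
    buffer = blob[8 + header_len:]
    out = {}
    for name, info in header.items():
        if name == "__metadata__":  # optional free-form string metadata
            continue
        if info["dtype"] != "F32":
            raise ValueError(f"unsupported dtype {info['dtype']}")
        start, end = info["data_offsets"]
        if not 0 <= start <= end <= len(buffer):
            raise ValueError(f"offsets for {name} fall outside the data buffer")
        out[name] = list(struct.unpack(f"<{(end - start) // 4}f", buffer[start:end]))
    return out


blob = save_f32({"bias": [0.5, -1.0, 2.0]})
print(load_f32(blob))  # {'bias': [0.5, -1.0, 2.0]}
```

Compared with the Pickle example, the worst a malformed file can do here is fail to parse; there is no field in the format that names a function to call.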

Deep-Seated AI Vulnerabilities

Executable data files are not the only threat, however. Licensing is another issue: While pretrained AI models are frequently called “open source AI,” they generally do not provide all the information needed to reproduce the AI model, such as code and training data. Instead, they provide the weights generated by the training and are covered by licenses that are not always open source compatible.

Creating commercial products or services from such models can potentially result in violating those licenses, says Andrew Stiefel, a senior product manager at Endor Labs.

“There’s a lot of complexity in the licenses for models,” he says. “You’ve got the actual model binary itself, the weights, the training data, all of those could have different licenses, and you need to understand what that means for your business.”

Model alignment, meaning how well a model’s output aligns with the values of its developers and users, is the final wildcard. DeepSeek, for example, allows users to create malware and viruses, researchers found. Other models, such as OpenAI’s o3-mini model, which boasts more stringent alignment, have already been jailbroken by researchers.

These problems are unique to AI systems, and the question of how to test for such weaknesses remains a fertile field for researchers, says ReversingLabs’ Pericin.

“There’s already research about what kind of prompts would trigger the model to behave in an unpredictable way, disclose confidential information, or teach things that could be harmful,” he says. “That’s a whole other discipline of machine learning model safety that people are, in all honesty, mostly worried about today.”

Companies should make sure to understand any licenses covering the AI models they’re using. In addition, they should pay attention to common signals of software safety, including the source of the model, development activity around the model, its popularity, and its operational and security risks, Endor’s Stiefel says.

“You kind of need to manage AI models like you would any other open source dependencies,” Stiefel says. “They’re built by people outside of your organization and you’re bringing them in, and so that means you need to take that same holistic approach to looking at risks.”
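Stiefel’s advice maps onto the kind of intake review teams already run for other open source dependencies. The sketch below is a hypothetical policy, not an Endor Labs product or a Hugging Face API: the `ModelCandidate` fields, the license allowlist, the file-suffix list, and the thresholds are all illustrative assumptions, and the metadata would have to be gathered from the repository by other means.

```python
from dataclasses import dataclass


@dataclass
class ModelCandidate:
    """Hypothetical metadata collected before a model enters a project."""
    repo_id: str
    license: str
    files: list[str]
    publisher_verified: bool
    days_since_last_commit: int
    downloads: int


# Illustrative policy values; each organization would set its own.
APPROVED_LICENSES = {"apache-2.0", "mit"}
# Suffixes commonly used for PyTorch or raw pickle files that may contain pickle data.
PICKLE_SUFFIXES = (".pkl", ".pickle", ".pt", ".pth", ".bin", ".ckpt")


def review(c: ModelCandidate) -> list[str]:
    """Return findings that need a human decision; an empty list means no flags."""
    findings = []
    if c.license.lower() not in APPROVED_LICENSES:
        findings.append(f"license '{c.license}' needs legal review")
    risky = [f for f in c.files if f.lower().endswith(PICKLE_SUFFIXES)]
    if risky:
        findings.append(f"files that may contain pickle data: {', '.join(risky)}")
    if not c.publisher_verified:
        findings.append("publisher is not verified")
    if c.days_since_last_commit > 365:
        findings.append("no development activity in the past year")
    if c.downloads < 1000:
        findings.append("low adoption, so few others have vetted it")
    return findings


candidate = ModelCandidate(
    repo_id="example-org/example-model",  # hypothetical repository
    license="Apache-2.0",
    files=["config.json", "model.safetensors", "pytorch_model.bin"],
    publisher_verified=True,
    days_since_last_commit=30,
    downloads=50_000,
)
for finding in review(candidate):
    print(finding)  # files that may contain pickle data: pytorch_model.bin
```

Returning findings rather than a single pass/fail score keeps the decision with a reviewer, which fits the “holistic” framing: a permissive license does not offset a pickle-based weights file, and vice versa.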