Security researchers found a name confusion attack that lets anyone who publishes an Amazon Machine Image (AMI) with a specific name gain access to an Amazon Web Services account.

Dubbed “whoAMI,” the attack was crafted in August 2024 by Datadog researchers, who demonstrated that attackers can gain code execution inside AWS accounts by exploiting how software projects retrieve AMI IDs.

Amazon confirmed the vulnerability and pushed a fix in September, but the problem persists on the customer side in environments where organizations fail to update their code.

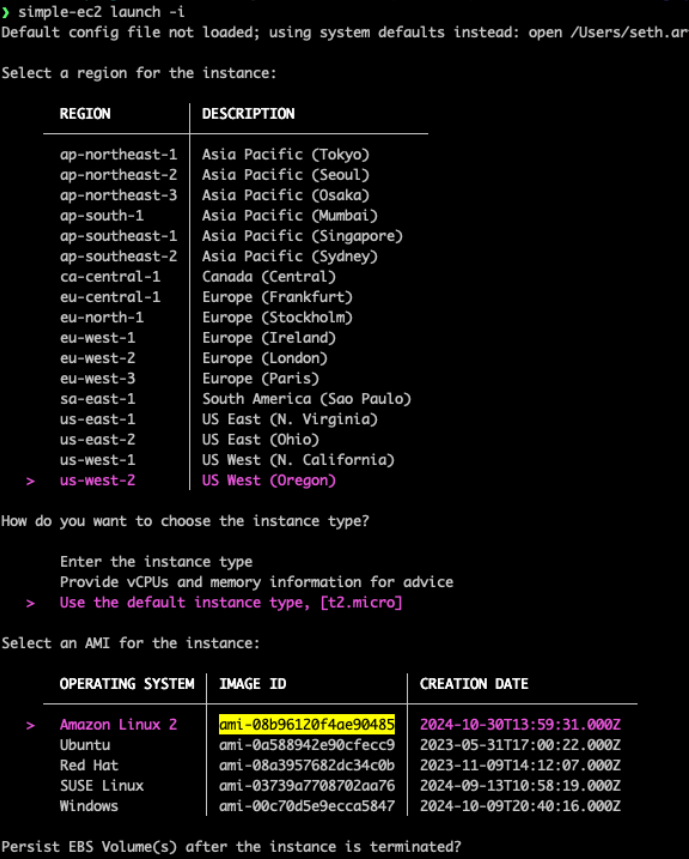

Carrying out the whoAMI attack

AMIs are virtual machine images preconfigured with the necessary software (operating system, applications) and used for creating virtual servers, which are called EC2 (Elastic Compute Cloud) instances in the AWS ecosystem.

There are public and private AMIs, each with a specific identifier. In the case of public ones, users can search the AWS catalog for the right ID of the AMI they need.

To make sure that the AMI is from a trusted source in the AWS marketplace, the search needs to include the ‘owners’ attribute; otherwise, the risk of a whoAMI name confusion attack increases.

The whoAMI attack is possible due to misconfigured AMI selection in AWS environments:

- The retrieval of AMIs by software using the ec2:DescribeImages API without specifying an owner

- The use of wildcards by scripts instead of specific AMI IDs

- The practice of some infrastructure-as-code tools like Terraform using “most_recent=true,” automatically selecting the latest AMI that matches the filter.

These conditions allow attackers to insert malicious AMIs into the selection process by naming the resource similarly to a trusted one. When no owner is specified, AWS returns all matching AMIs, including the attacker’s.

If the parameter “most_recent” is set to “true,” the victim’s system picks the latest matching AMI added to the marketplace, which may be a malicious one that has a name similar to a legitimate entry.
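The selection logic described above can be illustrated with a small, self-contained simulation. This is only a sketch: the catalog entries, account IDs, and the `pick_most_recent` helper are hypothetical and stand in for what a wildcard search combined with “most_recent” does; no AWS API is called.

```python
from fnmatch import fnmatch

# Hypothetical catalog: every ID, name, owner, and date here is made up.
CATALOG = [
    {"ImageId": "ami-trusted-old", "Name": "ubuntu-server-22.04-20240701",
     "OwnerId": "111111111111", "CreationDate": "2024-07-01T00:00:00Z"},
    {"ImageId": "ami-trusted-new", "Name": "ubuntu-server-22.04-20240801",
     "OwnerId": "111111111111", "CreationDate": "2024-08-01T00:00:00Z"},
    # Attacker-published image whose name fits the same pattern.
    {"ImageId": "ami-attacker", "Name": "ubuntu-server-22.04-20240815",
     "OwnerId": "999999999999", "CreationDate": "2024-08-15T00:00:00Z"},
]


def pick_most_recent(catalog, name_pattern, owners=None):
    """Return the newest image matching the pattern, optionally limited to owners."""
    matches = [
        image for image in catalog
        if fnmatch(image["Name"], name_pattern)
        and (owners is None or image["OwnerId"] in owners)
    ]
    if not matches:
        return None
    # Timestamps share one ISO 8601 format, so string order equals time order.
    return max(matches, key=lambda image: image["CreationDate"])


# Without an owner filter the attacker's newer image wins.
unsafe = pick_most_recent(CATALOG, "ubuntu-server-22.04-*")
# With an owner filter only the trusted publisher's images are considered.
safe = pick_most_recent(CATALOG, "ubuntu-server-22.04-*", owners={"111111111111"})
print(unsafe["ImageId"], safe["ImageId"])
```

The only difference between the two calls is the owner filter, which is exactly the attribute the attack relies on being absent.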

Source: Datadog

Basically, all an attacker needs to do is publish an AMI with a name that matches the pattern used by trusted owners, making it easy for users to select it and launch an EC2 instance.

The whoAMI attack does not require breaching the target’s AWS account. The attacker only needs an AWS account to publish their backdoored AMI to the public Community AMI catalog and strategically choose a name that mimics the AMIs of their targets.

Datadog says that, based on its telemetry, about 1% of the organizations the company monitors are vulnerable to whoAMI attacks, but “this vulnerability likely affects thousands of distinct AWS accounts.”

Amazon’s response and defense measures

Datadog researchers notified Amazon about the flaw, and the company confirmed that internal non-production systems were vulnerable to the whoAMI attack.

The issue was fixed on September 19, 2024, and on December 1, 2024, AWS introduced a new security control named ‘Allowed AMIs’ that lets customers create an allow list of trusted AMI providers.

AWS stated that the vulnerability was not exploited outside of the security researchers’ tests, so no customer data was compromised via whoAMI attacks.

Amazon advises customers to always specify AMI owners when using the “ec2:DescribeImages” API and to enable the ‘Allowed AMIs’ feature for additional protection.
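As a rough sketch of that advice in Python, the helper below always passes an owner list to `describe_images` and refuses to run without one. The function name `find_latest_trusted_ami` and its parameters are hypothetical; `ec2_client` is assumed to be a Boto3 EC2 client (for example, one created with `boto3.client("ec2")`), and the name pattern and owner IDs are placeholders to replace with your own.

```python
def find_latest_trusted_ami(ec2_client, name_pattern, trusted_owners):
    """Return the ID of the newest AMI matching name_pattern from trusted owners.

    ec2_client is assumed to expose describe_images() like a Boto3 EC2 client.
    """
    if not trusted_owners:
        # Never fall back to an unfiltered search: that is the whoAMI condition.
        raise ValueError("refusing to search for AMIs without an owner filter")

    response = ec2_client.describe_images(
        Owners=list(trusted_owners),  # account IDs or aliases such as "amazon"
        Filters=[{"Name": "name", "Values": [name_pattern]}],
    )
    images = response["Images"]
    if not images:
        return None
    # CreationDate values are ISO 8601 strings, so the max string is the newest.
    return max(images, key=lambda image: image["CreationDate"])["ImageId"]
```

Raising an error when the owner list is empty is a deliberate design choice in this sketch: a lookup that silently widens to all publishers is harder to notice than one that fails.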

The new feature is available via AWS Console → EC2 → Account Attributes → Allowed AMIs.

Since November 2024, version 5.77 of the Terraform AWS provider has been serving warnings to users when “most_recent = true” is used without an owner filter, with stricter enforcement planned for a future release (6.0).

System admins must audit their configuration and update their code on AMI sources (Terraform, AWS CLI, Python Boto3, and Go AWS SDK) for safe AMI retrieval.
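For the Terraform part of such an audit, a simple text scan can give a first impression. The sketch below is a heuristic, not a Terraform parser: `find_risky_ami_lookups` and its helpers are hypothetical names, and the check only looks for `aws_ami` data sources that set `most_recent = true` without an `owners` argument. It does not recognize owners expressed through a `filter` block, so treat its output as a list of places to review by hand.

```python
import re

# Matches the opening line of an aws_ami data source and captures its label.
DATA_AMI_HEADER = re.compile(r'data\s+"aws_ami"\s+"([^"]+)"\s*\{')
MOST_RECENT_TRUE = re.compile(r"^\s*most_recent\s*=\s*true\b", re.MULTILINE)
OWNERS_SET = re.compile(r"^\s*owners\s*=", re.MULTILINE)


def block_body(text, open_brace_index):
    """Return the text between the brace at open_brace_index and its match."""
    depth = 0
    for index in range(open_brace_index, len(text)):
        if text[index] == "{":
            depth += 1
        elif text[index] == "}":
            depth -= 1
            if depth == 0:
                return text[open_brace_index + 1:index]
    # Unbalanced braces: fall back to the rest of the file.
    return text[open_brace_index + 1:]


def find_risky_ami_lookups(terraform_source):
    """List labels of aws_ami data sources using most_recent without owners."""
    risky = []
    for header in DATA_AMI_HEADER.finditer(terraform_source):
        # The header pattern ends with "{", so end() - 1 is the brace itself.
        body = block_body(terraform_source, header.end() - 1)
        if MOST_RECENT_TRUE.search(body) and not OWNERS_SET.search(body):
            risky.append(header.group(1))
    return risky
```

Calling `find_risky_ami_lookups` on the contents of each `.tf` file returns the labels of the data sources worth a closer look.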

To check if untrusted AMIs are currently in use, enable Audit Mode through ‘Allowed AMIs,’ and switch to ‘Enforcement Mode’ to block them.
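The difference between the two modes can be pictured with a toy model. This is not the AWS API: the `evaluate_launch` function, the mode strings, and the account IDs are hypothetical, and the sketch only assumes what is stated above, namely that audit mode reports untrusted AMIs while enforcement mode blocks them.

```python
def evaluate_launch(image_owner_id, allowed_owners, mode):
    """Toy model of an allow list check; returns what would happen to a launch."""
    trusted = image_owner_id in allowed_owners
    if mode == "audit":
        # Audit: nothing is blocked, untrusted images are only flagged.
        return {"launch_permitted": True, "flagged": not trusted}
    if mode == "enforce":
        # Enforcement: images from owners outside the allow list are blocked.
        return {"launch_permitted": trusted, "flagged": not trusted}
    raise ValueError(f"unknown mode: {mode!r}")


ALLOWED_OWNERS = {"111111111111"}  # hypothetical trusted account
print(evaluate_launch("999999999999", ALLOWED_OWNERS, "audit"))
print(evaluate_launch("999999999999", ALLOWED_OWNERS, "enforce"))
```

Running in audit mode first shows which workloads would break before enforcement is switched on.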

Datadog has also released a scanner to check AWS accounts for instances created from untrusted AMIs, available in this GitHub repository.