Think about that you just reverse engineered a chunk of malware in painstaking element solely to seek out that the malware creator created a barely modified model of the malware the following day. You would not need to redo all of your onerous work. One technique to keep away from beginning over from scratch is to make use of code comparability strategies to attempt to determine pairs of features within the outdated and new model which might be “the identical.” (I’ve used quotes as a result of “similar” on this occasion is a little bit of a nebulous idea, as we’ll see).

A number of instruments can assist in such conditions. A very fashionable industrial software is zynamics’ BinDiff, which is now owned by Google and is free. The SEI’s Pharos binary evaluation framework additionally features a code comparability utility referred to as fn2hash. On this SEI Weblog publish, the primary in a two-part collection on hashing, I first clarify the challenges of code comparability and current our resolution to the issue. I then introduce fuzzy hashing, a brand new sort of hashing algorithm that may carry out inexact matching. Lastly, I evaluate the efficiency of fn2hash and a fuzzy hashing algorithm utilizing quite a lot of experiments.

Background

Precise Hashing

fn2hash employs a number of kinds of hashing. Essentially the most generally used is known as place unbiased code (PIC) hashing. To see why PIC hashing is necessary, we’ll begin by taking a look at a naive precursor to PIC hashing: merely hashing the instruction bytes of a operate. We’ll name this actual hashing.

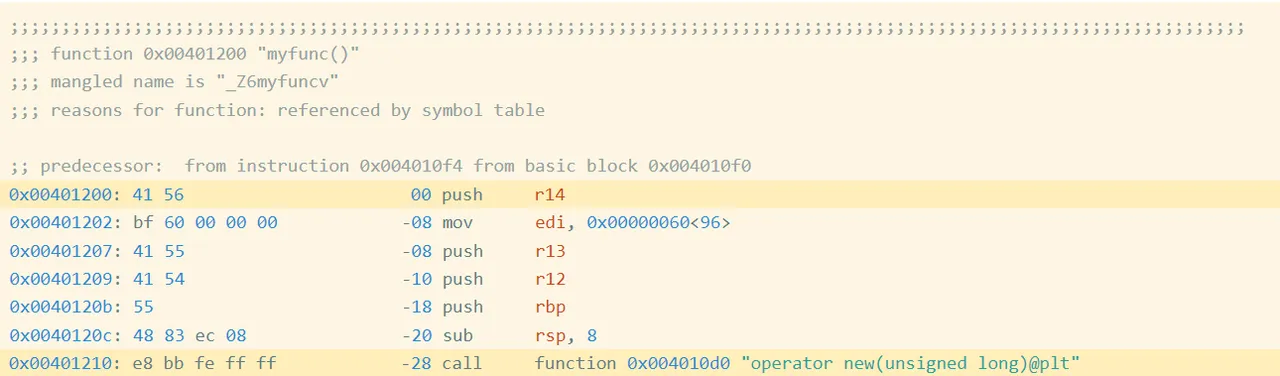

For instance, I compiled this straightforward program oo.cpp with g++. Determine 1 reveals the start of the meeting code for the operate myfunc (full code):

Determine 1: Meeting Code and Bytes from oo.gcc

Precise Bytes

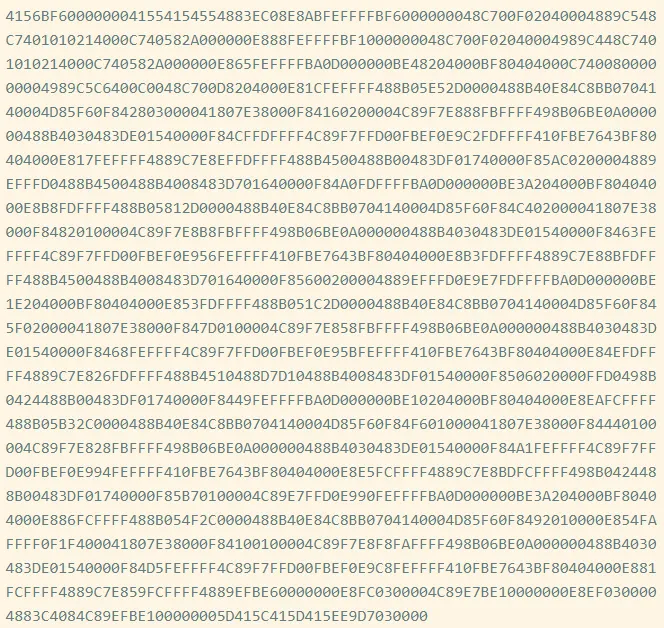

Within the first highlighted line of Determine 1, you may see that the primary instruction is a push r14, which is encoded by the hexadecimal instruction bytes 41 56. If we accumulate the encoded instruction bytes for each instruction within the operate, we get the next (Determine 2):

Determine 2: Precise Bytes in oo.gcc

We name this sequence the actual bytes of the operate. We will hash these bytes to get an actual hash, 62CE2E852A685A8971AF291244A1283A.

Shortcomings of Precise Hashing

The highlighted name at deal with 0x401210 is a relative name, which implies that the goal is specified as an offset from the present instruction (technically, the following instruction). For those who have a look at the instruction bytes for this instruction, it consists of the bytes bb fe ff ff, which is 0xfffffebb in little endian type. Interpreted as a signed integer worth, that is -325. If we take the deal with of the following instruction (0x401210 + 5 == 0x401215) after which add -325 to it, we get 0x4010d0, which is the deal with of operator new, the goal of the decision. Now we all know that bb fe ff ff is an offset from the following instruction. Such offsets are referred to as relative offsets as a result of they’re relative to the deal with of the following instruction.

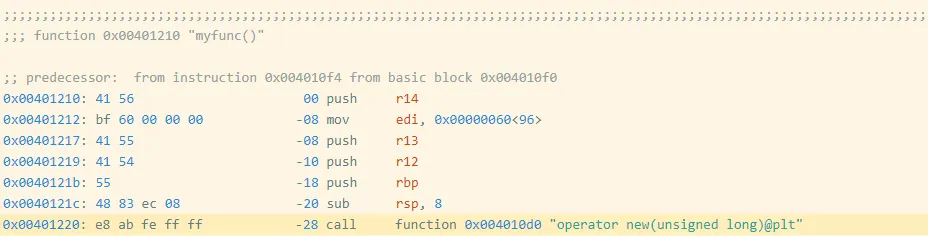

I created a barely modified program (oo2.gcc) by including an empty, unused operate earlier than myfunc (Determine 3). You’ll find the disassembly of myfunc for this executable right here.

If we take the precise hash of myfunc on this new executable, we get 05718F65D9AA5176682C6C2D5404CA8D. You’ll discover that is completely different from the hash for myfunc within the first executable, 62CE2E852A685A8971AF291244A1283A. What occurred? Let us take a look at the disassembly.

Determine 3: Meeting Code and Bytes from oo2.gcc

Discover that myfunc moved from 0x401200 to 0x401210, which additionally moved the deal with of the decision instruction from 0x401210 to 0x401220. The decision goal is specified as an offset from the (subsequent) instruction’s deal with, which modified by 0x10 == 16, so the offset bytes for the decision modified from bb fe ff ff (-325) to ab fe ff ff (-341 == -325 – 16). These modifications modify the precise bytes as proven in Determine 4.

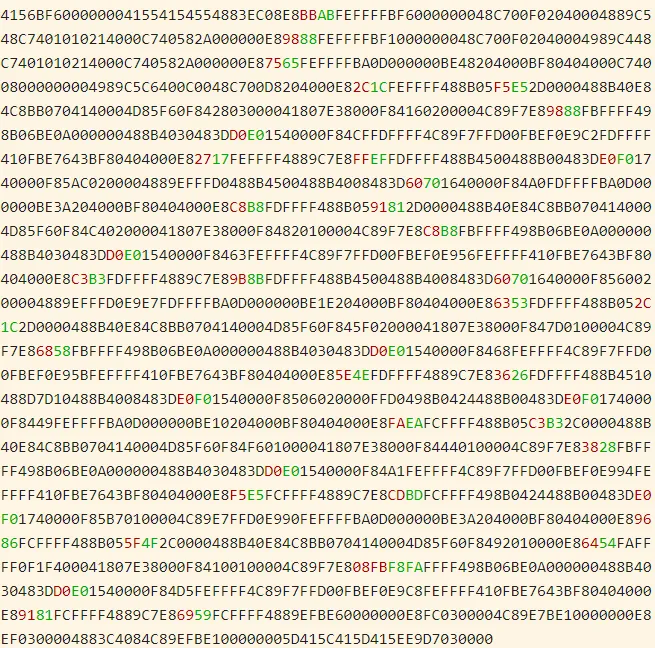

Determine 4: Precise Bytes in oo2.gcc

Determine 5 presents a visible comparability. Purple represents bytes which might be solely in oo.gcc, and inexperienced represents bytes in oo2.gcc. The variations are small as a result of the offset is just altering by 0x10, however this is sufficient to break actual hashing.

Determine 5: Distinction Between Precise Bytes in oo.gcc and oo2.gcc

PIC Hashing

The aim of PIC hashing is to compute a hash or signature of code in a method that preserves the hash when relocating the code. This requirement is necessary as a result of, as we simply noticed, modifying a program typically ends in small modifications to addresses and offsets, and we do not need these modifications to switch the hash. The instinct behind PIC hashing is very straight-forward: Establish offsets and addresses which might be prone to change if this system is recompiled, equivalent to bb fe ff ff, and easily set them to zero earlier than hashing the bytes. That method, if they modify as a result of the operate is relocated, the operate’s PIC hash will not change.

The next visible diff (Determine 6) reveals the variations between the precise bytes and the PIC bytes on myfunc in oo.gcc. Purple represents bytes which might be solely within the PIC bytes, and inexperienced represents the precise bytes. As anticipated, the primary change we are able to see is the byte sequence bb fe ff ff, which is modified to zeros.

Determine 6: Byte Distinction Between PIC Bytes (Purple) and Precise Bytes (Inexperienced)

If we hash the PIC bytes, we get the PIC hash EA4256ECB85EDCF3F1515EACFA734E17. And, as we might hope, we get the similar PIC hash for myfunc within the barely modified oo2.gcc.

Evaluating the Accuracy of PIC Hashing

The first motivation behind PIC hashing is to detect an identical code that’s moved to a unique location. However what if two items of code are compiled with completely different compilers or completely different compiler flags? What if two features are very related, however one has a line of code eliminated? These modifications would modify the non-offset bytes which might be used within the PIC hash, so it might change the PIC hash of the code. Since we all know that PIC hashing is not going to all the time work, on this part we focus on find out how to measure the efficiency of PIC hashing and evaluate it to different code comparability strategies.

Earlier than we are able to outline the accuracy of any code comparability method, we want some floor reality that tells us which features are equal. For this weblog publish, we use compiler debug symbols to map operate addresses to their names. Doing so supplies us with a floor reality set of features and their names. Additionally, for the needs of this weblog publish, we assume that if two features have the identical title, they’re “the identical.” (This, clearly, just isn’t true generally!)

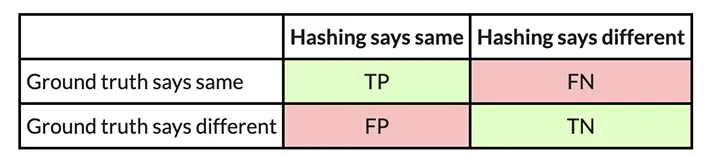

Confusion Matrices

So, for instance now we have two related executables, and we need to consider how effectively PIC hashing can determine equal features throughout each executables. We begin by contemplating all doable pairs of features, the place every pair accommodates a operate from every executable. This method is known as the Cartesian product between the features within the first executable and the features within the second executable. For every operate pair, we use the bottom reality to find out whether or not the features are the identical by seeing if they’ve the identical title. We then use PIC hashing to foretell whether or not the features are the identical by computing their hashes and seeing if they’re an identical. There are two outcomes for every willpower, so there are 4 potentialities in whole:

- True optimistic (TP): PIC hashing accurately predicted the features are equal.

- True unfavourable (TN): PIC hashing accurately predicted the features are completely different.

- False optimistic (FP): PIC hashing incorrectly predicted the features are equal, however they don’t seem to be.

- False unfavourable (FN): PIC hashing incorrectly predicted the features are completely different, however they’re equal.

To make it somewhat simpler to interpret, we coloration the great outcomes inexperienced and the dangerous outcomes pink. We will signify these in what is known as a confusion matrix:

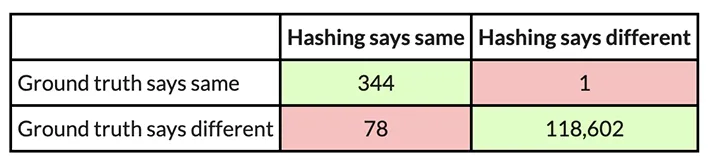

For instance, here’s a confusion matrix from an experiment through which I exploit PIC hashing to check openssl variations 1.1.1w and 1.1.1v when they’re each compiled in the identical method. These two variations of openssl are fairly related, so we might anticipate that PIC hashing would do effectively as a result of lots of features will likely be an identical however shifted to completely different addresses. And, certainly, it does:

Metrics: Accuracy, Precision, and Recall

So, when does PIC hashing work effectively and when does it not? To reply these questions, we’ll want a better technique to consider the standard of a confusion matrix as a single quantity. At first look, accuracy looks like essentially the most pure metric, which tells us: What number of pairs did hashing predict accurately? This is the same as

For the above instance, PIC hashing achieved an accuracy of

You may assume 99.9 % is fairly good, however if you happen to look carefully, there’s a delicate drawback: Most operate pairs are not equal. Based on the bottom reality, there are TP + FN = 344 + 1 = 345 equal operate pairs, and TN + FP = 118,602 + 78 = 118,680 nonequivalent operate pairs. So, if we simply guessed that every one operate pairs had been nonequivalent, we might nonetheless be proper 118680 / (118680 + 345) = 99.9 % of the time. Since accuracy weights all operate pairs equally, it isn’t essentially the most applicable metric.

As an alternative, we wish a metric that emphasizes optimistic outcomes, which on this case are equal operate pairs. This result’s per our aim in reverse engineering, as a result of understanding that two features are equal permits a reverse engineer to switch data from one executable to a different and save time.

Three metrics that focus extra on optimistic instances (i.e., equal features) are precision, recall, and F1 rating:

- Precision: Of the operate pairs hashing declared equal, what number of had been truly equal? This is the same as TP / (TP + FP).

- Recall: Of the equal operate pairs, what number of did hashing accurately declare as equal? This is the same as TP / (TP + FN).

- F1 rating: This can be a single metric that displays each the precision and recall. Particularly, it’s the harmonic imply of the precision and recall, or (2 ∗ Recall ∗ Precision) / (Recall + Precision). In comparison with the arithmetic imply, the harmonic imply is extra delicate to low values. Which means that if both the precision or recall is low, the F1 rating can even be low.

So, wanting on the instance above, we are able to compute the precision, recall, and F1 rating. The precision is 344 / (344 + 78) = 0.81, the recall is 344 / (344 + 1) = 0.997, and the F1 rating is 2 ∗ 0.81 ∗ 0.997 / (0.81 + 0.997) = 0.89. PIC hashing is ready to determine 81 % of equal operate pairs, and when it does declare a pair is equal it’s appropriate 99.7 % of the time. This corresponds to an F1 rating of 0.89 out of 1.0, which is fairly good.

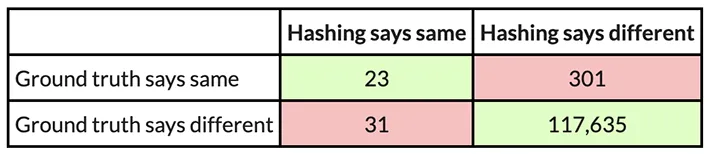

Now, you is perhaps questioning how effectively PIC hashing performs when there are extra substantial variations between executables. Let’s have a look at one other experiment. On this one, I evaluate an openssl executable compiled with GCC to at least one compiled with Clang. As a result of GCC and Clang generate meeting code in another way, we might anticipate there to be much more variations.

Here’s a confusion matrix from this experiment:

On this instance, PIC hashing achieved a recall of 23 / (23 + 301) = 0.07, and a precision of 23 / (23 + 31) = 0.43. So, PIC hashing can solely determine 7 % of equal operate pairs, however when it does declare a pair is equal it’s appropriate 43 % of the time. This corresponds to an F1 rating of 0.12 out of 1.0, which is fairly dangerous. Think about that you just spent hours reverse engineering the 324 features in one of many executables solely to seek out that PIC hashing was solely in a position to determine 23 of them within the different executable. So, you’ll be compelled to needlessly reverse engineer the opposite features from scratch. Can we do higher?

The Nice Fuzzy Hashing Debate

Within the subsequent publish on this collection, we are going to introduce a really completely different sort of hashing referred to as fuzzy hashing, and discover whether or not it might probably yield higher efficiency than PIC hashing alone. As with PIC hashing, fuzzy hashing reads a sequence of bytes and produces a hash. In contrast to PIC hashing, nonetheless, whenever you evaluate two fuzzy hashes, you might be given a similarity worth between 0.0 and 1.0, the place 0.0 means fully dissimilar, and 1.0 means fully related. We’ll current the outcomes of a number of experiments that pit PIC hashing and a preferred fuzzy hashing algorithm towards one another and look at their strengths and weaknesses within the context of malware reverse-engineering.