AI agents are designed to act autonomously, solving problems and executing tasks in dynamic environments. A key feature in AutoGen that enables this adaptability is its code executors. Combined with LLMs, code executors let AI agents generate, evaluate, and execute code in real time. This capability bridges the gap between static AI models and actionable intelligence. By automating workflows, performing data analysis, and debugging complex systems, it turns agents from mere thinkers into effective doers. In this article, we will learn more about code executors in AutoGen and how to implement them.

Types of Code Executors in AutoGen

AutoGen has three types of code executors that can be used for different purposes.

- Command Line Executor: It allows AI agents to run code on the command line. It saves each code block to a separate file and executes that file. This executor is ideal for automating tasks like file management, script execution, or working with external tools. It provides flexibility and low-level control in a workflow.

- Jupyter Code Executor: It allows agents to execute Python code in a Jupyter-like environment. Here, you can define variables in one code block and reuse them in subsequent blocks. One advantage of this setup is that when an error occurs, only the code block with the error needs to be re-executed, rather than the entire script.

- Custom Code Executor: It gives developers the ability to create specialized code execution logic. For example, a custom code executor can access variables defined in the environment without explicitly providing them to the LLM.

The command line and Jupyter code executors can run either on the host machine (locally) or in Docker containers.
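To make the first bullet concrete, here is a simplified, standard-library sketch of command-line-style execution. Every block is written to its own file and runs in a fresh Python process, so a variable defined in one block does not exist in the next. The function name and the file naming are our own assumptions. This is not AutoGen's LocalCommandLineCodeExecutor.

```python
import subprocess
import sys
import tempfile
from pathlib import Path


def run_blocks_as_files(code_blocks: list[str], timeout: int = 15) -> tuple[int, str]:
    """Hypothetical stand-in for a command-line executor (not AutoGen's class).

    Each block is saved to its own .py file and run in a fresh Python process,
    so variables never carry over between blocks. Execution stops at the first
    block that exits with a non-zero code. A block that runs longer than
    `timeout` seconds raises subprocess.TimeoutExpired.
    """
    output = ""
    exit_code = 0
    with tempfile.TemporaryDirectory() as work_dir:
        for i, code in enumerate(code_blocks):
            script = Path(work_dir) / f"block_{i}.py"
            script.write_text(code)
            proc = subprocess.run(
                [sys.executable, script.name],
                cwd=work_dir,
                capture_output=True,
                text=True,
                timeout=timeout,
            )
            output += proc.stdout + proc.stderr
            exit_code = proc.returncode
            if exit_code != 0:
                break  # do not run later blocks after a failure
    return exit_code, output
```

Calling it on `["x = 3\nprint(x)", "print(x)"]` prints 3 from the first process. The second process then fails with a NameError, which is the limitation that the Jupyter executor removes.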


How to Build AI Agents with Code Executors in AutoGen?

Now let's learn how to use these different code executors in AutoGen:

Prerequisites

Before building AI agents, make sure you have the necessary API keys for the LLMs you plan to use.

Load the .env file with the required API keys.

from dotenv import load_dotenv

load_dotenv("./.env")  # load the API keys from the .env file into environment variables

Key Libraries Required

- autogen-agentchat==0.2.38

- jupyter_kernel_gateway==3.0.1
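The examples below were written against these specific versions, so it can help to confirm what is installed before running them. Here is a small standard-library check that does this. The helper name `check_versions` is our own.

```python
from importlib.metadata import PackageNotFoundError, version
from typing import Dict, Optional, Tuple

# Version pins taken from the list above.
REQUIRED = {
    "autogen-agentchat": "0.2.38",
    "jupyter_kernel_gateway": "3.0.1",
}


def check_versions(required: Dict[str, str]) -> Dict[str, Tuple[str, Optional[str]]]:
    """Map each distribution name to (expected version, installed version or None)."""
    report = {}
    for name, expected in required.items():
        try:
            installed = version(name)
        except PackageNotFoundError:
            installed = None  # not installed in this environment
        report[name] = (expected, installed)
    return report


if __name__ == "__main__":
    for pkg, (expected, installed) in check_versions(REQUIRED).items():
        flag = "ok" if installed == expected else "mismatch"
        print(f"{pkg}: expected {expected}, installed {installed} ({flag})")
```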

Building an AI Agent Using the Command Line Executor

Let's build an AI agent that finds the offers and discounts available on an e-commerce website using the command line executor. Here are the steps to follow.

1. Import the necessary libraries.

from autogen import ConversableAgent, AssistantAgent, UserProxyAgent

from autogen.coding import LocalCommandLineCodeExecutor, DockerCommandLineCodeExecutor

2. Define the agents.

user_proxy = UserProxyAgent(
    name="User",
    llm_config=False,
    is_termination_msg=lambda msg: msg.get("content") is not None and "TERMINATE" in msg["content"],
    human_input_mode="TERMINATE",
    code_execution_config=False,
)

code_writer_agent = ConversableAgent(
    name="CodeWriter",
    system_message="""You are a Python developer.
You use your coding skill to solve problems.
Once the task is done, return 'TERMINATE'.""",
    llm_config={"config_list": [{"model": "gpt-4o-mini"}]},
)

# Run each code block from a file in ./code_files, with a 15-second timeout.
local_executor = LocalCommandLineCodeExecutor(
    timeout=15,
    work_dir="./code_files",
)

local_executor_agent = ConversableAgent(
    "local_executor_agent",
    llm_config=False,
    code_execution_config={"executor": local_executor},
    human_input_mode="ALWAYS",
)

We pass local_executor in the code_execution_config of local_executor_agent, so this agent runs the code that the CodeWriter agent writes.
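The is_termination_msg callback is called with each received message as a dictionary and should return True to end the conversation. The sketch below writes out the check above as a named function, alongside the case-insensitive check used later for the CodeExecutor agent. The function names are ours, for illustration.

```python
from typing import Any, Dict


def is_termination(msg: Dict[str, Any]) -> bool:
    """Same test as the user_proxy lambda: content must exist and contain TERMINATE."""
    content = msg.get("content")
    return content is not None and "TERMINATE" in content


def is_termination_ci(msg: Dict[str, Any]) -> bool:
    """Case-insensitive variant; a missing or None content counts as empty text."""
    return "TERMINATE" in (msg.get("content") or "").upper()


# Hypothetical messages in the dictionary shape the callback receives.
print(is_termination({"content": "All offers listed. TERMINATE"}))  # True
print(is_termination({"content": None}))                             # False
print(is_termination_ci({"content": "done, terminate"}))            # True
```

Guarding against a None content matters because not every message in a chat necessarily carries text.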

3. Define the messages used to initialize the chat.

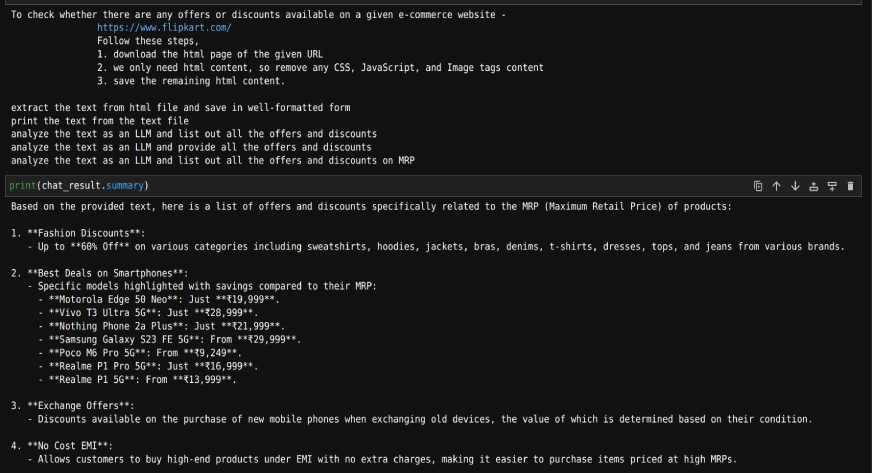

messages = ["""To check whether there are any offers or discounts available on a given e-commerce website -
https://www.flipkart.com/
Follow these steps,
1. download the html page of the given URL
2. we only need html content, so remove any CSS, JavaScript, and Image tags content
3. save the remaining html content.
""",
"read the text and list all the offers and discounts available"]

# Initialize the chat
chat_result = local_executor_agent.initiate_chat(
    code_writer_agent,
    message=messages[0],
)

The executor agent will ask for human input after each message from the CodeWriter agent. Press the Enter key to execute the code written by the agent. You can also type additional instructions if there is a problem with the code.
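As a point of reference, the script that the CodeWriter agent is asked to produce for step 2 of the task could look roughly like the standard-library sketch below. It is our own illustration, not the agent's actual output. It drops the contents of script and style elements, skips img tags, and keeps the remaining markup. Downloading the page (step 1) is left out so that the example runs offline.

```python
from html.parser import HTMLParser

DROP_WITH_CONTENT = {"script", "style"}  # JavaScript and inline CSS
DROP_TAG_ONLY = {"img"}                   # image tags (void elements)


class HTMLCleaner(HTMLParser):
    """Re-emit HTML while skipping <script>/<style> contents and <img> tags."""

    def __init__(self) -> None:
        super().__init__()  # convert_charrefs=True: entities like &amp; are decoded
        self.parts: list[str] = []
        self._skip_depth = 0  # > 0 while inside <script> or <style>

    def handle_starttag(self, tag, attrs):
        if tag in DROP_WITH_CONTENT:
            self._skip_depth += 1
        elif tag not in DROP_TAG_ONLY and self._skip_depth == 0:
            self.parts.append(self.get_starttag_text())

    def handle_startendtag(self, tag, attrs):
        # Self-closing tags such as <img ... /> or <br/>.
        if tag not in DROP_TAG_ONLY and self._skip_depth == 0:
            self.parts.append(self.get_starttag_text())

    def handle_endtag(self, tag):
        if tag in DROP_WITH_CONTENT:
            self._skip_depth = max(0, self._skip_depth - 1)
        elif tag not in DROP_TAG_ONLY and self._skip_depth == 0:
            self.parts.append(f"</{tag}>")

    def handle_data(self, data):
        if self._skip_depth == 0:
            self.parts.append(data)


def clean_html(html: str) -> str:
    """Return the HTML without CSS, JavaScript, or image tags (comments and doctype are dropped too)."""
    cleaner = HTMLCleaner()
    cleaner.feed(html)
    cleaner.close()
    return "".join(cleaner.parts)
```

Writing `clean_html(page)` to a file would cover steps 2 and 3. In the article, however, the agent writes and runs its own version of this code.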

Here are the questions we asked and the final output.

As we can see, these questions are enough to get a list of the offers and discounts from an e-commerce website.


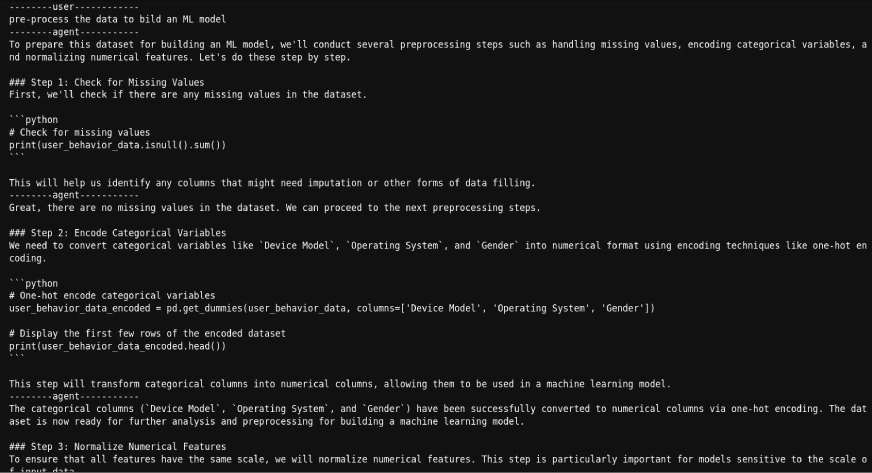

Building an ML Model Using the Jupyter Code Executor

With this executor, variables defined in one code block can be accessed from another code block, unlike with the command line executor.
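The stateful behaviour can be illustrated with a shared namespace. In the minimal sketch below (our own class, not AutoGen's JupyterCodeExecutor), every block runs against one dictionary, so names defined earlier stay visible, much like cells in a single kernel.

```python
import contextlib
import io
import traceback
from typing import Any, Dict, List, Tuple


class StatefulExecutor:
    """Hypothetical stand-in for a Jupyter-style executor.

    All blocks share one namespace, so a variable defined in an earlier block
    can be used in a later one. Code runs in-process: only for trusted code.
    """

    def __init__(self) -> None:
        self.namespace: Dict[str, Any] = {}

    def run(self, code_blocks: List[str]) -> Tuple[int, str]:
        buffer = io.StringIO()
        exit_code = 0
        for code in code_blocks:
            try:
                with contextlib.redirect_stdout(buffer):
                    exec(code, self.namespace)
            except Exception:
                buffer.write(traceback.format_exc())
                exit_code = 1
                break  # stop at the first failing block
        return exit_code, buffer.getvalue()


executor = StatefulExecutor()
executor.run(["x = 3"])
print(executor.run(["print(x * 2)"]))  # (0, '6\n')
```

Unlike the file-per-block approach, the second call sees `x` because both calls share `executor.namespace`.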

Now, let's try to build an ML model using it.

1. Import the additional classes.

from autogen.coding.jupyter import LocalJupyterServer, DockerJupyterServer, JupyterCodeExecutor
from pathlib import Path

2. Initialize the Jupyter server and output directory.

server = LocalJupyterServer()
output_dir = Path("coding")
output_dir.mkdir(exist_ok=True)  # don't fail if the directory already exists

Note that LocalJupyterServer may not work on Windows due to a bug. In that case, you can use DockerJupyterServer instead, or use EmbeddedIPythonCodeExecutor.

3. Define the executor agent and the writer agent with a custom system message.

jupyter_executor_agent = ConversableAgent(
    name="jupyter_executor_agent",
    llm_config=False,
    code_execution_config={
        "executor": JupyterCodeExecutor(server, output_dir=output_dir),
    },
    human_input_mode="ALWAYS",
)

code_writer_system_message = """
You have been given coding capability to solve tasks using Python code in a stateful IPython kernel.
You are responsible for writing the code, and the user is responsible for executing the code.
When you write Python code, put the code in a markdown code block with the language set to Python.
For example:
```python
x = 3
```
You can use the variable `x` in subsequent code blocks.
```python
print(x)
```
Always use print statements for the output of the code.
Write code incrementally and leverage the statefulness of the kernel to avoid repeating code.
Import libraries in a separate code block.
Define a function or a class in a separate code block.
Run code that produces output in a separate code block.
Run code that involves expensive operations like download, upload, and call external APIs in a separate code block.
When your code produces an output, the output will be returned to you.
Because you have limited conversation memory, if your code creates an image,
the output will be a path to the image instead of the image itself."""

code_writer_agent = ConversableAgent(
    "code_writer",
    system_message=code_writer_system_message,
    llm_config={"config_list": [{"model": "gpt-4o"}]},
    human_input_mode="TERMINATE",
)
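The system message asks the model to wrap every snippet in a Python Markdown fence, because the executor extracts code from those fences before running it. The pattern below is a simplified regex illustration of that extraction step. It is our own sketch, not AutoGen's MarkdownCodeExtractor, which may handle more edge cases.

```python
import re
from typing import List, Tuple

FENCE = "`" * 3  # three backticks, built at runtime so this snippet can sit inside a Markdown fence

# An opening fence with an optional language tag, then everything up to the next fence.
CODE_BLOCK_RE = re.compile(FENCE + r"[ \t]*([\w+-]*)[ \t]*\n(.*?)" + FENCE, re.DOTALL)


def extract_code_blocks(message: str) -> List[Tuple[str, str]]:
    """Return (language, code) pairs for each fenced block, in order of appearance."""
    return CODE_BLOCK_RE.findall(message)


# A hypothetical reply with two blocks, as the system message requests.
reply = f"First:\n{FENCE}python\nimport math\n{FENCE}\nThen:\n{FENCE}python\nprint(math.pi)\n{FENCE}\n"
for lang, code in extract_code_blocks(reply):
    print(lang, repr(code))
```

Keeping imports, definitions, and output-producing code in separate fences, as the system message instructs, means each extracted block can be run and retried on its own.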

4. Define the initial message and initialize the chat.

message = "read the datasets/user_behavior_dataset.csv and print what the data is about"

chat_result = jupyter_executor_agent.initiate_chat(
    code_writer_agent,
    message=message,
)

# Once the chat is completed, we can stop the server.
server.stop()

5. Once the chat is completed, we can print the messages as follows:

for chat in chat_result.chat_history[:]:
    if chat['name'] == 'code_writer' and 'TERMINATE' not in chat['content']:
        print("--------agent-----------")
        print(chat['content'])
    if chat['name'] == 'jupyter_executor_agent' and 'exitcode' not in chat['content']:
        print("--------user------------")
        print(chat['content'])

Here's a sample of the output.

As we can see, we get both the code generated by the agent and the results of executing it.
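Wrapping the printing loop in a function makes its filtering easier to test and reuse. In the sketch below, the helper name and the sample history are hypothetical; only the two filters come from the loop above.

```python
from typing import Any, Dict, List

AGENT_HEADER = "--------agent-----------"
USER_HEADER = "--------user------------"


def format_transcript(history: List[Dict[str, Any]], writer: str, executor: str) -> str:
    """Return the text the printing loop would produce.

    Messages from `writer` that contain TERMINATE are skipped, as are messages
    from `executor` whose content contains the word "exitcode".
    """
    lines: List[str] = []
    for chat in history:
        content = chat.get("content") or ""  # tolerate missing or None content
        if chat.get("name") == writer and "TERMINATE" not in content:
            lines += [AGENT_HEADER, content]
        if chat.get("name") == executor and "exitcode" not in content:
            lines += [USER_HEADER, content]
    return "\n".join(lines)


# Hypothetical chat history in the shape the loop above reads.
history = [
    {"name": "jupyter_executor_agent", "content": "read the data"},
    {"name": "code_writer", "content": "print(1)"},
    {"name": "jupyter_executor_agent", "content": "exitcode: 0 (execution succeeded)\nCode output: 1"},
    {"name": "code_writer", "content": "Done. TERMINATE"},
]
print(format_transcript(history, "code_writer", "jupyter_executor_agent"))
```

For the custom executor example, the same helper would be called with "CodeWriter" and "CodeExecutor" as the agent names.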


Building an AI Agent Using a Custom Executor

Now, let's create a custom executor that runs code in the same Jupyter notebook where we define the executor. This way, we can read a CSV file ourselves and then ask an agent to build an ML model on the already loaded data.

Here's how we'll do it.

1. Import the necessary libraries.

import pandas as pd
from typing import List
from IPython import get_ipython
from autogen.coding import CodeBlock, CodeExecutor, CodeExtractor, CodeResult, MarkdownCodeExtractor

2. Define the executor that will extract and run the code from Jupyter cells.

class NotebookExecutor(CodeExecutor):

    @property
    def code_extractor(self) -> CodeExtractor:
        # Extract code from markdown blocks.
        return MarkdownCodeExtractor()

    def __init__(self) -> None:
        # Get the current IPython instance running in this notebook.
        self._ipython = get_ipython()

    def execute_code_blocks(self, code_blocks: List[CodeBlock]) -> CodeResult:
        log = ""
        exitcode = 0  # also covers the case of an empty block list
        for code_block in code_blocks:
            # Run the block as a notebook cell, capturing stdout/stderr into `cap`.
            result = self._ipython.run_cell("%%capture --no-display cap\n" + code_block.code)
            log += self._ipython.ev("cap.stdout")
            log += self._ipython.ev("cap.stderr")
            if result.result is not None:
                log += str(result.result)
            exitcode = 0 if result.success else 1
            if result.error_before_exec is not None:
                log += f"\n{result.error_before_exec}"
                exitcode = 1
            if result.error_in_exec is not None:
                log += f"\n{result.error_in_exec}"
                exitcode = 1
            if exitcode != 0:
                break  # stop at the first failing block
        return CodeResult(exit_code=exitcode, output=log)

3. Define the agents.

code_writer_agent = ConversableAgent(
    name="CodeWriter",
    system_message="You are a helpful AI assistant.\n"
        "You use your coding skill to solve problems.\n"
        "You have access to an IPython kernel to execute Python code.\n"
        "You can suggest Python code in Markdown blocks; each block is a cell.\n"
        "The code blocks will be executed in the IPython kernel in the order you suggest them.\n"
        "All necessary libraries have already been installed.\n"
        "Add return or print statements to the code to get the output.\n"
        "Once the task is done, return 'TERMINATE'.",
    llm_config={"config_list": [{"model": "gpt-4o-mini"}]},
)

code_executor_agent = ConversableAgent(
    name="CodeExecutor",
    llm_config=False,
    code_execution_config={"executor": NotebookExecutor()},
    is_termination_msg=lambda msg: "TERMINATE" in (msg.get("content") or "").strip().upper(),
    human_input_mode="ALWAYS",
)

4. Read the file and initiate the chat about it.

df = pd.read_csv('datasets/mountains_vs_beaches_preferences.csv')

chat_result = code_executor_agent.initiate_chat(
    code_writer_agent,
    message="What are the column names in the dataframe defined above as df?",
)
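This executor is useful because agent code runs in the notebook's own namespace. The agent can therefore refer to df by name without the file contents being placed in the prompt; only what the code prints is returned to the agent. The sketch below reduces the idea to plain Python, with a dictionary standing in for the IPython user namespace. This is our own simplification; the class above goes through get_ipython().run_cell instead.

```python
import contextlib
import io
from typing import Any, Dict


def run_with_host_variables(code: str, namespace: Dict[str, Any]) -> str:
    """Run agent-written code against an existing namespace and return its stdout.

    In-process exec: only for trusted code. The dictionary plays the role of
    the notebook's user namespace that NotebookExecutor reaches through IPython.
    """
    buffer = io.StringIO()
    with contextlib.redirect_stdout(buffer):
        exec(code, namespace)
    return buffer.getvalue()


# Hypothetical host data: the LLM is told only that a variable named df exists.
host_namespace = {"df": {"columns": ["col_a", "col_b"]}}
print(run_with_host_variables("print(df['columns'])", host_namespace), end="")
```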

5. We can print the chat history as follows:

for chat in chat_result.chat_history[:]:
    if chat['name'] == 'CodeWriter' and 'TERMINATE' not in chat['content']:
        print("--------agent-----------")
        print(chat['content'])
    if chat['name'] == 'CodeExecutor' and 'exitcode' not in chat['content']:
        print("--------user------------")
        print(chat['content'])

As we can see again, we get both the code generated by the agent and the results of executing it.

Conclusion

AutoGen's code executors give AI agents the flexibility and functionality to perform real-world tasks. The command line executor enables script execution, while the Jupyter code executor supports iterative development. Custom executors, on the other hand, let developers create tailored workflows.

These tools help AI agents move from problem solvers to solution implementers. Developers can use these features to build intelligent systems that deliver actionable insights and automate complex processes.

Frequently Asked Questions

A. Code executors in AutoGen allow AI agents to generate, execute, and evaluate code in real time. This enables agents to automate tasks, perform data analysis, debug systems, and implement dynamic workflows.

A. The Command Line Executor saves and executes code as separate files, which makes it ideal for tasks like file management and script execution. The Jupyter Code Executor operates in a stateful environment, allowing variables to be reused and individual code blocks to be re-executed. This makes it more suitable for iterative coding tasks like building ML models.

A. Yes, both the Command Line Executor and the Jupyter Code Executor can be configured to run in Docker containers, providing a flexible execution environment.

A. Custom code executors allow developers to define specialized execution logic, such as running code within the same Jupyter notebook. This is useful for tasks that require a high level of integration or customization.

A. Before using code executors, make sure you have the necessary API keys for your preferred LLMs. You should also have the required libraries, such as `autogen-agentchat` and `jupyter_kernel_gateway`, installed in your environment.