NVIDIA has announced that its researchers have developed a new generative AI model capable of creating audio from text or audio prompts.

Fugatto, which is short for Foundational Generative Audio Transformer Opus 1, can create music from text prompts, add or remove instruments in existing audio, and even change the accent or emotion in a voice.

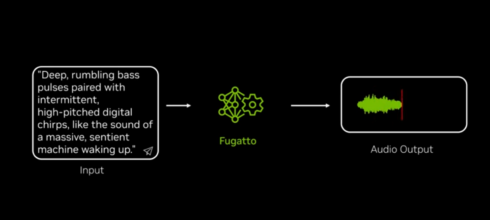

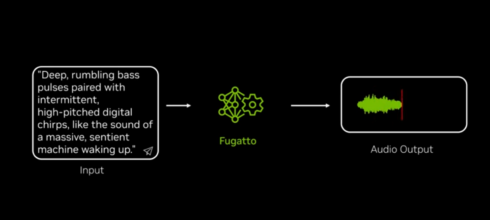

For instance, a promo video by NVIDIA shows a man prompting Fugatto to create “Deep, rumbling bass pulses paired with intermittent, high-pitched digital chirps, like the sound of an enormous, sentient machine waking up.” Another example supplied an audio clip of a person saying a short sentence and asked the model to change the tone from calm to angry.

According to NVIDIA, Fugatto builds on the research team’s previous work in areas such as speech modeling, audio vocoding, and audio understanding.

It was developed by a diverse group of researchers from around the world, including India, Brazil, China, Jordan, and South Korea, which NVIDIA says strengthens the model’s multi-accent and multilingual capabilities. According to the team, one of the hardest challenges in building Fugatto was “generating a blended dataset that contains millions of audio samples used for training.” To achieve this, the team used a strategy in which they generated data and instructions that expanded the range of tasks the model could perform. This improves performance and also lets the model take on new tasks without requiring additional data.

The team also meticulously studied existing datasets to try to uncover potential new relationships among the data.

According to NVIDIA, during inference the model uses a technique called ComposableART, which allows it to combine instructions that were only seen separately during training. For instance, a prompt could ask for an audio snippet spoken in a sad tone with a French accent.

“I wanted to let users combine attributes in a subjective or artistic way, selecting how much emphasis they put on each one,” said Rohan Badlani, one of the AI researchers who built Fugatto.
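NVIDIA’s announcement does not describe how ComposableART works internally. As a rough illustration of what “combining instructions with a chosen emphasis” can mean in a generative model, the sketch below uses composable classifier-free guidance, a known technique from diffusion-style models in which each instruction’s prediction is compared with an unconditional prediction and the differences are added back with per-instruction weights. Treat this as an assumption, not as Fugatto’s actual method; the function name `compose_guidance` and the toy three-element “predictions” are hypothetical stand-ins for real model outputs.

```python
from typing import Dict, List, Sequence

Vector = List[float]


def compose_guidance(
    unconditional: Sequence[float],
    conditional: Dict[str, Sequence[float]],
    weights: Dict[str, float],
) -> Vector:
    """Hypothetical sketch of composable classifier-free guidance.

    Starts from the unconditional prediction and adds each instruction's
    offset from it, scaled by a user-chosen weight (the "emphasis").
    This is an assumed technique, not NVIDIA's published ComposableART code.
    """
    if set(conditional) != set(weights):
        raise ValueError("every instruction needs exactly one weight")
    result = list(unconditional)
    for name, pred in conditional.items():
        if len(pred) != len(unconditional):
            raise ValueError(f"prediction for {name!r} has the wrong length")
        w = weights[name]
        # Push the output toward this instruction by w times its offset.
        for i, (p, u) in enumerate(zip(pred, unconditional)):
            result[i] += w * (p - u)
    return result


if __name__ == "__main__":
    # Toy stand-ins for model outputs under each separately seen instruction.
    uncond = [0.0, 0.0, 0.0]
    preds = {"sad tone": [1.0, 0.0, 0.5], "French accent": [0.0, 2.0, 0.5]}
    # Emphasize the sad tone more than the accent.
    mix = compose_guidance(uncond, preds, {"sad tone": 1.5, "French accent": 0.5})
    print(mix)  # prints [1.5, 1.0, 1.0]
```

Raising one weight relative to the other shifts the output toward that attribute, which mirrors the kind of user-controlled balance Badlani describes; a weight of zero drops an instruction entirely.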

The model can also generate sounds that change over time, such as a thunderstorm moving through an area. It can even create soundscapes from sounds it never heard together during training, like a thunderstorm transitioning into birds singing in the morning.

“Fugatto is our first step toward a future where unsupervised multitask learning in audio synthesis and transformation emerges from data and model scale,” said Rafael Valle, manager of applied audio research at NVIDIA and another member of the research team that developed the model.