“A fast-moving technology field where new tools, technologies and platforms are introduced very frequently and where it is very hard to keep up with new trends.” I could be describing either the VR space or Data Engineering, but in fact this post is about the intersection of both.

Virtual Reality – The Next Frontier in Media

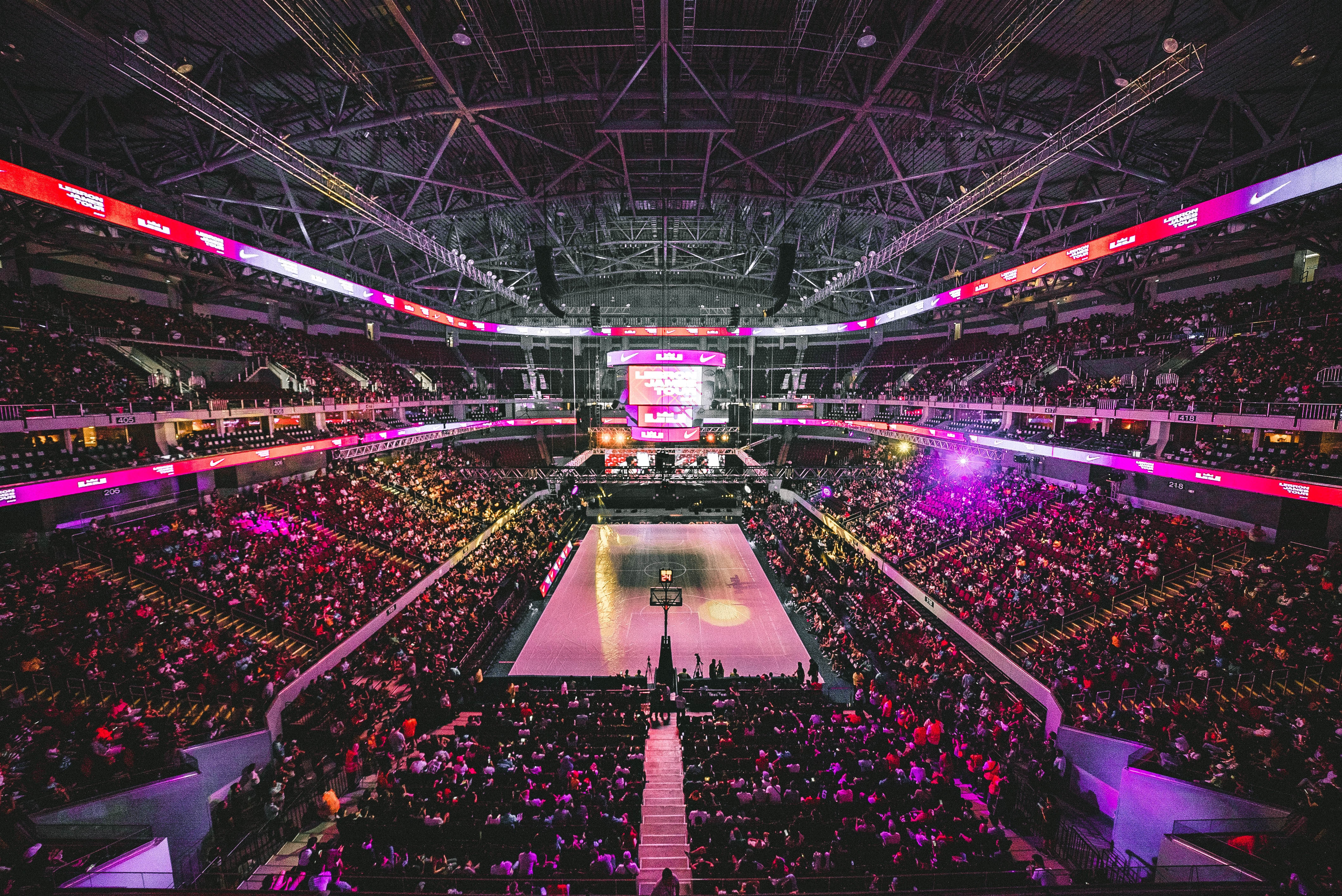

I work as a Data Engineer at a leading company in the VR space, with a mission to capture and transmit reality in perfect fidelity. Our content ranges from on-demand experiences to live events like NBA games, comedy shows and music concerts. The content is distributed both through our app, available for most of the VR headsets on the market, and via Oculus Venues.

From a content streaming perspective, our use case is not very different from any other streaming platform. We deliver video content over the Internet; users can open our app, browse through different channels and select which content they want to watch. But that is where the similarities end; from the moment users put their headsets on, we get their full attention. In a traditional streaming application, the content can be streaming on the device but there is no way to know if the user is actually paying attention or even looking at the device. In VR, we know exactly when a user is actively consuming content.

Streams of VR Event Data

One integral part of our immersive experience offering is live events. The main difference from traditional video-on-demand content is that these experiences are streamed live only during the event. For example, we stream live NBA games to most VR headsets on the market. Live events bring a different set of challenges in both the technical aspects (cameras, video compression, encoding) and the data they generate from user behavior.

Every user interaction in our app generates a user event that is sent to our servers: opening the app, scrolling through the content, selecting a specific piece of content to check its description and title, opening content and starting to watch, stopping content, fast-forwarding, exiting the app. Even while a user is watching content, the app generates a “beacon” event every few seconds. This raw data from the devices needs to be enriched with content metadata and geolocation information before it can be processed and analyzed.
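To make the geolocation part of that enrichment concrete, here is a minimal Python sketch. Everything specific in it is an assumption made for illustration: the event field names (`device_id`, `content_id`, `event_type`, `timestamp`, `ip`), the `geo` key, and the tiny in-memory `GEO_TABLE`, which stands in for whatever GeoIP database or service a real pipeline would use.

```python
import ipaddress
import json

# Hypothetical lookup table (network prefix -> location), using documentation
# address ranges. A real pipeline would query a GeoIP database instead.
GEO_TABLE = {
    ipaddress.ip_network("203.0.113.0/24"): {"country": "US", "region": "CA"},
    ipaddress.ip_network("198.51.100.0/24"): {"country": "ES", "region": "MD"},
}
UNKNOWN = {"country": "unknown", "region": "unknown"}


def lookup_geo(ip):
    """Return the location for an IP string, or UNKNOWN if it cannot be resolved."""
    if not ip:
        return UNKNOWN
    try:
        addr = ipaddress.ip_address(ip)
    except ValueError:
        # Messy input: keep the event, just mark the location as unknown.
        return UNKNOWN
    for network, location in GEO_TABLE.items():
        if addr in network:
            return location
    return UNKNOWN


def enrich(raw_event):
    """Attach geolocation to a raw JSON event, leaving all other fields untouched."""
    event = json.loads(raw_event)
    event["geo"] = lookup_geo(event.get("ip"))
    return json.dumps(event)


# Assumed shape of a beacon event; the field names are illustrative only.
raw = json.dumps({
    "device_id": "d-123",
    "content_id": "c-42",
    "event_type": "beacon",
    "timestamp": "2019-06-01T19:30:05Z",
    "ip": "203.0.113.7",
})
enriched = json.loads(enrich(raw))
print(enriched["geo"])
```

The function only adds a field and never drops or rewrites existing ones, so incomplete events still flow through to the next stage.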

VR is an immersive platform, so users cannot just look away when a specific piece of content is not interesting to them; they can either keep watching, switch to different content or, in the worst-case scenario, even remove their headsets. Knowing what content generates the most engaging behavior from users is critical for content generation and marketing purposes. For example, when a user enters our application, we want to know what drives their attention. Are they interested in a specific type of content, or just browsing the different experiences? Once they decide what they want to watch, do they stay in the content for the entire duration or do they just watch a few seconds? After watching a specific type of content (sports or comedy), do they keep watching the same kind of content? Are users from a specific geographic location more interested in a specific type of content? What about the market penetration of the different VR platforms?

From a data engineering perspective, this is a classic scenario of clickstream data, with a VR headset instead of a mouse. Large amounts of user behavior data are generated by the VR device, serialized in JSON format and routed to our backend systems, where the data is enriched, pre-processed and analyzed in both real time and batch. We want to know what is going on in our platform at this very moment, and we also want to know the trends and statistics from this week, last month or the current year, for example.

The Need for Operational Analytics

The clickstream data scenario has some well-defined patterns with proven options for data ingestion: streaming and messaging systems like Kafka and Pulsar, data routing and transformation with Apache NiFi, data processing with Spark, Flink or Kafka Streams. For the data analysis part, things are quite different.

There are several different options for storing and analyzing data, but our use case has very specific requirements: real-time, low-latency analytics with fast queries on data without a fixed schema, using SQL as the query language. Our traditional data warehouse solution gives us good results for our reporting analytics, but does not scale very well for real-time analytics. We need to get information and make decisions in real time: which content our users find more engaging, from what parts of the world they are watching, how long they stay in a specific piece of content, how they react to advertisements, A/B testing and more. All this information can help us drive an even more engaging platform for VR users.

A better explanation of our use case is given by Dhruba Borthakur in his six propositions of Operational Analytics:

- Complex queries

- Low data latency

- Low query latency

- High query volume

- Live sync with data sources

- Mixed types

Our queries for live dashboards and real-time analytics are very complex, involving joins, subqueries and aggregations. Since we need the information in real time, low data latency and low query latency are critical. We refer to this as operational analytics, and such a system must support all these requirements.
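As a rough idea of what such a query looks like, the sketch below combines a join, a subquery and an aggregation over a handful of events. It runs on an in-memory SQLite database purely so the example is self-contained; SQLite is a stand-in, not the operational analytics engine, and the table and column names (`events`, `content`, `country`, and so on) are assumptions made for illustration.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE events (device_id TEXT, content_id TEXT, event_type TEXT, country TEXT);
CREATE TABLE content (content_id TEXT PRIMARY KEY, title TEXT, content_type TEXT);

INSERT INTO content VALUES
  ('c1', 'Game Night', 'sports'),
  ('c2', 'Stand-up Hour', 'comedy');

INSERT INTO events VALUES
  ('d1', 'c1', 'beacon', 'US'),
  ('d1', 'c1', 'beacon', 'US'),
  ('d2', 'c1', 'beacon', 'ES'),
  ('d3', 'c2', 'beacon', 'US'),
  ('d3', 'c2', 'scroll', 'US');
""")

# Viewers and beacon counts per content type, restricted to devices that have
# been seen in the US: a join, a subquery and an aggregation in one statement.
QUERY = """
SELECT c.content_type,
       COUNT(DISTINCT e.device_id) AS viewers,
       COUNT(*)                    AS beacons
FROM events e
JOIN content c ON c.content_id = e.content_id
WHERE e.event_type = 'beacon'
  AND e.device_id IN (SELECT device_id FROM events WHERE country = 'US')
GROUP BY c.content_type
ORDER BY viewers DESC, c.content_type
"""

rows = conn.execute(QUERY).fetchall()
for content_type, viewers, beacons in rows:
    print(content_type, viewers, beacons)
```

A live dashboard would issue a statement of this shape every few seconds against continuously arriving data, which is why both data latency and query latency matter.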

Design for Human Efficiency

An additional challenge, one that most other small companies probably face as well, is the way data engineering and data analysis teams spend their time and resources. There are many awesome open-source projects in the data management market, especially databases and analytics engines, but as data engineers we want to work with data, not spend our time doing DevOps, installing clusters, setting up ZooKeeper and monitoring tens of VMs and Kubernetes clusters. The right balance between in-house development and managed services helps companies focus on revenue-generating tasks instead of maintaining infrastructure.

For small data engineering teams, there are several considerations when choosing the right platform for operational analytics:

- SQL support is a key factor for rapid development and democratization of the data. We don't have time to spend learning new APIs and building tools to extract data, and by exposing our data through SQL we enable our Data Analysts to build and run queries on live data.

- Most analytics engines require the data to be formatted and structured in a specific schema. Our data is unstructured and sometimes incomplete and messy. Introducing another layer of data cleansing, structuring and ingestion would add even more complexity to our pipelines.

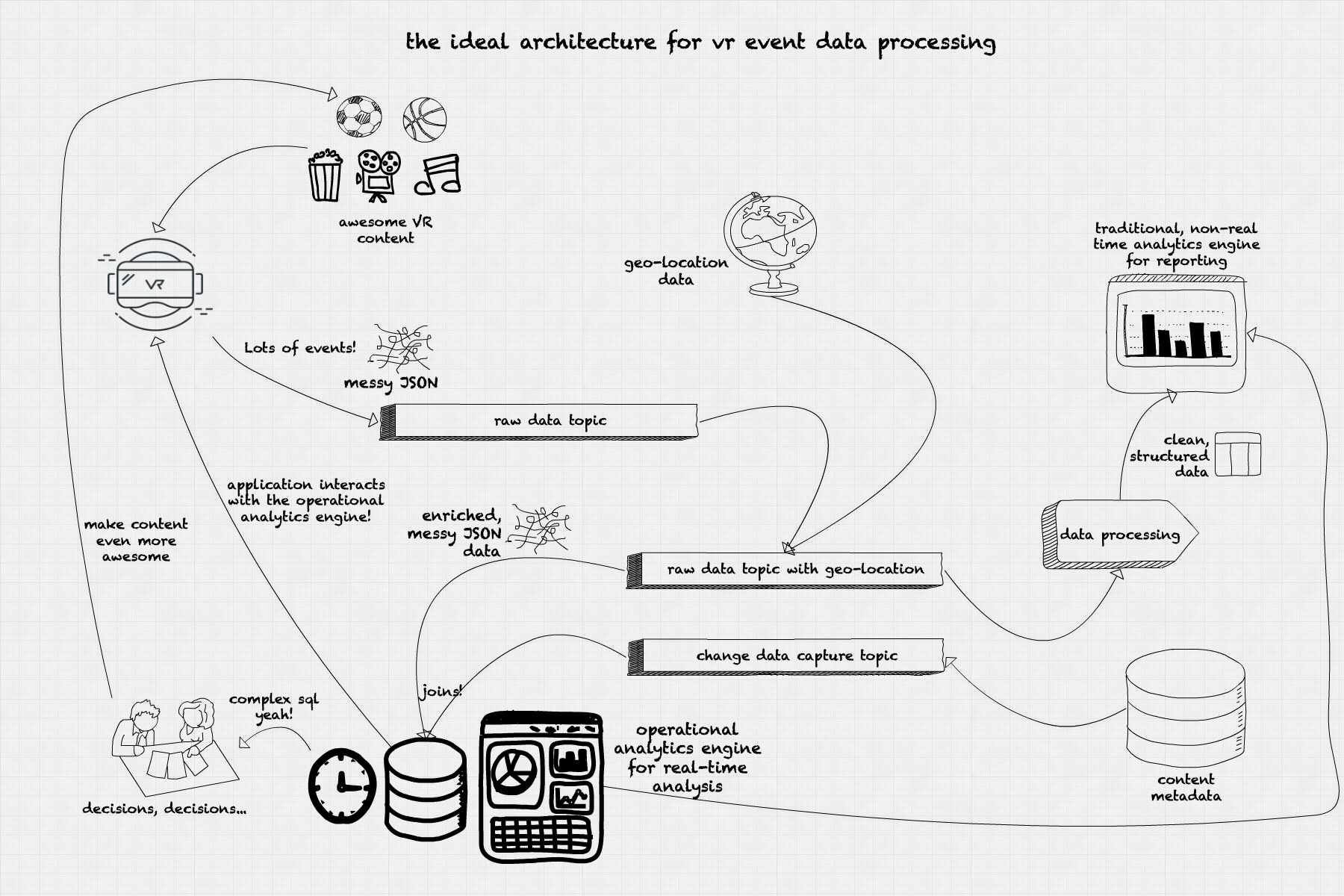

Our Ideal Architecture for Operational Analytics on VR Event Data

Data and Query Latency

How are our users reacting to specific content? Is this advertisement so invasive that users stop watching the content? Are users from a specific geography consuming more content today? What platforms are leading content consumption right now? All these questions can be answered by operational analytics. Good operational analytics would allow us to analyze the current trends in our platform and act accordingly, as in the following instances:

Is this content getting less traction in specific geographies? We can add a promotional banner to our app targeted at that specific geography.

Is this advertisement so invasive that it is causing users to stop watching our content? We can limit the appearance rate or change the size of the advertisement on the fly.

Is there a significant number of old devices accessing our platform for a specific piece of content? We can add content with lower definition to give those users a better experience.

These use cases have something in common: the need for a low-latency operational analytics engine. All these questions must be answered within a range from milliseconds to a few seconds.

Concurrency

In addition to this, our usage model requires multiple concurrent queries. Different strategic and operational areas need different answers. Marketing departments would be more interested in numbers of users per platform or region; engineering would want to know how a specific encoding affects the video quality for live events. Executives would want to see how many users are in our platform at a specific point in time during a live event, and content partners would be interested in the share of users consuming their content through our platform. All these queries must run concurrently, querying the data in different formats, creating different aggregations and supporting multiple real-time dashboards. Each role-based dashboard will present a different perspective on the same set of data: operational, strategic, marketing.

Real-Time Decision-Making and Live Dashboards

In order to get the data to the operational analytics system quickly, our ideal architecture would spend as little time as possible munging and cleaning data. The data comes from the devices in JSON format, with a few IDs identifying the device brand and model, the content being watched, the event timestamp, the event type (beacon event, scroll, clicks, app exit), and the originating IP. All data is anonymous and only identifies devices, not the people using them.

The event stream is ingested into our system through a publish/subscribe system (Kafka, Pulsar), into a specific topic for raw incoming data. The data comes with an IP address but with no location data. We run a quick data enrichment process that attaches geolocation data to each event and publishes it to another topic for enriched data. This fast, enrichment-only stage does not clean any data, since we want the data to be ingested quickly into the operational analytics engine. The enrichment can be performed using specialized tools like Apache NiFi or even stream processing frameworks like Spark, Flink or Kafka Streams. In this stage it is also possible to sessionize the event data using windowing with timeouts, establishing whether a specific user is still in the platform based on the frequency (or absence) of the beacon events.
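The sessionization idea can be sketched in a few lines of Python. This is a batch approximation of what a stream processor would do with session windows, not a streaming implementation, and the 30-second timeout, the `(device_id, epoch_seconds)` event shape and the function name `sessionize` are all assumptions chosen for the example.

```python
from collections import defaultdict

SESSION_TIMEOUT_S = 30  # assumed: a gap longer than this ends the session


def sessionize(events, timeout=SESSION_TIMEOUT_S):
    """Group (device_id, epoch_seconds) events into per-device sessions.

    A new session starts whenever the gap since the device's previous event
    exceeds `timeout`. Returns {device_id: [(start, end), ...]}.
    """
    sessions = defaultdict(list)
    # Sort by device, then by time, so each device's events arrive in order.
    for device_id, ts in sorted(events):
        device_sessions = sessions[device_id]
        if device_sessions and ts - device_sessions[-1][1] <= timeout:
            # Beacon arrived in time: extend the current session.
            start, _ = device_sessions[-1]
            device_sessions[-1] = (start, ts)
        else:
            # First event, or the beacons went quiet for too long.
            device_sessions.append((ts, ts))
    return dict(sessions)


# Device d1 sends beacons every 10 seconds, goes quiet, then comes back.
events = [("d1", 0), ("d1", 10), ("d1", 20), ("d1", 100), ("d1", 110), ("d2", 5)]
result = sessionize(events)
print(result)
```

Here the absence of beacons between seconds 20 and 100 splits device `d1` into two sessions, which is the signal used to decide that a user has left the platform.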

A second ingestion path comes from the content metadata database. The event data must be joined with the content metadata to convert IDs into meaningful information: content type, title, and duration. The decision to join the metadata in the operational analytics engine instead of during the data enrichment process comes from two factors: the need to process the events as fast as possible, and the need to relieve the metadata database of the constant point queries required to fetch the metadata for a specific piece of content. By using change data capture from the original content metadata database and replicating the data in the operational analytics engine, we achieve two goals: we maintain a separation between the operational and analytical operations in our system, and we can also use the operational analytics engine as a query endpoint for our APIs.
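The replication side of this can be illustrated with a small Python sketch that applies a stream of change records to a replica and then joins an event against it. The change-record shape (`op`, `key`, `row`) is invented for this example and does not follow the format of any particular change data capture tool; the function names `apply_change` and `describe` are likewise hypothetical.

```python
def apply_change(replica, change):
    """Apply one change record to an in-memory replica keyed by content ID.

    The record shape {"op", "key", "row"} is a made-up format for this sketch.
    """
    op, key = change["op"], change["key"]
    if op in ("insert", "update"):
        # Merge so that partial updates keep the columns they do not mention.
        replica[key] = {**replica.get(key, {}), **change["row"]}
    elif op == "delete":
        replica.pop(key, None)
    else:
        raise ValueError(f"unknown operation: {op!r}")


def describe(event, replica):
    """Join one event with the replicated metadata; unknown IDs get None."""
    meta = replica.get(event["content_id"], {})
    return {
        **event,
        "title": meta.get("title"),
        "content_type": meta.get("content_type"),
    }


changes = [
    {"op": "insert", "key": "c1",
     "row": {"title": "Game Night", "content_type": "sports", "duration_s": 7200}},
    {"op": "insert", "key": "c2",
     "row": {"title": "Stand-up Hour", "content_type": "comedy", "duration_s": 3600}},
    {"op": "update", "key": "c1", "row": {"title": "Game Night (Finals)"}},
    {"op": "delete", "key": "c2"},
]

replica = {}
for change in changes:
    apply_change(replica, change)

joined = describe({"content_id": "c1", "event_type": "beacon"}, replica)
print(joined["title"], joined["content_type"])
```

Because the join reads from the replica, the source metadata database only sees the change stream and never the per-event lookups.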

Once the data is loaded in the operational analytics engine, we use visualization tools like Tableau, Superset or Redash to create interactive, real-time dashboards. These dashboards are updated by querying the operational analytics engine using SQL and are refreshed every few seconds to help visualize the changes and trends in our live event stream data.

The insights obtained from real-time analytics help us decide how to make the viewing experience better for our users. We can decide what content to promote at a specific point in time, directed to specific users in specific regions using a specific headset model. We can determine what content is more engaging by inspecting the average session time for that content. We can include different visualizations in our app, perform A/B testing and get results in real time.

Conclusion

Operational analytics allows businesses to make decisions in real time, based on a current stream of events. This kind of continuous analytics is key to understanding user behavior in platforms like VR content streaming at a global scale, where decisions can be made in real time on information like user geolocation, headset maker and model, connection speed, and content engagement. An operational analytics engine offering low-latency writes and queries on raw JSON data, with a SQL interface and the ability to interact with our end-user API, presents an infinite number of possibilities for helping make our VR content even more awesome!