This blog post focuses on new features and improvements. For a comprehensive list, including bug fixes, please see the release notes.

Introducing the template to fine-tune Llama 3.1

Llama 3.1 is a collection of pre-trained and instruction-tuned large language models (LLMs) developed by Meta AI. It’s known for its open-source nature and impressive capabilities, such as being optimized for multilingual dialogue use cases, an extended context length of 128K, advanced tool usage, and improved reasoning capabilities.

It’s available in three model sizes:

- 405 billion parameters: The flagship foundation model designed to push the boundaries of AI capabilities.

- 70 billion parameters: A highly performant model that supports a wide range of use cases.

- 8 billion parameters: A lightweight, ultra-fast model that retains many of the advanced features of its larger counterparts, which makes it highly capable.

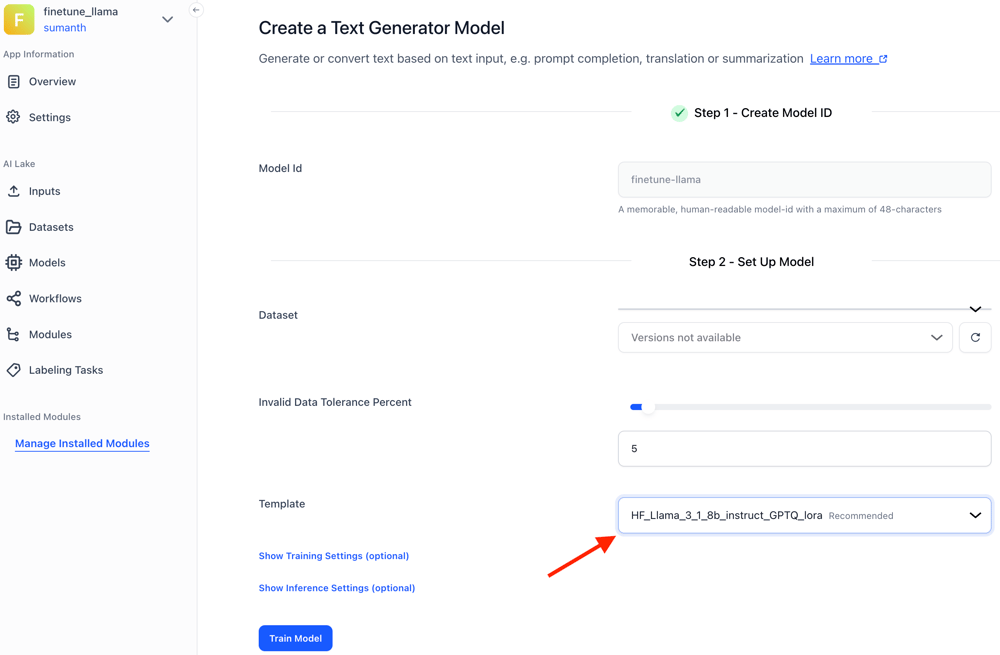

At Clarifai, we offer the 8 billion parameter version of Llama 3.1, which you can fine-tune using the Llama 3.1 training template within the Platform UI for extended context, instruction-following, or applications such as text generation and text classification. We converted it into the Hugging Face Transformers format to enhance its compatibility with our platform and pipelines, ease its consumption, and optimize its deployment in various environments.

To get the most out of the Llama 3.1 8B model, we also quantized it using the GPTQ quantization method. Additionally, we employed the LoRA (Low-Rank Adaptation) method to achieve efficient and fast fine-tuning of the pre-trained Llama 3.1 8B model.
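To see why LoRA makes fine-tuning cheap, here is a minimal pure-Python sketch of the general technique (not Clarifai’s training code). The pre-trained weight W stays frozen, and only two small matrices are trained: B (d × r) and A (r × k), so the layer computes h = W x + (alpha / r) · B A x. The 4096 × 4096 shape matches the hidden size of Llama 3.1 8B, but the rank r = 8 is an illustrative assumption, not the setting the template uses.

```python
from typing import List, Tuple

Matrix = List[List[float]]
Vector = List[float]


def lora_param_counts(d: int, k: int, r: int) -> Tuple[int, int]:
    """Return (full fine-tuning params, LoRA trainable params) for one d x k weight."""
    full = d * k        # full fine-tuning updates every entry of W
    lora = r * (d + k)  # LoRA trains B (d x r) and A (r x k); W stays frozen
    return full, lora


def matvec(m: Matrix, v: Vector) -> Vector:
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(a * b for a, b in zip(row, v)) for row in m]


def lora_forward(W: Matrix, A: Matrix, B: Matrix, x: Vector, alpha: float, r: int) -> Vector:
    """h = W x + (alpha / r) * B (A x), the LoRA-adapted forward pass."""
    base = matvec(W, x)               # frozen pre-trained path
    delta = matvec(B, matvec(A, x))   # low-rank trainable path
    scale = alpha / r
    return [b + scale * d for b, d in zip(base, delta)]


full, lora = lora_param_counts(4096, 4096, 8)
print(full, lora, f"{lora / full:.2%}")  # 16777216 65536 0.39%
```

Because B is usually initialized to zeros, the adapted model starts out identical to the pre-trained one, and training only moves the small low-rank path.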

Fine-tuning Llama 3.1 is easy: Start by creating your Clarifai app and uploading the data you want to fine-tune on. Next, add a new model within your app and select the “Text-Generator” model type. Choose your uploaded data, customize the fine-tuning parameters, and train the model. You can even evaluate the model directly within the UI once training is done.

Follow this guide to fine-tune the Llama 3.1 8B Instruct model with your own data.

Published new models

Clarifai-hosted models are the ones we host within our Clarifai Cloud. Wrapped models are those hosted externally, but we deploy them on our platform using their third-party API keys.

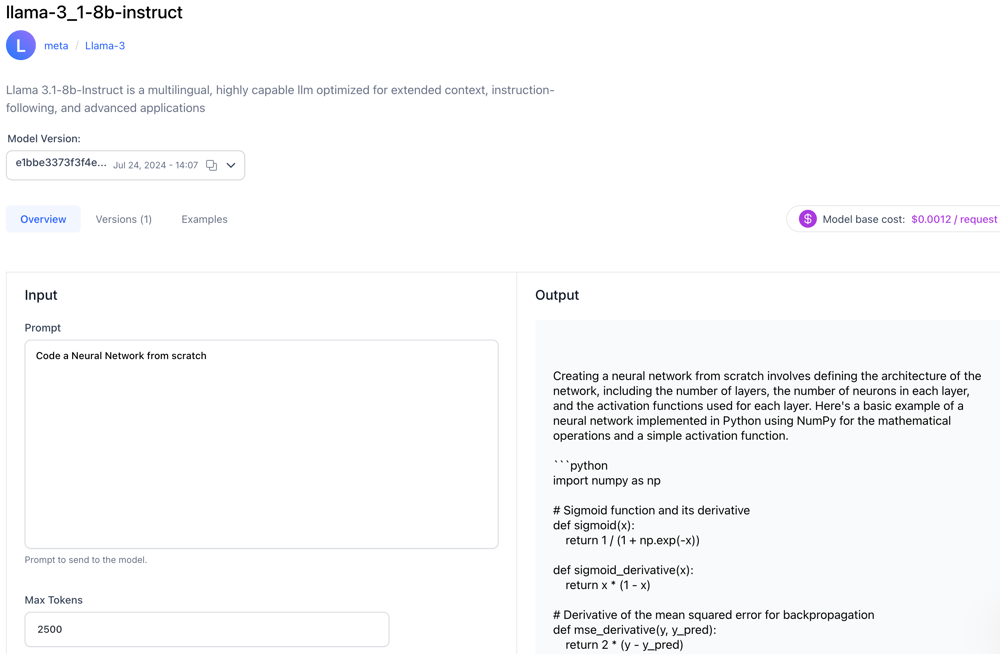

- Published Llama 3.1-8b-Instruct, a multilingual, highly capable LLM optimized for extended context, instruction-following, and advanced applications.

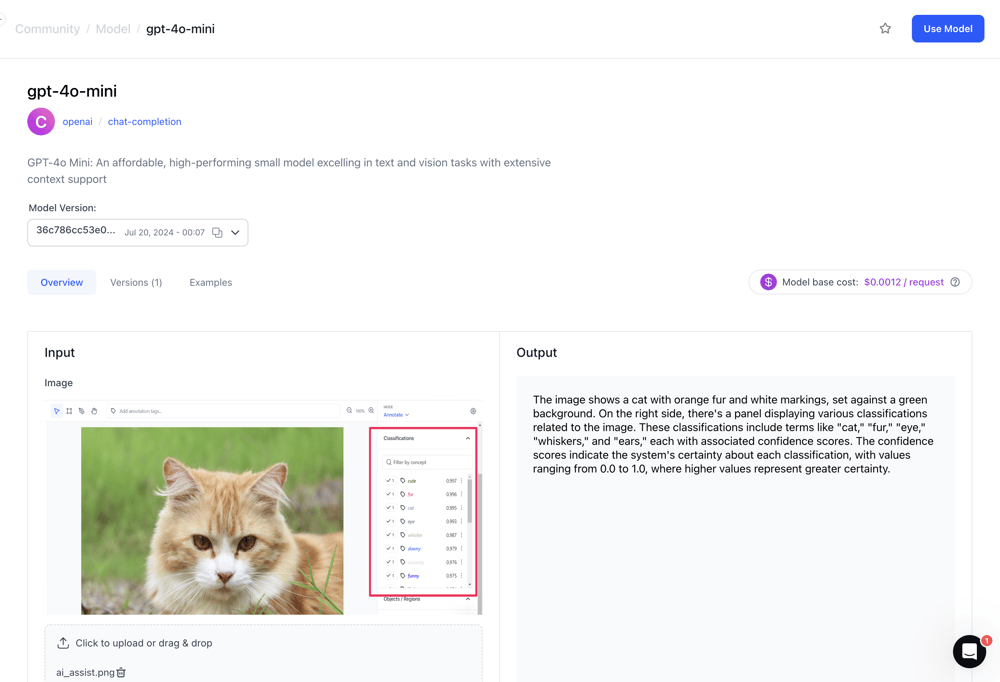

- Published GPT-4o-mini, an affordable, high-performing small model excelling in text and vision tasks with extensive context support.

- Published Qwen1.5-7B-Chat, an open-source, multilingual LLM with 32K token support, excelling in language understanding, alignment with human preferences, and competitive tool-use capabilities.

- Published Qwen2-7B-Instruct, a state-of-the-art multilingual language model with 7.07 billion parameters, excelling in language understanding, generation, coding, and mathematics, and supporting up to 128,000 tokens.

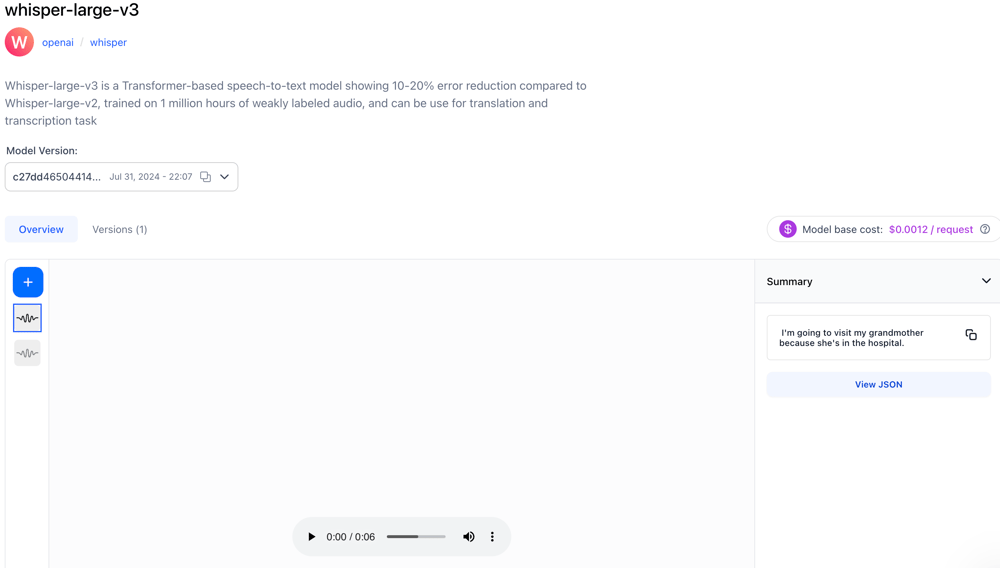

- Published Whisper-Large-v3, a Transformer-based speech-to-text model that shows a 10-20% error reduction compared to Whisper-Large-v2. It was trained on 1 million hours of weakly labeled audio and can be used for translation and transcription tasks.

- Published Llama-3-8b-Instruct-4bit, an instruction-tuned LLM optimized for dialogue use cases. It can outperform many of the available open-source chat LLMs on common industry benchmarks.

- Published Mistral-Nemo-Instruct, a state-of-the-art 12B multilingual LLM with a 128K token context length, optimized for reasoning, code generation, and global applications.

- Published Phi-3-Mini-4K-Instruct, a 3.8B parameter small language model offering state-of-the-art performance in reasoning and instruction-following tasks. It outperforms larger models thanks to its training on high-quality data.

Added patch operations – Python SDK

Patch operations have been introduced for apps, datasets, input annotations, and concepts. You can use the Python SDK to merge, remove, or overwrite your input annotations, datasets, apps, and concepts. All three actions overwrite plain values by default but have special behavior for lists of objects.

The merge action overwrites a key:value with key:new_value, or appends to an existing list of values, merging dictionaries that match by a corresponding id field.

The remove action overwrites a key:value with key:new_value, or deletes anything in a list that matches the IDs of the provided values.

The overwrite action replaces the old object with the new object.
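The three actions above can be modeled on plain dictionaries. This is a minimal sketch of the described semantics only, not the Clarifai SDK’s implementation or API; the `patch` function and the field names in the example (`input_id`, `regions`, `label`) are hypothetical.

```python
import copy
from typing import Any, Dict, List


def _merge_lists(current: List[Any], incoming: List[Any]) -> List[Any]:
    """Append new items; dicts that share an 'id' are merged field by field."""
    merged = copy.deepcopy(current)
    by_id = {item["id"]: item for item in merged if isinstance(item, dict) and "id" in item}
    for item in incoming:
        if isinstance(item, dict) and "id" in item and item["id"] in by_id:
            by_id[item["id"]].update(copy.deepcopy(item))  # same id: merge fields
        else:
            new_item = copy.deepcopy(item)
            merged.append(new_item)  # unmatched value: append
            if isinstance(new_item, dict) and "id" in new_item:
                by_id[new_item["id"]] = new_item
    return merged


def _remove_from_list(current: List[Any], incoming: List[Any]) -> List[Any]:
    """Drop every dict in `current` whose 'id' appears among the incoming values."""
    ids = {item["id"] for item in incoming if isinstance(item, dict) and "id" in item}
    return [copy.deepcopy(item) for item in current
            if not (isinstance(item, dict) and item.get("id") in ids)]


def patch(old: Dict[str, Any], new: Dict[str, Any], action: str) -> Dict[str, Any]:
    """Hypothetical model of the merge / remove / overwrite patch actions."""
    if action == "overwrite":
        return copy.deepcopy(new)  # replace the whole object
    if action not in ("merge", "remove"):
        raise ValueError(f"unknown action: {action}")
    result = copy.deepcopy(old)
    for key, value in new.items():
        current = result.get(key)
        if isinstance(value, list) and isinstance(current, list):
            if action == "merge":
                result[key] = _merge_lists(current, value)
            else:
                result[key] = _remove_from_list(current, value)
        else:
            result[key] = copy.deepcopy(value)  # plain key:value -> key:new_value
    return result


annotation = {"input_id": "img-1", "regions": [{"id": "r1", "label": "cat"}]}
print(patch(annotation, {"regions": [{"id": "r1", "label": "kitten"}, {"id": "r2", "label": "dog"}]}, "merge"))
print(patch(annotation, {"regions": [{"id": "r1"}]}, "remove"))
print(patch(annotation, {"regions": []}, "overwrite"))
```

Every call works on deep copies, so the original object is never mutated; only the returned value reflects the patch.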

Patching App

Below is an example of performing a patch operation on an app. This includes overwriting the base workflow, changing the app to an app template, and updating the app’s description, notes, default language, and image URL. Note that the ‘remove’ action is only used to remove the app’s image.

Patching Dataset

Below is an example of performing a patch operation on a dataset. Similar to the app, you can update the dataset’s description, notes, and image URL.

Patching Input Annotations

Below is an example of performing a patch operation on input annotations. We have uploaded the image object along with its bounding box annotations, and you can modify those annotations or remove them using the patch operations.

Patching Concepts

Below is an example of performing a patch operation on concepts. The only action currently supported is overwrite. You can use it to change the existing label names associated with an image.

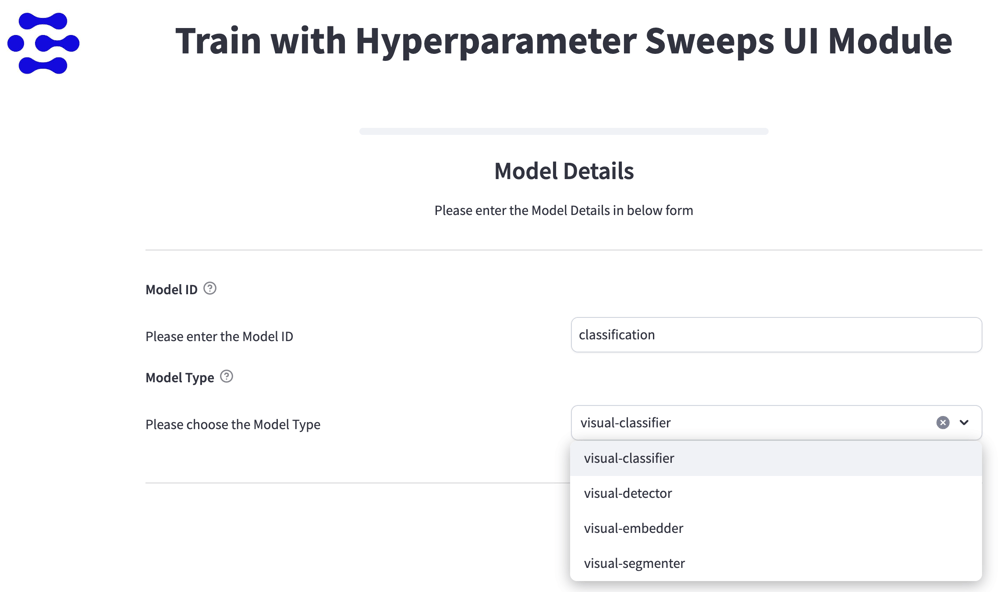

Improved the functionality of the Hyperparameter Sweeps module

Finding the right hyperparameters for training a model can be tricky, often requiring several iterations to get them just right. The Hyperparameter Sweeps module simplifies this process by letting you test different values and combinations of hyperparameters.

You can now set a range of values for each hyperparameter and decide how much to adjust them with each step. Plus, you can mix and match different hyperparameters to see what works best. This way, you can quickly discover the optimal settings for your model without constant manual adjustments.
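The range-plus-step idea describes a grid sweep: each hyperparameter expands into a list of values, and the sweep tries every combination. Below is a minimal sketch of that expansion, assuming inclusive ranges; the parameter names `learning_rate` and `batch_size` and their ranges are hypothetical, and this is not how the Platform schedules its runs.

```python
from itertools import product
from typing import Dict, List, Tuple


def value_range(start: float, stop: float, step: float) -> List[float]:
    """Inclusive sequence start, start + step, ... up to stop."""
    if step <= 0:
        raise ValueError("step must be positive")
    values = []
    n = 0
    while True:
        # Computing start + n * step (not repeated addition) and rounding keeps
        # float drift such as 0.30000000000000004 out of the grid.
        value = round(start + n * step, 10)
        if value > stop + 1e-12:
            break
        values.append(value)
        n += 1
    return values


def sweep_grid(search_space: Dict[str, Tuple[float, float, float]]) -> List[Dict[str, float]]:
    """Every combination of the per-hyperparameter value ranges."""
    names = list(search_space)
    ranges = [value_range(*search_space[name]) for name in names]
    return [dict(zip(names, combo)) for combo in product(*ranges)]


grid = sweep_grid({
    "learning_rate": (0.0001, 0.0003, 0.0001),  # 3 values
    "batch_size": (16, 32, 16),                 # 2 values
})
print(len(grid))  # 6 training runs
for config in grid:
    print(config)
```

The number of runs is the product of the range sizes, so each extra hyperparameter multiplies the cost of a sweep; a coarse step first, then a finer one around the best result, keeps that manageable.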

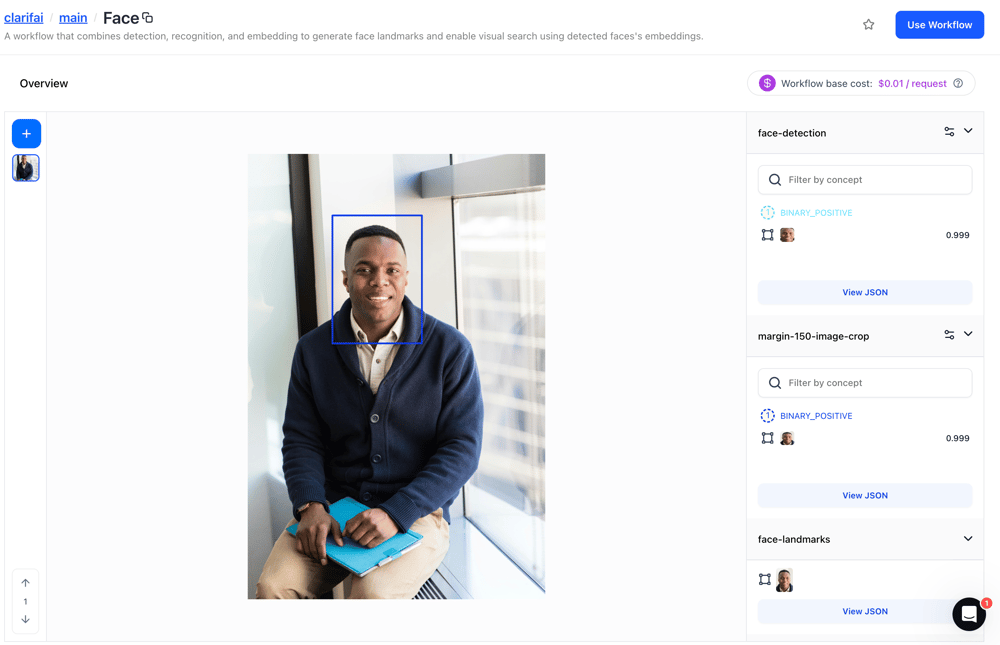

Improved the functionality of the Face workflow

Workflows allow you to combine multiple models to carry out different operations on the Platform. The face workflow combines detection, recognition, and embedding models to generate face landmarks and enable visual search using the embeddings of detected faces.

When you upload an image, the workflow first detects the face and then crops it. Next, it identifies key facial landmarks, such as the eyes and mouth. The image is then aligned using these keypoints. After alignment, it’s sent to the visual embedder model, which generates numerical vectors representing each face in the image or video. Finally, the face-clustering model uses these embeddings to group visually similar faces.
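The last step groups faces whose embeddings are close. As an illustration only, here is a simple stand-in for that step: threshold-based "leader" clustering with cosine similarity. It is not the algorithm behind Clarifai’s face-clustering model; the 0.8 threshold and the toy three-dimensional embeddings are assumptions (real embedder outputs are much longer vectors).

```python
import math
from typing import Dict, List, Sequence


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between two non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def cluster_faces(embeddings: Dict[str, Sequence[float]], threshold: float = 0.8) -> List[List[str]]:
    """Assign each face to the first cluster whose leader is similar enough."""
    leaders: List[Sequence[float]] = []  # first embedding seen in each cluster
    clusters: List[List[str]] = []
    for face_id, vector in embeddings.items():
        for i, leader in enumerate(leaders):
            if cosine_similarity(vector, leader) >= threshold:
                clusters[i].append(face_id)
                break
        else:
            # No existing cluster is close enough: this face starts a new one.
            leaders.append(vector)
            clusters.append([face_id])
    return clusters


faces = {
    "face_a": [1.0, 0.0, 0.0],
    "face_b": [0.9, 0.1, 0.0],  # nearly the same direction as face_a
    "face_c": [0.0, 1.0, 0.0],  # orthogonal to both
}
print(cluster_faces(faces))  # [['face_a', 'face_b'], ['face_c']]
```

Leader clustering is order-dependent and a single pass; production systems typically use more robust methods, but the sketch shows why embedding quality (and therefore the alignment step before it) drives how well faces group.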

Organization Settings and Management

- Implemented restrictions on the ability to add new organizations based on the user’s current organization count and feature access.

- If a user has created one organization and doesn’t have access to the multiple organizations feature, the “Add an organization” button is now disabled, and we display an appropriate tooltip.

- If a user has access to the multiple organizations feature but has reached the maximum creation limit of 20 organizations, the “Add an organization” button is disabled, and we display an appropriate tooltip.
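The two rules above reduce to a small decision function. This is a hypothetical sketch of that logic, not the Platform’s UI code; the function name and tooltip wording are invented for illustration, and only the limit of 20 comes from the release notes.

```python
from typing import Optional, Tuple

MAX_ORGANIZATIONS = 20  # creation limit stated in the release notes


def add_org_button_state(org_count: int, has_multi_org_access: bool) -> Tuple[bool, Optional[str]]:
    """Return (button_enabled, tooltip) for a hypothetical "Add an organization" button."""
    if not has_multi_org_access and org_count >= 1:
        # Without the multiple organizations feature, one organization is the cap.
        return False, "Creating multiple organizations is not available on your plan."
    if has_multi_org_access and org_count >= MAX_ORGANIZATIONS:
        return False, f"You have reached the limit of {MAX_ORGANIZATIONS} organizations."
    return True, None


print(add_org_button_state(1, has_multi_org_access=False))
print(add_org_button_state(19, has_multi_org_access=True))
```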

Additional changes

- Enabled the RAG SDK to use environment variables for enhanced security, flexibility, and simpler configuration management.

- Enabled deletion of associated model assets when removing a model annotation: Now, when you delete a model annotation, the associated model assets are also marked as deleted.

- Fixed issues with Python and Node.js SDK code snippets: If you click the “Use Model” button on an individual model’s page, the “Call by API / Use in a Workflow” modal appears. You can then integrate the displayed code snippets, available in various programming languages, into your own use case.

Previously, the Python and Node.js SDK code snippets for image-to-text models incorrectly output concepts instead of the expected text. We fixed the issue, and the output is now correctly provided as text.
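The environment-variable support in the RAG SDK means secrets no longer need to live in source code. Here is a minimal sketch of the pattern using only the standard library; the variable name `CLARIFAI_PAT` (a personal access token) and the helper `get_required_env` are assumptions for illustration, not the RAG SDK’s own API.

```python
import os


def get_required_env(name: str = "CLARIFAI_PAT") -> str:
    """Read a required setting from the environment, failing loudly if it is missing.

    The default variable name is an assumption made for this sketch.
    """
    value = os.environ.get(name)
    if not value:
        raise RuntimeError(f"Environment variable {name} is not set; export it before running.")
    return value


# Example: export CLARIFAI_PAT=... in your shell, then:
# token = get_required_env()
```

Failing early with a clear message is usually preferable to passing an empty token along and getting an authentication error later.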

Ready to start building?

Fine-tuning LLMs lets you tailor a pre-trained large language model to your organization’s unique needs and objectives. With our platform’s no-code experience, you can fine-tune LLMs effortlessly.

Explore our Quickstart tutorial for step-by-step guidance on fine-tuning Llama 3.1. Sign up here to get started!

Thanks for reading, see you next time 👋!